Python crawler: simulating browser actions with selenium + phantomjs, parsing pages with BeautifulSoup, and downloading files with requests

Source: Internet. Editor: 程序博客网. Time: 2024/05/18 03:10

Installing phantomjs (see http://www.cnblogs.com/yestreenstars/p/5511212.html)

    # Install dependencies
    yum -y install wget fontconfig
    # Download PhantomJS
    wget -P /tmp/ https://bitbucket.org/ariya/phantomjs/downloads/phantomjs-2.1.1-linux-i686.tar.bz2
    # Extract
    tar xjf /tmp/phantomjs-2.1.1-linux-i686.tar.bz2 -C /usr/local/
    # Rename
    mv /usr/local/phantomjs-2.1.1-linux-i686 /usr/local/phantomjs
    # Create a symlink
    ln -s /usr/local/phantomjs/bin/phantomjs /usr/bin/

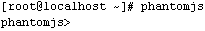

测试:

直接执行phantomjs命令,出现下图则表示成功:

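To install selenium, follow the tutorial on the official site; in some cases a browser driver has to be installed as well.

The target page this time is asynchronous: the part of interest is generated by JS, and the JS also handles pagination, so the content of every page has to be crawled.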

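selenium + phantomjs is used to simulate browser actions; phantomjs is a browser without a window.

Note that the webdriver has to be shut down once the work is done, otherwise its process stays alive. The method for this is quit(); see the code for how it is used.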
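One way to make sure quit() runs even when a click or an element lookup raises is to wrap the driver in a context manager. This is only a minimal sketch: `managed_browser` and `make_driver` are hypothetical names introduced here for illustration, not part of selenium.

```python
from contextlib import contextmanager


@contextmanager
def managed_browser(make_driver):
    """Create a driver with make_driver() and always call quit() on exit.

    make_driver is a hypothetical zero-argument callable, for example
    lambda: webdriver.PhantomJS(executable_path="/usr/local/phantomjs/bin/phantomjs")
    """
    browser = make_driver()
    try:
        yield browser
    finally:
        # Runs on normal exit and on exceptions, so no process is left behind
        browser.quit()
```

Inside a `with managed_browser(...) as browser:` block the driver is used as usual, and quit() is called when the block ends for any reason.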

The code is as follows:

    # -*- coding: UTF-8 -*-
    import re
    import time

    from bs4 import BeautifulSoup
    from selenium import webdriver


    def get_source(page_num):
        urls = []
        # Load the browser driver
        browser = webdriver.PhantomJS(executable_path="/usr/local/phantomjs/bin/phantomjs")
        # Maximize the window
        browser.maximize_window()
        # Open the target URL
        browser.get('------------url------------')
        # Find an element and click it (the link text '年报' means "annual report")
        browser.find_element_by_xpath("//a[contains(text(),'年报') and @data-toggle='tab']").click()
        # Wait 5 seconds
        time.sleep(5)
        # Hand the page source to BeautifulSoup for parsing
        soup = BeautifulSoup(browser.page_source, "html.parser")
        urls.extend(parser(soup))
        pre = "//a[@class='classStr' and @id='idStr' and @page='"
        suf = "' and @name='nameStr' and @href='javascript:;']"
        # Click through pages 2 .. page_num - 1
        for i in range(2, page_num):
            browser.find_element_by_xpath(pre + str(i) + suf).click()
            time.sleep(10)
            soup = BeautifulSoup(browser.page_source, "html.parser")
            urls.extend(parser(soup))
        # Quit the driver, otherwise the phantomjs process stays alive
        browser.quit()
        return urls


    def parser(soup):
        pdf_path_list = []
        domain = "------url---------"
        # Use a regex to find every tag whose 'href' attribute contains 'pdf'
        for tag in soup.find_all(href=re.compile("pdf")):
            hre = tag.get('href')
            if hre.find("main_cn") < 0:
                pdf_path = domain + hre
                pdf_path_list.append(pdf_path)
                print(pdf_path)
        return pdf_path_list


    def main():
        urls = get_source(96)
        # Save one URL per line
        with open('pdf_url.txt', 'w') as f:
            for url in urls:
                f.write(url + '\n')


    main()

For locating elements with selenium, see http://www.cnblogs.com/yufeihlf/p/5717291.html#test2 and http://www.cnblogs.com/yufeihlf/p/5764807.html
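The script pauses with fixed time.sleep(5) and time.sleep(10) calls after each click, which wastes time when the page is fast and may be too short when it is slow. A polling helper is one possible alternative. The sketch below is plain Python, and `wait_until` is a hypothetical name, not a selenium function; selenium also ships its own explicit-wait helper, WebDriverWait, which is not shown here.

```python
import time


def wait_until(condition, timeout=10.0, interval=0.5):
    """Call condition() until it returns a truthy value or timeout seconds pass.

    Returns the truthy value, or None if the timeout is reached first.
    """
    deadline = time.time() + timeout
    while True:
        result = condition()
        if result:
            return result
        if time.time() >= deadline:
            return None
        time.sleep(interval)


# Hypothetical usage with a driver named browser:
# wait_until(lambda: "pdf" in browser.page_source, timeout=10)
```

The condition is whatever signals that the JS has finished rendering; the substring check in the usage comment is only an assumption about the target page.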

For parsing pages with BeautifulSoup, see http://cuiqingcai.com/1319.html
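The filtering that parser() applies to each href (keep links containing "pdf", skip those containing "main_cn", prefix the domain) can also be written as a small pure function that is easy to check without a browser. This is a sketch under assumptions: `build_pdf_urls` is a hypothetical name, `http://example.com` stands in for the elided domain, and `urljoin` is swapped in for the plain string concatenation used in the original. Dropping duplicates is an extra step the original does not perform.

```python
try:
    from urllib.parse import urljoin  # Python 3
except ImportError:
    from urlparse import urljoin  # Python 2


def build_pdf_urls(hrefs, domain, exclude="main_cn"):
    """Return absolute URLs for hrefs that mention 'pdf' but not `exclude`.

    Duplicates are dropped while the original order is preserved.
    """
    seen = set()
    urls = []
    for href in hrefs:
        if "pdf" not in href or exclude in href:
            continue
        url = urljoin(domain, href)
        if url not in seen:
            seen.add(url)
            urls.append(url)
    return urls


# Hypothetical usage with the soup object from the crawler:
# hrefs = [tag.get('href') for tag in soup.find_all(href=True)]
# urls = build_pdf_urls(hrefs, "http://example.com")
```

Note that `urljoin` resolves an href starting with "/" against the site root, which differs from concatenation when the domain string itself carries a path.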

The code above produces the URLs of the PDFs to download. They are then downloaded with requests, using the following code:

    import os
    import time

    import requests


    def download_file(url):
        # Use the last path segment of the URL as the local file name
        local_filename = "./pdfs/" + url[url.rfind('/') + 1:]
        print(local_filename)
        # NOTE the stream=True parameter
        r = requests.get(url, stream=True)
        if r.status_code == 200:
            with open(local_filename, 'wb') as f:
                for chunk in r.iter_content(chunk_size=1024):
                    if chunk:  # filter out keep-alive new chunks
                        f.write(chunk)
                        f.flush()
            print(url + " has been downloaded")
        else:
            print("r.status_code = " + str(r.status_code) + ", download failed")


    # Make sure the output directory exists
    if not os.path.isdir("./pdfs"):
        os.makedirs("./pdfs")

    with open("pdf_url.txt", "r") as f:
        for line in f:
            url = line.strip()
            if url:
                download_file(url)
                # Pause between downloads
                time.sleep(10)
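download_file takes everything after the last "/" as the local file name, so a URL with a query string would carry the query into the name. A slightly more defensive variant parses the URL first. This is a sketch only: `local_path_for` is a hypothetical helper, and the example URL is made up.

```python
import os
import posixpath

try:
    from urllib.parse import urlparse  # Python 3
except ImportError:
    from urlparse import urlparse  # Python 2


def local_path_for(url, directory="./pdfs"):
    """Map a download URL to a local path, ignoring query string and fragment."""
    name = posixpath.basename(urlparse(url).path)
    if not name:
        raise ValueError("URL has no file name: " + url)
    return os.path.join(directory, name)


# Hypothetical usage:
# local_path_for("http://example.com/files/report.pdf?v=2")
```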
