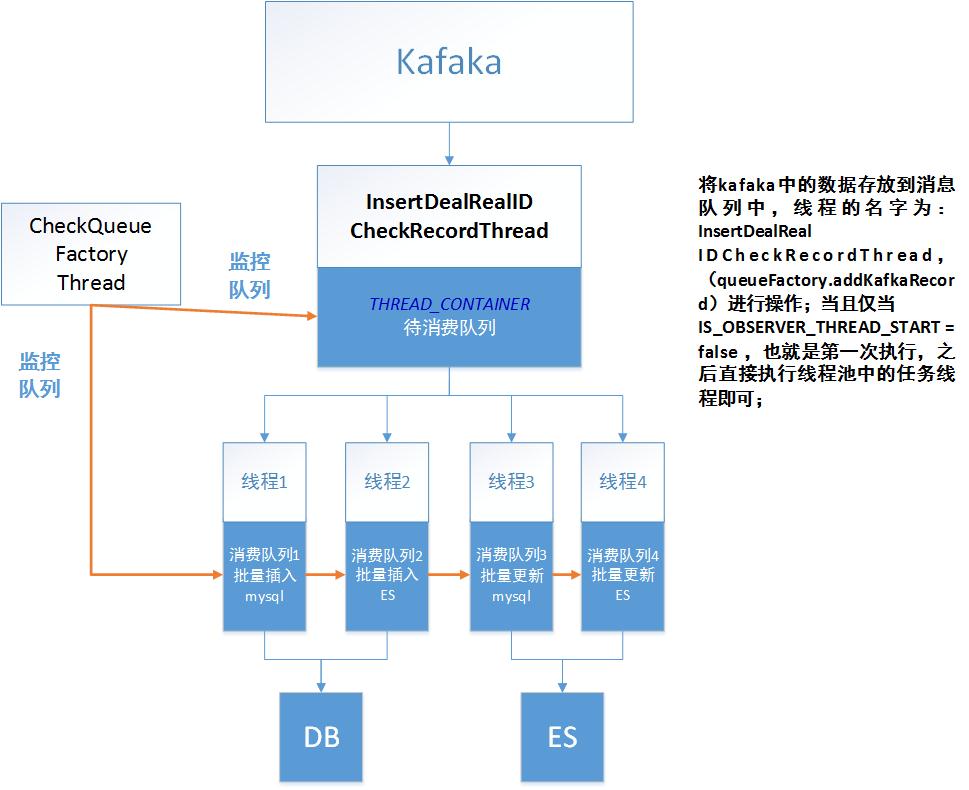

Multi-threaded consumption of interface data from a queue, with Kafka as the data source

Source: Internet | Editor: 程序博客网 | Date: 2024/05/18 00:59

1 Consumer class that executes the messages

```java
package com.aspire.ca.prnp.service.impl;

import java.util.concurrent.atomic.AtomicLong;

import org.apache.kafka.clients.consumer.ConsumerRecord;
import org.slf4j.Logger;
import org.slf4j.LoggerFactory;

import com.aspire.ca.prnp.db.service.impl.Constants;
import com.aspire.ca.prnp.db.service.impl.InsertDealRealIDCheckRecordThread;
import com.aspire.ca.prnp.db.service.impl.QueueFactory;
import com.aspire.ca.prnp.db.service.impl.ThreadFactory;
import com.aspire.ca.prnp.domain.express.status.RealIDCheckRecord;
import com.aspire.ca.prnp.kafka.AbstractPrnpConsumer;
import com.aspire.ca.prnp.service.PrnpKernelServer;
import com.aspire.prnp.util.JsonUtil;

public class PostalStatusConsumer extends AbstractPrnpConsumer {

    private static final Logger logger = LoggerFactory.getLogger(PostalStatusConsumer.class);

    private QueueFactory queueFactory;

    // record is the consumed Kafka message; it is handed over to InsertDealRealIDCheckRecordThread
    @Override
    public Boolean execute(ConsumerRecord<String, String> record) throws Exception {
        if (Constants.KAFKA_CONSUM_MESSAGE_COUNT.get() == 0) {
            // A new counting window starts: remember its start time
            Constants.LAST_START_COUNT_TIME = new AtomicLong(System.currentTimeMillis());
        }

        logger.info("Consumer executing message offset = {}, key = {}, value = {}",
                record.offset(), record.key(), record.value());

        // Convert the JSON value of ConsumerRecord<String, String> into the entity class
        RealIDCheckRecord realIDCheckRecord = JsonUtil.jsonToBean(record.value(), RealIDCheckRecord.class);

        // Obtain the queue factory bean from the application context
        queueFactory = (QueueFactory) PrnpKernelServer.getCtx().getBean("queueFactory");

        // Put the record into the consumption queue of InsertDealRealIDCheckRecordThread
        // and open the monitoring thread (positions 6 and 2 in the diagram)
        queueFactory.addKafkaRecord(InsertDealRealIDCheckRecordThread.class, realIDCheckRecord);

        // Add InsertDealRealIDCheckRecordThread workers and run them in the thread pool (position 4);
        // 20 is passed to the project's ThreadFactory, whose source is not shown
        ThreadFactory.getInstance().exec(InsertDealRealIDCheckRecordThread.class, 20);

        int count = Constants.KAFKA_CONSUM_MESSAGE_COUNT.get();
        // Written as MAX_VALUE - 5 so the check itself cannot overflow
        if (count >= Integer.MAX_VALUE - 5) {
            // Counter is about to overflow: log this window's statistics, then reset it
            long costMillis = System.currentTimeMillis() - Constants.LAST_START_COUNT_TIME.get();
            if (costMillis != 0) {
                // costMillis is in milliseconds, so multiply by 1000 to get messages per second
                logger.info(">>KafkaConsumSpeedMonitor: consumed {} messages in this counting window, elapsed {} s, rate {} msg/s",
                        count, costMillis / 1000, count * 1000L / costMillis);
            }
            Constants.KAFKA_CONSUM_MESSAGE_COUNT.set(0);
        } else {
            long costSeconds = (System.currentTimeMillis() - Constants.LAST_START_COUNT_TIME.get()) / 1000;
            // Log the running throughput every 1000 messages
            if (count % 1000 == 0 && costSeconds != 0) {
                logger.info(">>KafkaConsumSpeedMonitor: consumed {} messages since startup, rate {} msg/s",
                        count, count / costSeconds);
            }
        }

        Constants.KAFKA_CONSUM_MESSAGE_COUNT.incrementAndGet();
        return true;
    }
}
```
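The post does not show `QueueFactory`, `ThreadFactory` or `InsertDealRealIDCheckRecordThread`, so the hand-off from the Kafka consumer thread to the worker threads is not visible. Below is a minimal sketch of one common way to build that hand-off with the JDK alone, assuming a bounded `LinkedBlockingQueue` drained by a fixed-size `ExecutorService`. The class `QueueHandOffSketch`, its methods and the poison-pill shutdown are hypothetical choices for illustration, not the project's actual API; `toUpperCase()` stands in for the real database insert, and `ratePerSecond` shows the millisecond-to-second conversion the speed monitor needs.

```java
import java.util.List;
import java.util.concurrent.BlockingQueue;
import java.util.concurrent.ConcurrentLinkedQueue;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.LinkedBlockingQueue;
import java.util.concurrent.TimeUnit;

// Hypothetical stand-in for QueueFactory + ThreadFactory: one producer (the Kafka
// consumer thread) puts records into a bounded queue, a fixed pool of workers drains it.
public class QueueHandOffSketch {

    // Sentinel value that tells a worker to exit (poison pill)
    private static final String POISON_PILL = "__STOP__";

    private final BlockingQueue<String> queue;
    private final ExecutorService workers;
    private final int workerCount;
    private final ConcurrentLinkedQueue<String> processed = new ConcurrentLinkedQueue<>();

    public QueueHandOffSketch(int capacity, int workerCount) {
        this.queue = new LinkedBlockingQueue<>(capacity);
        this.workerCount = workerCount;
        this.workers = Executors.newFixedThreadPool(workerCount);
        for (int i = 0; i < workerCount; i++) {
            workers.execute(this::drain);
        }
    }

    // Called by the consumer thread for each record; blocks while the queue is full
    public void addRecord(String value) {
        try {
            queue.put(value);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException("interrupted while queueing record", e);
        }
    }

    // Worker loop: take records until a poison pill arrives
    private void drain() {
        try {
            while (true) {
                String value = queue.take();
                if (POISON_PILL.equals(value)) {
                    return;
                }
                processed.add(value.toUpperCase()); // placeholder for the real DB insert
            }
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
    }

    // Queues one pill per worker behind all pending records, then waits for the pool to finish
    public List<String> shutdown() {
        for (int i = 0; i < workerCount; i++) {
            addRecord(POISON_PILL);
        }
        workers.shutdown();
        try {
            workers.awaitTermination(10, TimeUnit.SECONDS);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
        }
        return List.copyOf(processed);
    }

    // Messages per second, given an elapsed time in milliseconds
    public static long ratePerSecond(long count, long elapsedMillis) {
        return elapsedMillis == 0 ? 0 : count * 1000L / elapsedMillis;
    }

    public static void main(String[] args) {
        QueueHandOffSketch sketch = new QueueHandOffSketch(100, 4);
        for (int i = 0; i < 10; i++) {
            sketch.addRecord("record-" + i);
        }
        List<String> results = sketch.shutdown();
        System.out.println(results.size() + " records processed");
        System.out.println(ratePerSecond(5000, 2500) + " msg/s");
    }
}
```

Because `addRecord` blocks when the queue is full, a slow database slows the consumer thread down instead of letting the queue grow without bound. If Kafka offsets are auto-committed, records still waiting in the queue can be lost when the process crashes, so the commit strategy matters with this kind of asynchronous hand-off.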
