Life Without DevStack: OpenStack Development With OSA


Reposted from: https://developer.rackspace.com/blog/life-without-devstack-openstack-development-with-osa/

If you are an OpenStack contributor, you likely rely on DevStack for most of your work. DevStack is, and has been for a long time, the de facto platform that contributors use for development, testing, and reviews. In this article, I want to introduce you to a project I contribute to, called openstack-ansible. For the last few months, I have been using this project as an alternative to DevStack for OpenStack upstream development, and the experience has been very positive.

What’s Wrong with DevStack?

Before I delve into openstack-ansible, I want to briefly discuss the reasons that motivated me to look for an alternative to DevStack. Overall, DevStack is a well rounded project, but there are a couple of architectural decisions that bother me.

First of all, DevStack comes with a monolithic installer. To perform an install, you run stack.sh and that installs all the modules you configured. If you later want to add or remove modules, the only option is to run unstack.sh to uninstall everything, and then re-run stack.sh with the updated configuration. A few times, when I made source code changes to a module, I inadvertently caused the module to operate in an erratic way. If I'm in that situation, the safest option is to reinstall it, and the only way to do that with DevStack is to reinstall everything.

DevStack performs a development installation of all the modules, which creates an environment that is very different from production deployments. In my opinion, a proper development environment would have the module I'm working on installed for development, with everything else installed for production. This is not possible to do with DevStack.

Another problem I used to have with DevStack is the constant fight to keep dependencies in a consistent state. In DevStack, dependencies are shared among all the modules, so a simple action of syncing the dependencies for one module may cause a chain reaction that requires updating several other modules. To some extent, this can be alleviated in recent DevStack releases with the use of per-module virtual environments, but, even with that, OS level packages remain shared.

What is openstack-ansible (OSA)?

The openstack-ansible project is a Rackspace open source initiative that uses the power of Ansible to deploy OpenStack. You may have heard of this project with the name os-ansible-deployment on StackForge before it moved to the OpenStack big tent.

Like DevStack, openstack-ansible deploys all the OpenStack services directly from their git repositories, without any vendor patches or add-ons. But the big difference is that openstack-ansible deploys the OpenStack services in LXC containers, so there is complete OS level and Python package isolation between the services hosted on a node.

Another difference between DevStack and openstack-ansible is that the latter is a production distribution. With it, you can deploy enterprise-scale private clouds that range from a handful of nodes to large clusters with hundreds or even thousands of nodes.

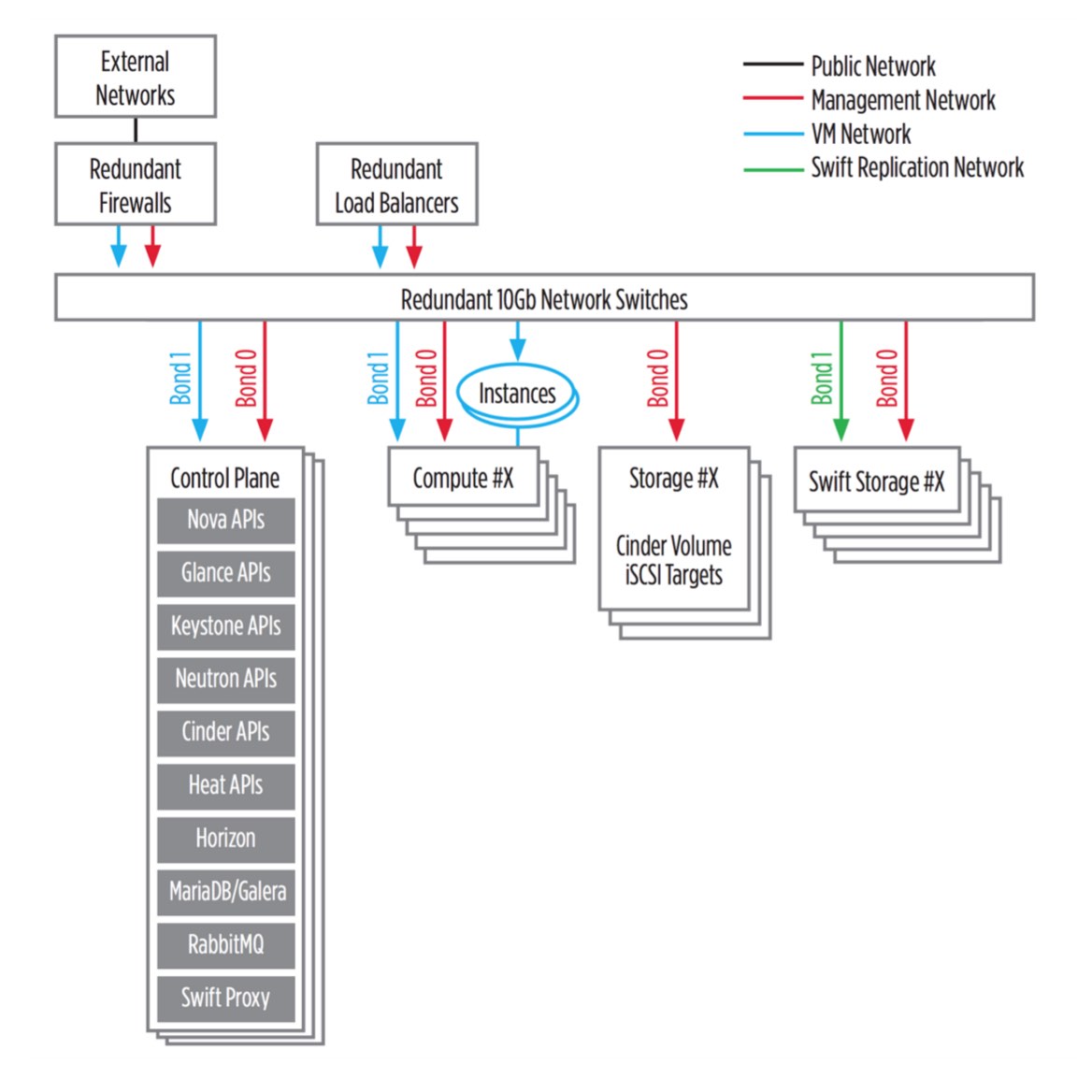

The following diagram shows the structure of an openstack-ansible private cloud:

After looking at this, you are probably scratching your head thinking how can this project match the simplicity of DevStack for upstream development, given that it is clearly oriented to multi-node private clouds. Do not despair! I cover this in the next section.

OSA All-In-One

The openstack-ansible project contributors use a single-node deployment for day-to-day work and for gating, because that is much more convenient and resource efficient. With this method of deployment it is possible to stand up OSA on a cloud server or virtual machine, so taking advantage of this feature makes this project comparable to DevStack.

Unfortunately, the host requirements for a single-node deployment of OSA are higher than those of DevStack, mainly due to the overhead introduced by the container infrastructure. For a usable installation, the host must have 16GB of RAM and 80GB of disk space. At this time, the only host OS that is supported is Ubuntu 14.04.
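Before starting an install, it can be handy to check a candidate host against these numbers. The following is a minimal sketch, not part of OSA: the function names are hypothetical, "GB" is assumed to mean 10**9 bytes, and the disk figure is assumed to refer to the total size of the filesystem rather than free space.

```python
import os
import shutil

# Thresholds taken from the text; treating "GB" as 10**9 bytes is an assumption.
MIN_RAM_BYTES = 16 * 10**9
MIN_DISK_BYTES = 80 * 10**9


def check_host(ram_bytes, disk_bytes):
    """Return a list of problems; an empty list means the host qualifies."""
    problems = []
    if ram_bytes < MIN_RAM_BYTES:
        problems.append(f"RAM: {ram_bytes / 10**9:.1f} GB found, 16 GB needed")
    if disk_bytes < MIN_DISK_BYTES:
        problems.append(f"disk: {disk_bytes / 10**9:.1f} GB found, 80 GB needed")
    return problems


def probe_host(path="/"):
    """Read total RAM and the total size of the filesystem at `path` (Linux)."""
    ram = os.sysconf("SC_PAGE_SIZE") * os.sysconf("SC_PHYS_PAGES")
    disk = shutil.disk_usage(path).total
    return ram, disk
```

Calling check_host(*probe_host()) on the target server returns an empty list when both thresholds are met.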

One nice advantage that OSA has over DevStack is that, thanks to the containerized architecture, it can deploy redundant services even on a single-node install. On a default single-node deployment, galera, rabbitmq, and keystone are deployed with redundancy, and HAProxy is installed on the host to do the load balancing.

Are you ready to get your hands dirty? If you have access to a fresh Ubuntu 14.04 server that meets the specs mentioned above, you can clone the OSA repository with the following commands:

You do not need to clone the project to this directory. Instead, you can clone it anywhere you like.

Next, generate a single-node configuration file. Luckily, the project comes with a script that does that for you:

After the above command runs, the directory /etc/openstack_deploy will be populated with several configuration files in YAML format. Of particular interest is the file user_secrets.yml, which contains all the passwords that will be used in your installation. These passwords are randomly generated, so they are hard to remember. I typically edit the admin password, because I use it a lot. Basically I change this:

to this:

The remaining passwords are not that useful, so I leave them alone. However, you can change any password you think you may need to use to something that is easy to remember.
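If you rebuild your environment often, the password edit described above can also be scripted. This is a minimal sketch under the assumption that user_secrets.yml consists of flat "key: value" lines; the function name and the sample values are hypothetical, and only the keystone_auth_admin_password key comes from the actual file.

```python
import re


def set_secret(yaml_text, key, value):
    """Return yaml_text with the first top-level `key: ...` line set to `value`.

    Assumes flat `key: value` lines (an assumption about the file layout).
    Raises KeyError if the key is not present.
    """
    pattern = re.compile(rf"^{re.escape(key)}:.*$", flags=re.MULTILINE)
    # A function is used as the replacement so that backslashes in `value`
    # are not interpreted as regex escapes.
    new_text, count = pattern.subn(lambda match: f"{key}: {value}", yaml_text, count=1)
    if count == 0:
        raise KeyError(key)
    return new_text


# Made-up sample content, for illustration only.
sample = "keystone_auth_admin_password: 3f9c0d1e\nother_secret: abc\n"
print(set_secret(sample, "keystone_auth_admin_password", "my-easy-password"))
```

To apply it for real, read /etc/openstack_deploy/user_secrets.yml, pass its contents through set_secret, and write the result back before running the playbooks.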

Next, run another script that installs Ansible in the host, plus a few Ansible extras and wrapper scripts that simplify its use:

At this point, the host is ready to receive the OSA install, so run the Ansible playbooks that carry out the installation:

On a Rackspace Public Cloud server, a full run through all the playbooks takes about 45 minutes to complete. One nice aspect of working with Ansible is that all the tasks are idempotent, meaning that the playbooks can be executed multiple times without causing any problem. If a task has already been performed, running it a second time does nothing. This is actually very useful, as it makes it possible to re-configure a live system simply by changing the appropriate configuration files and re-running the playbooks, without the need to tear everything down first.
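To make the idea of idempotency concrete, here is a small illustration in Python. It is not how Ansible implements its tasks; ensure_line and the file name are hypothetical and only demonstrate the pattern of checking the current state before changing anything.

```python
from pathlib import Path


def ensure_line(path, line):
    """Make sure `line` is present in the file at `path`.

    Returns True if the file was changed and False if the line was already
    there, so repeating the call is a no-op.
    """
    path = Path(path)
    existing = path.read_text().splitlines() if path.exists() else []
    if line in existing:
        return False
    existing.append(line)
    path.write_text("\n".join(existing) + "\n")
    return True
```

The first call on a fresh file reports a change, and every later call with the same arguments reports none, which mirrors what happens when a playbook is re-run against a host that is already in the desired state.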

A Quick Tour of openstack-ansible

In this section, I want to give you an overview of the OSA single-node structure, so that those of you who were brave enough to stand one up know where everything is.

First of all, the Horizon dashboard is deployed and should be accessible on the public IP address of the host. You can use the admin account, with the password that you entered in the /etc/openstack_deploy/user_secrets.yml file. If you did not edit this file, open it and locate the keystone_auth_admin_password setting to find out what password was used.

As I mentioned above, the servers are all installed in LXC containers. The following command shows you the list of containers:

By going through this list, you can see what services were deployed. You can get inside a container using the lxc-attach command. A particularly interesting container is the one with utility in its name, at the bottom of the list. To enter this container, use the following command:

The utility container is useful because it has all the OpenStack command line clients installed, plus a ready to go openrc file for the admin account. In the following example session, I use the openstack utility to query the list of users in my deployment:

To exit the container and return to the host, type exit or hit Ctrl-D.
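When the list of containers is long, picking the right name by eye gets tedious. The sketch below filters a list of names by keyword and builds the matching lxc-attach invocation. The container names are made up for illustration, and both helper functions are hypothetical; the real names come from the container listing on your host.

```python
def find_containers(names, keyword):
    """Return the container names that contain `keyword`, in original order."""
    return [name for name in names if keyword in name]


def attach_command(name):
    """Build the argument list for attaching to the container `name`."""
    return ["lxc-attach", "-n", name]


# Hypothetical names, for illustration only.
containers = [
    "aio1_heat_engine_container-1a2b3c4d",
    "aio1_keystone_container-5e6f7a8b",
    "aio1_utility_container-9c0d1e2f",
]
for name in find_containers(containers, "utility"):
    print(" ".join(attach_command(name)))
```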

Here is another nice feature of Ansible: it allows you to reinstall a single service, for example one that was accidentally corrupted during development. To do this, simply destroy the affected container:

After the sick container is destroyed, running the playbooks one more time will cause a fresh one to be made as a replacement, in a fraction of the time it would take to install everything from scratch.
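Since destroying a container is irreversible, a small wrapper that shows the commands before running them can prevent accidents. This sketch assumes the container has to be stopped before it can be destroyed and that the script runs as root on the host; destroy_container is a hypothetical helper, not something shipped with OSA.

```python
import subprocess


def destroy_container(name, dry_run=True):
    """Stop and destroy the LXC container `name`.

    With dry_run=True (the default) nothing is executed; the commands are
    only returned so that they can be reviewed first.
    """
    commands = [
        ["lxc-stop", "-n", name],
        ["lxc-destroy", "-n", name],
    ]
    if not dry_run:
        for command in commands:
            # check=True aborts on the first command that fails.
            subprocess.run(command, check=True)
    return commands
```

Call it once with the default dry_run=True to review the commands, then again with dry_run=False to actually remove the container.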

Development Workflow

You surely want to know how I use an OSA all-in-one deployment as a replacement for DevStack, in practical terms. The process involves a few simple steps:

Deploy OSA-AIO

Obviously, everything starts with deploying a single-node openstack-ansible cloud. I normally use a Rackspace Public Cloud server as my host, but you can use any Ubuntu 14.04 host with the specs I listed above.

Attach to the target container

I then go inside the container that runs the service I want to work on, using the lxc-attach command I showed above. If I'm going to work on a service that was deployed with redundancy, I first edit the HAProxy configuration to leave only one container active. The remaining containers can be used as backups if something goes wrong with the selected one.

Stop the target service

The container is running a production version of the service I intend to work on. Because this service is of no use to me, I stop it by using the standard service <name> stop command. For example, if I'm in the heat-engine container, I would run service heat-engine stop.

Clone development version

Now I have a container that is prepared to run the target service, so I can clone the actual code I will be working with. For this, I might use the official git repository, my fork with custom changes, or maybe a patch from Gerrit, if I'm doing a review.

Update dependencies

The development version might require a different set of dependencies than the version that was installed by the Ansible playbooks, so, for safety, I run pip install -r requirements.txt in the container. Since OSA creates its own private Python package repository, a required version of a package might not be available in it. When that happens, I set no-index = False in the container's /root/.pip/pip.conf file to enable access to PyPI, and then try again.

Sync database

Another possible difference between the original version installed with OSA and my development version is in database migrations, so I always sync the database, just in case. The command to do this varies slightly between services, but it usually requires invoking the management script with the db_sync option. For example, when working with Keystone, the command to sync the database is keystone-manage db_sync.

Make changes to the original config files, if necessary

The playbooks create configuration files for all the services, so, in most cases, the configuration that was left in the /etc directory by the installer can be used without changes for development. If I need to make any custom changes related to my work, I make them manually with a text editor.

Run manually, or install and run as a service

Finally, the development version can be started. To do that easily, just run the Python application directly. For example, if I'm working on the heat-engine service, I can run bin/heat-engine from the root directory of the project to start the service in the foreground, with logs going out to the terminal. To stop the service, I can hit Ctrl-C, just like it's done in DevStack.

Terminal friendly debuggers, such as pdb (command line) or pudb (interactive), can be installed inside containers and work great. Remote debugging over ssh from the host to the container is also possible, if you prefer to use more complex debuggers such as PyCharm.

For most services, running them manually is enough to work as comfortably as with DevStack. The only exception is for services that do not run Python scripts directly, such as Keystone, which normally runs under Apache. Even though Apache is used in production, for development it is perfectly safe to run the Python application directly, which will likely run an eventlet based web server. If for any reason using Apache is desired, then the alternative is to install the development version by running python setup.py install and then restarting the already installed Apache service with service apache2 restart. It is also possible to run the application from its source directory by installing it with python setup.py develop and then adding the home directory to the Apache site configuration file.
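The pip.conf change from the dependency step of the workflow above is easy to get wrong by hand, so here is a minimal sketch that flips the setting programmatically. It assumes the option lives in the [global] section of the file, which may not match your container; allow_pypi is a hypothetical helper, and the sample URL in the usage is a placeholder. Note that configparser drops comments when it rewrites the file.

```python
import configparser
import io


def allow_pypi(pip_conf_text, section="global"):
    """Return pip.conf text with `no-index = False` set in `section`.

    The section name is an assumption; other options are preserved,
    but comments are lost when the text is rewritten.
    """
    # interpolation=None keeps values containing "%" untouched.
    parser = configparser.ConfigParser(interpolation=None)
    parser.read_string(pip_conf_text)
    if not parser.has_section(section):
        parser.add_section(section)
    parser.set(section, "no-index", "False")
    buffer = io.StringIO()
    parser.write(buffer)
    return buffer.getvalue()


# Made-up sample content, for illustration only.
print(allow_pypi("[global]\nno-index = True\nfind-links = http://repo.example/\n"))
```

Inside the container, the input would be the contents of /root/.pip/pip.conf, and the result would be written back to the same path before retrying pip install -r requirements.txt.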

OSA-AIO: The Pros

Working with OSA in place of DevStack has been a mostly pleasant experience for me. Not having dependency conflicts anymore is great, because with OSA, if I need to rebase one of the services and that requires new dependencies, the other services are unaffected in their own containers.

I have also found I rarely need to recreate the whole deployment from scratch. I usually do that when I want to upgrade the entire system to a new release of OpenStack, but, for day to day work, I find it easy to do local updates or repairs to an existing installation. I like being able to destroy a container and then have Ansible regenerate it for me without touching the rest of the services.

Finally, I really like that OSA allows me to choose what part of the system I install as development packages, while keeping the rest of the OpenStack cloud installed and configured for production use.

OSA-AIO: The Cons

But of course, as is the case with everything, there are some aspects of working with OSA that are not great, so I want to give you that side of the story as well.

OSA is a young project, and, as such, it does not have the wide support of the community that DevStack enjoys. This is particularly important if you work on modules that are not at the core of OpenStack. At the time I'm writing this, OSA supports the deployment of Keystone, Nova, Neutron, Glance, Cinder, Swift, Heat, Ceilometer and Horizon. If you want to work on a module not included in this list, then OSA is probably not that useful to you. But on the other side, if you want to create an Ansible playbook for a module currently not supported, you will be received with open arms.

In general, there are a fair number of configuration options exposed as Ansible variables for all modules, with one exception. When it comes to Neutron, configuration is not as flexible. Network tunnels across containers and VMs are always configured to use Linux Bridge. If you need to work with Open vSwitch, for example, you'll need to manually modify the configuration after running the playbooks, which is not fun. Also, none of the Neutron plugins are supported at this time.

Conclusion

I hope you find my workflow with openstack-ansible interesting to learn and use. It has saved me time when working on Heat upstream features and bug fixes. I have also heavily relied on OSA to debug and troubleshoot Keystone federation.

If you are interested in using OSA, I also encourage you to do a little bit of searching, as that will lead you to more articles and blog posts in which other OpenStack contributors explain how they incorporated OSA into their own workflows.
