cs224d Assignment, Problem Set 2 (Part 2): Named Entity Recognition in TensorFlow


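Everything here, including the previous two posts, can be deployed to Google Cloud through TensorFlow model hosting and published as a RESTful interface, which lets it integrate with any ERP or CRM system.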

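Goodness, that is cold, hard cash. Brutal. To me, the revolutionary significance of TensorFlow is that it successfully connects the mathematical algorithms learned at school with all kinds of real systems.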

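It delivers the integration with other systems that MATLAB never managed, and it provides GPU parallel computing on top of that. It simply rocks.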

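In theory, things like transportation problems, planning problems, and extremum problems can all be solved with TensorFlow. The most important point is that it integrates successfully with other systems.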

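This exercise practices the backpropagation algorithm and the training of deep neural networks, and uses them to implement named entity recognition (person names, place names, organization names, and other proper nouns).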

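The model is a neural network with a single hidden layer and a representation layer similar to the one seen in word2vec. Here there is no averaging and no sampling.

Instead, the context is defined explicitly as a "window" that contains the target word and its immediate left and right neighbors, which gives a row vector of dimension 3d.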

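x_{t-1}, x_t, x_{t+1} are one-hot row vectors, and L is the embedding matrix.

Each row L_i represents one specific word. A prediction is then made from this window vector.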
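The lookup described above can be sketched in NumPy. This is only an illustration: the vocabulary size, the dimension d, and the helper names `one_hot` and `window_vector` are made up for the example and do not come from the assignment code. It shows that multiplying a one-hot row vector by L just selects one row of L, and that concatenating three such rows gives the 3d-dimensional window vector.

```python
import numpy as np

rng = np.random.default_rng(0)
vocab_size, d = 6, 4                      # toy sizes, chosen only for illustration
L = rng.normal(size=(vocab_size, d))      # embedding matrix, one row per word


def one_hot(index, size):
    """Return a one-hot row vector with a 1 at position `index`."""
    v = np.zeros(size)
    v[index] = 1.0
    return v


def window_vector(word_ids, L):
    """Concatenate x_i L for every word id in the window."""
    return np.concatenate([one_hot(i, L.shape[0]) @ L for i in word_ids])


window = [2, 5, 1]                        # ids of x_{t-1}, x_t, x_{t+1}
x = window_vector(window, L)              # row vector of length 3 * d

# The one-hot product is the same as indexing rows of L directly.
same_as_row_lookup = np.allclose(x, L[window].reshape(-1))
```

In practice the one-hot product is never materialized; a direct row lookup (as `tf.nn.embedding_lookup` does in the code further down) gives the same result.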

The loss function is the cross-entropy loss.
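Written out, the prediction and the loss take roughly the following form. This is a reconstruction from the TensorFlow code later in this post, so the notation is an assumption: d is `embed_size`, H is `hidden_size`, and there are 5 tag classes.

$$
\begin{aligned}
x^{(t)} &= [\,x_{t-1}L,\; x_{t}L,\; x_{t+1}L\,] \in \mathbb{R}^{3d} \\
h &= \tanh\!\left(x^{(t)} W + b_1\right), \qquad W \in \mathbb{R}^{3d \times H},\; b_1 \in \mathbb{R}^{H} \\
\hat{y} &= \operatorname{softmax}\!\left(h U + b_2\right), \qquad U \in \mathbb{R}^{H \times 5},\; b_2 \in \mathbb{R}^{5} \\
J(\theta) &= \operatorname{CE}(y, \hat{y}) = -\sum_{i=1}^{5} y_i \log \hat{y}_i
\end{aligned}
$$

The code additionally adds an L2 penalty on W and U to this loss.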

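Next, each variable is initialized (random initialization), and then the derivative (gradient) of the loss function is computed with respect to every variable that needs to be updated.

These gradients are what gradient descent uses to search for the optimum.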
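As a rough illustration of what "computing the gradients" means for this architecture, here is a minimal NumPy sketch of the forward pass and hand-derived gradients for a one-hidden-layer tanh/softmax network, checked against central differences. The function names, toy sizes, and random data are all assumptions made for the example; the TensorFlow code below obtains its gradients automatically instead.

```python
import numpy as np


def softmax(z):
    """Row-wise softmax, shifted for numerical stability."""
    z = z - z.max(axis=1, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=1, keepdims=True)


def forward_backward(x, y, params):
    """Return the mean cross-entropy loss and its gradients w.r.t. W, b1, U, b2."""
    W, b1, U, b2 = params
    h = np.tanh(x @ W + b1)
    y_hat = softmax(h @ U + b2)
    n = x.shape[0]
    loss = -np.sum(y * np.log(y_hat)) / n

    delta2 = (y_hat - y) / n                    # gradient at the softmax input
    grad_U = h.T @ delta2
    grad_b2 = delta2.sum(axis=0)
    delta1 = (delta2 @ U.T) * (1.0 - h ** 2)    # back through tanh
    grad_W = x.T @ delta1
    grad_b1 = delta1.sum(axis=0)
    return loss, [grad_W, grad_b1, grad_U, grad_b2]


def numerical_grad(x, y, params, index, eps=1e-5):
    """Central-difference gradient of the loss w.r.t. params[index]."""
    p = params[index]
    grad = np.zeros_like(p)
    for idx in np.ndindex(*p.shape):
        old = p[idx]
        p[idx] = old + eps
        plus = forward_backward(x, y, params)[0]
        p[idx] = old - eps
        minus = forward_backward(x, y, params)[0]
        p[idx] = old                            # restore the original value
        grad[idx] = (plus - minus) / (2 * eps)
    return grad


rng = np.random.default_rng(0)
n, in_dim, hidden, classes = 4, 6, 5, 3         # toy sizes for the check
x = rng.normal(size=(n, in_dim))
y = np.eye(classes)[rng.integers(0, classes, size=n)]
params = [rng.normal(scale=0.5, size=(in_dim, hidden)), np.zeros(hidden),
          rng.normal(scale=0.5, size=(hidden, classes)), np.zeros(classes)]

loss, grads = forward_backward(x, y, params)
max_err = max(np.abs(g - numerical_grad(x, y, params, i)).max()
              for i, g in enumerate(grads))
```

A small `max_err` indicates that the analytic gradients agree with the numerical ones.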

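(1) Training starts from the word vectors trained in the previous post.

(2) Each word is then turned into a triple (the word together with its left and right neighbors).

(3) All variables in the formulas that need random values are initialized.

(4) Using the gradient formula for each variable, the gradient value of each variable is computed.

(5) Gradient descent is applied to update every variable: new value = current value - step size * gradient.

(6) The new values are substituted back into the formulas. If the cross-entropy loss falls below a fixed threshold, learning stops; otherwise it continues.

(7) The iteration above is applied to the vector of every word until training is complete, which yields the neural network model for named entity recognition.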
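The steps above can be sketched end to end in plain NumPy. Everything in this sketch is an assumption made for illustration: the toy word and tag ids, the padding id `PAD`, the sizes, the step size, and the stopping threshold. It uses full-batch gradient descent, whereas the TensorFlow code below uses mini-batches and Adam.

```python
import numpy as np

rng = np.random.default_rng(0)

# Toy corpus (invented): here a word's tag depends only on the word itself.
words = np.array([1, 2, 3, 1, 4, 3, 2, 4])
tags = np.array([0, 1, 0, 0, 1, 0, 1, 1])
vocab_size, d, hidden, n_tags = 5, 4, 8, 2
PAD = 0                                    # hypothetical id for sentence padding

# Step 2: one (left, centre, right) triple per word.
padded = np.concatenate([[PAD], words, [PAD]])
windows = np.stack([padded[i:i + 3] for i in range(len(words))])
Y = np.eye(n_tags)[tags]                   # one-hot labels

# Steps 1 and 3: in the post the embeddings come from the previous article;
# here every variable is simply initialized at random.
L = rng.normal(scale=0.5, size=(vocab_size, d))
W = rng.normal(scale=0.5, size=(3 * d, hidden))
b1 = np.zeros(hidden)
U = rng.normal(scale=0.5, size=(hidden, n_tags))
b2 = np.zeros(n_tags)

step_size, threshold, max_iters = 0.2, 0.05, 5000
n = len(windows)
for it in range(max_iters):
    # Forward pass on all windows.
    x = L[windows].reshape(n, -1)
    h = np.tanh(x @ W + b1)
    z = h @ U + b2
    z -= z.max(axis=1, keepdims=True)
    y_hat = np.exp(z) / np.exp(z).sum(axis=1, keepdims=True)
    loss = -np.mean(np.sum(Y * np.log(y_hat), axis=1))

    # Step 6: stop once the cross-entropy is below the fixed threshold.
    if loss < threshold:
        break

    # Step 4: gradients of the loss for every variable.
    delta2 = (y_hat - Y) / n
    delta1 = (delta2 @ U.T) * (1.0 - h ** 2)
    grad_x = (delta1 @ W.T).reshape(n, 3, d)
    grad_L = np.zeros_like(L)
    np.add.at(grad_L, windows, grad_x)     # accumulate over repeated word ids

    # Step 5: new value = current value - step size * gradient.
    U -= step_size * (h.T @ delta2)
    b2 -= step_size * delta2.sum(axis=0)
    W -= step_size * (x.T @ delta1)
    b1 -= step_size * delta1.sum(axis=0)
    L -= step_size * grad_L

predicted = y_hat.argmax(axis=1)           # predictions from the last forward pass
```

Note the minus sign in step 5: the update moves against the gradient, because the goal is to decrease the loss.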

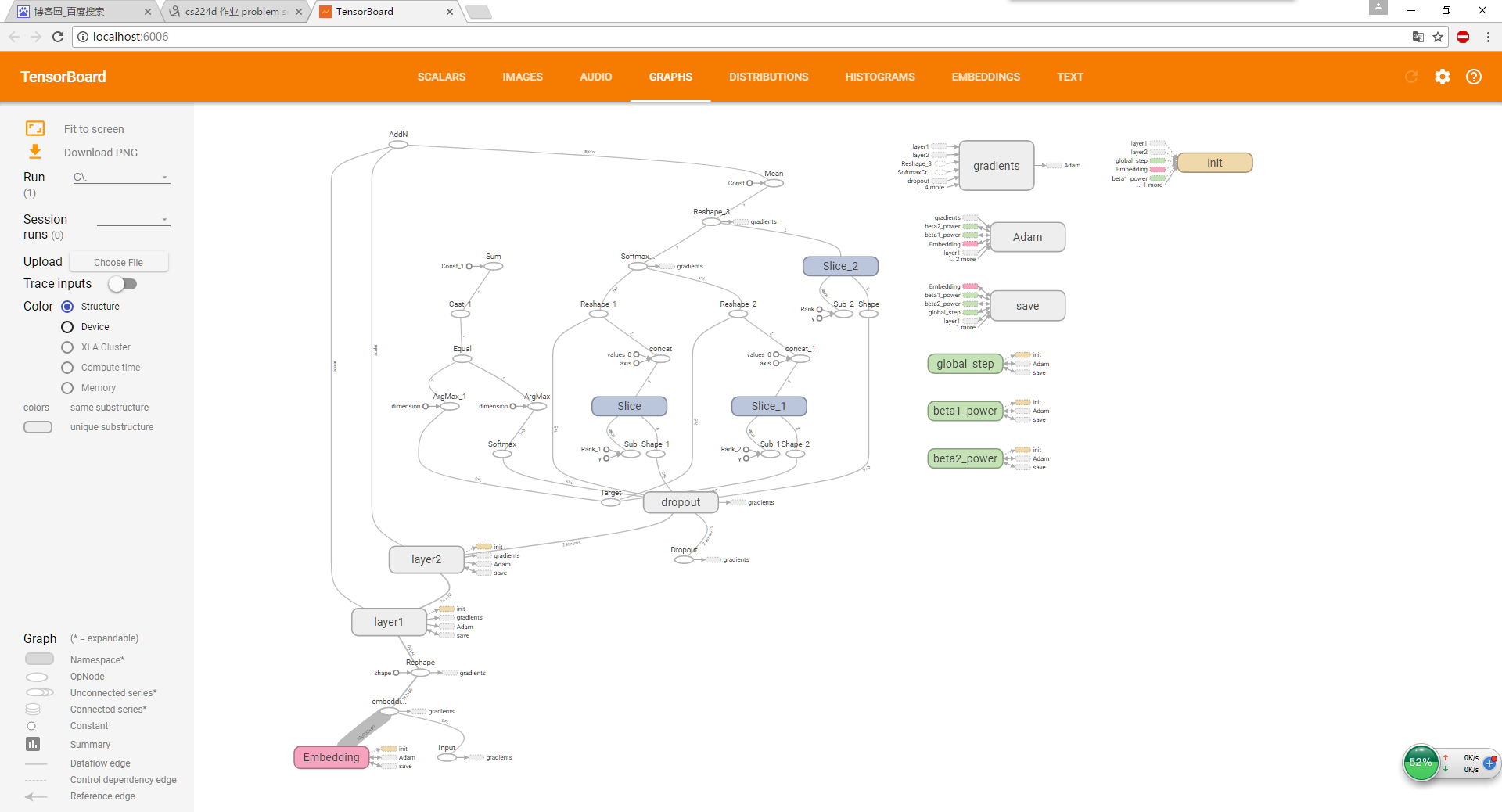

The structure of this network is shown in the following figure:

```python
'''
Created on 2017-09-22

@author: weizhen
'''
import os
import getpass
import sys
import time
import struct
import numpy as np
import tensorflow as tf
from q2_initialization import xavier_weight_init
import data_utils.utils as du
import data_utils.ner as ner
from utils import data_iterator
from model import LanguageModel


class Config(object):
    """
    Holds the model hyperparameters and data information.

    This configuration class stores hyperparameters and data information.
    A Config() instance is passed to the model object when it is created.
    """
    embed_size = 50
    batch_size = 64
    label_size = 5
    hidden_size = 100
    max_epochs = 50
    early_stopping = 2
    dropout = 0.9
    lr = 0.001
    l2 = 0.001
    window_size = 3


class NERModel(LanguageModel):
    """
    Implements a NER (Named Entity Recognition) model.

    This class implements a deep neural network for named entity recognition.
    It inherits from LanguageModel, which has an add_embedding method in
    addition to the standard model methods.
    """

    def load_data(self, debug=False):
        """Loads the starter word vectors and the train/dev/test data."""
        # Load the starter word vectors
        self.wv, word_to_num, num_to_word = ner.load_wv(
            'data/ner/vocab.txt', 'data/ner/wordVectors.txt')
        tagnames = ['O', 'LOC', 'MISC', 'ORG', 'PER']
        self.num_to_tag = dict(enumerate(tagnames))
        tag_to_num = {v: k for k, v in self.num_to_tag.items()}

        # Load the training set
        docs = du.load_dataset("data/ner/train")
        self.X_train, self.y_train = du.docs_to_windows(
            docs, word_to_num, tag_to_num, wsize=self.config.window_size)
        if debug:
            self.X_train = self.X_train[:1024]
            self.y_train = self.y_train[:1024]

        # Load the dev set (for tuning hyperparameters)
        docs = du.load_dataset('data/ner/dev')
        self.X_dev, self.y_dev = du.docs_to_windows(
            docs, word_to_num, tag_to_num, wsize=self.config.window_size)
        if debug:
            self.X_dev = self.X_dev[:1024]
            self.y_dev = self.y_dev[:1024]

        # Load the test set (dummy labels only)
        docs = du.load_dataset("data/ner/test.masked")
        self.X_test, self.y_test = du.docs_to_windows(
            docs, word_to_num, tag_to_num, wsize=self.config.window_size)

    def add_placeholders(self):
        """
        Generates placeholder variables to receive the input tensors.

        These placeholders are used as inputs by the rest of the model and are
        fed with data during training. Using "None" in a placeholder's shape
        keeps that dimension flexible.

        Adds the following nodes to the computation graph:
            input_placeholder:   tensor (None, window_size), type tf.int32
            labels_placeholder:  tensor (None, label_size), type tf.float32
            dropout_placeholder: dropout value placeholder (scalar), type tf.float32

        The placeholders are stored on self as instance attributes.
        """
        self.input_placeholder = tf.placeholder(
            tf.int32, shape=[None, self.config.window_size], name='Input')
        self.labels_placeholder = tf.placeholder(
            tf.float32, shape=[None, self.config.label_size], name='Target')
        self.dropout_placeholder = tf.placeholder(tf.float32, name='Dropout')

    def create_feed_dict(self, input_batch, dropout, label_batch=None):
        """
        Creates the feed dictionary for the softmax classifier.

        feed_dict = {
            <placeholder>: <tensor of values to be passed for placeholder>,
        }

        Hint: The keys for the feed_dict should be a subset of the placeholder
              tensors created in add_placeholders.
        Hint: When label_batch is None, don't add a labels entry to the feed_dict.

        Args:
            input_batch: A batch of input data
            label_batch: A batch of label data
        Returns:
            feed_dict: The feed dictionary mapping from placeholders to values.
        """
        feed_dict = {
            self.input_placeholder: input_batch,
        }
        if label_batch is not None:
            feed_dict[self.labels_placeholder] = label_batch
        if dropout is not None:
            feed_dict[self.dropout_placeholder] = dropout
        return feed_dict

    def add_embedding(self):
        # The embedding lookup is currently only implemented for the CPU
        with tf.device('/cpu:0'):
            # Only the vocabulary size is taken from self.wv here; the
            # variable itself gets TensorFlow's default initialization.
            embedding = tf.get_variable(
                'Embedding', [len(self.wv), self.config.embed_size])
            window = tf.nn.embedding_lookup(embedding, self.input_placeholder)
            window = tf.reshape(
                window, [-1, self.config.window_size * self.config.embed_size])
        return window

    def add_model(self, window):
        """Adds the 1-hidden-layer NN.

        Hint: Use one variable_scope ("layer") for the first hidden layer and
              another ("Softmax") for the linear transformation before the
              final softmax. Make sure to use the xavier_weight_init method
              you defined earlier.
        Hint: Make sure to add regularization and dropout to this network.
              Regularization should be added to the loss function; dropout
              should be applied to the layer activations.
        Hint: Consider using a TensorFlow Graph collection (e.g. "total_loss")
              to collect the regularization and loss terms that are summed
              later in the loss function.
        Hint: Variables of the following shapes are needed:
              W:  (window_size * embed_size, hidden_size)
              b1: (hidden_size,)
              U:  (hidden_size, label_size)
              b2: (label_size,)

        Args:
            window: tf.Tensor of shape (-1, window_size * embed_size)
        Returns:
            output: tf.Tensor of shape (batch_size, label_size)
        """
        with tf.variable_scope('layer1', initializer=xavier_weight_init()) as scope:
            W = tf.get_variable(
                'w', [self.config.window_size * self.config.embed_size,
                      self.config.hidden_size])
            b1 = tf.get_variable('b1', [self.config.hidden_size])
            h = tf.nn.tanh(tf.matmul(window, W) + b1)
            if self.config.l2:
                tf.add_to_collection(
                    'total_loss', 0.5 * self.config.l2 * tf.nn.l2_loss(W))

        with tf.variable_scope('layer2', initializer=xavier_weight_init()) as scope:
            U = tf.get_variable(
                'U', [self.config.hidden_size, self.config.label_size])
            b2 = tf.get_variable('b2', [self.config.label_size])
            y = tf.matmul(h, U) + b2
            if self.config.l2:
                tf.add_to_collection(
                    'total_loss', 0.5 * self.config.l2 * tf.nn.l2_loss(U))
        # Dropout is applied to the output logits here; dropout_placeholder
        # holds the keep probability.
        output = tf.nn.dropout(y, self.dropout_placeholder)
        return output

    def add_loss_op(self, y):
        """Adds the cross-entropy loss to the computation graph.

        Hint: tf.nn.softmax_cross_entropy_with_logits can simplify the
              implementation, and tf.reduce_mean may be useful.

        Args:
            y: A tensor of shape (batch_size, n_classes)
        Returns:
            loss: A 0-d tensor (scalar)
        """
        cross_entropy = tf.reduce_mean(
            tf.nn.softmax_cross_entropy_with_logits(
                logits=y, labels=self.labels_placeholder))
        tf.add_to_collection('total_loss', cross_entropy)
        loss = tf.add_n(tf.get_collection('total_loss'))
        return loss

    def add_training_op(self, loss):
        """Sets up the training op.

        Creates an optimizer and applies the gradient updates to all
        trainable variables.

        Hint: Use tf.train.AdamOptimizer for this model. Calling
              optimizer.minimize() returns a train_op object.

        Args:
            loss: Loss tensor, from cross_entropy_loss
        Returns:
            train_op: The Op for training
        """
        optimizer = tf.train.AdamOptimizer(self.config.lr)
        global_step = tf.Variable(0, name='global_step', trainable=False)
        train_op = optimizer.minimize(loss, global_step=global_step)
        return train_op

    def __init__(self, config):
        """Builds the neural network from the functions defined above."""
        self.config = config
        self.load_data(debug=False)
        self.add_placeholders()
        window = self.add_embedding()
        y = self.add_model(window)

        self.loss = self.add_loss_op(y)
        self.predictions = tf.nn.softmax(y)
        one_hot_prediction = tf.arg_max(self.predictions, 1)
        correct_prediction = tf.equal(
            tf.arg_max(self.labels_placeholder, 1), one_hot_prediction)
        self.correct_predictions = tf.reduce_sum(
            tf.cast(correct_prediction, 'int32'))
        self.train_op = self.add_training_op(self.loss)

    def run_epoch(self, session, input_data, input_labels,
                  shuffle=True, verbose=True):
        orig_X, orig_y = input_data, input_labels
        dp = self.config.dropout
        # We're interested in keeping track of the loss and accuracy during training
        total_loss = []
        total_correct_examples = 0
        total_processed_examples = 0
        total_steps = len(orig_X) / self.config.batch_size
        for step, (x, y) in enumerate(
                data_iterator(orig_X, orig_y,
                              batch_size=self.config.batch_size,
                              label_size=self.config.label_size,
                              shuffle=shuffle)):
            feed = self.create_feed_dict(input_batch=x, dropout=dp, label_batch=y)
            loss, total_correct, _ = session.run(
                [self.loss, self.correct_predictions, self.train_op],
                feed_dict=feed)
            total_processed_examples += len(x)
            total_correct_examples += total_correct
            total_loss.append(loss)
            if verbose and step % verbose == 0:
                sys.stdout.write('\r{}/{} : loss = {}'.format(
                    step, total_steps, np.mean(total_loss)))
        if verbose:
            sys.stdout.write('\r')
            sys.stdout.flush()
        return (np.mean(total_loss),
                total_correct_examples / float(total_processed_examples))

    def predict(self, session, X, y=None):
        """Makes predictions from the provided model."""
        # If y is given, the loss is also calculated.
        # Dropout is deactivated by setting the keep probability to 1.
        dp = 1
        losses = []
        results = []
        if np.any(y):
            data = data_iterator(X, y, batch_size=self.config.batch_size,
                                 label_size=self.config.label_size,
                                 shuffle=False)
        else:
            data = data_iterator(X, batch_size=self.config.batch_size,
                                 label_size=self.config.label_size,
                                 shuffle=False)
        for step, (x, y) in enumerate(data):
            feed = self.create_feed_dict(input_batch=x, dropout=dp)
            if np.any(y):
                feed[self.labels_placeholder] = y
                loss, preds = session.run(
                    [self.loss, self.predictions], feed_dict=feed)
                losses.append(loss)
            else:
                preds = session.run(self.predictions, feed_dict=feed)
            predicted_indices = preds.argmax(axis=1)
            results.extend(predicted_indices)
        return np.mean(losses), results


def print_confusion(confusion, num_to_tag):
    """Helper method that prints the confusion matrix."""
    # Summing top to bottom gets the total number of tags guessed as T
    total_guessed_tags = confusion.sum(axis=0)
    # Summing left to right gets the total number of true tags
    total_true_tags = confusion.sum(axis=1)
    print("")
    print(confusion)
    for i, tag in sorted(num_to_tag.items()):
        print(i, "-----", tag)
        prec = confusion[i, i] / float(total_guessed_tags[i])
        recall = confusion[i, i] / float(total_true_tags[i])
        print("Tag: {} - P {:2.4f} / R {:2.4f}".format(tag, prec, recall))


def calculate_confusion(config, predicted_indices, y_indices):
    """Helper method that calculates the confusion matrix."""
    confusion = np.zeros((config.label_size, config.label_size), dtype=np.int32)
    for i in range(len(y_indices)):
        correct_label = y_indices[i]
        guessed_label = predicted_indices[i]
        confusion[correct_label, guessed_label] += 1
    return confusion


def save_predictions(predictions, filename):
    """Saves the predictions to the provided file."""
    with open(filename, "w") as f:
        for prediction in predictions:
            f.write(str(prediction) + "\n")


def test_NER():
    """Tests the NER model implementation.

    You can use this function to test your implementation of the named entity
    recognition network. When debugging, set max_epochs in the Config object
    to 1 so that you can iterate quickly.
    """
    config = Config()
    with tf.Graph().as_default():
        model = NERModel(config)

        # Deprecated in favor of tf.global_variables_initializer(), as the
        # warning at the top of the log below points out.
        init = tf.initialize_all_variables()
        saver = tf.train.Saver()

        with tf.Session() as session:
            best_val_loss = float('inf')
            best_val_epoch = 0

            session.run(init)
            for epoch in range(config.max_epochs):
                print('Epoch {}'.format(epoch))
                start = time.time()
                train_loss, train_acc = model.run_epoch(
                    session, model.X_train, model.y_train)
                val_loss, predictions = model.predict(
                    session, model.X_dev, model.y_dev)
                print('Training loss : {}'.format(train_loss))
                print('Training acc : {}'.format(train_acc))
                print('Validation loss : {}'.format(val_loss))
                if val_loss < best_val_loss:
                    best_val_loss = val_loss
                    best_val_epoch = epoch
                    if not os.path.exists("./weights"):
                        os.makedirs("./weights")
                    saver.save(session, './weights/ner.weights')
                if epoch - best_val_epoch > config.early_stopping:
                    break
                confusion = calculate_confusion(config, predictions, model.y_dev)
                print_confusion(confusion, model.num_to_tag)
                print('Total time: {}'.format(time.time() - start))

            # saver.restore(session, './weights/ner.weights')
            # print('Test')
            # print('=-=-=')
            # print('Writing predictions to q2_test.predicted')
            # _, predictions = model.predict(session, model.X_test, model.y_test)
            # save_predictions(predictions, "q2_test.predicted")


if __name__ == "__main__":
    test_NER()
```
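The code imports `xavier_weight_init` from `q2_initialization`, which is not shown in this post. As a rough idea of what such an initializer computes, here is a NumPy sketch of Xavier (Glorot) uniform initialization. The function name `xavier_uniform` is hypothetical, and this is an assumption about the helper's behaviour rather than its actual source; the real helper returns a TensorFlow initializer, not a NumPy array.

```python
import numpy as np


def xavier_uniform(shape, rng=None):
    """Sample weights from U(-eps, eps) with eps = sqrt(6 / sum(shape)).

    For a 2-D weight matrix, sum(shape) is fan_in + fan_out.
    """
    rng = np.random.default_rng() if rng is None else rng
    eps = np.sqrt(6.0 / np.sum(shape))
    return rng.uniform(-eps, eps, size=shape)


# Shape of W in the model above: (window_size * embed_size, hidden_size).
W = xavier_uniform((3 * 50, 100), np.random.default_rng(0))
bound = np.sqrt(6.0 / (150 + 100))
```

The bound shrinks as the layer gets wider, which keeps the scale of the activations roughly constant from layer to layer at initialization.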

Below is the log from the training run.

WARNING:tensorflow:From C:\Users\weizhen\Documents\GitHub\TflinearClassifier\q2_NER.py:291: initialize_all_variables (from tensorflow.python.ops.variables) is deprecated and will be removed after 2017-03-02.Instructions for updating:Use `tf.global_variables_initializer` instead.2017-10-02 16:31:40.821644: W c:\tf_jenkins\home\workspace\release-win\device\gpu\os\windows\tensorflow\core\platform\cpu_feature_guard.cc:45] The TensorFlow library wasn't compiled to use SSE instructions, but these are available on your machine and could speed up CPU computations.2017-10-02 16:31:40.822256: W c:\tf_jenkins\home\workspace\release-win\device\gpu\os\windows\tensorflow\core\platform\cpu_feature_guard.cc:45] The TensorFlow library wasn't compiled to use SSE2 instructions, but these are available on your machine and could speed up CPU computations.2017-10-02 16:31:40.822842: W c:\tf_jenkins\home\workspace\release-win\device\gpu\os\windows\tensorflow\core\platform\cpu_feature_guard.cc:45] The TensorFlow library wasn't compiled to use SSE3 instructions, but these are available on your machine and could speed up CPU computations.2017-10-02 16:31:40.823263: W c:\tf_jenkins\home\workspace\release-win\device\gpu\os\windows\tensorflow\core\platform\cpu_feature_guard.cc:45] The TensorFlow library wasn't compiled to use SSE4.1 instructions, but these are available on your machine and could speed up CPU computations.2017-10-02 16:31:40.823697: W c:\tf_jenkins\home\workspace\release-win\device\gpu\os\windows\tensorflow\core\platform\cpu_feature_guard.cc:45] The TensorFlow library wasn't compiled to use SSE4.2 instructions, but these are available on your machine and could speed up CPU computations.2017-10-02 16:31:40.824035: W c:\tf_jenkins\home\workspace\release-win\device\gpu\os\windows\tensorflow\core\platform\cpu_feature_guard.cc:45] The TensorFlow library wasn't compiled to use AVX instructions, but these are available on your machine and could speed up CPU computations.2017-10-02 
16:31:40.824464: W c:\tf_jenkins\home\workspace\release-win\device\gpu\os\windows\tensorflow\core\platform\cpu_feature_guard.cc:45] The TensorFlow library wasn't compiled to use AVX2 instructions, but these are available on your machine and could speed up CPU computations.2017-10-02 16:31:40.824850: W c:\tf_jenkins\home\workspace\release-win\device\gpu\os\windows\tensorflow\core\platform\cpu_feature_guard.cc:45] The TensorFlow library wasn't compiled to use FMA instructions, but these are available on your machine and could speed up CPU computations.2017-10-02 16:31:42.184267: I c:\tf_jenkins\home\workspace\release-win\device\gpu\os\windows\tensorflow\core\common_runtime\gpu\gpu_device.cc:887] Found device 0 with properties: name: GeForce 940MXmajor: 5 minor: 0 memoryClockRate (GHz) 1.189pciBusID 0000:01:00.0Total memory: 2.00GiBFree memory: 1.66GiB2017-10-02 16:31:42.184794: I c:\tf_jenkins\home\workspace\release-win\device\gpu\os\windows\tensorflow\core\common_runtime\gpu\gpu_device.cc:908] DMA: 0 2017-10-02 16:31:42.185018: I c:\tf_jenkins\home\workspace\release-win\device\gpu\os\windows\tensorflow\core\common_runtime\gpu\gpu_device.cc:918] 0: Y 2017-10-02 16:31:42.185582: I c:\tf_jenkins\home\workspace\release-win\device\gpu\os\windows\tensorflow\core\common_runtime\gpu\gpu_device.cc:977] Creating TensorFlow device (/gpu:0) -> (device: 0, name: GeForce 940MX, pci bus id: 0000:01:00.0)Epoch 00/3181.578125 : loss = 1.6745071411132812Training loss : 1.6745071411132812Training acc : 0.046875Validation loss : 1.6497892141342163[[ 0 0 0 0 42759] [ 0 0 0 0 2094] [ 0 0 0 0 1268] [ 0 0 0 0 2092] [ 0 0 0 0 3149]]0 ----- OC:\Users\weizhen\Documents\GitHub\TflinearClassifier\q2_NER.py:262: RuntimeWarning: invalid value encountered in true_divide prec = confusion[i, i] / float(total_guessed_tags[i])Tag: O - P nan / R 0.00001 ----- LOCTag: LOC - P nan / R 0.00002 ----- MISCTag: MISC - P nan / R 0.00003 ----- ORGTag: ORG - P nan / R 0.00004 ----- PERTag: PER - P 0.0613 / R 
1.0000Total time: 3.293267250061035Epoch 10/3181.578125 : loss = 1.6299598217010498Training loss : 1.6299598217010498Training acc : 0.0625Validation loss : 1.6258254051208496[[ 0 0 0 0 42759] [ 0 0 0 0 2094] [ 0 0 0 0 1268] [ 0 0 0 0 2092] [ 0 0 0 0 3149]]0 ----- OTag: O - P nan / R 0.00001 ----- LOCTag: LOC - P nan / R 0.00002 ----- MISCTag: MISC - P nan / R 0.00003 ----- ORGTag: ORG - P nan / R 0.00004 ----- PERTag: PER - P 0.0613 / R 1.0000Total time: 3.019239664077759Epoch 20/3181.578125 : loss = 1.6292331218719482Training loss : 1.6292331218719482Training acc : 0.078125Validation loss : 1.6021082401275635[[ 0 0 0 0 42759] [ 0 0 0 0 2094] [ 0 0 0 0 1268] [ 0 0 0 0 2092] [ 0 0 0 0 3149]]0 ----- OTag: O - P nan / R 0.00001 ----- LOCTag: LOC - P nan / R 0.00002 ----- MISCTag: MISC - P nan / R 0.00003 ----- ORGTag: ORG - P nan / R 0.00004 ----- PERTag: PER - P 0.0613 / R 1.0000Total time: 2.9794013500213623Epoch 30/3181.578125 : loss = 1.6349217891693115Training loss : 1.6349217891693115Training acc : 0.015625Validation loss : 1.5785211324691772[[ 0 0 0 0 42759] [ 0 0 0 0 2094] [ 0 0 0 0 1268] [ 0 0 0 0 2092] [ 0 0 0 0 3149]]0 ----- OTag: O - P nan / R 0.00001 ----- LOCTag: LOC - P nan / R 0.00002 ----- MISCTag: MISC - P nan / R 0.00003 ----- ORGTag: ORG - P nan / R 0.00004 ----- PERTag: PER - P 0.0613 / R 1.0000Total time: 2.4377009868621826Epoch 40/3181.578125 : loss = 1.5779037475585938Training loss : 1.5779037475585938Training acc : 0.09375Validation loss : 1.5549894571304321[[ 0 0 0 0 42759] [ 0 0 0 0 2094] [ 0 0 0 0 1268] [ 0 0 0 0 2092] [ 0 0 0 0 3149]]0 ----- OTag: O - P nan / R 0.00001 ----- LOCTag: LOC - P nan / R 0.00002 ----- MISCTag: MISC - P nan / R 0.00003 ----- ORGTag: ORG - P nan / R 0.00004 ----- PERTag: PER - P 0.0613 / R 1.0000Total time: 2.4941294193267822Epoch 50/3181.578125 : loss = 1.5726330280303955Training loss : 1.5726330280303955Training acc : 0.078125Validation loss : 1.5313135385513306[[ 0 0 0 0 42759] [ 0 0 0 0 2094] [ 0 0 1 0 1267] [ 
0 0 0 0 2092] [ 0 0 0 0 3149]]0 ----- OTag: O - P nan / R 0.00001 ----- LOCTag: LOC - P nan / R 0.00002 ----- MISCTag: MISC - P 1.0000 / R 0.00083 ----- ORGTag: ORG - P nan / R 0.00004 ----- PERTag: PER - P 0.0613 / R 1.0000Total time: 2.4369616508483887Epoch 60/3181.578125 : loss = 1.530135989189148Training loss : 1.530135989189148Training acc : 0.046875Validation loss : 1.5071308612823486[[ 0 0 0 0 42759] [ 0 0 0 0 2094] [ 0 0 6 0 1262] [ 0 0 0 0 2092] [ 0 0 0 0 3149]]0 ----- OTag: O - P nan / R 0.00001 ----- LOCTag: LOC - P nan / R 0.00002 ----- MISCTag: MISC - P 1.0000 / R 0.00473 ----- ORGTag: ORG - P nan / R 0.00004 ----- PERTag: PER - P 0.0613 / R 1.0000Total time: 2.4289004802703857Epoch 70/3181.578125 : loss = 1.4907350540161133Training loss : 1.4907350540161133Training acc : 0.0625Validation loss : 1.482757806777954[[ 789 0 0 0 41970] [ 19 0 0 0 2075] [ 1 0 7 0 1260] [ 45 0 1 0 2046] [ 48 0 0 0 3101]]0 ----- OTag: O - P 0.8747 / R 0.01851 ----- LOCTag: LOC - P nan / R 0.00002 ----- MISCTag: MISC - P 0.8750 / R 0.00553 ----- ORGTag: ORG - P nan / R 0.00004 ----- PERTag: PER - P 0.0615 / R 0.9848Total time: 3.0616846084594727Epoch 80/3181.578125 : loss = 1.474185824394226Training loss : 1.474185824394226Training acc : 0.046875Validation loss : 1.4580132961273193[[ 7684 0 0 0 35075] [ 364 0 0 0 1730] [ 51 0 11 0 1206] [ 445 0 1 0 1646] [ 500 0 0 0 2649]]0 ----- OTag: O - P 0.8496 / R 0.17971 ----- LOCTag: LOC - P nan / R 0.00002 ----- MISCTag: MISC - P 0.9167 / R 0.00873 ----- ORGTag: ORG - P nan / R 0.00004 ----- PERTag: PER - P 0.0626 / R 0.8412Total time: 2.969224214553833Epoch 90/3181.578125 : loss = 1.498674988746643Training loss : 1.498674988746643Training acc : 0.28125Validation loss : 1.4329923391342163[[20553 0 1 0 22205] [ 1273 0 0 0 821] [ 364 0 7 0 897] [ 980 0 2 0 1110] [ 1426 0 0 0 1723]]0 ----- OTag: O - P 0.8356 / R 0.48071 ----- LOCTag: LOC - P nan / R 0.00002 ----- MISCTag: MISC - P 0.7000 / R 0.00553 ----- ORGTag: ORG - P nan / R 0.00004 
----- PERTag: PER - P 0.0644 / R 0.5472Total time: 2.5193586349487305Epoch 100/3181.578125 : loss = 1.4385690689086914Training loss : 1.4385690689086914Training acc : 0.421875Validation loss : 1.4074962139129639[[34564 0 4 0 8191] [ 1764 0 0 0 330] [ 767 0 7 0 494] [ 1594 0 2 0 496] [ 2355 0 0 0 794]]0 ----- OTag: O - P 0.8421 / R 0.80831 ----- LOCTag: LOC - P nan / R 0.00002 ----- MISCTag: MISC - P 0.5385 / R 0.00553 ----- ORGTag: ORG - P nan / R 0.00004 ----- PERTag: PER - P 0.0770 / R 0.2521Total time: 2.3797054290771484Epoch 110/3181.578125 : loss = 1.4594019651412964Training loss : 1.4594019651412964Training acc : 0.546875Validation loss : 1.3817591667175293[[40966 0 2 0 1791] [ 1976 0 0 0 118] [ 1088 0 4 0 176] [ 1900 0 0 0 192] [ 2814 0 0 0 335]]0 ----- OTag: O - P 0.8404 / R 0.95811 ----- LOCTag: LOC - P nan / R 0.00002 ----- MISCTag: MISC - P 0.6667 / R 0.00323 ----- ORGTag: ORG - P nan / R 0.00004 ----- PERTag: PER - P 0.1283 / R 0.1064Total time: 2.4073784351348877Epoch 120/3181.578125 : loss = 1.3720815181732178Training loss : 1.3720815181732178Training acc : 0.78125Validation loss : 1.3555692434310913[[42709 0 0 0 50] [ 2085 0 0 0 9] [ 1266 0 0 0 2] [ 2086 0 0 0 6] [ 3115 0 0 0 34]]0 ----- OTag: O - P 0.8332 / R 0.99881 ----- LOCTag: LOC - P nan / R 0.00002 ----- MISCTag: MISC - P nan / R 0.00003 ----- ORGTag: ORG - P nan / R 0.00004 ----- PERTag: PER - P 0.3366 / R 0.0108Total time: 2.4379138946533203Epoch 130/3181.578125 : loss = 1.3634321689605713Training loss : 1.3634321689605713Training acc : 0.828125Validation loss : 1.328884482383728[[42759 0 0 0 0] [ 2094 0 0 0 0] [ 1268 0 0 0 0] [ 2092 0 0 0 0] [ 3149 0 0 0 0]]0 ----- OTag: O - P 0.8325 / R 1.00001 ----- LOCTag: LOC - P nan / R 0.00002 ----- MISCTag: MISC - P nan / R 0.00003 ----- ORGTag: ORG - P nan / R 0.00004 ----- PERTag: PER - P nan / R 0.0000Total time: 2.8378336429595947Epoch 140/3181.578125 : loss = 1.3688112497329712Training loss : 1.3688112497329712Training acc : 0.75Validation loss 
: 1.302013635635376[[42759 0 0 0 0] [ 2094 0 0 0 0] [ 1268 0 0 0 0] [ 2092 0 0 0 0] [ 3149 0 0 0 0]]0 ----- OTag: O - P 0.8325 / R 1.00001 ----- LOCTag: LOC - P nan / R 0.00002 ----- MISCTag: MISC - P nan / R 0.00003 ----- ORGTag: ORG - P nan / R 0.00004 ----- PERTag: PER - P nan / R 0.0000Total time: 2.7120652198791504Epoch 150/3181.578125 : loss = 1.3235018253326416Training loss : 1.3235018253326416Training acc : 0.78125Validation loss : 1.2748615741729736[[42759 0 0 0 0] [ 2094 0 0 0 0] [ 1268 0 0 0 0] [ 2092 0 0 0 0] [ 3149 0 0 0 0]]0 ----- OTag: O - P 0.8325 / R 1.00001 ----- LOCTag: LOC - P nan / R 0.00002 ----- MISCTag: MISC - P nan / R 0.00003 ----- ORGTag: ORG - P nan / R 0.00004 ----- PERTag: PER - P nan / R 0.0000Total time: 2.6104214191436768Epoch 160/3181.578125 : loss = 1.3185033798217773Training loss : 1.3185033798217773Training acc : 0.765625Validation loss : 1.2475427389144897[[42759 0 0 0 0] [ 2094 0 0 0 0] [ 1268 0 0 0 0] [ 2092 0 0 0 0] [ 3149 0 0 0 0]]0 ----- OTag: O - P 0.8325 / R 1.00001 ----- LOCTag: LOC - P nan / R 0.00002 ----- MISCTag: MISC - P nan / R 0.00003 ----- ORGTag: ORG - P nan / R 0.00004 ----- PERTag: PER - P nan / R 0.0000Total time: 2.3700265884399414Epoch 170/3181.578125 : loss = 1.3193732500076294Training loss : 1.3193732500076294Training acc : 0.703125Validation loss : 1.2201541662216187[[42759 0 0 0 0] [ 2094 0 0 0 0] [ 1268 0 0 0 0] [ 2092 0 0 0 0] [ 3149 0 0 0 0]]0 ----- OTag: O - P 0.8325 / R 1.00001 ----- LOCTag: LOC - P nan / R 0.00002 ----- MISCTag: MISC - P nan / R 0.00003 ----- ORGTag: ORG - P nan / R 0.00004 ----- PERTag: PER - P nan / R 0.0000Total time: 2.439958095550537Epoch 180/3181.578125 : loss = 1.2185351848602295Training loss : 1.2185351848602295Training acc : 0.75Validation loss : 1.1924999952316284[[42759 0 0 0 0] [ 2094 0 0 0 0] [ 1268 0 0 0 0] [ 2092 0 0 0 0] [ 3149 0 0 0 0]]0 ----- OTag: O - P 0.8325 / R 1.00001 ----- LOCTag: LOC - P nan / R 0.00002 ----- MISCTag: MISC - P nan / R 0.00003 ----- 
ORGTag: ORG - P nan / R 0.00004 ----- PERTag: PER - P nan / R 0.0000Total time: 2.8921308517456055Epoch 190/3181.578125 : loss = 1.2128124237060547Training loss : 1.2128124237060547Training acc : 0.75Validation loss : 1.164793610572815[[42759 0 0 0 0] [ 2094 0 0 0 0] [ 1268 0 0 0 0] [ 2092 0 0 0 0] [ 3149 0 0 0 0]]0 ----- OTag: O - P 0.8325 / R 1.00001 ----- LOCTag: LOC - P nan / R 0.00002 ----- MISCTag: MISC - P nan / R 0.00003 ----- ORGTag: ORG - P nan / R 0.00004 ----- PERTag: PER - P nan / R 0.0000Total time: 2.3643531799316406Epoch 200/3181.578125 : loss = 1.174509882926941Training loss : 1.174509882926941Training acc : 0.71875Validation loss : 1.137137770652771[[42759 0 0 0 0] [ 2094 0 0 0 0] [ 1268 0 0 0 0] [ 2092 0 0 0 0] [ 3149 0 0 0 0]]0 ----- OTag: O - P 0.8325 / R 1.00001 ----- LOCTag: LOC - P nan / R 0.00002 ----- MISCTag: MISC - P nan / R 0.00003 ----- ORGTag: ORG - P nan / R 0.00004 ----- PERTag: PER - P nan / R 0.0000Total time: 2.7417917251586914Epoch 210/3181.578125 : loss = 1.056962490081787Training loss : 1.056962490081787Training acc : 0.84375Validation loss : 1.1092265844345093[[42759 0 0 0 0] [ 2094 0 0 0 0] [ 1268 0 0 0 0] [ 2092 0 0 0 0] [ 3149 0 0 0 0]]0 ----- OTag: O - P 0.8325 / R 1.00001 ----- LOCTag: LOC - P nan / R 0.00002 ----- MISCTag: MISC - P nan / R 0.00003 ----- ORGTag: ORG - P nan / R 0.00004 ----- PERTag: PER - P nan / R 0.0000Total time: 2.5229830741882324Epoch 220/3181.578125 : loss = 1.1316486597061157Training loss : 1.1316486597061157Training acc : 0.796875Validation loss : 1.0816625356674194[[42759 0 0 0 0] [ 2094 0 0 0 0] [ 1268 0 0 0 0] [ 2092 0 0 0 0] [ 3149 0 0 0 0]]0 ----- OTag: O - P 0.8325 / R 1.00001 ----- LOCTag: LOC - P nan / R 0.00002 ----- MISCTag: MISC - P nan / R 0.00003 ----- ORGTag: ORG - P nan / R 0.00004 ----- PERTag: PER - P nan / R 0.0000Total time: 2.7065508365631104Epoch 230/3181.578125 : loss = 1.073209524154663Training loss : 1.073209524154663Training acc : 0.78125Validation loss : 
1.0542036294937134[[42759 0 0 0 0] [ 2094 0 0 0 0] [ 1268 0 0 0 0] [ 2092 0 0 0 0] [ 3149 0 0 0 0]]0 ----- OTag: O - P 0.8325 / R 1.00001 ----- LOCTag: LOC - P nan / R 0.00002 ----- MISCTag: MISC - P nan / R 0.00003 ----- ORGTag: ORG - P nan / R 0.00004 ----- PERTag: PER - P nan / R 0.0000Total time: 2.6912477016448975Epoch 240/3181.578125 : loss = 1.0102397203445435Training loss : 1.0102397203445435Training acc : 0.859375Validation loss : 1.0271364450454712[[42759 0 0 0 0] [ 2094 0 0 0 0] [ 1268 0 0 0 0] [ 2092 0 0 0 0] [ 3149 0 0 0 0]]0 ----- OTag: O - P 0.8325 / R 1.00001 ----- LOCTag: LOC - P nan / R 0.00002 ----- MISCTag: MISC - P nan / R 0.00003 ----- ORGTag: ORG - P nan / R 0.00004 ----- PERTag: PER - P nan / R 0.0000Total time: 2.5654654502868652Epoch 250/3181.578125 : loss = 1.0918526649475098Training loss : 1.0918526649475098Training acc : 0.734375Validation loss : 1.001003623008728[[42759 0 0 0 0] [ 2094 0 0 0 0] [ 1268 0 0 0 0] [ 2092 0 0 0 0] [ 3149 0 0 0 0]]0 ----- OTag: O - P 0.8325 / R 1.00001 ----- LOCTag: LOC - P nan / R 0.00002 ----- MISCTag: MISC - P nan / R 0.00003 ----- ORGTag: ORG - P nan / R 0.00004 ----- PERTag: PER - P nan / R 0.0000Total time: 2.663228750228882Epoch 260/3181.578125 : loss = 1.0216875076293945Training loss : 1.0216875076293945Training acc : 0.84375Validation loss : 0.9754133820533752[[42759 0 0 0 0] [ 2094 0 0 0 0] [ 1268 0 0 0 0] [ 2092 0 0 0 0] [ 3149 0 0 0 0]]0 ----- OTag: O - P 0.8325 / R 1.00001 ----- LOCTag: LOC - P nan / R 0.00002 ----- MISCTag: MISC - P nan / R 0.00003 ----- ORGTag: ORG - P nan / R 0.00004 ----- PERTag: PER - P nan / R 0.0000Total time: 2.5872113704681396Epoch 270/3181.578125 : loss = 1.0990902185440063Training loss : 1.0990902185440063Training acc : 0.78125Validation loss : 0.9509017467498779[[42759 0 0 0 0] [ 2094 0 0 0 0] [ 1268 0 0 0 0] [ 2092 0 0 0 0] [ 3149 0 0 0 0]]0 ----- OTag: O - P 0.8325 / R 1.00001 ----- LOCTag: LOC - P nan / R 0.00002 ----- MISCTag: MISC - P nan / R 0.00003 ----- 
ORGTag: ORG - P nan / R 0.00004 ----- PERTag: PER - P nan / R 0.0000Total time: 2.443047285079956Epoch 280/3181.578125 : loss = 0.9783419966697693Training loss : 0.9783419966697693Training acc : 0.8125Validation loss : 0.9272997379302979[[42759 0 0 0 0] [ 2094 0 0 0 0] [ 1268 0 0 0 0] [ 2092 0 0 0 0] [ 3149 0 0 0 0]]0 ----- OTag: O - P 0.8325 / R 1.00001 ----- LOCTag: LOC - P nan / R 0.00002 ----- MISCTag: MISC - P nan / R 0.00003 ----- ORGTag: ORG - P nan / R 0.00004 ----- PERTag: PER - P nan / R 0.0000Total time: 2.6797432899475098Epoch 290/3181.578125 : loss = 1.0568724870681763Training loss : 1.0568724870681763Training acc : 0.765625Validation loss : 0.904884934425354[[42759 0 0 0 0] [ 2094 0 0 0 0] [ 1268 0 0 0 0] [ 2092 0 0 0 0] [ 3149 0 0 0 0]]0 ----- OTag: O - P 0.8325 / R 1.00001 ----- LOCTag: LOC - P nan / R 0.00002 ----- MISCTag: MISC - P nan / R 0.00003 ----- ORGTag: ORG - P nan / R 0.00004 ----- PERTag: PER - P nan / R 0.0000Total time: 2.3945367336273193Epoch 300/3181.578125 : loss = 1.0237849950790405Training loss : 1.0237849950790405Training acc : 0.78125Validation loss : 0.883781909942627[[42759 0 0 0 0] [ 2094 0 0 0 0] [ 1268 0 0 0 0] [ 2092 0 0 0 0] [ 3149 0 0 0 0]]0 ----- OTag: O - P 0.8325 / R 1.00001 ----- LOCTag: LOC - P nan / R 0.00002 ----- MISCTag: MISC - P nan / R 0.00003 ----- ORGTag: ORG - P nan / R 0.00004 ----- PERTag: PER - P nan / R 0.0000Total time: 2.7488887310028076Epoch 310/3181.578125 : loss = 1.0338774919509888Training loss : 1.0338774919509888Training acc : 0.765625Validation loss : 0.8637697696685791[[42759 0 0 0 0] [ 2094 0 0 0 0] [ 1268 0 0 0 0] [ 2092 0 0 0 0] [ 3149 0 0 0 0]]0 ----- OTag: O - P 0.8325 / R 1.00001 ----- LOCTag: LOC - P nan / R 0.00002 ----- MISCTag: MISC - P nan / R 0.00003 ----- ORGTag: ORG - P nan / R 0.00004 ----- PERTag: PER - P nan / R 0.0000Total time: 2.381608247756958Epoch 320/3181.578125 : loss = 0.9260292649269104Training loss : 0.9260292649269104Training acc : 0.78125Validation loss : 
0.8448039889335632[[42759 0 0 0 0] [ 2094 0 0 0 0] [ 1268 0 0 0 0] [ 2092 0 0 0 0] [ 3149 0 0 0 0]]0 ----- OTag: O - P 0.8325 / R 1.00001 ----- LOCTag: LOC - P nan / R 0.00002 ----- MISCTag: MISC - P nan / R 0.00003 ----- ORGTag: ORG - P nan / R 0.00004 ----- PERTag: PER - P nan / R 0.0000Total time: 2.5534446239471436Epoch 330/3181.578125 : loss = 0.8264249563217163Training loss : 0.8264249563217163Training acc : 0.875Validation loss : 0.8267776370048523[[42759 0 0 0 0] [ 2094 0 0 0 0] [ 1268 0 0 0 0] [ 2092 0 0 0 0] [ 3149 0 0 0 0]]0 ----- OTag: O - P 0.8325 / R 1.00001 ----- LOCTag: LOC - P nan / R 0.00002 ----- MISCTag: MISC - P nan / R 0.00003 ----- ORGTag: ORG - P nan / R 0.00004 ----- PERTag: PER - P nan / R 0.0000Total time: 2.558243751525879Epoch 340/3181.578125 : loss = 0.9866911768913269Training loss : 0.9866911768913269Training acc : 0.8125Validation loss : 0.8101168274879456[[42759 0 0 0 0] [ 2094 0 0 0 0] [ 1268 0 0 0 0] [ 2092 0 0 0 0] [ 3149 0 0 0 0]]0 ----- OTag: O - P 0.8325 / R 1.00001 ----- LOCTag: LOC - P nan / R 0.00002 ----- MISCTag: MISC - P nan / R 0.00003 ----- ORGTag: ORG - P nan / R 0.00004 ----- PERTag: PER - P nan / R 0.0000Total time: 2.464554786682129Epoch 350/3181.578125 : loss = 0.8703485727310181Training loss : 0.8703485727310181Training acc : 0.8125Validation loss : 0.7947656512260437[[42759 0 0 0 0] [ 2094 0 0 0 0] [ 1268 0 0 0 0] [ 2092 0 0 0 0] [ 3149 0 0 0 0]]0 ----- OTag: O - P 0.8325 / R 1.00001 ----- LOCTag: LOC - P nan / R 0.00002 ----- MISCTag: MISC - P nan / R 0.00003 ----- ORGTag: ORG - P nan / R 0.00004 ----- PERTag: PER - P nan / R 0.0000Total time: 2.3714654445648193Epoch 360/3181.578125 : loss = 0.8071379661560059Training loss : 0.8071379661560059Training acc : 0.84375Validation loss : 0.7804707288742065[[42759 0 0 0 0] [ 2094 0 0 0 0] [ 1268 0 0 0 0] [ 2092 0 0 0 0] [ 3149 0 0 0 0]]0 ----- OTag: O - P 0.8325 / R 1.00001 ----- LOCTag: LOC - P nan / R 0.00002 ----- MISCTag: MISC - P nan / R 0.00003 ----- ORGTag: ORG 
- P nan / R 0.0000
4 ----- PER
Tag: PER - P nan / R 0.0000
Total time: 2.8228049278259277
Epoch 37
0/3181.578125 : loss = 0.6435794234275818
Training loss : 0.6435794234275818
Training acc : 0.875
Validation loss : 0.7670522928237915
[[42759 0 0 0 0]
 [ 2094 0 0 0 0]
 [ 1268 0 0 0 0]
 [ 2092 0 0 0 0]
 [ 3149 0 0 0 0]]
0 ----- O
Tag: O - P 0.8325 / R 1.0000
1 ----- LOC
Tag: LOC - P nan / R 0.0000
2 ----- MISC
Tag: MISC - P nan / R 0.0000
3 ----- ORG
Tag: ORG - P nan / R 0.0000
4 ----- PER
Tag: PER - P nan / R 0.0000
Total time: 2.6811318397521973
Epoch 38
0/3181.578125 : loss = 0.6902540326118469
Training loss : 0.6902540326118469
Training acc : 0.890625
Validation loss : 0.7546741962432861
[[42759 0 0 0 0]
 [ 2094 0 0 0 0]
 [ 1268 0 0 0 0]
 [ 2092 0 0 0 0]
 [ 3149 0 0 0 0]]
0 ----- O
Tag: O - P 0.8325 / R 1.0000
1 ----- LOC
Tag: LOC - P nan / R 0.0000
2 ----- MISC
Tag: MISC - P nan / R 0.0000
3 ----- ORG
Tag: ORG - P nan / R 0.0000
4 ----- PER
Tag: PER - P nan / R 0.0000
Total time: 2.596187114715576
Epoch 39
0/3181.578125 : loss = 0.6969885230064392
Training loss : 0.6969885230064392
Training acc : 0.859375
Validation loss : 0.7434151768684387
[[42759 0 0 0 0]
 [ 2094 0 0 0 0]
 [ 1268 0 0 0 0]
 [ 2092 0 0 0 0]
 [ 3149 0 0 0 0]]
0 ----- O
Tag: O - P 0.8325 / R 1.0000
1 ----- LOC
Tag: LOC - P nan / R 0.0000
2 ----- MISC
Tag: MISC - P nan / R 0.0000
3 ----- ORG
Tag: ORG - P nan / R 0.0000
4 ----- PER
Tag: PER - P nan / R 0.0000
Total time: 2.6147048473358154
Epoch 40
0/3181.578125 : loss = 0.87004554271698
Training loss : 0.87004554271698
Training acc : 0.78125
Validation loss : 0.7334315776824951
[[42759 0 0 0 0]
 [ 2094 0 0 0 0]
 [ 1268 0 0 0 0]
 [ 2092 0 0 0 0]
 [ 3149 0 0 0 0]]
0 ----- O
Tag: O - P 0.8325 / R 1.0000
1 ----- LOC
Tag: LOC - P nan / R 0.0000
2 ----- MISC
Tag: MISC - P nan / R 0.0000
3 ----- ORG
Tag: ORG - P nan / R 0.0000
4 ----- PER
Tag: PER - P nan / R 0.0000
Total time: 2.3841097354888916
Epoch 41
0/3181.578125 : loss = 0.95426344871521
Training loss : 0.95426344871521
Training acc : 0.78125
Validation loss : 0.7244532704353333
[[42759 0 0 0 0]
 [ 2094 0 0 0 0]
 [ 1268 0 0 0 0]
 [ 2092 0 0 0 0]
 [ 3149 0 0 0 0]]
0 ----- O
Tag: O - P 0.8325 / R 1.0000
1 ----- LOC
Tag: LOC - P nan / R 0.0000
2 ----- MISC
Tag: MISC - P nan / R 0.0000
3 ----- ORG
Tag: ORG - P nan / R 0.0000
4 ----- PER
Tag: PER - P nan / R 0.0000
Total time: 2.304476022720337
Epoch 42
0/3181.578125 : loss = 0.8543925285339355
Training loss : 0.8543925285339355
Training acc : 0.78125
Validation loss : 0.7163312435150146
[[42759 0 0 0 0]
 [ 2094 0 0 0 0]
 [ 1268 0 0 0 0]
 [ 2092 0 0 0 0]
 [ 3149 0 0 0 0]]
0 ----- O
Tag: O - P 0.8325 / R 1.0000
1 ----- LOC
Tag: LOC - P nan / R 0.0000
2 ----- MISC
Tag: MISC - P nan / R 0.0000
3 ----- ORG
Tag: ORG - P nan / R 0.0000
4 ----- PER
Tag: PER - P nan / R 0.0000
Total time: 2.6401660442352295
Epoch 43
0/3181.578125 : loss = 0.6948934197425842
Training loss : 0.6948934197425842
Training acc : 0.859375
Validation loss : 0.7088930606842041
[[42759 0 0 0 0]
 [ 2094 0 0 0 0]
 [ 1268 0 0 0 0]
 [ 2092 0 0 0 0]
 [ 3149 0 0 0 0]]
0 ----- O
Tag: O - P 0.8325 / R 1.0000
1 ----- LOC
Tag: LOC - P nan / R 0.0000
2 ----- MISC
Tag: MISC - P nan / R 0.0000
3 ----- ORG
Tag: ORG - P nan / R 0.0000
4 ----- PER
Tag: PER - P nan / R 0.0000
Total time: 2.954803228378296
Epoch 44
0/3181.578125 : loss = 0.8735166192054749
Training loss : 0.8735166192054749
Training acc : 0.796875
Validation loss : 0.7022351622581482
[[42759 0 0 0 0]
 [ 2094 0 0 0 0]
 [ 1268 0 0 0 0]
 [ 2092 0 0 0 0]
 [ 3149 0 0 0 0]]
0 ----- O
Tag: O - P 0.8325 / R 1.0000
1 ----- LOC
Tag: LOC - P nan / R 0.0000
2 ----- MISC
Tag: MISC - P nan / R 0.0000
3 ----- ORG
Tag: ORG - P nan / R 0.0000
4 ----- PER
Tag: PER - P nan / R 0.0000
Total time: 2.6469264030456543
Epoch 45
0/3181.578125 : loss = 0.8812070488929749
Training loss : 0.8812070488929749
Training acc : 0.828125
Validation loss : 0.695988118648529
[[42759 0 0 0 0]
 [ 2094 0 0 0 0]
 [ 1268 0 0 0 0]
 [ 2092 0 0 0 0]
 [ 3149 0 0 0 0]]
0 ----- O
Tag: O - P 0.8325 / R 1.0000
1 ----- LOC
Tag: LOC - P nan / R 0.0000
2 ----- MISC
Tag: MISC - P nan / R 0.0000
3 ----- ORG
Tag: ORG - P nan / R 0.0000
4 ----- PER
Tag: PER - P nan / R 0.0000
Total time: 2.8571887016296387
Epoch 46
0/3181.578125 : loss = 0.5007133483886719
Training loss : 0.5007133483886719
Training acc : 0.90625
Validation loss : 0.690228283405304
[[42759 0 0 0 0]
 [ 2094 0 0 0 0]
 [ 1268 0 0 0 0]
 [ 2092 0 0 0 0]
 [ 3149 0 0 0 0]]
0 ----- O
Tag: O - P 0.8325 / R 1.0000
1 ----- LOC
Tag: LOC - P nan / R 0.0000
2 ----- MISC
Tag: MISC - P nan / R 0.0000
3 ----- ORG
Tag: ORG - P nan / R 0.0000
4 ----- PER
Tag: PER - P nan / R 0.0000
Total time: 2.360257625579834
Epoch 47
0/3181.578125 : loss = 0.8069882988929749
Training loss : 0.8069882988929749
Training acc : 0.8125
Validation loss : 0.6848161220550537
[[42759 0 0 0 0]
 [ 2094 0 0 0 0]
 [ 1268 0 0 0 0]
 [ 2092 0 0 0 0]
 [ 3149 0 0 0 0]]
0 ----- O
Tag: O - P 0.8325 / R 1.0000
1 ----- LOC
Tag: LOC - P nan / R 0.0000
2 ----- MISC
Tag: MISC - P nan / R 0.0000
3 ----- ORG
Tag: ORG - P nan / R 0.0000
4 ----- PER
Tag: PER - P nan / R 0.0000
Total time: 2.2997841835021973
Epoch 48
0/3181.578125 : loss = 0.6994635462760925
Training loss : 0.6994635462760925
Training acc : 0.8125
Validation loss : 0.6798946857452393
[[42759 0 0 0 0]
 [ 2094 0 0 0 0]
 [ 1268 0 0 0 0]
 [ 2092 0 0 0 0]
 [ 3149 0 0 0 0]]
0 ----- O
Tag: O - P 0.8325 / R 1.0000
1 ----- LOC
Tag: LOC - P nan / R 0.0000
2 ----- MISC
Tag: MISC - P nan / R 0.0000
3 ----- ORG
Tag: ORG - P nan / R 0.0000
4 ----- PER
Tag: PER - P nan / R 0.0000
Total time: 2.5274598598480225
Epoch 49
0/3181.578125 : loss = 0.7816348075866699
Training loss : 0.7816348075866699
Training acc : 0.8125
Validation loss : 0.6751761436462402
[[42759 0 0 0 0]
 [ 2094 0 0 0 0]
 [ 1268 0 0 0 0]
 [ 2092 0 0 0 0]
 [ 3149 0 0 0 0]]
0 ----- O
Tag: O - P 0.8325 / R 1.0000
1 ----- LOC
Tag: LOC - P nan / R 0.0000
2 ----- MISC
Tag: MISC - P nan / R 0.0000
3 ----- ORG
Tag: ORG - P nan / R 0.0000
4 ----- PER
Tag: PER - P nan / R 0.0000
Total time: 2.7655985355377197
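
In the log above, every epoch prints the same confusion matrix with all counts in the first column, which suggests the model is predicting O for every window. That would explain why P is nan for LOC, MISC, ORG and PER: nothing was ever predicted as those tags, so precision is 0/0. The following is a minimal sketch of how the P/R numbers can be recomputed from the matrix. It assumes rows are true tags and columns are predicted tags, which is consistent with the printed values. The helper names `safe_div` and `precision_recall` are hypothetical and not part of the assignment code.

```python
import math

# Confusion matrix copied from the log.
# Assumption: rows are true tags, columns are predicted tags.
TAGS = ["O", "LOC", "MISC", "ORG", "PER"]
CONFUSION = [
    [42759, 0, 0, 0, 0],
    [2094, 0, 0, 0, 0],
    [1268, 0, 0, 0, 0],
    [2092, 0, 0, 0, 0],
    [3149, 0, 0, 0, 0],
]


def safe_div(num, den):
    """Return num / den, or NaN when den is zero (the 'nan' seen in the log)."""
    return num / den if den else math.nan


def precision_recall(confusion):
    """Return a list of (precision, recall) pairs, one per tag index."""
    n = len(confusion)
    results = []
    for i in range(n):
        guessed = sum(confusion[r][i] for r in range(n))  # column sum: times tag i was predicted
        true = sum(confusion[i])                          # row sum: times tag i was the gold label
        hit = confusion[i][i]
        results.append((safe_div(hit, guessed), safe_div(hit, true)))
    return results


if __name__ == "__main__":
    for tag, (p, r) in zip(TAGS, precision_recall(CONFUSION)):
        print("Tag: {} - P {:2.4f} / R {:2.4f}".format(tag, p, r))
```

Read this way, the 0.8325 precision for O is just the share of O windows in the dev set (42759 of 51362), so the falling validation loss in the log does not yet mean the network has learned to separate any entity class.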

For the more complete code, see:

