Deep Learning frameworks: a review before finishing 2016

来源:互联网 发布:文件集中管理 编程 编辑:程序博客网 时间:2024/05/16 11:59

https://medium.com/@ricardo.guerrero/deep-learning-frameworks-a-review-before-finishing-2016-5b3ab4010b06

Deep Learning frameworks: a review before finishing 2016

I love to visit Machine Learning meetups organized in Madrid (Spain) and I’m a regular attendant to Tensorflow Madrid and Machine Learning Spaingroups. At least I was until the beginning of the Self-Driving Car course, but that is another story. The fact is that too often, during “pizza & beer” time or networking I heard people talking about Deep Learning. Sentences like “where should I begin? Tensorflow is the most popular, isn’t it?”, “I’ve heard that Caffe is very used, but I think it’s a bit difficult”.

Because in BEEVA Labs we have dealt (and fight) with many Deep Learning libraries, I thought that could be interesting to share our discoveries and impressions to help people that is starting in this fascinating world.

Let’s start:

Tensorflow

This is everyone’s favorite (if they know something about Deep Learning or not seems to be uncorrelated), but I’m going to demystify it a bit.

In their web is defined as “An open-source software library for Machine Intelligence” but I think is more accurate this definition that appear just below: “ TensorFlow™ is an open source software library for numerical computation using data flow graphs”. Here, they don’t include Tensorflow in “Deep Learning Frameworks” but rather in the “Graph compilers” category, together with Theano.

After finishing the Udacity’s Deep Learning course, my impression is that Tensorflow is a very good framework, but too low level. There is a lot of code to write, and you need to reinvent the wheel over and over again. And seems that I’m not the only one that thinks like this. If we take a glance to some tweets of the great Andrej Karpathy:

Some months ago I went to “Google Experts Summit: TensorFlow, Machine Learning for everyone, with Sergio Guadarrama”. Sergio, one of the engineers that develops Tensorflow didn’t show us Tensorflow, he showed us a higher-level library called tf.contrib that works over Tensorflow. My impression is that they internally have realized that if they want to make more people use Tensorflow, they need to ease its use by creating layers on top with a higher abstraction level.

Tensorflow supports Python and C++, along to allow computing distribution among CPU, GPU (many simultaneous) and even horizontal scaling using gRPC.

In summary: Tensorflow is very good, but you must know where. The most of the things you are going to do, you don’t need to program everything by hand and reinvent the wheel, you can use easier libraries (cough cough Keras).

Theano

Theano is one of the most veterans and stable libraries. To the best of my knowledge, the beginning of Deep Learning libraries is disputed between Theano and Caffe.

Theano is low-level library, following Tensorflow style. And as it, it its not properly for Deep Learning, but for numerical computations optimization. It allows automatic function gradient computations, which together with its Python interface and it’s integration with Numpy, made this library in it’s beginning in one of the most used for general purpose Deep Learning.

As of today, it’s health is good, but the fact that doesn’t have multi-GPU support nor horizontal capabilities, coupled with the huge hype of Tensorflow (that fights in the same league), is causing it to be left behind.

Keras

“You have just found Keras.”

That’s the first sentence you can see when you reach the docs page. I remember when I discover Keras the first time. I was trying to get into the Deep Learning libraries world for my final project of Data Science Retreat in Berlin. I had enough Deep Learning knowledge to start, but I didn’t have time to make things by hand, neither time to explore or to learn a new library (the deadline would be in less than 2 months and I still had to go to class). And I found Keras.

I really liked Keras because its syntax was fairly clear, the documentation was very good (despite being relatively new) and because it worked in a language that I knew (Python). It was so easy to use, that it was pretty straightforward to learn the commands, functions and how to chain every block.

Keras it’s a very high-level library that works on top of Theano or Tensorflow (it’s configurable). Also, Keras reinforces minimalism, you can build a Neural Network in just a few lines of code. Here you can see a Keras code compared with the code needed to program in Tensorflow for achieving the same purpose.

Lasagne

Lasagne emerged as a library that works on top of Theano. Its mission was to abstract a bit the complex computation underlying to Deep Learning algorithms and also provide a more friendly interface (in Python too). It’s a veteran library (for the times that are handle in this area) and for a long time it was a very extended tool but, from my point of view, it’s losing speed in favor of Keras, which arose a little bit ago. Both of them compete in the same league, but Keras has better documentation and is more complete.

Caffe

Caffe is one of the most veteran frameworks, but the most.

In my opinion, it has very good features and some small drawbacks. Initially, it’s not a general purpose framework. It focuses only in computer vision, but it does it really well. In the experiments we did in my lab, the training of CaffeNet architecture took 5 time less in Caffe than in Keras (using the Theano backend). The drawbacks are that it’s not flexible. If you want to introduce new changes you need to program in C++ and CUDA, but for less novel changes you can use its Python or Matlab interfaces.

It’s documentations is very poor. A lot of times you need to check the code for trying to understand it (what is Xavier initialization doing? what is Glorot?)

One of it’s bigger drawbacks is its installation. It has a lot of dependencies to solve… and the 2 times I had to install it, it was a real pain.

But beware, not everything is bad. As a tool for put in production computer vision systems is the undisputed leader. It’s robust and very fast. My recommendation is the following: experiment and test in Keras and move to production in Caffe.

DSSTNE

Pronounced Destiny, it’s a very cool framework but it’s often overlooked. Why? Because, among other things, it is not for general purpose. DSSTNE does only one thing, but it does it very well: recommender systems. It’s not mean for research, neither for testing ideas (it’s advertised in their web), it is a framework for production.

We have been doing some test here in BEEVA and we got the impression that it was a very fast tool that give a very good results (with a big mAP). To achieve that speed, it uses GPU, and that is one of its drawbacks: unlike other frameworks/libraries analysed, it doesn’t allow you to chose between CPU and GPU, which could be useful for some experimentation, but we were already warned.

Other conclusions we found is that for the moment, DSSTNE is not a project mature enough and it’s too “black box”. To get some insights on how it works we had to go down to its source code and we found many important //TODO. We also realized that there are no enough tutorials on internet, there is too few people doing experiments. My opinion is that is better wait 4 months and check its evolution. It’s a really interesting project that still needs a bit of maturity.

By the way, programming skills are not needed. Every interaction with DSSTNE is done through commands in the terminal.

Since this point, there are frameworks or libraries that I know and are popular but I haven’t used them yet, so I will not be able to give so much detail.

Torch

There are many battles every day, but a good Guerrero(warrior in Spanish) must know to choose between those that want to fight and those that prefer to let go. Torch is a specially known framework because it’s used in Facebook Research and in DeepMind before being acquired by Google (after that, they migrate to Tensorflow). It uses the programming language Lua, and this is the battle I was talking about. In a landscape where the most of Deep Learning is focused on Python, a framework that works in in Lua could be more an inconvenience rather than an advantage. I have no experience on this language, so if I wanted to start with this tool, I will have to learn Lua first and then learn Torch. It’s a very valid process, but in my personal case, I prefer to focus on those that work in Python, Matlab or C++.

mxnet

Python, R, C++, Julia.. mxnet is one of the most languages-supported libraries. I guess that R people are going to like it specially, because until now Python was wining in this area in an indisputable way (Python Vs R. Guess in which side am I? :-p )

Told to be truth, I wasn’t paying too much attention to mxnet some time ago… but when Amazon AWS choose it as one of the libraries to be included in their Deep Learning AMI it fires my radar. I had to take a look. When later I see that Amazon made mxnet it’s reference library for Deep Learning and they talked about its enormous horizontal scaling capabilities… something was happening and I needed to get into. That’s why it’s now in our list of technologies to be tested in 2017 in BEEVA.

I’m a bit sceptical with respect to its multi-GPU scaling capabilities and I would love to see more details about the experiment, but for the moment I’m going to give it the benefit of the doubt.

DL4J

I reach to this library… because of its documentation. I was looking for Restricted Boltzman Machines, Autoencoders and was here where I found it. Very clear, with parts of theory and code examples. I have to say that D4LJ’s documentation is an artwork, and should be the reference for other libraries to document their code.

Skymind, the company behind DeepLearning4J realized that, while in the Deep Learning world, Python is the king, the big mass of programmers were from Java, so a solution should be found. DL4J is compatible with JVM and works with Java, Clojure and Scala. With the rise and hype of Scala and its use in some of the most promising startups, I will follow this library up close.

By the way, Skymind have a very active twitter account where they publish new scientific papers, examples and tutorials. Very very recommended to take a look.

Cognitive Toolkit

Cognitive Toolkit was previously known by its acronym, CNTK, but has experienced recently a re-branding, probably to take advantage of the pull that Microsoft Cognitive services are having these days. In the benchmarkpublished, it seems a very powerful tool for vertical and horizontal scaling.

For the moment, Cognitive Toolkit doesn’t seem to be too popular. I haven’t seen many blogs, internet examples or comments in Kaggle making use of this library. But it seems a bit strange to me taking into account the scaling capabilities they point out and the fact that behind this library is Microsoft Research, the research team that broke the world record in speech recognitionreaching human level.

I have been looking at an example they have in their project’s wiki and I’ve seen that the Cognitive Toolkit’s syntax for Python (it also supports C++) is very similar to Keras’, which leads me to think (rather confirm) that Keras’ is the correct way.

Conclusions

My conclusions are that if you want to get into this field, you should start learning Python. There are more languages supported, but this one is the most extended and one of the easiest ones. Anyway, why to choose this language if it’s too slow? Because the most of the libraries use a symbolic language approach rather than a imperative one. Let me explain: instead of executing your instructions one bye one, they wait until you give them all, with this, a computing graph is created. This graph its internally optimized, compiled into C++ code an executed. This way you achieve the best of this 2 worlds: the development speed that Python gives you and the speed of execution that allows C++.

Deep Learning is becoming more and more interested, but people is not willing to wait the big computing times that are needed to train the algorithms (and I’m talking about GPU, with CPU is better not to consider). That’s why multi-GPU support, horizontal scaling on multiple machines and even the introduction of custom-made hardware start to take a lot of power.

The Deep Learning landscape is very active and changing. It’s possible that some of the things I already told you, in the middle of next year have changed.

If you are a beginner, use Keras. If you are not, use it too.

Keras is really cool. If you have a walk into Kaggle, you will see that there are two rockstars: Keras and XGBoost.

My theory: Game of Frameworks

Why did Google release Tensorflow to the community? For the common good? To get talent already trained in the technologies they used day by day in Google? At least that’s what they said in some of their notice. My theory is that it is not, it’s completely unrelated. My theory is that it was an strategic decision inside the company related to Google Cloud service and their new TPUs (which are not yet publicly available, but I’ve read that we will see them in 2017). Unlike CPUs that which are general purpose, or GPUs which have a more limited scope but allow, with some exceptions, a general purpose too, TPUs are custom-made hardware with only one purpose: accelerate Tensorflow’s computations. So.. If I’m used to Tensorflow and I like it, where am I going to run the big experiments? In AWS? In Azure? Or better in Google Cloud where probably it takes the half of the time and I will cost less?

I remember that, after the Amazon’s announcement to choose mxnet as its reference library for Deep Learning, some people commented to me that it was strange they hadn’t choose Tensorflow. It was not a big surprise for me… even less when, some time later, in the re:Invent event they announce that they will expand their services in the cloud adding FPGA instances and also that they will build custom hardware for mxnet.

It seems that the Cloud Computing war for Deep Learning will be waged on the battlefield of frameworks.

I would like to have documented a bit more this last part and added some links to allow you go in deeper… but the year is finishing and I’m going for holidays!! Kind regards and see you next year :-)

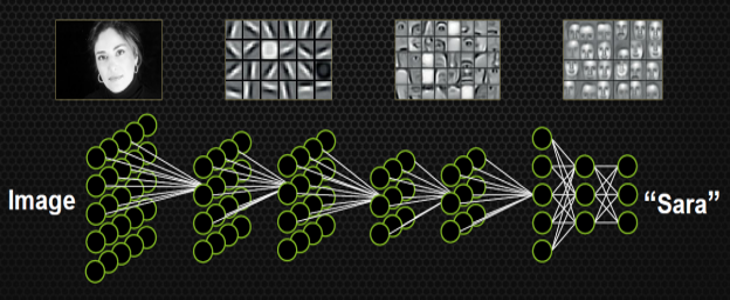

Main image source: https://www.microway.com/hpc-tech-tips/deep-learning-applications/

- Deep Learning frameworks: a review before finishing 2016

- Deep Learning Frameworks.

- Deep Learning Frameworks

- Deep learning---------Representation Learning: A Review and New Perspectives

- Deep Learning Review

- 【Review】A Review on Deep Learning Techniques Applied to Semantic Segmentation

- Deep Machine Learning libraries and frameworks

- 【Deep Learning】Review of Stereo Matching by Training a Convolutional Neural Network to Compare Image

- A Review on Deep Learning Techniques Applied to Semantic Segmentation(译)-(1)

- A Review on Deep Learning Techniques Applied to Semantic Segmentation(译)-(2)

- A Review on Deep Learning Techniques Applied to Semantic Segmentation 阅读笔记

- 综述论文翻译:A Review on Deep Learning Techniques Applied to Semantic Segmentation

- 【转载】A Review on Deep Learning Techniques Applied to Semantic Segmentation(译)-(1)

- 2016.4.12 nature deep learning review[2]

- 2016.4.15 nature deep learning review[3]

- A Summary of Current Machine Learning Frameworks

- Deep Learning in a Nutshell

- A Guide to Deep Learning

- Nginx 使用basePath 后响应速度慢

- 考研院校专业课选择及自动控制原理备考的宏观战略分析(一)

- Matlab函数备忘

- 并行计算大作业之多边形相交(OpenMP、MPI、Java、Windows)

- iOS 的几种创建多线程方法

- Deep Learning frameworks: a review before finishing 2016

- 国内大公司的开源项目一览表

- java 如何提交list 到后台

- BZOJ-1208: [HNOI2004]宠物收养所 (splay 查询前驱后继 set也可)

- WPA2 KRACK Attacks 原文转载翻译

- 单调队列

- 注册登录会员抽奖系统

- IT安全漏洞、威胁与风险的区别,你都知道吗?

- OkHttp请求网络数据,并listview展示