Python定时爬取某网页内容

来源:互联网 发布:vs2015配置unity3d 编辑:程序博客网 时间:2024/06/16 08:01

前言

昨天小伙伴说他最近在投资一个类似比特币的叫ETH币(以太币),他想定时获取某网站以太币的“购买价格最低”和“出售价格最低”,他自己没学过Python,也找到一个定时获取股票的例子(没看懂),看我平时有在玩Python,就问我能不能做到;平时我对Python挺感兴趣的,就想试试看。

思路

- 定时任务

- 主程序

- 爬取网页

- 解析网页 获取所要内容

- 存入表格

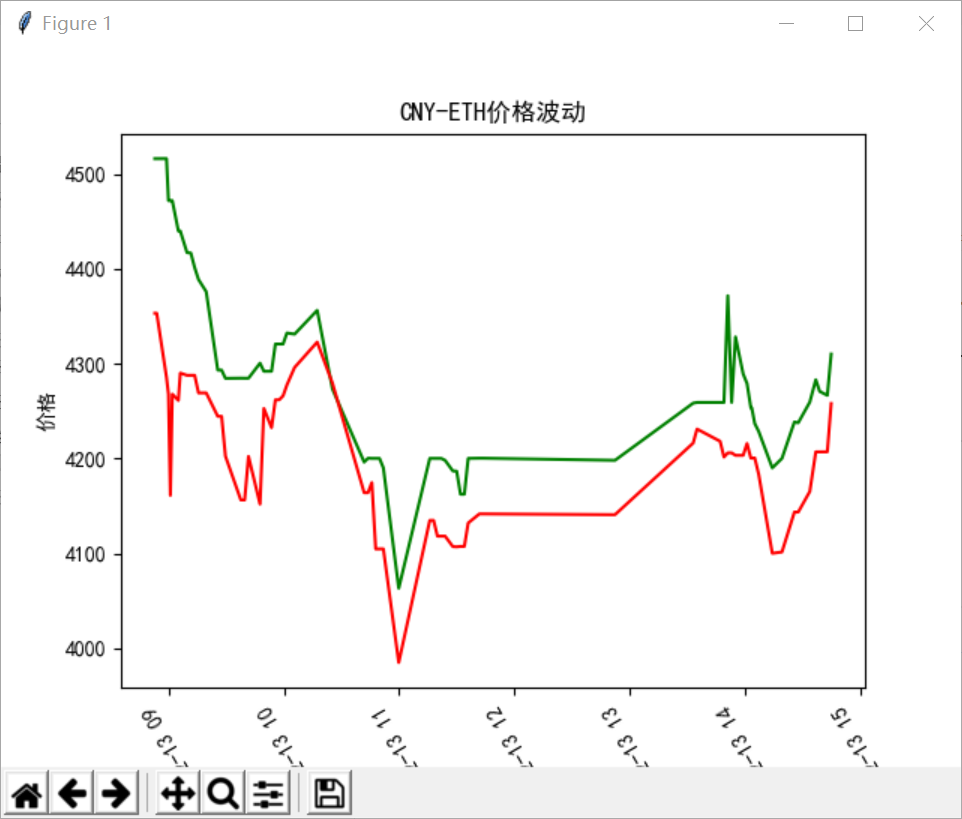

- 绘图

- 异常处理

如何实现定时爬取

# 定时任务# 设定一个标签 确保是运行完定时任务后 再修改时间flag = 0# 获取当前时间now = datetime.datetime.now()# 启动时间# 启动时间为当前时间 加5秒sched_timer = datetime.datetime(now.year, now.month, now.day, now.hour, now.minute, now.second) + datetime.timedelta(seconds=5)# 启动时间也可自行手动设置# sched_timer = datetime.datetime(2017,12,13,9,30,10)while (True): # 当前时间 now = datetime.datetime.now() # print(type(now)) # 本想用当前时间 == 启动时间作为判断标准,但是测试的时候 毫秒级的时间相等成功率很低 而且存在启动时间秒级与当前时间毫秒级比较的问题 # 后来换成了以下方式,允许1秒之差 if sched_timer < now < sched_timer + datetime.timedelta(seconds=1): time.sleep(1) # 主程序 main() flag = 1 else: # 标签控制 表示主程序已运行,才修改定时任务时间 if flag == 1: # 修改定时任务时间 时间间隔为2分钟 sched_timer = sched_timer + datetime.timedelta(minutes=2) flag = 0代码(内带注释)

# -*- coding: utf-8 -*-__author__ = 'iccool'from bs4 import BeautifulSoupimport requestsfrom requests.exceptions import RequestExceptionimport csvimport pandas as pdimport datetimeimport timeimport matplotlib.pyplot as pltimport random# 设置中文显示plt.rcParams['font.sans-serif']=['SimHei'] #用来正常显示中文标签plt.rcParams['axes.unicode_minus']=False #用来正常显示负号# 由于只爬取两个网页的内容,就直接将该两个网页放入列表中url_sell = 'https://otcbtc.com/sell_offers?currency=eth&fiat_currency=cny&payment_type=all'url_buy = 'https://otcbtc.com/buy_offers?currency=eth&fiat_currency=cny&payment_type=all'urls = [url_sell, url_buy]# 更换User-Agentdef getHeaders(): user_agent_list = [ 'Mozilla/5.0 (Windows NT 10.0; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/49.0.2623.75 Safari/537.36', 'Mozilla/5.0(Windows;U;WindowsNT6.1;en-us)AppleWebKit/534.50(KHTML,likeGecko)Version/5.1Safari/534.50', 'Mozilla/5.0(WindowsNT6.1;rv:2.0.1)Gecko/20100101Firefox/4.0.1', 'Mozilla/4.0(compatible;MSIE7.0;WindowsNT5.1;Trident/4.0;SE2.XMetaSr1.0;SE2.XMetaSr1.0;.NETCLR2.0.50727;SE2.XMetaSr1.0)' ] index = random.randrange(0,len(user_agent_list)) headers = { 'User-Agent': user_agent_list[index] } return headers# 获取页面内容def getHtml(url): try: response = requests.get(url, headers=getHeaders()) if response.status_code == 200: return response.text except RequestException: print('===request exception===') return None# 解析网页 获取价格def parse_html(html): try: soup = BeautifulSoup(html, 'lxml') price = soup.find("div", class_='can-buy-count').contents[0] price = float(str(price).strip().strip('\n').replace(',', '')) return price except Exception: print('===parseHtml exception===') return None# 保存到csv表中def save2csv(prices, sched_timer): with open('CNY-ETH.csv', 'a+', newline='', encoding='utf-8') as csvfile: writer = csv.writer(csvfile) # writer.writerow(['购买价格最低', '出售价格最低']) writer.writerow([sched_timer, prices[0], prices[1]]) df = pd.read_csv('CNY-ETH.csv') print(df)# 绘图def drawPic(): eth_file = pd.read_csv('CNY-ETH.csv') eth_file['DATE'] = pd.to_datetime(eth_file['DATE']) # print(eth_file.head(80)) xlen = eth_file[0:120] plt.plot(eth_file['DATE'], xlen['购买价格最低'], c='g', ls='-') plt.plot(eth_file['DATE'], xlen['出售价格最低'], c='r', ls='-') plt.xticks(rotation=120) # plt.yticks(rotation=range(3400,3800)) plt.xlabel('时间') plt.ylabel('价格') plt.title('CNY-ETH价格波动') # 由于plt.show()程序会停留不运行 ,采用以下方法,绘图后,停留15s,再关闭 plt.ion() plt.pause(15) plt.close()def main(sched_timer): prices = [] for url in urls: html = getHtml(url) price = parse_html(html) if price == None: prices.append(None) else: prices.append(price) # print('购买价格最低:', prices[0],'\n出售价格最低:', prices[1]) if prices[0] == None or prices[1] == None: print('===price has None===') pass else: save2csv(prices, sched_timer) drawPic()if __name__ == '__main__': # 定时任务 # 设定一个标签 确保是运行完定时任务后 再修改时间 flag = 0 # 获取当前时间 now = datetime.datetime.now() # 启动时间 # 启动时间为当前时间 加5秒 sched_timer = datetime.datetime(now.year, now.month, now.day, now.hour, now.minute, now.second) + datetime.timedelta(seconds=5) # 启动时间也可自行手动设置 # sched_timer = datetime.datetime(2017,12,13,9,30,10) while (True): # 当前时间 now = datetime.datetime.now() # print(type(now)) # 本想用当前时间 == 启动时间作为判断标准,但是测试的时候 毫秒级的时间相等成功率很低 而且存在启动时间秒级与当前时间毫秒级比较的问题 # 后来换成了以下方式,允许1秒之差 if sched_timer < now < sched_timer + datetime.timedelta(seconds=1): time.sleep(1) print(now) # 运行程序 main(sched_timer) # 将标签设为 1 flag = 1 else: # 标签控制 表示主程序已运行,才修改定时任务时间 if flag == 1: # 修改定时任务时间 时间间隔为2分钟 sched_timer = sched_timer + datetime.timedelta(minutes=2) flag = 0效果

能够成功获取信息,并插入表格,根据表格绘制图形。

DATE,购买价格最低,出售价格最低2017-12-13 08:52:47,4515.93,4353.022017-12-13 08:53:47,4515.93,4353.022017-12-13 08:58:53,4516.0,4285.32017-12-13 08:59:53,4471.71,4267.732017-12-13 09:00:53,4471.71,4161.032017-12-13 09:01:53,4471.71,4267.762017-12-13 09:05:03,4439.7,4261.132017-12-13 09:06:03,4439.7,4289.992017-12-13 09:09:32,4416.94,4287.582017-12-13 09:11:32,4416.52,4287.582017-12-13 09:13:32,4401.11,4287.582017-12-13 09:15:32,4388.56,4269.032017-12-13 09:19:32,4375.76,4269.032017-12-13 09:25:33,4293.11,4244.362017-12-13 09:27:33,4293.1,4244.362017-12-13 09:29:33,4284.38,4202.342017-12-13 09:37:33,4284.56,4156.242017-12-13 09:39:33,4284.5,4156.182017-12-13 09:41:33,4284.5,4202.132017-12-13 09:47:33,4300.42,4151.832017-12-13 09:49:33,4291.85,4252.712017-12-13 09:53:33,4291.85,4232.382017-12-13 09:55:33,4320.55,4261.772017-12-13 09:57:33,4320.55,4261.772017-12-13 09:59:33,4320.55,4265.972017-12-13 10:01:33,4332.18,4277.452017-12-13 10:05:33,4331.0,4295.92017-12-13 10:17:18,4356.05,4322.612017-12-13 10:25:16,4272.99,4279.222017-12-13 10:41:45,4196.1,4163.882017-12-13 10:43:45,4200.18,4163.882017-12-13 10:45:45,4200.0,4174.562017-12-13 10:47:45,4200.0,4104.582017-12-13 10:49:45,4200.0,4104.612017-12-13 10:51:45,4190.0,4104.612017-12-13 10:59:45,4063.06,3984.82017-12-13 11:15:54,4200.0,4134.652017-12-13 11:17:54,4200.0,4134.652017-12-13 11:19:54,4200.0,4118.042017-12-13 11:21:54,4200.0,4118.042017-12-13 11:23:54,4197.94,4118.042017-12-13 11:27:54,4186.77,4107.082017-12-13 11:29:54,4186.52,4106.832017-12-13 11:31:54,4162.25,4107.242017-12-13 11:33:54,4162.25,4107.242017-12-13 11:35:54,4200.0,4131.922017-12-13 11:41:43,4200.2,4141.522017-12-13 11:43:43,4200.2,4141.522017-12-13 12:52:19,4198.0,4140.72017-12-13 13:33:01,4258.28,4216.22017-12-13 13:35:01,4259.0,4230.962017-12-13 13:47:02,4259.0,4218.042017-12-13 13:49:02,4258.98,4201.42017-12-13 13:51:02,4371.48,4205.842017-12-13 13:53:02,4259.0,4205.842017-12-13 13:55:02,4328.08,4203.232017-12-13 13:59:02,4288.99,4203.232017-12-13 14:01:02,4278.99,4215.752017-12-13 14:03:02,4253.35,4200.292017-12-13 14:03:30,4253.27,4200.292017-12-13 14:05:03,4236.82,4200.482017-12-13 14:07:06,4228.48,4183.352017-12-13 14:14:11,4189.99,4100.02017-12-13 14:19:13,4200.0,4101.372017-12-13 14:25:42,4238.39,4143.442017-12-13 14:27:42,4237.57,4143.442017-12-13 14:33:42,4259.31,4165.122017-12-13 14:36:50,4282.96,4206.912017-12-13 14:38:50,4270.65,4206.912017-12-13 14:42:50,4266.46,4206.97

问题

爬取一段时间,就出现异常

程序运行一段时间,出现异常;重新启动程序又能运行一段时间,然后又报异常。只爬取两个网页,而且还是隔2分钟爬一次,应该不是因为爬取过于频繁导致,应该是网络问题。

想实现出现异常,该次爬取无数据,就选择上次的数据

如果出现异常,如何将上次的数据作为异常时段的数据插入到csv中?

插入csv表,关于列名插入的问题

需求:在定时爬取数据插入csv表中,先插入列名,后面更新数据直接只插入数据

绘图,图片停留一段时间,自动关闭

需求:绘制图片后,图片一直保留直到下次更新数据,重新绘制图片

目前想法是把图片保留的时间延长到重新绘制前几秒钟,有没有更好的方法。

针对以上问题,欢迎评论提供解决办法

随想

最近一则关于“Python加入计算机二级考试科目”的新闻刷屏,各大培训机构也拿这则新闻宣传培训课程,让我产生一种“对这门语言是不是有点吹的太过了”的想法。

参考:

如何用Python写一个每分每时每天的定时程序

阅读全文

0 0

- Python定时爬取某网页内容

- python 截取网页内容

- Python 获取网页内容

- Python抓网页内容

- python抓取网页内容

- python抓取网页内容

- python 网页内容抓取

- Python抓取网页内容

- python 抓取网页内容

- Python抓取网页内容

- python定时替换文件内容

- Python-定时打开一个网页

- 采集网页内容,pdo入库,定时采集

- 定时抓取网页连接,提取网页内容,存入数据库

- [python]抓取网页的内容

- python 抓取网页内容教程

- 使用python解析网页内容

- python爬取网页内容

- Android自定义控件 倒计时

- 第六天实训!!!

- combobox输入中文而对应的hidden域的value值没有及时修改的问题

- Setup smtps

- 学习笔记之开发相关概念(6)--云

- Python定时爬取某网页内容

- Java虚拟机字节码执行引擎

- Redis集群

- 深入了解构造函数

- Tensorflow深度学习之二十一:LeNet的实现(CIFAR-10数据集)

- Swiper动态加载不显示没效果

- 数据结构实验之查找三:树的种类统计

- struts2文件上传

- Group_concat介绍与例子