HBase in Production at Facebook – Jonathan Gray at Hadoop World 2010


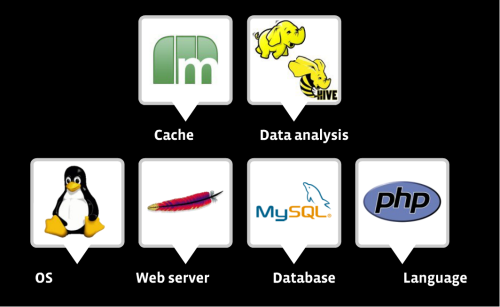

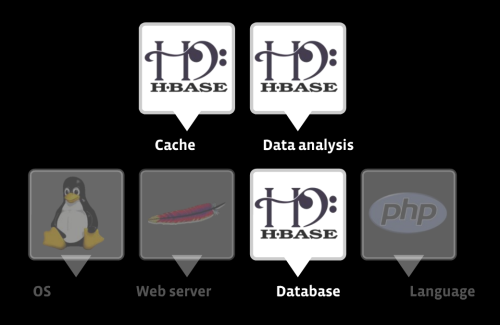

Original article: http://glennas.wordpress.com/2011/03/09/hbase-in-production-at-facebook-jonathan-gray-at-hadoop-world-2010/

Interesting presentation from Facebook’s Jonathan Gray at Hadoop World 2010 on Facebook’s current and future plans for using HBase in their data platform.

A later slide hints at the impact HBase has on various elements of the stack. Note that HBase does not actually replace any of these elements of the stack, but rather plays an interesting intermediate role between online transactional and offline batch data processing.

In his presentation, Gray speaks to the advantages of HBase. Here are a few snippets:

And then on the Data Analysis side, HBase doesn’t actually do data analysis. And it doesn’t actually store data. But HDFS stores data, and Hive does the analysis. But with HBase in the middle you can do random access and you can do incremental updating.

You also have fast Index writes. HBase is a sorted store. So every single table is sorted by row, every single row is sorted by the column. And then columns are sorted by versions. That’s a really powerful thing that you can build inverted search indexes on, you can build secondary indexes on. So you can do a lot more than just what you can do with a key-value store. So it has a very powerful data structure.

And lastly, there’s real tight integration with Hadoop.

And my favorite thing:

It’s an interesting product that kind of bridges this gap between the online world and the offline world: the serving, transactional world and the offline, batch-processing world.

HBase Use Case #1: Near real-time Incremental updates to the Data Warehouse

Says Gray:

Right now (at Facebook), we’re doing nightly updates of UDBs into the data warehouse. And the reason we’re doing that is because HDFS doesn’t have incremental operations. I can only append to something. I can’t edit something, I can’t delete something. So merging in the changes of transactional data, you basically have to rewrite the entire thing.
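To make the rewrite cost concrete, here is a minimal Python sketch. It is not Facebook's pipeline and does not use HDFS or HBase; the function names, the row layout, and the change format (`None` meaning delete) are all assumptions made for illustration. It contrasts merging a batch of changes into an append-only snapshot, where every surviving row is written again, with applying the same changes to a store that supports keyed updates and deletes.

```python
from typing import Dict, Iterable, List, Optional, Tuple

Row = Tuple[str, dict]               # (primary key, column values)
Change = Tuple[str, Optional[dict]]  # (primary key, new values; None means delete)


def merge_by_rewrite(snapshot: List[Row],
                     changes: Iterable[Change]) -> Tuple[List[Row], int]:
    """Append-only style: produce a whole new snapshot.

    Returns the new snapshot and the number of rows written, which is the
    size of the full table no matter how few rows changed.
    """
    pending = dict(changes)
    new_snapshot: List[Row] = []
    for key, values in snapshot:
        if key in pending:
            values = pending.pop(key)
            if values is None:
                continue  # row was deleted upstream
        new_snapshot.append((key, values))
    # Whatever is left in `pending` refers to keys not seen before: inserts.
    new_snapshot.extend((k, v) for k, v in pending.items() if v is not None)
    new_snapshot.sort(key=lambda row: row[0])
    return new_snapshot, len(new_snapshot)


def merge_in_place(table: Dict[str, dict], changes: Iterable[Change]) -> int:
    """Random-write style: touch only the keys that changed.

    Returns the number of write operations, which tracks the change volume.
    """
    writes = 0
    for key, values in changes:
        if values is None:
            table.pop(key, None)
        else:
            table[key] = values
        writes += 1
    return writes
```

With a table of millions of rows and a few thousand changes, the first function still writes millions of rows while the second writes a few thousand. That gap is why the batch merge is run only nightly.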
But with HBase, what we’re able to do is, all of our MySQL data is already being replicated. So we already have existing replication streams. So we can actually hook directly into those replication streams, and then write them into HBase. And then HBase allows us to expose Hive tables, so we can actually have completely up-to-date UDB data in the data warehouse.

So now we can have UDB data in our data warehouse in minutes, rather than in hours or a day.

HBase Use Case #2: High Frequency Counters and Real-time Analytics

Again quoting Gray:

The second use case is around high-frequency counters, and then real-time analytics of those counters. This is something I think a lot of people have used HBase for, for a long time. … It’s a really interesting use case. Counters aren’t writes, they’re read-modify-writes. So they’re actually a real expensive operation. In a relational database, I’d actually have to do a read lookup, and then write that data back. So it’s a real expensive thing. And if you’re talking about billions of counter updates per hour, or I think right now on one of our clusters it’s about 100,000 updates per second. So doing 100,000 increments a second on a SQL machine, it’s a cluster of machines now, a lot of machines.

And then the other part is, well now that I’m taking all this increment data, I want to be able to do analysis on it. If I’m taking click-stream data, I want to say What’s the most popular link today? And this past hour? And of all time? So I want to be able to do all that stuff, and if I have all my data sitting in MySQL or HDFS, it’s not necessarily very efficient to compute these things.

So the way we do it now is Scribe Logs. So every time you click on something, for example, that’s going into Scribe as a log line saying this user clicked this link. That’s being fed into HDFS. And then periodically we’re saying, OK once an hour, or once a day or whatever, let’s take all of our click data and do some analysis on it. Let’s do sums and max-mins and averages and group-by, and different kinds of queries like that. And then once we have our computations, let’s feed it back into the UDBs so people can read it.

So looking at this flow here, we have Scribe going downline into HDFS. And then once we’re in HDFS, we’re writing things as Hive tables so we can get the dimensions that we need. And then we’re doing these huge MapReduce joins to join everything by URL. So it takes a long time to do that job. It’s really, really inefficient. It uses lots and lots of I/O. And it’s not real-time. If this job takes an hour to run, we’ll always have at least an hour of stale data.

But with HBase what we’re doing is we’re going from Scribe directly into HBase. Which means that as soon as that edit comes into HBase, it’s available. You can read it. You can randomly read it. You can do MapReduce on it. You can do whatever you want.

And like I was talking about before, you can do real-time reads of it. And I could say “How many clicks have there been for newyorktimes.com today?” and I can just grab that data out of HBase. Or, if I want to do things like “What are the top 10 links across all domains?” Well, the way we do that is kind of like through a trigger-based system. Because the increments are so efficient, I can actually increment 10 things for each increment. So I can say “Increment this domain. But then increment the link. Increment it for today. Increment it for these demographics.” Just do a whole bunch of increments because the increments are so efficient. So you can actually pre-compute a lot of this stuff.

And then when you want to do big aggregations, you can do a MapReduce directly on HBase. And then when you’re done and you have your results, rather than having to feed them back to the UDBs, you just put them in HBase and they’re there. So it’s really, really cool: storage, serving, analysis as one system.
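The increment fan-out described above can be sketched in a few lines of Python. This is a toy in-memory stand-in, not the HBase client: `CounterStore`, `record_click`, `top_links`, and the key layout are all hypothetical names chosen for illustration. The point it tries to show is that each click becomes several cheap atomic increments on pre-chosen keys, so that questions like "clicks for this domain today" become a single read and "top links today" becomes a scan over one key prefix.

```python
import threading
from collections import defaultdict
from typing import Dict, List, Tuple


class CounterStore:
    """Toy in-memory table of atomic counters (assumption: not HBase itself)."""

    def __init__(self) -> None:
        self._counts: Dict[str, int] = defaultdict(int)
        self._lock = threading.Lock()

    def increment(self, key: str, amount: int = 1) -> int:
        # The read-modify-write happens inside the store under one lock, so
        # the caller never issues a separate read followed by a write.
        with self._lock:
            self._counts[key] += amount
            return self._counts[key]

    def get(self, key: str) -> int:
        with self._lock:
            return self._counts.get(key, 0)

    def scan_prefix(self, prefix: str) -> List[Tuple[str, int]]:
        # Returns matching counters in key order, like a range scan.
        with self._lock:
            return sorted((k, v) for k, v in self._counts.items()
                          if k.startswith(prefix))


def record_click(store: CounterStore, domain: str, url: str,
                 day: str, demographic: str) -> None:
    """Fan one click out into several pre-computed counters."""
    for key in (
        f"domain:{domain}:all",
        f"domain:{domain}:{day}",
        f"link:all:{url}",
        f"link:{day}:{url}",
        f"demo:{demographic}:{day}:{domain}",
    ):
        store.increment(key)


def top_links(store: CounterStore, period: str, n: int = 10) -> List[Tuple[str, int]]:
    """Most-clicked links for a day string such as '2010-10-12', or 'all'."""
    prefix = f"link:{period}:"
    rows = [(key[len(prefix):], count) for key, count in store.scan_prefix(prefix)]
    # Highest count first; ties broken by URL so the order is deterministic.
    return sorted(rows, key=lambda row: (-row[1], row[0]))[:n]
```

A read such as `store.get("domain:nytimes.com:2010-10-12")` then answers the "how many clicks today" question without any batch job. In the Java client, HBase offers an atomic increment call (`incrementColumnValue`) that plays the role of `increment` here; the rest of the sketch is an assumption about how such counters might be keyed.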
And we’re able to basically keep up with huge, huge numbers of increments and operations, and at the same time do analytics on it.

HBase Use Case #3: User-facing Database for Write-intensive workloads

And the third scenario:

The last case I want to talk about … is using HBase as a user-facing database, almost as a transactional database, and specifically for Write workloads. When you have lots and lots of Writes and very few Reads, or you have a huge amount of data like I talked about before. If I’m storing 500K, I don’t necessarily want to put that in my UDB. …

I’m not going to elaborate further on this use case. Please listen to the presentation for a full discussion.

HBase and Hive Integration

On production development of HBase at Facebook, Gray has this to say:

But the first thing we did was the Hive integration. … This unlocks a whole new potential, and not just the way I was describing it earlier that we can now randomly write into our data warehouse. You can also randomly read into the data warehouse. So for certain kinds of joins, for example, rather than having to stream the joins we can actually do point lookups into HBase tables. So it unlocks a whole new bunch of ways that we can potentially optimize Hive queries.

But the base of Hive integration is really HBase tables become Hive tables. So you can map Hive tables into HBase. You can use that then as an ETL data target, meaning that we can write our data into it. It can also be a Query data source so we can read data from it.

And like I was saying, the Hive integration supports different read and write patterns. So on the Write side, it supports API random writing like we would do with UDBs. It also supports this bulk load facility through something called HFile output format. So HFile is the on-disk format that HBase uses. And it just looks like a sequence file or a Map file or anything else, but it has some special facilities for HBase.
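As a rough illustration of why a sorted on-disk file lends itself to bulk loading and point lookups, here is a small Python sketch. It does not reproduce the real HFile format or any HBase API: `SortedFile`, `ToyTable`, and `lookup_join` are hypothetical names, and the "file" is just a pair of in-memory lists. The idea shown is that a batch job can prepare a finished, key-sorted file on its own, the table can adopt it without rewriting any rows, and reads then use binary search for point lookups and range scans.

```python
import bisect
from typing import Iterable, List, Optional, Tuple


class SortedFile:
    """Immutable, key-sorted block of rows (a loose stand-in for an HFile)."""

    def __init__(self, rows: Iterable[Tuple[str, str]]) -> None:
        ordered = sorted(dict(rows).items())  # sort once, at write time
        self._keys = [k for k, _ in ordered]
        self._values = [v for _, v in ordered]

    def get(self, key: str) -> Optional[str]:
        i = bisect.bisect_left(self._keys, key)  # binary search
        if i < len(self._keys) and self._keys[i] == key:
            return self._values[i]
        return None

    def scan(self, start: str, stop: str) -> List[Tuple[str, str]]:
        """Rows with start <= key < stop, in key order."""
        lo = bisect.bisect_left(self._keys, start)
        hi = bisect.bisect_left(self._keys, stop)
        return list(zip(self._keys[lo:hi], self._values[lo:hi]))


class ToyTable:
    """Table made of sorted files; newer files shadow older ones."""

    def __init__(self) -> None:
        self._files: List[SortedFile] = []

    def bulk_load(self, finished_file: SortedFile) -> None:
        # Adopting a finished file copies no rows; it only registers the file.
        self._files.append(finished_file)

    def get(self, key: str) -> Optional[str]:
        for f in reversed(self._files):  # check the newest file first
            value = f.get(key)
            if value is not None:
                return value
        return None

    def scan(self, start: str, stop: str) -> List[Tuple[str, str]]:
        merged = {}
        for f in self._files:  # oldest first, so newer values overwrite
            merged.update(f.scan(start, stop))
        return sorted(merged.items())


def lookup_join(events: Iterable[Tuple[str, str]],
                table: ToyTable) -> List[Tuple[str, str, Optional[str]]]:
    """Join each (key, payload) event to the table with one point lookup."""
    return [(key, payload, table.get(key)) for key, payload in events]
```

The `lookup_join` helper corresponds to the point-lookup style of join mentioned above: when the probing side is small, one lookup per row can be cheaper than streaming the whole table through the join.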
And we are doing this bulk loading extensively, which is taking data, writing it out as HFiles, which basically means you’re writing into HBase at the same speed you write to HDFS. And then you just kind of hit a button, and HBase loads all those files in. And now you have really efficient random access to all that data. We’re using that extensively.

Also, on the Read side. You can randomly read into stuff. Or you can do full table scans. Or you can do range scans. All that kind of stuff through Hive.

In Summary

Another great presentation from Hadoop World.

glenn
