THE CAST TESTING COMPETITION

I sponsored the testing competition at CAST last week, awarding $1,426.00 of my own money to the winners.

My game, my rules, of course, but I tried to be fair and give out the prizes to deserving winners.

There was some controversy…

We set it up with simple rules, and put the onus on the contestants to sort themselves out. The way it worked is that teams signed up during the day (a team could be one tester or many), then at 6pm they received a link to the software. They had to download it, test it, report bugs, and write a report in 4 hours. We set up a website for them to submit reports and receive updates. The developer of the product was sitting in the same ballroom as the contestants, available to anyone who wished to speak with him.

I left the scoring algorithm unexplained, because I wanted the teams to use their testing skills to discover it (that’s how real life works, anyway). A few teams investigated the victory conditions. Most seemed to guess at them. No one associated with the conference organizers could compete for a prize.

During the competition, I made several rounds with my notebook, asking each team what they were doing and challenging them to justify their strategy. Most teams were not particularly crisp or informative in their answers (this is expected, since most testers do not practice their stand-up reporting skills). A few impressed me. When I felt good about an answer, I made a star in my notebook next to their name. My objective was partly to help me decide the winner, and partly to make myself available in case a team had any questions.

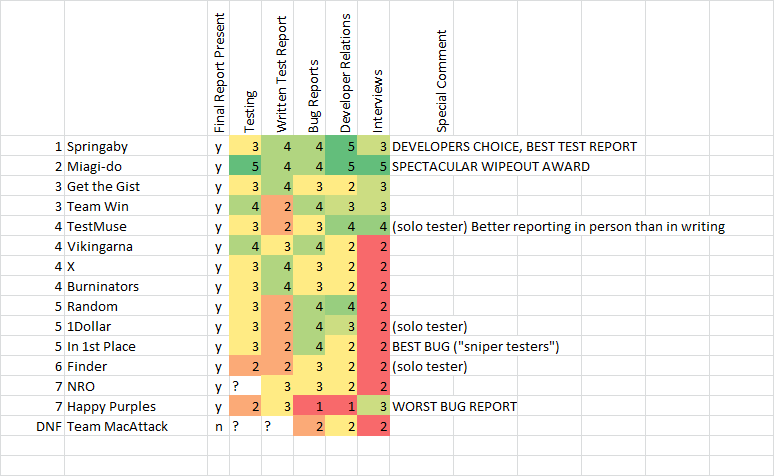

David reviewed the 350 bug reports, while I analyzed the final test reports. We created a multi-dimensional ordinal scale to aid in scoring:

Awards:

- Worst Bug Report: Happy Purples ($26)

- Best Bug Report: In 1st Place ($400)

- Developer’s Choice Award: Springaby ($200)

- Best Test Report: Springaby ($800)

These rankings don’t follow any algorithm. We used a heuristic approach. We translated the raw experience data into 1-5 scales (where 5 is OMG and 1 is WTF). David and I discussed and agreed on each assessment, then we looked at the aggregates and decided who would get the awards. My final orderings for best test report (where report means the overall test report, not just the written summary report) are on the left.

Note: I don’t have all the names of the testers involved in these teams (I’ll add them if they are sent to me).

Now for the special notes and controversies.

Happy Purples

Happy Purples won $26 for the worst bug report (actually for two bug reports: that the software was too slow to download at the start of the competition AND that a tooltip was inconsistent with a button title because it wasn’t a duplicate of the button title).

The Happies were not a very experienced team, and that showed in their developer relations. I thought their overall bug list was not terrible, although it wasn’t very deep, either. They earned the ire of the developer because they tried to defend the weird bug reports mentioned above, and that so offended David that he flipped the bozo bit on them as a team. Hey, that’s realistic. Developers do that. So be careful, testers.

TestMuse

Keith Stobie was the solo tester known as TestMuse. He was a good explainer when I stopped by to challenge him on what he was doing, but I don’t think he took his written test report very seriously. I had a hard time judging from that what he did and why he did it. I know Keith well enough that I think he’s capable of writing a good report, so maybe he didn’t realize it was a major part of the score.

In 1st Place

They didn’t report many bugs (9, I think). But the ones they reported were just the kind the developer was looking for. I don’t remember which report David told me was the bug that won them the Best Bug Report award, but each bug on their list was a solid functionality problem, rather than a nitpicky UI thing. We called these guys the sniper testers, because they picked their shots.

Springaby

A portmanteau of “springbok” and “wallaby”, Springaby consisted of Australian tester Ben Kelly and South African Louise Perold. Like “Hey David!”, the winner of the previous CAST competition (2007), they used the tactic of sitting right next to the developer during the whole four hours. Just like last time, this method worked. It’s so simple: be friendly with the developer, help the developer, ask him questions, and maybe you will win the competition. Springaby won Developer’s Choice, which goes to David’s favorite team based on personal interactions during the competition, and they won for best test report… But mainly that was because Miagi-Do wiped out.

Note: Springaby reported one of their bugs in Japanese. However, the developer took this as a jest and did not mark them down.

Miagi-Do

Miagi-Do was kind of an all-star team, reminiscent of the Canadian team that should have won the competition in 2007 before they were disqualified for having Paul Holland (a conference organizer) on their side. This time we were very clear that no conference organizer could compete for a prize. But Miagi-Do, which consisted mainly of the friends and proteges of Matthew Heusser (a conference organizer), decided they would rather have him on their team and lose prize money than not have him and lose fun. Ah, sportsmanship!

The Miagi-Do team was serious from the start. Some prominent names were on it: Markus Gaertner, Ajay Balamurugadas, and Michael Larsen, to name three. Also, gutsy newcomer to our community, Adam Yuret.

Miagi-Do got the best rating from my walkaround interviews. They were using session-based test management with facilitated debriefings, and Matt grilled me about the scoring. They talked with the developer, and also consulted with my brother Jon about their final report. I expected them to cruise to a clear victory.

In the end, they won the “Spectacular Wipeout” award (an honorary award made up on the spot), for the best example of losing at the last minute. More about that, below.

The Controversy: Bad Report or Bad Call?

Let’s contrast the final reports of Miagi-Do and Springaby.

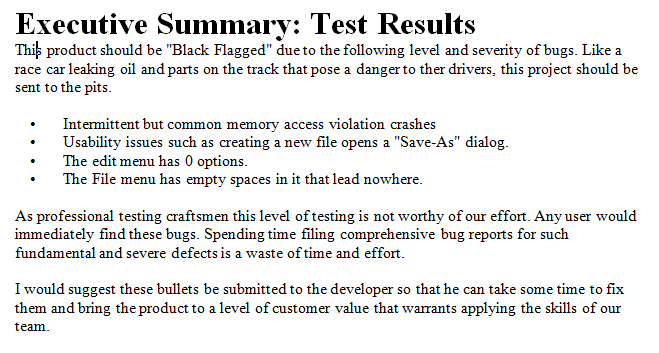

This is the summary from Miagi-Do. Study it carefully:

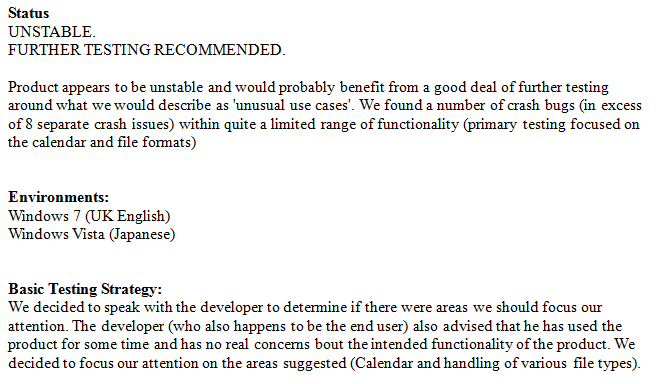

Now this is the summary from Springaby:

Bottom line is this: They both criticize the product pretty strongly, but Miagi-Do insulted the developer as well. That’s the spectacular wipeout. David was incensed. He spouted unprintable replies to Miagi-Do.

The reason why Miagi-Do was the goat, while Springaby was the pet, is that Springaby did not impose their own standard of quality onto the product. They did not make a quality judgment. They made descriptive statements about the product. Calling it unstable, for instance, is not to say it’s a bad product. In fact, Springaby was the ONLY team who checked with David about what quality standard is appropriate for his product. The other teams made assumptions about what the standard should be. They were generally reasonable assumptions, but still, the vendor of the product was right there. Why assume you know what the intended customer, use, and quality standard are when you can just ask?

Meanwhile, Miagi-Do claimed the product was not “worthy” to be tested. Oh my God. Don’t say things like that, my fellow testers. You can say that the effort to test the product further at this time may not be justified, but that’s not the same thing as questioning the “worthiness” of the product, which is a morally charged word. The reference to black flagging, in this case, also seems gratuitous. I coined the concept of black flagging bugs (my brother came up with the term itself, by borrowing from NASCAR). I like the idea, but it’s not a term you want to pull out and use in a test report unless everyone is already fully familiar with it. The attempt to define it in the test report makes it appear as if the tester is reaching for colorful metaphors to rub in how much the programmer, and his product, sucks.

Springaby did not presume to know whether the product was bad or good, just that it was unstable and contained many potentially interesting bugs. They came to a meeting of minds with the developer, instead of dictating to him. Thus, even though both teams concurred in their technical findings, one team pleased their client, the other infuriated him.

This judgment of mine and David’s is controversial, because Adam Yuret, the up-and-coming tester who actually wrote the report, consulted with my brother Jon on the wording. Jon felt that the wording was good, and that the developer should develop a thicker skin. However, Jon wasn’t aware that Miagi-Do was working on the basis of their own imagined quality standard, rather than the one their client actually cared about. I think Adam did the right thing consulting with Jon (although if they had been otherwise eligible to win a prize, that consultation would have disqualified them). Adam tried hard and did what he thought was right. But it turns out the rest of the Miagi-Do team had not fully reviewed the test report, and perhaps if they had, they would have noticed the logical and diplomatic issues with it.

Well, there you go. I feel good about the scoring. I also learned something: most testers are poorly practiced at writing test reports. Start practicing, guys.
