小玩OpenSURF图像识别 (C++)

来源:互联网 发布:梦幻西游mac补丁 编辑:程序博客网 时间:2024/05/02 04:32

在我的一篇文章 http://blog.csdn.net/chenyujing1234/article/details/7601800

里面讲到C#版的OpenSURF图像识别例子,它是不信赖于OpenCV的;

这里我将对C++版本进行代码分析。希望对大家有帮助。(源码可到http://code.google.com/p/opensurf1/ 下载)

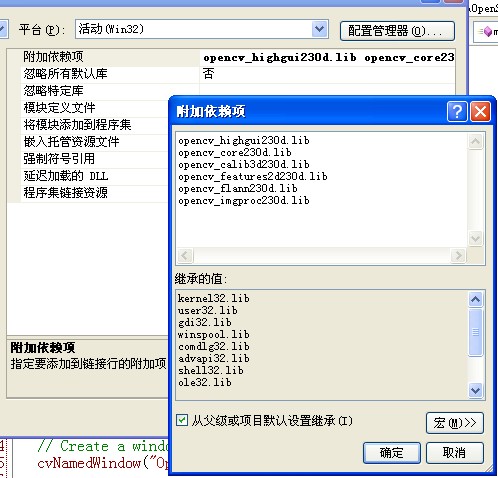

链接的openCV lib文件有:

这些库文件的名字有点长,因为它是我自己下opencv源码用CMake编译出来的。

OpenCV 链接库的得到请参考我的文章<<OpenCV2.1.0编译详细讲解>>

====================================================================================================

本例子分为6个小功能:

(1) 单张图征特征的提取

(2)Video特征的提取

(3)单张图片与视频的特征匹配

(4)

(5)

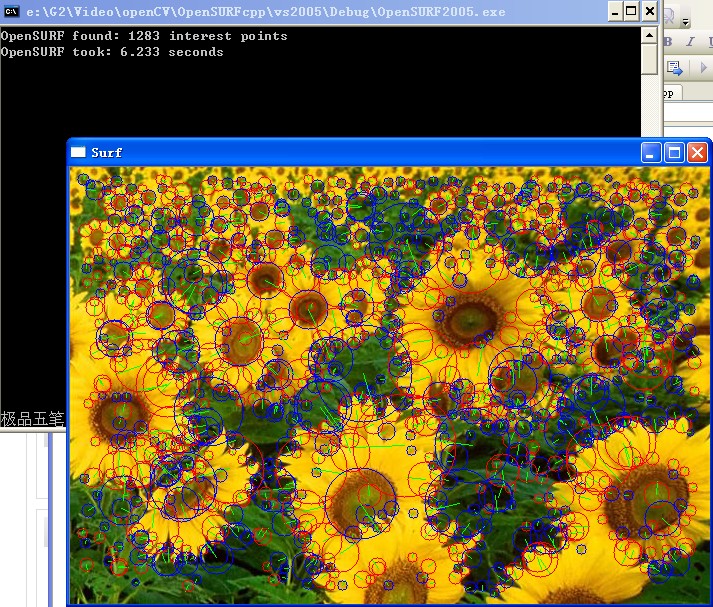

一、 单张图片的特征点识别

先看看本文实现的效果:

1、实现过程

1、1 加载图片

主要调用了opencv里的函数 cvLoadImage

// Declare Ipoints and other stuff IpVec ipts; IplImage *img=cvLoadImage("imgs/sf.jpg");

1、2 检测和描述图片中的兴趣点

// Detect and describe interest points in the image clock_t start = clock(); surfDetDes(img, ipts, false, 5, 4, 2, 0.0004f); clock_t end = clock();

以下是surflib.h里的代码

//! Library function builds vector of described interest pointsinline void surfDetDes(IplImage *img, /* image to find Ipoints in */ std::vector<Ipoint> &ipts, /* reference to vector of Ipoints */ bool upright = false, /* 运行在交替不变模式? */ int octaves = OCTAVES, /* 要计算的八度数量 */ int intervals = INTERVALS, /* 每个八度的兴趣点数目 */ int init_sample = INIT_SAMPLE, /* 初始化采样 step */ float thres = THRES /* blob response threshold */){ // 创建 integral-image representation of the image IplImage *int_img = Integral(img); // 创建 Fast Hessian Object FastHessian fh(int_img, ipts, octaves, intervals, init_sample, thres); // 提取兴趣点并存在 vector ipts fh.getIpoints(); // 创建 Surf Descriptor Object Surf des(int_img, ipts); // 提取 the ipts 的描述符 des.getDescriptors(upright); // 释放整个图像 cvReleaseImage(&int_img);}

1、2、1 看创建图片的intergral-image表现的过程

//! Computes the integral image of image img. Assumes source image to be a //! 32-bit floating point. Returns IplImage of 32-bit float form.IplImage *Integral(IplImage *source){ // 转化图片到单通道 32f IplImage *img = getGray(source); IplImage *int_img = cvCreateImage(cvGetSize(img), IPL_DEPTH_32F, 1); // 为数据访问建立变量 int height = img->height; int width = img->width; int step = img->widthStep/sizeof(float); float *data = (float *) img->imageData; float *i_data = (float *) int_img->imageData; // 首先是行 float rs = 0.0f; for(int j=0; j<width; j++) { rs += data[j]; i_data[j] = rs; } // remaining cells are sum above and to the left for(int i=1; i<height; ++i) { rs = 0.0f; for(int j=0; j<width; ++j) { rs += data[i*step+j]; i_data[i*step+j] = rs + i_data[(i-1)*step+j]; } } // 释放 gray image cvReleaseImage(&img); // return the integral image return int_img;}

将图片转化为单通道是调用了OpenCv里的库

// 转化图片到单通道 32FIplImage *getGray(const IplImage *img){ // 检查我们已经提供了一个非空的img指针 if (!img) error("Unable to create grayscale image. No image supplied"); IplImage* gray8, * gray32; gray32 = cvCreateImage( cvGetSize(img), IPL_DEPTH_32F, 1 ); if( img->nChannels == 1 ) gray8 = (IplImage *) cvClone( img ); else { gray8 = cvCreateImage( cvGetSize(img), IPL_DEPTH_8U, 1 ); cvCvtColor( img, gray8, CV_BGR2GRAY ); } cvConvertScale( gray8, gray32, 1.0 / 255.0, 0 ); cvReleaseImage( &gray8 ); return gray32;}

1、2、2 提取兴趣点

前提是创建了 Fast Hessian Object.

在getIpoins里完成了几件事:

(1)建立回复map

(2)获得回复layers

(3)判断是否是要提取的特征点

//! 找到图片的特征,并写到特征的vecto中void FastHessian::getIpoints(){ // 过滤 index map static const int filter_map [OCTAVES][INTERVALS] = {{0,1,2,3}, {1,3,4,5}, {3,5,6,7}, {5,7,8,9}, {7,9,10,11}}; // 清除掉存在的ipts的vector ipts.clear(); // 建立回复 map buildResponseMap(); // 获得回复 layers ResponseLayer *b, *m, *t; for (int o = 0; o < octaves; ++o) for (int i = 0; i <= 1; ++i) { b = responseMap.at(filter_map[o][i]); m = responseMap.at(filter_map[o][i+1]); t = responseMap.at(filter_map[o][i+2]); // loop over middle response layer at density of the most // sparse layer (always top), to find maxima across scale and space for (int r = 0; r < t->height; ++r) { for (int c = 0; c < t->width; ++c) { if (isExtremum(r, c, t, m, b)) { interpolateExtremum(r, c, t, m, b); } } } }}

1、2、2、1 建立responses map的过程

(1) 获得图片属性

(2) 计算接近的决定性的hessian值

(3) 从图片中解析responses layer

//! Build map of DoH responsesvoid FastHessian::buildResponseMap(){ // Calculate responses for the first 4 octaves: // Oct1: 9, 15, 21, 27 // Oct2: 15, 27, 39, 51 // Oct3: 27, 51, 75, 99 // Oct4: 51, 99, 147,195 // Oct5: 99, 195,291,387 // Deallocate memory and clear any existing response layers for(unsigned int i = 0; i < responseMap.size(); ++i) delete responseMap[i]; responseMap.clear(); // 获得图片的属性 int w = (i_width / init_sample); int h = (i_height / init_sample); int s = (init_sample); // 计算接近的决定性的hessian 值 if (octaves >= 1) { responseMap.push_back(new ResponseLayer(w, h, s, 9)); responseMap.push_back(new ResponseLayer(w, h, s, 15)); responseMap.push_back(new ResponseLayer(w, h, s, 21)); responseMap.push_back(new ResponseLayer(w, h, s, 27)); } if (octaves >= 2) { responseMap.push_back(new ResponseLayer(w/2, h/2, s*2, 39)); responseMap.push_back(new ResponseLayer(w/2, h/2, s*2, 51)); } if (octaves >= 3) { responseMap.push_back(new ResponseLayer(w/4, h/4, s*4, 75)); responseMap.push_back(new ResponseLayer(w/4, h/4, s*4, 99)); } if (octaves >= 4) { responseMap.push_back(new ResponseLayer(w/8, h/8, s*8, 147)); responseMap.push_back(new ResponseLayer(w/8, h/8, s*8, 195)); } if (octaves >= 5) { responseMap.push_back(new ResponseLayer(w/16, h/16, s*16, 291)); responseMap.push_back(new ResponseLayer(w/16, h/16, s*16, 387)); } // 从图片中解析回复 for (unsigned int i = 0; i < responseMap.size(); ++i) { buildResponseLayer(responseMap[i]); }}

1、2、2、2 判断是否是要提取的特征点

//! Non Maximal Suppression functionint FastHessian::isExtremum(int r, int c, ResponseLayer *t, ResponseLayer *m, ResponseLayer *b){ // bounds check int layerBorder = (t->filter + 1) / (2 * t->step); if (r <= layerBorder || r >= t->height - layerBorder || c <= layerBorder || c >= t->width - layerBorder) return 0; // check the candidate point in the middle layer is above thresh float candidate = m->getResponse(r, c, t); if (candidate < thresh) return 0; for (int rr = -1; rr <=1; ++rr) { for (int cc = -1; cc <=1; ++cc) { // if any response in 3x3x3 is greater candidate not maximum if ( t->getResponse(r+rr, c+cc) >= candidate || ((rr != 0 || cc != 0) && m->getResponse(r+rr, c+cc, t) >= candidate) || b->getResponse(r+rr, c+cc, t) >= candidate ) return 0; } } return 1;}

//! 插入标尺空间的极值到亚像素精度来形成一个图像特征. void FastHessian::interpolateExtremum(int r, int c, ResponseLayer *t, ResponseLayer *m, ResponseLayer *b){ // 获得在filters之间的步距离 // 检查中间filter是在top与bottom的中间 int filterStep = (m->filter - b->filter); assert(filterStep > 0 && t->filter - m->filter == m->filter - b->filter); // 获得极限值的真实位置的偏移 double xi = 0, xr = 0, xc = 0; interpolateStep(r, c, t, m, b, &xi, &xr, &xc ); // 如果点与真实的极限值是足够近的话,就把此点存起来 if( fabs( xi ) < 0.5f && fabs( xr ) < 0.5f && fabs( xc ) < 0.5f ) { Ipoint ipt; ipt.x = static_cast<float>((c + xc) * t->step); ipt.y = static_cast<float>((r + xr) * t->step); ipt.scale = static_cast<float>((0.1333f) * (m->filter + xi * filterStep)); ipt.laplacian = static_cast<int>(m->getLaplacian(r,c,t)); ipts.push_back(ipt); }}

1、2、2 提取 ipts 的描述符

提取前提是 创建了 Surf Descriptor Object

对vector 每个元素做以下事情:

(1) 分配Orientations并提取旋转不变描述符

(2) 获得描述符

//! 描述在提供的vector中的所有特征void Surf::getDescriptors(bool upright){ // Check there are Ipoints to be described if (!ipts.size()) return; // 获得vector的大小 int ipts_size = (int)ipts.size(); if (upright) { // U-SURF loop just gets descriptors for (int i = 0; i < ipts_size; ++i) { // Set the Ipoint to be described index = i; // Extract upright (i.e. not rotation invariant) descriptors getDescriptor(true); } } else { // Main SURF-64 loop assigns orientations 并获得描述符 for (int i = 0; i < ipts_size; ++i) { // 设置被描述的Ipoint index = i; // 分配 Orientations 并提取旋转不变描述符 getOrientation(); getDescriptor(false); } }}

//! Assign the supplied Ipoint an orientationvoid Surf::getOrientation(){ Ipoint *ipt = &ipts[index]; float gauss = 0.f, scale = ipt->scale; const int s = fRound(scale), r = fRound(ipt->y), c = fRound(ipt->x); std::vector<float> resX(109), resY(109), Ang(109); const int id[] = {6,5,4,3,2,1,0,1,2,3,4,5,6}; int idx = 0; // calculate haar responses for points within radius of 6*scale for(int i = -6; i <= 6; ++i) { for(int j = -6; j <= 6; ++j) { if(i*i + j*j < 36) { gauss = static_cast<float>(gauss25[id[i+6]][id[j+6]]); // could use abs() rather than id lookup, but this way is faster resX[idx] = gauss * haarX(r+j*s, c+i*s, 4*s); resY[idx] = gauss * haarY(r+j*s, c+i*s, 4*s); Ang[idx] = getAngle(resX[idx], resY[idx]); ++idx; } } } // calculate the dominant direction float sumX=0.f, sumY=0.f; float max=0.f, orientation = 0.f; float ang1=0.f, ang2=0.f; // loop slides pi/3 window around feature point for(ang1 = 0; ang1 < 2*pi; ang1+=0.15f) { ang2 = ( ang1+pi/3.0f > 2*pi ? ang1-5.0f*pi/3.0f : ang1+pi/3.0f); sumX = sumY = 0.f; for(unsigned int k = 0; k < Ang.size(); ++k) { // get angle from the x-axis of the sample point const float & ang = Ang[k]; // determine whether the point is within the window if (ang1 < ang2 && ang1 < ang && ang < ang2) { sumX+=resX[k]; sumY+=resY[k]; } else if (ang2 < ang1 && ((ang > 0 && ang < ang2) || (ang > ang1 && ang < 2*pi) )) { sumX+=resX[k]; sumY+=resY[k]; } } // if the vector produced from this window is longer than all // previous vectors then this forms the new dominant direction if (sumX*sumX + sumY*sumY > max) { // store largest orientation max = sumX*sumX + sumY*sumY; orientation = getAngle(sumX, sumY); } } // assign orientation of the dominant response vector ipt->orientation = orientation;}

//! Get the modified descriptor. See Agrawal ECCV 08//! Modified descriptor contributed by Pablo Fernandezvoid Surf::getDescriptor(bool bUpright){ int y, x, sample_x, sample_y, count=0; int i = 0, ix = 0, j = 0, jx = 0, xs = 0, ys = 0; float scale, *desc, dx, dy, mdx, mdy, co, si; float gauss_s1 = 0.f, gauss_s2 = 0.f; float rx = 0.f, ry = 0.f, rrx = 0.f, rry = 0.f, len = 0.f; float cx = -0.5f, cy = 0.f; //Subregion centers for the 4x4 gaussian weighting Ipoint *ipt = &ipts[index]; scale = ipt->scale; x = fRound(ipt->x); y = fRound(ipt->y); desc = ipt->descriptor; if (bUpright) { co = 1; si = 0; } else { co = cos(ipt->orientation); si = sin(ipt->orientation); } i = -8; //Calculate descriptor for this interest point while(i < 12) { j = -8; i = i-4; cx += 1.f; cy = -0.5f; while(j < 12) { dx=dy=mdx=mdy=0.f; cy += 1.f; j = j - 4; ix = i + 5; jx = j + 5; xs = fRound(x + ( -jx*scale*si + ix*scale*co)); ys = fRound(y + ( jx*scale*co + ix*scale*si)); for (int k = i; k < i + 9; ++k) { for (int l = j; l < j + 9; ++l) { //Get coords of sample point on the rotated axis sample_x = fRound(x + (-l*scale*si + k*scale*co)); sample_y = fRound(y + ( l*scale*co + k*scale*si)); //Get the gaussian weighted x and y responses gauss_s1 = gaussian(xs-sample_x,ys-sample_y,2.5f*scale); rx = haarX(sample_y, sample_x, 2*fRound(scale)); ry = haarY(sample_y, sample_x, 2*fRound(scale)); //Get the gaussian weighted x and y responses on rotated axis rrx = gauss_s1*(-rx*si + ry*co); rry = gauss_s1*(rx*co + ry*si); dx += rrx; dy += rry; mdx += fabs(rrx); mdy += fabs(rry); } } //Add the values to the descriptor vector gauss_s2 = gaussian(cx-2.0f,cy-2.0f,1.5f); desc[count++] = dx*gauss_s2; desc[count++] = dy*gauss_s2; desc[count++] = mdx*gauss_s2; desc[count++] = mdy*gauss_s2; len += (dx*dx + dy*dy + mdx*mdx + mdy*mdy) * gauss_s2*gauss_s2; j += 9; } i += 9; } //Convert to Unit Vector len = sqrt(len); for(int i = 0; i < 64; ++i) desc[i] /= len;}

1、3 画出检测的点

即把IpVec ipts在img上画出来

// Draw the detected points drawIpoints(img, ipts);

//! Draw all the Ipoints in the provided vectorvoid drawIpoints(IplImage *img, vector<Ipoint> &ipts, int tailSize){ Ipoint *ipt; float s, o; int r1, c1, r2, c2, lap; for(unsigned int i = 0; i < ipts.size(); i++) { ipt = &ipts.at(i); s = (2.5f * ipt->scale); o = ipt->orientation; lap = ipt->laplacian; r1 = fRound(ipt->y); c1 = fRound(ipt->x); c2 = fRound(s * cos(o)) + c1; r2 = fRound(s * sin(o)) + r1; if (o) // Green line indicates orientation cvLine(img, cvPoint(c1, r1), cvPoint(c2, r2), cvScalar(0, 255, 0)); else // Green dot if using upright version cvCircle(img, cvPoint(c1,r1), 1, cvScalar(0, 255, 0),-1); if (lap == 1) { // Blue circles indicate dark blobs on light backgrounds cvCircle(img, cvPoint(c1,r1), fRound(s), cvScalar(255, 0, 0),1); } else if (lap == 0) { // Red circles indicate light blobs on dark backgrounds cvCircle(img, cvPoint(c1,r1), fRound(s), cvScalar(0, 0, 255),1); } else if (lap == 9) { // Red circles indicate light blobs on dark backgrounds cvCircle(img, cvPoint(c1,r1), fRound(s), cvScalar(0, 255, 0),1); } // Draw motion from ipoint dx and dy if (tailSize) { cvLine(img, cvPoint(c1,r1), cvPoint(int(c1+ipt->dx*tailSize), int(r1+ipt->dy*tailSize)), cvScalar(255,255,255), 1); } }}

1、4 显示图片

// Display the result showImage(img);

//! Show the provided image and wait for keypressvoid showImage(const IplImage *img){ cvNamedWindow("Surf", CV_WINDOW_AUTOSIZE); cvShowImage("Surf", img); cvWaitKey(0);}

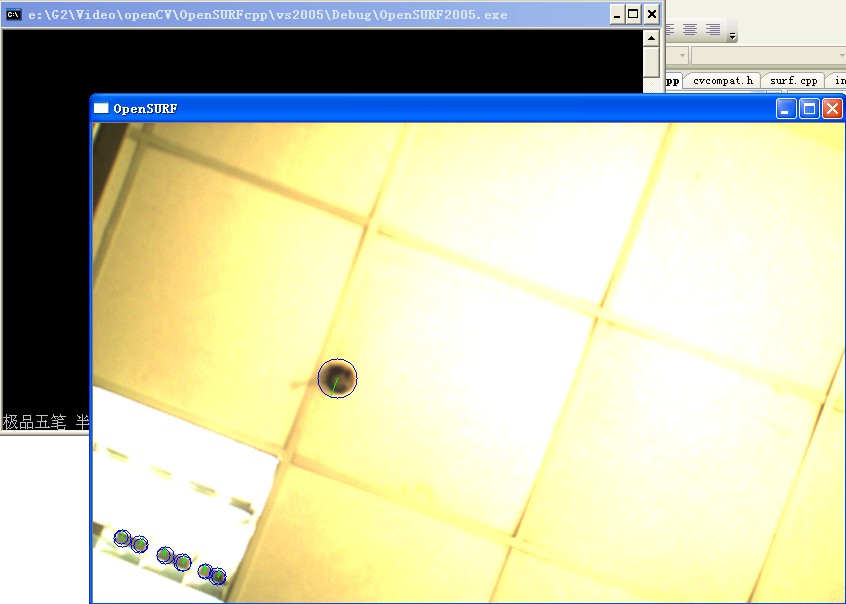

二、 Video特征的提取

在计算机世界里,“视觉”含义非常丰富,一些情况下,我们要分析从其他地方载入的固定图像,在更多情况下,

我们想处理从某些摄像设备中实时读入的视频流。

这时我们调用的是cvCreateCameraCapture(),而不是cvCreateFileCapture().

1 效果

很多人可能会得到提示“Error: No Capture”.那是因为openCV的接口在电脑上查找不到可用的DirectShow摄像头.

所以运行此功能前提是你有摄像头接入到电脑上,且你的摄像头驱动运行DirectShow filter功能。

/** * Camera dispatching method: index is the camera number. * If given an index from 0 to 99, it tries to find the first * API that can access a given camera index. * Add multiples of 100 to select an API. */CV_IMPL CvCapture * cvCreateCameraCapture (int index){int domains[] ={#ifdef HAVE_VIDEOINPUT CV_CAP_DSHOW,#endifCV_CAP_IEEE1394, // identical to CV_CAP_DC1394CV_CAP_STEREO,CV_CAP_VFW, // identical to CV_CAP_V4LCV_CAP_MIL,CV_CAP_QT,CV_CAP_UNICAP,-1};// interpret preferred interface (0 = autodetect)int pref = (index / 100) * 100;if (pref){domains[0]=pref;index %= 100;domains[1]=-1;}// try every possibly installed camera APIfor (int i = 0; domains[i] >= 0; i++){ #if defined(HAVE_VIDEOINPUT) || defined(HAVE_TYZX) || defined(HAVE_VFW) || \ defined(HAVE_CAMV4L) || defined (HAVE_CAMV4L2) || defined(HAVE_GSTREAMER) || \ defined(HAVE_DC1394_2) || defined(HAVE_DC1394) || defined(HAVE_CMU1394) || \ defined(HAVE_GSTREAMER) || defined(HAVE_MIL) || defined(HAVE_QUICKTIME) || \ defined(HAVE_UNICAP)// local variable to memorize the captured deviceCvCapture *capture;#endifswitch (domains[i]){ #ifdef HAVE_VIDEOINPUT case CV_CAP_DSHOW: capture = cvCreateCameraCapture_DShow (index); if (capture) return capture; break; #endif#ifdef HAVE_TYZXcase CV_CAP_STEREO:capture = cvCreateCameraCapture_TYZX (index);if (capture)return capture;break;#endifcase CV_CAP_VFW:#ifdef HAVE_VFWcapture = cvCreateCameraCapture_VFW (index);if (capture)return capture;#endif#if defined (HAVE_CAMV4L) || defined (HAVE_CAMV4L2)capture = cvCreateCameraCapture_V4L (index);if (capture)return capture;#endif#ifdef HAVE_GSTREAMERcapture = cvCreateCapture_GStreamer(CV_CAP_GSTREAMER_V4L2, 0);if (capture)return capture;capture = cvCreateCapture_GStreamer(CV_CAP_GSTREAMER_V4L, 0);if (capture)return capture;#endifbreak;case CV_CAP_FIREWIRE:#ifdef HAVE_DC1394_2capture = cvCreateCameraCapture_DC1394_2 (index);if (capture)return capture;#endif#ifdef HAVE_DC1394capture = cvCreateCameraCapture_DC1394 (index);if (capture)return capture;#endif#ifdef HAVE_CMU1394capture = cvCreateCameraCapture_CMU (index);if (capture)return capture;#endif#ifdef HAVE_GSTREAMERcapture = cvCreateCapture_GStreamer(CV_CAP_GSTREAMER_1394, 0);if (capture)return capture;#endifbreak;#ifdef HAVE_MILcase CV_CAP_MIL:capture = cvCreateCameraCapture_MIL (index);if (capture)return capture;break;#endif#ifdef HAVE_QUICKTIMEcase CV_CAP_QT:capture = cvCreateCameraCapture_QT (index);if (capture)return capture;break;#endif#ifdef HAVE_UNICAPcase CV_CAP_UNICAP: capture = cvCreateCameraCapture_Unicap (index); if (capture) return capture; break;#endif}}// failed open a camerareturn 0;}

先让大家看一下效果吧(拍的是天花板):

2、 实现过程

实现过程于对单张图征的特征的提取的方法类似,只是加了从摄像头获得图片的过程。

int mainVideo(void){ // 初始化capture 设备 CvCapture* capture = cvCaptureFromCAM( CV_CAP_ANY ); if(!capture) error("No Capture"); // Initialise video writer //cv::VideoWriter vw("c:\\out.avi", CV_FOURCC('D','I','V','X'),10,cvSize(320,240),1); //vw << img; // 创建一个窗口Create a window cvNamedWindow("OpenSURF", CV_WINDOW_AUTOSIZE ); // 声明 Ipoints 和其它的事务 IpVec ipts; IplImage *img=NULL; // 主要的捕获循环 while( 1 ) { // 从摄像头中捕获帧 img = cvQueryFrame(capture); // 提取 surf 点 surfDetDes(img, ipts, false, 4, 4, 2, 0.004f); // 画检测到的点 drawIpoints(img, ipts); // 画出FPS值 //drawFPS(img); // 显示结果 cvShowImage("OpenSURF", img); // If ESC key pressed exit loop if( (cvWaitKey(10) & 255) == 27 ) break; } cvReleaseCapture( &capture ); cvDestroyWindow( "OpenSURF" ); return 0;}

3 、 单张图片与视频的特征匹配

经过我验证,这个功能效果不好,表现为不敏感,其实openCV 的主要功能就是用在匹配上,而这里的表现有点让我失望。

不管如何,我们还是讲一下它的实现:

它先取一张要比较的源图片,提取它的特征点,然后从视频中提取一帧,得到的特征点vector与源特征点的vector对比,看是否有类似的。

int mainMatch(void){ // Initialise capture device CvCapture* capture = cvCaptureFromCAM( CV_CAP_ANY ); if(!capture) error("No Capture"); // Declare Ipoints and other stuff IpPairVec matches; IpVec ipts, ref_ipts; // This is the reference object we wish to find in video frame // Replace the line below with IplImage *img = cvLoadImage("imgs/object.jpg"); // where object.jpg is the planar object to be located in the video IplImage *img = cvLoadImage("imgs/object.jpg"); if (img == NULL) error("Need to load reference image in order to run matching procedure"); CvPoint src_corners[4] = {{0,0}, {img->width,0}, {img->width, img->height}, {0, img->height}}; CvPoint dst_corners[4]; // Extract reference object Ipoints surfDetDes(img, ref_ipts, false, 3, 4, 3, 0.004f); drawIpoints(img, ref_ipts); showImage(img); // Create a window cvNamedWindow("OpenSURF", CV_WINDOW_AUTOSIZE ); // Main capture loop while( true ) { // Grab frame from the capture source img = cvQueryFrame(capture); // Detect and describe interest points in the frame surfDetDes(img, ipts, false, 3, 4, 3, 0.004f); // Fill match vector getMatches(ipts,ref_ipts,matches); // This call finds where the object corners should be in the frame if (translateCorners(matches, src_corners, dst_corners)) { // Draw box around object for(int i = 0; i < 4; i++ ) { CvPoint r1 = dst_corners[i%4]; CvPoint r2 = dst_corners[(i+1)%4]; cvLine( img, cvPoint(r1.x, r1.y), cvPoint(r2.x, r2.y), cvScalar(255,255,255), 3 ); } for (unsigned int i = 0; i < matches.size(); ++i) drawIpoint(img, matches[i].first); } // Draw the FPS figure //drawFPS(img); // Display the result cvShowImage("OpenSURF", img); // If ESC key pressed exit loop if( (cvWaitKey(10) & 255) == 27 ) break; } // Release the capture device cvReleaseCapture( &capture ); cvDestroyWindow( "OpenSURF" ); return 0;}

- 小玩OpenSURF图像识别 (C++)

- 小玩OpenSURF图像识别

- 小玩OpenSURF图像识别

- 小玩OpenSURF图像识别

- 小玩OpenSURF图像识别

- OpenSURF图像识别

- [置顶] (有趣小程序) Java OCR 图像识别

- C#WinForm-OCRTessnet图像识别

- 图像库---Image Datasets---OpenSift源码---openSurf源码

- 【C#】身份证识别(二):提取目标区域图像

- 玩转矩阵的C小加

- 玩转矩阵的C小加

- 玩转矩阵的C小加

- Android 不规则图像填充 小玩着色游戏

- Android 不规则图像填充 小玩着色游戏

- Android 不规则图像填充 小玩着色游戏

- Android 不规则图像填充 小玩着色游戏

- Android 不规则图像填充 小玩着色游戏

- 补充 OSGI bundle的知识

- CouchDB与MySQL的选择

- android view的setVisibility方法值的意思

- JSP中文验证码

- sicily--1317(深度优先搜索)

- 小玩OpenSURF图像识别 (C++)

- 浅谈S3C2440的中断寄存器及中断过程

- Javascript 三种合并数组方法!

- 如何连接多张原理图

- c++设计模式之单例模式

- typeid

- JS获取当前时间戳的方法-JavaScript 获取当前时间戳

- poj 1625 Censored!

- Document对象内容集合