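An outstanding student paper at ACM MM 2010, "A New Approach To Cross-Modal Multimedia Retrieval", relies mainly on CCA (canonical correlation analysis). I came across JerryLead's blog post on CCA today and am reposting it here. First, an introduction to the paper.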

Cross-Modal Multimedia Retrieval

Starting from the extensive literature available on text and image analysis, including the representation of documents as bags of features (word histograms for text, SIFT histograms for images) and the use of topic models (such as latent Dirichlet allocation) to extract low-dimensionality generalizations from document corpora, we build on these representations to design a joint model for images and text. The performance of this model is evaluated on a cross-modal retrieval problem that includes two tasks: 1) the retrieval of text documents in response to a query image, and 2) the retrieval of images in response to a query text. These tasks are central to many applications of practical interest, such as finding on the web the picture that best illustrates a given text (e.g., to illustrate a page of a story book), finding the texts that best match a given picture (e.g., a set of vacation accounts about a given landmark), or searching using a combination of text and images. We use performance on the retrieval tasks as an indirect measure of the model quality, under the intuition that the best model should produce the highest retrieval accuracies.

Whenever the image and text spaces have a natural correspondence, cross-modal retrieval reduces to a classical retrieval problem. However, the text component is represented as a sample from a hidden topic model, learned with latent Dirichlet allocation, and images are represented as bags of visual (SIFT) features. These representations evidently lack a common feature space. The question is therefore how to establish a correspondence between the feature spaces of the two modalities.

Two hypotheses are investigated: that 1) there is a benefit to explicitly modeling correlations between the two components, and 2) this modeling is more effective in feature spaces with higher levels of abstraction.

To test the first hypothesis, correlations between the two components are learned with canonical correlation analysis. For the second hypothesis, abstraction is achieved by representing text and images at a more general, semantic level. These two hypotheses are studied in the context of the task of cross-modal document retrieval. This includes retrieving the text that most closely matches a query image, or retrieving the images that most closely match a query text. It is shown, independently, that accounting for cross-modal correlations and semantic abstraction both improve retrieval accuracy. In fact, a combination of the two hypotheses, which we define as Semantic Correlation Matching, produces the best results for cross-modal retrieval.

Database:

We have collected the following dataset for cross-modal retrieval experiments. The collected documents are selected sections from Wikipedia's featured articles collection. This is a continuously growing dataset that, at the time of collection (October 2009), had 2,669 articles spread over 29 categories. Some of the categories are very scarce, so we considered only the 10 most populated ones. The articles generally have multiple sections and pictures. We split them into sections based on section headings and assigned each image to the section in which it was placed by the author(s). The dataset was then pruned to keep only sections that contained a single image and at least 70 words.

The final corpus contains 2,866 multimedia documents. The median text length is 200 words.

Publications:

A New Approach to Cross-Modal Multimedia Retrieval (Best student paper award, ACM-MM 2010)

N. Rasiwasia, J. Costa Pereira, E. Coviello, G. Doyle, G.R.G. Lanckriet, R. Levy and N. Vasconcelos

ACM Proceedings of the 18th International Conference on Multimedia

Presentations:

A New Approach to Cross-Modal Multimedia Retrieval

N. Rasiwasia

ACM Proceedings of the 18th International Conference on Multimedia

Florence, Italy. October 27, 2010.

The reposted CCA article follows:


1. The problem

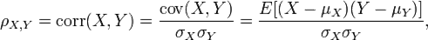

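In linear regression we fit a straight line to the sample points, looking for a linear relationship between an n-dimensional feature vector $X$ and an output (or label) $Y$, where $X \in \mathbb{R}^n$ and $Y \in \mathbb{R}$. When $Y$ is also multidimensional, that is, when $Y$ has several features of its own, we would like to analyze the relationship between $X$ and $Y$.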

Of course, we can still analyze this with regression, as follows:

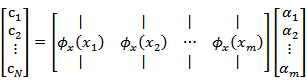

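Suppose $X \in \mathbb{R}^n$ and $Y \in \mathbb{R}^m$. We can then set up the equation $Y = AX$ as follows:

$$
\begin{bmatrix} y_1 \\ \vdots \\ y_m \end{bmatrix}
=
\begin{bmatrix} w_{11} & \cdots & w_{1n} \\ \vdots & & \vdots \\ w_{m1} & \cdots & w_{mn} \end{bmatrix}
\begin{bmatrix} x_1 \\ \vdots \\ x_n \end{bmatrix}
$$

where each $y_i = w_i^T x$ has the same form as linear regression, so we need to train $m$ times to obtain the $m$ weight vectors $w_i$.

Another way to look at the problem is to treat $X$ and $Y$ each as a whole, represent each whole as a linear combination of its own features, and study the relationship between $a^T x$ and $b^T y$.

A simple example: we want to study the relationship between a person's problem-solving ability $X$ (solving speed $x_1$, solving accuracy $x_2$) and reading ability $Y$ (reading speed $y_1$, comprehension $y_2$). Formally, we consider

$$u = a_1 x_1 + a_2 x_2 \quad\text{and}\quad v = b_1 y_1 + b_2 y_2$$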

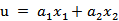

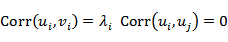

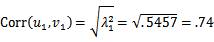

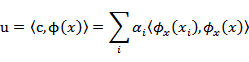

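We then use the Pearson correlation coefficient

$$\mathrm{Corr}(u, v) = \frac{\mathrm{Cov}(u, v)}{\sqrt{\mathrm{Var}(u)\,\mathrm{Var}(v)}}$$

to measure the relationship between $u$ and $v$. We look for the optimal $a$ and $b$ that maximize $\mathrm{Corr}(u, v)$; these are the weights under which $u$ and $v$ are most strongly correlated.

That, in essence, is the goal of canonical correlation analysis.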
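
To make the objective concrete, here is a minimal sketch that evaluates Corr(u, v) for two candidate weight choices. The data are synthetic stand-ins for the problem-solving and reading features (an assumption, not data from the post), and `corr_uv` is a hypothetical helper name.

```python
import numpy as np

rng = np.random.default_rng(0)

# Synthetic stand-ins (assumed data): column 0 of X and of Y share a latent
# "ability" factor, column 1 of each is independent noise.
n_samples = 500
latent = rng.normal(size=n_samples)
X = np.column_stack([latent + rng.normal(size=n_samples),
                     rng.normal(size=n_samples)])
Y = np.column_stack([latent + rng.normal(size=n_samples),
                     rng.normal(size=n_samples)])

def corr_uv(a, b, X, Y):
    """Pearson correlation between u = X a and v = Y b."""
    u = X @ a
    v = Y @ b
    return float(np.corrcoef(u, v)[0, 1])

# Weights on the related features versus weights on the unrelated ones.
good = corr_uv(np.array([1.0, 0.0]), np.array([1.0, 0.0]), X, Y)
poor = corr_uv(np.array([0.0, 1.0]), np.array([0.0, 1.0]), X, Y)
print(good, poor)
```

Rescaling `a` or `b` by a positive constant leaves the correlation unchanged, which is why the optimization later needs a normalization constraint.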

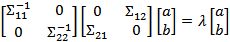

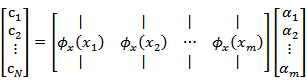

2. Formulating and solving CCA

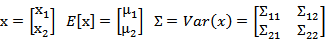

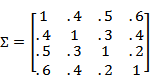

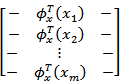

给定两组向量 (替换之前的x为

(替换之前的x为![clip_image034[1] clip_image034[1]](http://images.cnblogs.com/cnblogs_com/jerrylead/201106/201106202016063833.png) ),

), ,

, ,默认

,默认

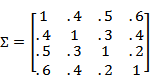

![clip_image032[3] clip_image032[3]](http://images.cnblogs.com/cnblogs_com/jerrylead/201106/20110620201610641.png) 自己的协方差矩阵;右上角是

自己的协方差矩阵;右上角是 ,也是

,也是![clip_image034[3] clip_image034[3]](http://images.cnblogs.com/cnblogs_com/jerrylead/201106/201106202016127459.png) 的协方差矩阵。

的协方差矩阵。

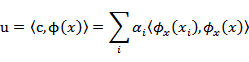

As before, we treat $x_1$ and $x_2$ each as a whole and define

$$u = a^T x_1,\qquad v = b^T x_2$$

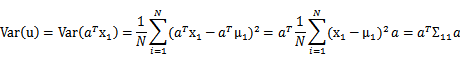

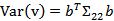

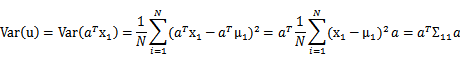

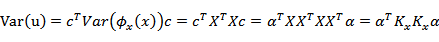

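We can compute the variances and covariance of $u$ and $v$:

$$\mathrm{Var}(u) = a^T \Sigma_{11} a,\qquad \mathrm{Var}(v) = b^T \Sigma_{22} b,\qquad \mathrm{Cov}(u, v) = a^T \Sigma_{12} b$$

Finally, we need $\mathrm{Corr}(u, v)$:

$$\mathrm{Corr}(u, v) = \frac{a^T \Sigma_{12} b}{\sqrt{a^T \Sigma_{11} a}\,\sqrt{b^T \Sigma_{22} b}}$$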
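
The block formulas can be checked numerically. The sketch below uses made-up data and arbitrary weights `a` and `b` (all assumptions) and compares the covariance-block expressions with the directly computed sample statistics of u and v.

```python
import numpy as np

rng = np.random.default_rng(1)
X1 = rng.normal(size=(1000, 3))
X2 = X1[:, :2] @ np.array([[1.0, 0.5], [0.0, 1.0]]) + rng.normal(size=(1000, 2))

# Sample covariance of the stacked vector x = (x1, x2), split into blocks.
S = np.cov(np.hstack([X1, X2]), rowvar=False)
p1 = X1.shape[1]
S11, S12, S22 = S[:p1, :p1], S[:p1, p1:], S[p1:, p1:]

a = np.array([0.3, -1.0, 2.0])   # arbitrary weights, for illustration only
b = np.array([1.5, 0.7])
u, v = X1 @ a, X2 @ b

var_u = a @ S11 @ a              # Var(u) = a^T S11 a
var_v = b @ S22 @ b              # Var(v) = b^T S22 b
cov_uv = a @ S12 @ b             # Cov(u, v) = a^T S12 b
corr_formula = cov_uv / np.sqrt(var_u * var_v)
corr_direct = np.corrcoef(u, v)[0, 1]
print(corr_formula, corr_direct)
```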

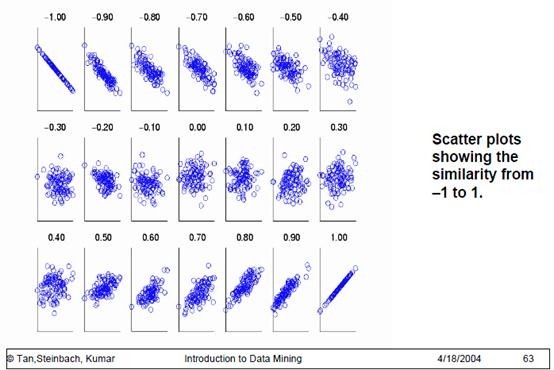

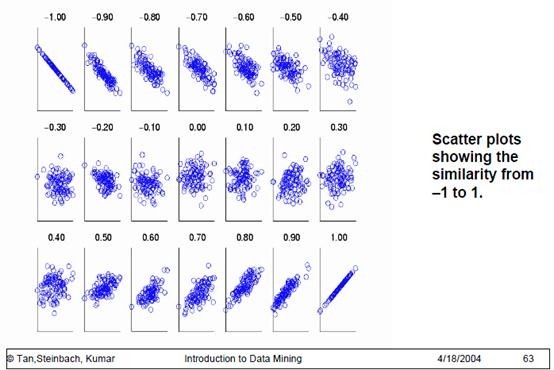

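In the scatter plots, the horizontal axis is $u$ and the vertical axis is $v$. We want to adjust $a$ and $b$ so that the relationship between $u$ and $v$ looks as much like the last plot as possible. The first and last plots are in fact related: flipping the sign of $a$ or $b$ turns the first into the last.

Next we solve for $a$ and $b$.

Recall that in LDA (linear discriminant analysis) we obtained a formula similar to $\mathrm{Corr}(u, v)$. There we fixed the denominator and optimized the numerator, which avoids the situation where $a$ and $b$ scaled by the same factor still satisfy the solution conditions. We do the same here.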

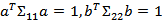

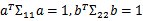

The optimization problem is:

Maximize $a^T \Sigma_{12} b$

Subject to: $a^T \Sigma_{11} a = 1$, $b^T \Sigma_{22} b = 1$

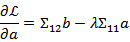

求解方法是构造Lagrangian等式,这里我简单推导如下:

,第二个左乘

,第二个左乘 ,得到

,得到

即是Corr(u,v),只需找最大

即是Corr(u,v),只需找最大

令

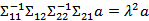

显然,又回到了求特征值的老路上了,只要求得 ,那么Corr(u,v)和a和b都可以求出。

,那么Corr(u,v)和a和b都可以求出。
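
A minimal sketch of this block eigenproblem, assuming synthetic data and the sample covariance in place of $\Sigma$. The variable names are illustrative; the check at the end confirms that the largest eigenvalue equals Corr(u, v) for the recovered a and b.

```python
import numpy as np

rng = np.random.default_rng(2)
n = 2000
z = rng.normal(size=(n, 1))                       # shared latent factor
X1 = np.hstack([z + 0.5 * rng.normal(size=(n, 1)),
                rng.normal(size=(n, 1))])
X2 = np.hstack([rng.normal(size=(n, 1)),
                z + 0.5 * rng.normal(size=(n, 1)),
                rng.normal(size=(n, 1))])

S = np.cov(np.hstack([X1, X2]), rowvar=False)
p1, p2 = X1.shape[1], X2.shape[1]
S11, S12 = S[:p1, :p1], S[:p1, p1:]
S21, S22 = S[p1:, :p1], S[p1:, p1:]

# A has the cross-covariances off the diagonal, B the within-set covariances.
A = np.block([[np.zeros((p1, p1)), S12], [S21, np.zeros((p2, p2))]])
B = np.block([[S11, np.zeros((p1, p2))], [np.zeros((p2, p1)), S22]])

# Eigen-decomposition of B^{-1} A (solve avoids forming the inverse explicitly).
eigvals, eigvecs = np.linalg.eig(np.linalg.solve(B, A))
eigvals, eigvecs = eigvals.real, eigvecs.real
top = np.argmax(eigvals)
lam = eigvals[top]
a, b = eigvecs[:p1, top], eigvecs[p1:, top]       # w = (a, b)

corr = (a @ S12 @ b) / np.sqrt((a @ S11 @ a) * (b @ S22 @ b))
print(lam, corr)
```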

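In the derivation above we assumed that $\Sigma_{11}$ and $\Sigma_{22}$ are both invertible. They usually are; they fail to be invertible only when some features are linearly dependent. How to handle the non-invertible case is discussed at the end of this article.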

再次审视一下,如果直接去计算

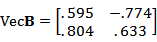

这样先对 和特征向量

和特征向量![clip_image090[2] clip_image090[2]](http://images.cnblogs.com/cnblogs_com/jerrylead/201106/201106202016286493.png) 最大时的

最大时的 。那么

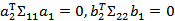

。那么![clip_image120[1] clip_image120[1]](http://images.cnblogs.com/cnblogs_com/jerrylead/201106/20110620201631901.png) 称为典型变量(canonical variates),

称为典型变量(canonical variates),
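
The procedure can be sketched as a small function. This is an illustrative implementation under stated assumptions: sample covariances stand in for $\Sigma$, both within-set blocks are invertible, and every canonical correlation is nonzero. The name `cca` and the test data are hypothetical.

```python
import numpy as np

def cca(X1, X2):
    """Return canonical correlations and weight matrices (one column per pair)."""
    p1 = X1.shape[1]
    S = np.cov(np.hstack([X1, X2]), rowvar=False)
    S11, S12 = S[:p1, :p1], S[:p1, p1:]
    S21, S22 = S[p1:, :p1], S[p1:, p1:]

    # M = S11^{-1} S12 S22^{-1} S21; its eigenvalues are lambda^2.
    M = np.linalg.solve(S11, S12) @ np.linalg.solve(S22, S21)
    lam2, vecs = np.linalg.eig(M)
    order = np.argsort(-lam2.real)
    lam = np.sqrt(np.clip(lam2.real[order], 0.0, 1.0))
    A = vecs.real[:, order]

    # b is proportional to S22^{-1} S21 a; its scale is fixed just below.
    B = np.linalg.solve(S22, S21 @ A)

    # Rescale so that a^T S11 a = 1 and b^T S22 b = 1 for every column.
    A = A / np.sqrt(np.sum(A * (S11 @ A), axis=0))
    B = B / np.sqrt(np.sum(B * (S22 @ B), axis=0))
    return lam, A, B

rng = np.random.default_rng(3)
n = 2000
z = rng.normal(size=(n, 2))
X1 = z + 0.5 * rng.normal(size=(n, 2))
X2 = np.hstack([z[:, :1], 0.5 * z[:, 1:], np.zeros((n, 1))]) + rng.normal(size=(n, 3))

lam, A, B = cca(X1, X2)
U, V = X1 @ A, X2 @ B      # columns are the canonical variates u_i and v_i
print(lam)
```

With this scaling the sample covariance of the columns of `U` (and of `V`) is the identity, so different canonical variates within a set are uncorrelated.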

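We can go on to look for a second pair of canonical variates, with the optimization problem

Maximize $a_2^T \Sigma_{12} b_2$

Subject to:

$$a_2^T \Sigma_{11} a_2 = 1,\qquad b_2^T \Sigma_{22} b_2 = 1$$

$$a_2^T \Sigma_{11} a_1 = 0,\qquad b_2^T \Sigma_{22} b_1 = 0$$

The second group of constraints is simply $\mathrm{Cov}(u_2, u_1) = 0$ and $\mathrm{Cov}(v_2, v_1) = 0$.

The computation is the same as for the first pair, except that $\lambda^2$ is taken to be the second-largest eigenvalue of $\Sigma_{11}^{-1} \Sigma_{12} \Sigma_{22}^{-1} \Sigma_{21}$.

The resulting $a_2$ and $b_2$ also satisfy $a_2^T \Sigma_{12} b_1 = 0$ and $b_2^T \Sigma_{21} a_1 = 0$, that is, $\mathrm{Cov}(u_2, v_1) = 0$ and $\mathrm{Cov}(v_2, u_1) = 0$.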

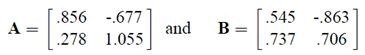

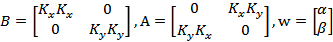

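3. A worked CCA example

Return to the earlier example of relating a person's problem-solving ability to reading ability, and suppose the covariance matrix $\Sigma$ has been computed from the samples. We then compute $\Sigma_{11}^{-1} \Sigma_{12} \Sigma_{22}^{-1} \Sigma_{21}$ and call the result $A$.

This $A$ is not the same as the $A$ in $B^{-1}A$ above (the notation is a bit messy here, sorry).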

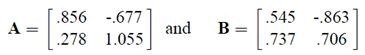

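Next we compute the eigenvalues and eigenvectors of $A$; the eigenvectors form the columns of a matrix referred to below as VecA.

Then we solve for $b$. Here we can use a method similar to the one used for $a$.

Recall the earlier equations

$$\Sigma_{11}^{-1} \Sigma_{12} b = \lambda a,\qquad \Sigma_{22}^{-1} \Sigma_{21} a = \lambda b$$

Substituting the first into the second gives

$$\Sigma_{22}^{-1} \Sigma_{21} \Sigma_{11}^{-1} \Sigma_{12}\, b = \lambda^2 b$$

so we can directly compute the eigenvectors of $\Sigma_{22}^{-1} \Sigma_{21} \Sigma_{11}^{-1} \Sigma_{12}$. Note that $\Sigma_{22}^{-1} \Sigma_{21} \Sigma_{11}^{-1} \Sigma_{12}$ and $\Sigma_{11}^{-1} \Sigma_{12} \Sigma_{22}^{-1} \Sigma_{21}$ have the same eigenvalues; you can prove this yourself.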
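
The claim about shared eigenvalues is easy to check numerically. The sketch below uses synthetic data with two features per set (an assumption; the post's own numbers are not reproduced here).

```python
import numpy as np

rng = np.random.default_rng(4)
n = 1000
X1 = rng.normal(size=(n, 2))
X2 = X1 @ rng.normal(size=(2, 2)) + rng.normal(size=(n, 2))

S = np.cov(np.hstack([X1, X2]), rowvar=False)
S11, S12, S21, S22 = S[:2, :2], S[:2, 2:], S[2:, :2], S[2:, 2:]

P = np.linalg.solve(S11, S12)    # S11^{-1} S12
Q = np.linalg.solve(S22, S21)    # S22^{-1} S21
Ma = P @ Q                       # matrix whose eigenvectors are the a's
Mb = Q @ P                       # matrix whose eigenvectors are the b's

eig_a = np.sort(np.linalg.eigvals(Ma).real)
eig_b = np.sort(np.linalg.eigvals(Mb).real)
print(eig_a, eig_b)
```

The two matrices are the products `P @ Q` and `Q @ P` of the same pair of factors, which is the key to the proof. When the two sets have different numbers of features, only the nonzero eigenvalues coincide.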

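Whichever method is used, we now have two sets of vectors for $a$ and $b$. We are not done yet: they must also satisfy the earlier constraints

$$a^T \Sigma_{11} a = 1,\qquad b^T \Sigma_{22} b = 1$$

The $a$ here should be $m$ times a column vector of the VecA obtained earlier, so we only need to find $m$ and multiply the column of VecA by it:

$$m^2\, a'^T \Sigma_{11} a' = 1 \quad\Longrightarrow\quad m = \frac{1}{\sqrt{a'^T \Sigma_{11} a'}}$$

where $a'$ is a column vector of VecA. The same rescaling is applied to $b$ with $\Sigma_{22}$.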
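
A minimal sketch of this rescaling step. The covariance block `S11` and the eigenvector matrix `vec_a` are made-up numbers for illustration, and `rescale_columns` is a hypothetical helper name.

```python
import numpy as np

def rescale_columns(vec_a, S11):
    """Scale each column a' of vec_a by m = 1 / sqrt(a'^T S11 a')."""
    quad = np.sum(vec_a * (S11 @ vec_a), axis=0)   # a'^T S11 a' for each column
    m = 1.0 / np.sqrt(quad)
    return vec_a * m, m

S11 = np.array([[2.0, 0.5],
                [0.5, 1.0]])          # made-up covariance block
vec_a = np.array([[0.6, -0.8],
                  [0.8, 0.6]])        # made-up unit-length eigenvectors
a_scaled, m = rescale_columns(vec_a, S11)
print(np.diag(a_scaled.T @ S11 @ a_scaled))
```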

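The first pair of canonical variates, $u_1$ and $v_1$, uses the rescaled $a$ and $b$ belonging to the largest eigenvalue; the second pair, $u_2$ and $v_2$, uses those belonging to the second-largest eigenvalue.

Here $x_1$ is solving speed, $x_2$ is solving accuracy, $y_1$ is reading speed and $y_2$ is reading comprehension. The coefficients in front of them do not express how much each feature contributes to $u$ or $v$ individually. Rather, looking at the relationship between $u$ and $v$ as a whole, they are the feature weights at which the two are most closely related.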

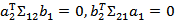

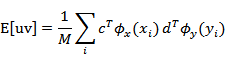

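4. Kernel Canonical Correlation Analysis (KCCA)

When linear combinations of the features do not work well enough, or when the relationship between the two sets is nonlinear, we usually try kernel methods. Here we go on to introduce kernel CCA.

The post "Support Vector Machines: Kernel Functions" gave a rough introduction to kernel functions; here is a brief recap: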

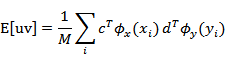

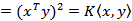

当我们对两个向量作内积的时候

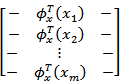

,

, 和

和![clip_image204[1] clip_image204[1]](http://images.cnblogs.com/cnblogs_com/jerrylead/201106/201106202016499785.png) 特征向量为

特征向量为

如果![clip_image200[1] clip_image200[1]](http://images.cnblogs.com/cnblogs_com/jerrylead/201106/201106202016517475.png) 的构造一样,那么

的构造一样,那么

这样,仅通过计算x和y的内积的平方就可以达到在高维空间(这里为 和

和

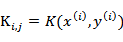

即第 列的元素是第

列的元素是第![clip_image226[1] clip_image226[1]](http://images.cnblogs.com/cnblogs_com/jerrylead/201106/201106202016556857.png) 个样例在核函数下的内积。

个样例在核函数下的内积。
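
The identity can be verified with a few lines of code. This sketch assumes the degree-2 feature map made of all products $x_i x_j$; the names `phi`, `kernel` and `gram_matrix` are illustrative.

```python
import numpy as np

def phi(x):
    """Explicit degree-2 feature map: all n*n products x_i * x_j."""
    return np.outer(x, x).ravel()

def kernel(x, y):
    """Same inner product, computed in the original space: <x, y>^2."""
    return float(np.dot(x, y)) ** 2

def gram_matrix(samples, k):
    """Kernel matrix with K[i, j] = k(sample_i, sample_j)."""
    m = len(samples)
    return np.array([[k(samples[i], samples[j]) for j in range(m)]
                     for i in range(m)])

x = np.array([1.0, 2.0, 3.0])
y = np.array([0.5, -1.0, 2.0])

lhs = float(np.dot(phi(x), phi(y)))   # inner product in the 9-dimensional space
rhs = kernel(x, y)                    # (0.5 - 2 + 6)^2 = 20.25
K = gram_matrix([x, y], kernel)
print(lhs, rhs)
```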

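A good definition of a kernel function: a kernel is a function $K$ that satisfies $K(x, y) = \langle \phi(x), \phi(y) \rangle$ for all $x$ and $y$, where a sample $x$ with $n$ features is lifted by the transformation $\phi$ from $n$ features to $N$ features.

Returning to CCA: before introducing a kernel we had $u = a^T x$ and $v = b^T y$. With a kernel, $x$ and $y$ are mapped to $\phi(x)$ and $\phi(y)$, and the formulas for $u$ and $v$ become

$$u = c^T \phi(x),\qquad v = d^T \phi(y)$$

where $c$ and $d$ here are $N$-dimensional weight vectors.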

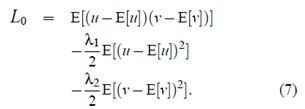

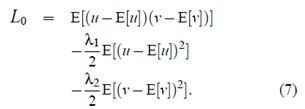

其中

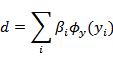

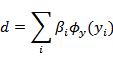

然后让L对a求导,令导数等于0,得到(这一步我没有验证,待会从宏观上解释一下)

求出c和d干嘛呢?c和d只是 和

和 ,然后用K替换之,根本没有打算去计算出实际的

,然后用K替换之,根本没有打算去计算出实际的![clip_image258[2] clip_image258[2]](http://images.cnblogs.com/cnblogs_com/jerrylead/201106/20110620201703747.png) 让我们去做

让我们去做![clip_image260[1] clip_image260[1]](http://images.cnblogs.com/cnblogs_com/jerrylead/201106/20110620201704290.png) 和

和![clip_image258[3] clip_image258[3]](http://images.cnblogs.com/cnblogs_com/jerrylead/201106/201106202017052058.png) 将

将![clip_image262[2] clip_image262[2]](http://images.cnblogs.com/cnblogs_com/jerrylead/201106/201106202017072506.png) 上升到高维,他们在高维对应的权重就是c和d。

上升到高维,他们在高维对应的权重就是c和d。

Although $\alpha$ and $\beta$ live in the original space (their dimension is the number of samples $M$), they act on the original samples rather than on the original features, as the formulas for $c$ and $d$ show.

$\phi(x_i)$ denotes the $N$-dimensional vector to which the $i$-th sample is lifted. Stacking these vectors as rows, $X = (\phi(x_1), \dots, \phi(x_M))^T$ and $Y = (\phi(y_1), \dots, \phi(y_M))^T$, the formulas can be abbreviated as

$$c = X^T \alpha,\qquad d = Y^T \beta$$

We find that, on the training samples,

$$u = X c = X X^T \alpha = K_x \alpha,\qquad v = Y d = Y Y^T \beta = K_y \beta$$

where $K_x = X X^T$ and $K_y = Y Y^T$ are the kernel matrices.

The lifted spaces for $x$ and $y$ may have different dimensions.

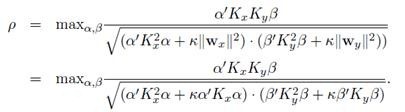

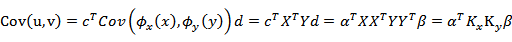

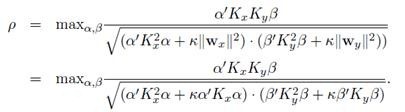

Finally, we obtain

$$\mathrm{Corr}(u, v) = \frac{\alpha^T K_x K_y \beta}{\sqrt{\alpha^T K_x^2 \alpha \cdot \beta^T K_y^2 \beta}}$$

Compared with the linear case, $\Sigma_{11}$, $\Sigma_{12}$ and $\Sigma_{22}$ have each been replaced by a product of two $K$ matrices ($K_x K_x$, $K_x K_y$ and $K_y K_y$).

The result therefore has the same form. Before, we had

$$\Sigma_{11}^{-1} \Sigma_{12} \Sigma_{22}^{-1} \Sigma_{21}\, a = \lambda^2 a$$

After introducing the kernel function, we get

$$(K_x K_x)^{-1} K_x K_y (K_y K_y)^{-1} K_y K_x\, \alpha = \lambda^2 \alpha$$

Note that the weight vectors in the two equations differ. In the first, the weight for $y$ has as many entries as $y$ has features; in the second, it has as many entries as there are samples of $y$.

5. Other topics

1) What if the covariance matrix is not invertible?

Apply regularization.

One approach is to add quadratic regularization terms to the Lagrangian of the KCCA above.
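
As an illustration, the sketch below implements one common regularized form of KCCA, in which each kernel matrix is shifted by $\kappa I$ before inversion and one solves $(K_x + \kappa I)^{-1} K_y (K_y + \kappa I)^{-1} K_x\, \alpha = \lambda^2 \alpha$. Whether this matches the exact regularizer intended in the post is an assumption; the RBF kernel, the value of `kappa`, the data and all function names are also illustrative choices.

```python
import numpy as np

def rbf_gram(X, gamma=1.0):
    """Gaussian RBF kernel matrix for the rows of X."""
    sq = np.sum(X ** 2, axis=1)
    d2 = sq[:, None] + sq[None, :] - 2.0 * X @ X.T
    return np.exp(-gamma * np.maximum(d2, 0.0))

def center_gram(K):
    """Center the kernel matrix, i.e. center the data in feature space."""
    m = K.shape[0]
    H = np.eye(m) - np.ones((m, m)) / m
    return H @ K @ H

def regularized_kcca(Kx, Ky, kappa=0.1):
    """Solve (Kx + kappa I)^{-1} Ky (Ky + kappa I)^{-1} Kx alpha = lambda^2 alpha."""
    I = np.eye(Kx.shape[0])
    M = np.linalg.solve(Kx + kappa * I, Ky) @ np.linalg.solve(Ky + kappa * I, Kx)
    lam2, alphas = np.linalg.eig(M)
    order = np.argsort(-lam2.real)
    lam = np.sqrt(np.clip(lam2.real[order], 0.0, None))
    return lam, alphas.real[:, order]

rng = np.random.default_rng(5)
m = 60
x = rng.uniform(-2.0, 2.0, size=(m, 1))
y = x ** 2 + 0.1 * rng.normal(size=(m, 1))      # nonlinear relation between sets

Kx = center_gram(rbf_gram(x))
Ky = center_gram(rbf_gram(y))
lam, alphas = regularized_kcca(Kx, Ky, kappa=0.1)
print(lam[:3])
```

Without the $\kappa I$ terms and with invertible kernel matrices, the matrix in the eigenproblem collapses to the identity and every $\lambda$ equals 1, which is one way to see why regularization is needed here.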

2) What if computing the kernel matrix is inefficient?

Use compression based on Cholesky decomposition, or partial Gram-Schmidt orthogonalization.

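3) How can CCA be used for prediction?

First find the canonical correlation coefficients of X and Y. For a new sample Xnew, run KNN in X, find the N corresponding samples in Y, and predict Ynew from their mean or weighted mean.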
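
One possible reading of this recipe is sketched below. It assumes the nearest neighbours are searched in the canonical projection of X rather than in the raw features (the text does not say which), uses an unweighted mean, and runs on synthetic data. `cca_x_weights` and `knn_predict` are hypothetical names.

```python
import numpy as np

def cca_x_weights(X, Y):
    """Canonical weights for X, columns ordered by decreasing correlation."""
    p = X.shape[1]
    S = np.cov(np.hstack([X, Y]), rowvar=False)
    S11, S12, S21, S22 = S[:p, :p], S[:p, p:], S[p:, :p], S[p:, p:]
    M = np.linalg.solve(S11, S12) @ np.linalg.solve(S22, S21)
    lam2, vecs = np.linalg.eig(M)
    A = vecs.real[:, np.argsort(-lam2.real)]
    return A / np.sqrt(np.sum(A * (S11 @ A), axis=0))

def knn_predict(X, Y, x_new, k=5, n_components=1):
    """Average the Y rows of the k training samples nearest to x_new,
    with distances measured in the canonical projection of X."""
    A = cca_x_weights(X, Y)[:, :n_components]
    mean = X.mean(axis=0)
    U = (X - mean) @ A
    u_new = (x_new - mean) @ A
    nearest = np.argsort(np.linalg.norm(U - u_new, axis=1))[:k]
    return Y[nearest].mean(axis=0)

rng = np.random.default_rng(6)
X = rng.normal(size=(2000, 3))
s = X @ np.array([1.0, 2.0, -1.0])               # only this direction of X matters
Y = np.column_stack([s, 2.0 * s]) + 0.05 * rng.normal(size=(2000, 2))

x_new = np.array([1.0, 0.5, 0.0])                # its projection s is 2.0
pred = knn_predict(X, Y, x_new, k=10)
print(pred)
```

Searching in the projected space means neighbours are compared only along directions of X that are correlated with Y, which is the design choice this sketch is meant to illustrate.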

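4) What if there are more than two sets, X, Y, Z, and so on? How do we measure the relationship among several sample sets?

This is known as the generalization of canonical correlation. The approach is to minimize the sum of the pairwise distances between the sets. See reference 2.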

6. References

1. http://www.stat.tamu.edu/~rrhocking/stat636/LEC-9.636.pdf

2. David R. Hardoon, Sandor Szedmak and John Shawe-Taylor. Canonical correlation analysis: An overview with application to learning methods.

3. Shotaro Akaho. A kernel method for canonical correlation analysis.

4. Magnus Borga. Canonical Correlation: a Tutorial.

5. Max Welling. Kernel Canonical Correlation Analysis.