Open Source Rendering Library

Open source

OpenVDB, Alembic, OpenEXR 2, Open Shading Language (OSL), Cortex and other open source initiatives have really taken off in the last few years. For some companies this is a great opportunity; a few others are considering them but have higher priorities. No one is ignoring them.

A scene from The Croods using OpenVDB.

With online communities and tools such as GitHub, people around the world have worked together to move in-house projects and standardizations into the public open source community. While most of this is based on genuine community motivation, the growth of patent trolls and the costs of isolated development have also contributed.

Companies such as The Foundry, who excel at commercializing in-house projects into mainstream products such as Nuke, Katana, Mari and now FLIX, have also been key in adopting and helping to ratify standards such as OpenColorIO, Alembic and OpenEXR 2. This partnership between the vendors and the big facilities has huge advantages for the smaller players too. Several smaller render companies expressed complete support for such open standards, one might even say exuberance: they feel they cannot compete with the big companies, but when open standards are adopted, smaller players can fit correctly and neatly into bigger production pipelines. In reference to open source in general, Juan Cañada, the Head of Maxwell Render Technology, commented, “this is something we have been praying for. We are not Autodesk, we are not even The Foundry. We are smaller, and we never force people to use just our file formats, or a proprietary approach to anything, so anything that is close to a standard for us is a blessing. As soon as Alembic came along we supported that, the same with OpenVDB, OpenEXR etc. For a medium sized company like us, it is super important that people follow standards, and from the user’s point of view we understand this is even more important. It is critical. We have committed ourselves to follow standards as much as possible.”

Below are some of the key open source initiatives relevant to rendering that we have not included in our round-up of the main players (see part 2) but that will be covered from SIGGRAPH. Others were excluded due to current levels of adoption, such as OpenRL (Caustic by Imagination), which as we understand it is currently supported only by Brazil, but which aims to provide a low-level abstraction for ray tracing across GPUs and CPUs.

OpenEXR 2 (major support from ILM)

The most powerful and valuable open source standard to have impacted rendering would have to be the OpenEXR and OpenEXR 2 file formats. Really a container of data, the format has exploded as the floating point file format of choice, and in recent times has expanded further to cover stereo and the storing of deep color or deep compositing data. The near universal acceptance of OpenEXR as the floating point big brother of the DPX/Cineon file format/data container has been the lighthouse of inspiration that has fathered so much of the open source community in our field. But more than that, it has been central to the collaborative workflow that allows facilities all over the world to work together. Steered and supported by ILM and added to by Weta Digital, arguably the two most important visual effects facilities in the world, the standard has been successful and has been expanded to stay relevant.

OpenEXR 2.0 was recently released with major work from Weta and ILM.

Although OpenEXR 2.0 is a major version update, files created by the new library that don’t exercise the new feature set are completely backwards compatible with previous versions of the library.
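To make the idea of deep compositing data a little more concrete, here is a minimal sketch in Python. It is not the OpenEXR API: the `DeepSample` class and the `merge_deep` and `flatten` functions are hypothetical names chosen for illustration. The sketch assumes each pixel stores a list of point samples, each with a depth, a premultiplied color and an alpha, and it ignores volumetric samples that span a depth range.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class DeepSample:
    depth: float                      # distance from the camera
    rgb: Tuple[float, float, float]   # premultiplied colour
    alpha: float                      # opacity of this sample


def merge_deep(a: List[DeepSample], b: List[DeepSample]) -> List[DeepSample]:
    """Combine two deep pixels by interleaving their samples in depth order."""
    return sorted(a + b, key=lambda s: s.depth)


def flatten(samples: List[DeepSample]) -> Tuple[Tuple[float, float, float], float]:
    """Collapse a deep pixel to one colour, front to back, with 'over'."""
    r = g = b = alpha = 0.0
    for s in sorted(samples, key=lambda s: s.depth):
        remaining = 1.0 - alpha       # light not yet blocked by nearer samples
        r += s.rgb[0] * remaining
        g += s.rgb[1] * remaining
        b += s.rgb[2] * remaining
        alpha += s.alpha * remaining
    return (r, g, b), alpha
```

Because the samples keep their depth, two separately rendered elements (say, a smoke volume and a character) can be merged after the fact without holdout mattes: the merge simply sorts the samples, and the flatten step decides what ends up in front.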

Weta Digital uses deep compositing with RenderMan: Man of Steel (2013).

RenderMan from Pixar fully supports deep color and they have worked very successfully with Weta Digital who use deep color on most if not now all productions including the complex work for the battle on Krypton for Man of Steel.

Arnold does not support deep color / deep data compositing ‘out of the box’, but for select clients such as ILM, Solid Angle has developed special pipelines for shows such as Pacific Rim. It is Solid Angle’s intention to implement this more widely in a future version of Arnold. Next Limit has already implemented deep color in Maxwell Render; it is currently in beta and is expected to ship with the new Release 3.0, hopefully around October, along with Alembic and OpenEXR 2.0 support (Next Limit is committed to open source generally).

Side Effects Software’s Houdini is another open source supporter. “We are really excited about all the open source stuff that is coming out especially the Alembic, OpenVDB and OpenEXR 2, and the fact that the deep images support is there in OpenEXR 2.0 makes it really great for compositing volumes and the other things Houdini is so good at,” explains Mark Elendt, senior mathematician and very senior Side Effects team member, talking about Houdini’s forthcoming full support of OpenEXR 2.0. Deep compositing was driven by Weta, ILM and also The Foundry for Nuke compositing. But Nuke is scanline based and Side Effects is tile based, so there was a whole section of the spec and implementation that was somewhat untested. Side Effects worked very closely with the OpenEXR group to make sure the deep compositing workflow worked well with Houdini and other tiled solutions.

Alembic (major support from SPI/ILM)

Alembic has swept through the industry as one of the great success stories of open source. Baking out geometry reduces complexity and file size, and passes on a powerful but simplified version of an animation or performance, all in an agreed, standardized file format. It has been welcomed in almost every section of the rendering community. It allows for better file exchange between facilities, and better integration and faster operation inside facilities. Since its launch at SIGGRAPH 2010 and its public release at SIGGRAPH 2011 (see our coverage and video from the event), both facilities and equipment manufacturers have embraced it.

Alembic reduces data replication, and this feature alone gave a 48% reduction in disk usage on, for example, Men in Black 3 (an Imageworks show). ILM first adopted it studio-wide for their pipeline refresh as part of gearing up for the original Avengers, and they have used it ever since. It is easy to see why: SPI saw files on some productions drop from 87 GB to 173 MB.

Alembic was a joint effort from ILM and Sony, spearheaded by Tony Burnette and Rob Bredow, the two companies’ respective CTOs. Together they put forward a killer production solution with a strong code base, contributed to by many other studios and by commercial partners such as Pixar, The Foundry, Solid Angle, Autodesk and others, with product implementations such as Maya, Houdini, RenderMan, Arnold and Katana all joining from the outset. Since then, most other renderers and major packages have moved to support Alembic.

Alembic 1.5 will be released at SIGGRAPH 2013 with new support for multi-threading. The new version includes support for the Ogawa libraries. Unofficially, the new approach brings significant improvements:

1) File sizes are on average 5-15% smaller. Scenes with many small objects should see even greater reductions

2) Single-threaded reads average around 4x faster

3) Multi-threaded reads can improve by 25x (on 8 core systems)

Key developers seem pretty enthusiastic about it. Commenting on the next release, Mark Elendt from Side Effects says “it uses their Ogawa libraries, which is a huge efficiency over the HDF5 implementation that they had.”

The new system will maintain backwards compatibility. The official details should be published at SIGGRAPH 2013. Also being shown at SIGGRAPH is V-Ray’s support of Alembic, which is already in alpha or beta stage testing. Key customers already have access: “on our nightly builds we have Alembic support and OpenEXR 2 support,” commented Lon Grohs, business development manager of Chaos Group.

OpenVDB (major support from DreamWorks Animation)

OpenVDB is an open source (C++ library) standard for a new hierarchical data structure and a suite of tools for the efficient storage and manipulation of sparse volumetric data discretized on three-dimensional grids. In other words, it helps with volume rendering by being a better way to store volumetric data and access it. It comes with some great features, not the least of which is that it allows for an infinite volume, something hard to store normally (!).

A scene from DreamWorks Animation’s Puss in Boots.

It was developed and is supported by DreamWorks Animation, who use it in their volumetric applications in feature film production.

OpenVDB was developed by Ken Museth from DreamWorks Animation. He points out that dense volumes can carry a huge memory overhead and that it is slow to traverse the voxels when ray tracing. To solve this, people turned to sparse data storage. One stores only exactly what one needs, but then the problem becomes finding data in this new data structure.

There are two main methods commonly used in ray tracing now. The first is an octree (Brick Maps in RenderMan, for example, use this effectively for surfaces). While this is a common solution, with volumes these trees can get very “tall”, meaning that it is a long way from the root of the data to the leaf. A long data traversal means slow ray tracing, especially for random access. The second is a tile grid approach. This is much “flatter”, with just the root and immediately the leaf data, but it does not scale as it is a wide table. OpenVDB tries to balance these two methods by producing a fast data traversal that rarely requires more than 4 levels and is also scalable. This is needed because a volumetric data set can easily be tens of thousands of voxels or more. While this idea of employing a shallow, wide tree, a so-called B+ tree, has been used in databases such as Oracle and file systems (e.g. NTFS), OpenVDB is the first to apply it to the problem of compact and fast volumes.
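The shallow, wide tree idea can be sketched in a few lines of Python. This is an illustration only, not OpenVDB's implementation or API: the `SparseGrid` class, the node sizes (`LEAF_LOG2`, `INTERNAL_LOG2`) and the use of plain dictionaries for the upper levels are all assumptions made to keep the example short.

```python
from typing import Dict, List, Tuple

Coord = Tuple[int, int, int]

LEAF_LOG2 = 3       # assumed: a leaf covers 8 x 8 x 8 voxels
INTERNAL_LOG2 = 4   # assumed: an internal node covers 16 x 16 x 16 leaves


class SparseGrid:
    """Fixed-depth tree: root hash map -> internal node -> dense leaf block."""

    def __init__(self, background: float = 0.0) -> None:
        self.background = background
        # The root is a hash map, so the index space has no fixed bounds.
        self.root: Dict[Coord, Dict[Coord, List[float]]] = {}

    @staticmethod
    def _split(c: Coord) -> Tuple[Coord, Coord, int]:
        """Turn a voxel coordinate into (root key, leaf key, offset in leaf)."""
        node_bits = LEAF_LOG2 + INTERNAL_LOG2
        root_key = tuple(v >> node_bits for v in c)
        leaf_key = tuple((v >> LEAF_LOG2) & ((1 << INTERNAL_LOG2) - 1) for v in c)
        x, y, z = (v & ((1 << LEAF_LOG2) - 1) for v in c)
        offset = (x << (2 * LEAF_LOG2)) | (y << LEAF_LOG2) | z
        return root_key, leaf_key, offset

    def set(self, c: Coord, value: float) -> None:
        root_key, leaf_key, offset = self._split(c)
        internal = self.root.setdefault(root_key, {})
        leaf = internal.get(leaf_key)
        if leaf is None:
            # Allocate a leaf block only where data is actually written.
            leaf = internal[leaf_key] = [self.background] * (1 << (3 * LEAF_LOG2))
        leaf[offset] = value

    def get(self, c: Coord) -> float:
        root_key, leaf_key, offset = self._split(c)
        leaf = self.root.get(root_key, {}).get(leaf_key)
        return self.background if leaf is None else leaf[offset]

    def leaf_count(self) -> int:
        return sum(len(internal) for internal in self.root.values())
```

Every lookup takes the same small number of steps (root, internal node, leaf) however large the volume grows, which is the contrast with a tall octree. Empty space costs nothing because unallocated regions simply return the background value.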

Puss in Boots cloud environment.

On top of this OpenVDB provides a range of tools to work with the data structure.

The result of implementing OpenVDB is a much smaller memory footprint. Just how small? The memory footprint of one DreamWorks Animation model dropped from half a terabyte to less than a few hundred megabytes. And for a fluid simulation skinning (polygonization) operation that took an earlier Houdini version some 30 minutes per section, and had to be split into bins for memory reasons, “with OpenVDB it could all be done in around 10 seconds,” says Museth.

An additional advantage is that the volume can be dynamic (vs static), which lends itself very well to fluids, smoke, etc.

(See Museth’s SIGGRAPH 2013 paper.)

OpenVDB has had rapid adoption, most notably by Side Effects Software, who were first to publicly back the initiative, and additionally by Solid Angle (Arnold) and Pixar (RenderMan).

“The ease of integration was a huge factor in enabling us to introduce OpenVDB support,” says Chris Ford, RenderMan Business Director at Pixar Animation Studios. “The API is well thought out and enabled us to support the rendering requirements we think our customers need. The performance from threading and compact memory footprint is icing on the cake.”

“In addition to our Arnold core and Houdini-to-Arnold support of OpenVDB, we’re also pleased to announce planned support in Maya-to-Arnold and Softimage-to-Arnold package plugins,” said Marcos Fajardo, Solid Angle.

The use of DreamWorks Animation’s OpenVDB in Houdini was a key component in producing the many environmental effects in DreamWorks Animation’s movie The Croods. “The complexity of our clouds, explosions, and other volumetric effects could not have been done without the VDB Tools in Houdini,” said Matt Baer, Head of Effects for The Croods.

“The response to OpenVDB is overwhelmingly positive,” said Lincoln Wallen, CTO at DreamWorks Animation. “Feedback from our partners and the community has helped the team refine the toolset and create a robust release that is poised to set an industry standard.”

OpenVDB uses a very efficient narrow-band sparse data format. “This means that OpenVDB volumes have an extremely efficient in-memory data structure that let them represent unbounded volumes. The fact that the volumes are unbounded is really key. If you think of volumes as 3D texture maps, unbounded volumes are like having a texture map that can have infinite resolution” explained Mark Elendt from Side Effects Software.

Side Effects found it was fairly easy to integrate OpenVDB into their existing modeling and rendering pipelines. “When we plugged VDB into Mantra’s existing volumetric architecture we could immediately use all the shading and rendering techniques that had been built around traditional volumes, such as volumetric area lights. Thanks to OpenVDB’s efficient data structures we can now model and render much higher fidelity volumes than ever before” he said.

OSL – Open Shading Language (major support from SPI)

At last year’s SIGGRAPH, in a special interest group meeting (what SIGGRAPH calls a “Birds of a Feather”) on Alembic, Rob Bredow, CTO of SPI, asked the packed meeting room how many people used Alembic. A vast array of hands shot up, which, given the steering role of SPI, clearly pleased Bredow. The same question about OSL did not get the same response. At the time, Sony Pictures Imageworks used OSL internally, and at the show Bill Collis committed The Foundry to exploring it with Katana, but there was nothing like the widespread groundswell seen around, say, Alembic.

Run the clock forward a year and the situation has changed, or is about to change, fairly dramatically. Key renderer V-Ray has announced OSL support. “OSL support is already ready and in the nightly builds, and should be announced with version 3.0,” says Chaos Group’s Grohs. “We have found artists tend to gravitate to open source and we get demands which we try and support.”

So have Autodesk’s Beast (a game asset renderer, formerly the Turtle renderer) and Blender, and there is more on the way. While it is not a slam dunk, OSL is within striking distance of a tipping point that could see wide-scale adoption. That in turn would be thanks to people like Bredow, who is a real champion of open source and one of the most influential CTOs in the world in this respect.

“OSL has been a real success for us so far,” says Bredow. “With the delivery of MIB3 and The Amazing Spider-Man and now The Smurfs 2 and Cloudy with a Chance of Meatballs 2 on their way, OSL is now a production-proven shading system. Not only are the OSL shaders much faster to write, they actually execute significantly faster than our old hand-coded C and C++ shaders. Our shader writers are now focused on innovation and real production challenges, rather than spending a lot of time chasing compiler configurations!” Bredow was speaking to fxguide from location in Africa last week.

OSL usage and adoption is growing and, importantly for any open source project, it is moving from the primary supporter doing all the work to being a community project. “We’re getting great contributions back from the developer community now as well,” Bredow says.

OSL does not have an open runway ahead. Some people believe OSL is not wanted by their customers. “I have been to many film studios post merger, I’ve talked to a lot of customers and in the last 3 months I have done nothing but travel and visit people and not once has it come up,” explained Modo’s Brad Peebler. While The Foundry and Luxology very much support open source, they seem to have no interest in OSL.

Other groups are exploring something similar to OSL but different. Some companies are considering Material Description Language (MDL), a different approach to shaders being developed by Nvidia with iRay, as explained by Autodesk’s rendering expert Håkan “Zap” Andersson. Zap, as he likes to be known, feels that OSL in general is a more modern and intelligent way to approach shading than traditional shaders, but Nvidia is moving in a different direction again.

“If you look at OSL there is no such thing as a light loop, no such things as tracing rays, you basically tell the renderer how much of ‘what kind of shading goes where,’” says Zap. “At the end of your shader there is not a bunch of code that loops through your lights or sends a bunch of rays…at the end instead of returning a color like a traditional shader does OSL returns something called a closure which is really just a list of shading that needs to be done at this point. It hands this to the renderer and the renderer makes intelligent decisions about this.” This follows a pattern of moving smarts from the shader to the renderer. By contrast, Nvidia’s MDL is more a way of passing a description of materials to iRay.
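The division of labour Zap describes can be sketched in Python rather than in OSL itself. Everything named here (`ClosureTerm`, `Light`, `glowing_plastic`, `renderer_shade`, and the closure kinds "diffuse" and "emission") is hypothetical, and the diffuse term is a bare, unnormalised cosine falloff, so this is a toy model of the idea and not how any particular renderer evaluates closures.

```python
from dataclasses import dataclass
from typing import List, Sequence, Tuple

Vec3 = Tuple[float, float, float]


@dataclass
class ClosureTerm:
    kind: str      # hypothetical closure names: "diffuse" or "emission"
    weight: Vec3   # how much of this kind of shading


@dataclass
class Light:
    direction: Vec3   # unit vector from the surface towards the light
    intensity: float


def glowing_plastic(base_color: Vec3, glow: float) -> List[ClosureTerm]:
    """The 'shader': no light loop, no rays, just a list of shading to do."""
    return [
        ClosureTerm("diffuse", base_color),
        ClosureTerm("emission", (glow, glow, glow)),
    ]


def renderer_shade(closure: Sequence[ClosureTerm], normal: Vec3,
                   lights: Sequence[Light]) -> Vec3:
    """The 'renderer': owns the light loop and decides how to evaluate terms."""
    result = [0.0, 0.0, 0.0]
    for term in closure:
        if term.kind == "diffuse":
            for light in lights:
                cosine = max(0.0, sum(n * d for n, d in zip(normal, light.direction)))
                for i in range(3):
                    result[i] += term.weight[i] * cosine * light.intensity
        elif term.kind == "emission":
            for i in range(3):
                result[i] += term.weight[i]
    return (result[0], result[1], result[2])
```

Because the shader only returns a description, the renderer is free to change how it samples lights or traces rays without any shader being rewritten.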

“MDL is not a shading language as we would think of it, but rather a notation for iRay for describing materials: basically weighting BRDFs and setting their parameters,” says Bredow. “Since iRay doesn’t allow for programmable shaders the way we expect, it’s not really trying to solve the same problem.”

Zap says the difference between MDL and OSL is a “little bit like 6 of one and half a dozen of the other.” While it is clear Autodesk has not decided to have a unified material system, one would have to expect that Nvidia would be very keen to get Autodesk on board. One would have to expect Autodesk, with multiple products, would benefit from having a unified shader language.

SPI and the OSL community would of course be very happy to see Autodesk use OSL more widely in their products, as Autodesk only has Beast currently supporting it, and Autodesk is Nvidia’s biggest customer for Mental Ray. Zap would not be drawn on whether Autodesk prefers to move more towards MDL or OSL, but one gets the distinct impression that the 3D powerhouse is actively exploring the implications of both. If Autodesk were to throw its weight behind something like OSL, it would be decisive for its long-term adoption. “I believe it would be a great fit across a wide array of their applications. I’d love for you to reach out to them directly as well as it would be great if they had more to share beyond Beast,” offered Bredow.

Given Sony’s use of OSL, one might expect Solid Angle’s Arnold to support OSL, but as of now it does not. While the company is closely monitoring Sony’s use of OSL, Marcos Fajardo explains that “we are looking at it with a keen eye and I would like to do something about it – maybe next year,” but nothing is implemented right now.

Many other companies who do not yet support OSL, such as Next Limit, are actively looking at OSL to perhaps support it in an upcoming release of Maxwell.

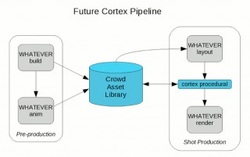

Cortex (major support from Image Engine)

Image Engine, in collaboration with several other companies, has been promoting a new system for visual effects support (C++ and Python modules) that provides a level of common abstraction and unifies the way a range of common VFX rendering problems are handled. Cortex is a suite of open source libraries providing a cross-application framework for computation and rendering. The Cortex group is not new but has yet to reach critical mass. As with many such successful projects, it comes from being used in production and facing real world tests, particularly inside Image Engine, which uses it to tackle problems it sees as normally being much bigger than a company its size could tackle. For example, it provides a unified solution to fur/hair, crowds and procedural instancing. Image Engine used it most recently on Fast and Furious 6, but it is used extensively throughout the company.

John Haddon, R&D Programmer at Image Engine: “Cortex’s software components have broad applicability to a variety of visual effects development problems. It was developed primarily in-house at Image Engine and initially deployed on District 9. Since then, it has formed the backbone of the tools for all subsequent Image Engine projects, and has seen some use and development in other facilities around the globe.”

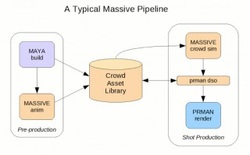

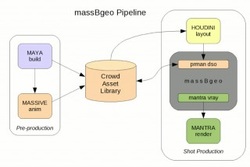

An example outside Image Engine was shown by Ollie Rankin, from Method Studios, who presented at an earlier SIGGRAPH Birds of a Feather on Cortex and how it can be used in a crowd pipeline. His team had used a typical Massive pipeline, with Massive providing agent motivation, yet they felt Massive was not ideal for procedural crowd placement. In a hack for the film Invictus, they used Houdini to place the Massive agents, and they rendered in Mantra.

Massive exports native RIB files for RenderMan, and Mantra is very similar to RenderMan, so Mantra could be made to work with Massive. But it was a hack to introduce Houdini just to handle procedural placement while still getting agent animation from Massive. Massive provided just the moving, waving, cheering agents, but their placement all came from Houdini, as “we didn’t need Massive to distribute people into seats in a stadium – we knew exactly where those seats were – all we needed was to turn those seat positions into people”. The rendering did require a bridge between Massive and Mantra, which was built as a custom memory hack using PRMan’s DSO (Dynamic Shared Object) mechanism.

“While we were happy with the way that Massive manipulates motion capture and happy with the animation it produces, we felt that its layout tools weren’t flexible enough for our needs”, Rankin told fxguide. “We realised that the challenge of filling a stadium with people essentially amounts to turning the known seat positions into people. We also wanted to be able to change the body type, clothing and behaviour of the people, either en masse or individually, without having to re-cache the whole crowd. We decided that a Houdini point cloud is the ideal metaphor for this type of crowd and set about building a suite of tools to manipulate point attributes that would represent body type, clothing and behaviour, using weighted random distributions, clumping and individual overrides.”

They still needed a mechanism to turn points into people and this is where “we had to resort to a monumental hack.” Massive ships with a procedural DSO (Dynamic Shared Object) for RenderMan that can be used to inject geometry into a scene at render time. It does so by calling Massive’s own libraries for geometry assignment, skeletal animation and skin deformation on a per-agent basis and delivering the resulting deformed geometry to the renderer. “Our hack was a plugin that would call the procedural, then intercept that geometry, straight out of RAM, and instead deliver it to Mantra,” Rankin explained.
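
The point-attribute approach Rankin describes can be sketched roughly as follows. This is only an illustration in plain Python, not Method Studios' tools or Houdini's API: the attribute names, the weight tables and the `assign_attributes` helper are all assumptions made for the example. Each point draws from a weighted distribution using a generator seeded per point, so overriding one person leaves the rest of the crowd unchanged.

```python
import random

# Hypothetical weight tables; the real attributes and weights are not given in the article.
BODY_TYPES = {"slim": 0.3, "average": 0.5, "heavy": 0.2}
BEHAVIOURS = {"sit": 0.6, "cheer": 0.3, "wave": 0.1}


def weighted_choice(rng, weights):
    """Pick one key from a {name: weight} table."""
    names = list(weights)
    return rng.choices(names, weights=[weights[n] for n in names], k=1)[0]


def assign_attributes(seat_positions, seed=0, overrides=None):
    """Turn seat positions into points carrying crowd attributes.

    overrides maps a point index to a dict of attribute values that
    replace the randomly chosen ones for that individual.
    """
    overrides = overrides or {}
    points = []
    for index, position in enumerate(seat_positions):
        # One generator per point: results do not depend on the other points.
        rng = random.Random(seed * 1_000_003 + index)
        point = {
            "id": index,
            "P": position,
            "body_type": weighted_choice(rng, BODY_TYPES),
            "behaviour": weighted_choice(rng, BEHAVIOURS),
        }
        point.update(overrides.get(index, {}))
        points.append(point)
    return points


# A tiny "stadium": 3 rows of 4 seats, with one individual forced to wave.
seats = [(float(x), 0.0, float(row)) for row in range(3) for x in range(4)]
crowd = assign_attributes(seats, seed=7, overrides={5: {"behaviour": "wave"}})
```

Clumping, which the quote also mentions, is left out here; it could be layered on by letting neighbouring points share a seed or bias the weights, but that is a design choice the article does not detail.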

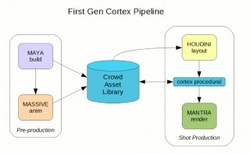

By comparison, a Cortex solution would place a Cortex procedural interface layer between Massive and RenderMan. It would access and query the Massive crowd asset library and remove the need for the Houdini/PRMan DSO hack, while still allowing Houdini to render Massive’s agents. Once there is a unified, standard Cortex procedural hub, it becomes possible to replace, say, Houdini, or to move to a different renderer, without the fragility of the custom black-box hack that existed before.

The same coding approach and API used in this Massive and Houdini example could be used for Maya, Nuke or a range of other applications. With a standardized Cortex layer, geometry, say, could be generated at render time in a host of situations, all using the same basic structure, without requiring a new hack each time or rework whenever a piece of software changes version or needs to be replaced. This is just one example of what Cortex is designed to help with: layout, modeling, animation and deferred geometry creation at render time. It can work in a wide variety of situations where interfaces between tools are needed in a production environment.
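
The idea of a shared procedural layer can be illustrated with a small sketch. It is not Cortex's actual API: every class and method name below (`AgentSource`, `RendererBackend`, `CrowdProcedural` and so on) is hypothetical, and the stub classes stand in for Massive and for real renderers. The point is only that the procedural talks to two narrow interfaces, so either side can be swapped without rewriting the other.

```python
from abc import ABC, abstractmethod


class AgentSource(ABC):
    """Hypothetical interface to whatever produces per-agent geometry."""

    @abstractmethod
    def geometry_for(self, point):
        ...


class RendererBackend(ABC):
    """Hypothetical interface to whatever consumes geometry at render time."""

    @abstractmethod
    def emit(self, geometry):
        ...


class StubAgentSource(AgentSource):
    """Stand-in for an agent library; returns a description instead of a mesh."""

    def geometry_for(self, point):
        return {"agent": point["id"], "position": point["P"],
                "mesh": f"{point['body_type']}_mesh"}


class RecordingRenderer(RendererBackend):
    """Stand-in renderer that just records what it was given."""

    def __init__(self, name):
        self.name = name
        self.received = []

    def emit(self, geometry):
        self.received.append(geometry)


class CrowdProcedural:
    """Expands points into geometry only when a renderer asks for it."""

    def __init__(self, source, points):
        self.source = source
        self.points = points

    def render(self, renderer):
        for point in self.points:
            renderer.emit(self.source.geometry_for(point))
        return len(self.points)


points = [{"id": i, "P": (float(i), 0.0, 0.0), "body_type": "average"} for i in range(3)]
procedural = CrowdProcedural(StubAgentSource(), points)

# The same procedural drives two different back ends without modification.
renderer_a = RecordingRenderer("renderer_a")
renderer_b = RecordingRenderer("renderer_b")
count_a = procedural.render(renderer_a)
count_b = procedural.render(renderer_b)
```

Swapping the renderer, or the agent source, means writing one new subclass rather than a new bridge between each pair of tools.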

OpenSubdiv (major support from Pixar)

OpenSubdiv is a set of open source libraries that implement high-performance subdivision surface (subdiv) evaluation on massively parallel CPU and GPU architectures. This codepath is optimized for drawing deforming subdivs with static topology at interactive framerates. The resulting limit surface matches Pixar’s RenderMan to numerical precision. The code embodies decades of research and experience by Pixar, and a more recent and still active collaboration on fast GPU drawing between Microsoft Research and Pixar.
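
To give a feel for what "evaluating to the limit" means, here is a minimal sketch using the curve analogue of subdivision surfaces: cubic B-spline subdivision of a closed polygon. It is not OpenSubdiv code and uses none of its API; the function names are made up for the example, and a curve is used instead of a surface purely to keep it short. Each refinement adds a midpoint per edge and smooths the existing vertices, and the refined points converge on a limit position that can also be computed directly.

```python
def subdivide_closed(points):
    """One step of cubic B-spline subdivision on a closed polygon.

    Each old vertex is smoothed with weights 1/8, 6/8, 1/8 and a new
    point is added at the midpoint of each edge.
    """
    n = len(points)
    refined = []
    for i in range(n):
        prev_pt, pt, next_pt = points[i - 1], points[i], points[(i + 1) % n]
        vertex = tuple((a + 6.0 * b + c) / 8.0 for a, b, c in zip(prev_pt, pt, next_pt))
        edge = tuple((b + c) / 2.0 for b, c in zip(pt, next_pt))
        refined.extend([vertex, edge])
    return refined


def limit_point(points, i):
    """Position on the limit curve for vertex i (weights 1/6, 4/6, 1/6)."""
    n = len(points)
    prev_pt, pt, next_pt = points[i - 1], points[i], points[(i + 1) % n]
    return tuple((a + 4.0 * b + c) / 6.0 for a, b, c in zip(prev_pt, pt, next_pt))


# Control polygon: a unit square. Refine it six times.
square = [(0.0, 0.0), (1.0, 0.0), (1.0, 1.0), (0.0, 1.0)]
curve = square
for _ in range(6):
    curve = subdivide_closed(curve)

# Index 0 of every refined polygon descends from the first control vertex,
# so it should approach the directly computed limit position.
target = limit_point(square, 0)
error = max(abs(a - b) for a, b in zip(curve[0], target))
```

Libraries such as OpenSubdiv apply the same principle to surfaces with arbitrary topology, where the bookkeeping is far more involved and is what benefits from parallel CPU and GPU execution.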

OpenSubdiv is covered by an open source license and is free for commercial or non-commercial use. This is the same code that Pixar uses internally for animated film production. The short film Geri’s Game was the first Pixar production to use subdivision surfaces. See the fxguide story, which includes a link to the SIGGRAPH paper explaining it.

Pixar is targeting SIGGRAPH LA 2013 for release 2.0 of OpenSubdiv. The major feature of 2.0 will be the Evaluation (eval) API. This adds functionality to:

“We also expect further performance optimization in the GPU drawing code as the adaptive pathway matures.” More information is available at the OpenSubdiv site.

Reposted from fxguide.com: http://www.fxguide.com/featured/the-state-of-rendering/
