LENSES CLASSIFICATION USING NEURAL NETWORKS



An example of a multivariate data type classification problem using Neuroph framework

by Maja Urosevic, Faculty of Organizational Sciences, University of Belgrade

an experiment for Intelligent Systems course

- Adaptive learning: An ability to learn how to do tasks based on the data given for training or initial experience.

- Self-Organisation: An ANN can create its own organisation or representation of the information it receives during learning time.

- Real Time Operation: ANN computations may be carried out in parallel, and special hardware devices are being designed and manufactured which take advantage of this capability.

- Fault Tolerance via Redundant Information Coding: Partial destruction of a network leads to the corresponding degradation of performance. However, some network capabilities may be retained even with major network damage.

The data set has 4 attributes (age of the patient, spectacle prescription, whether the patient is astigmatic, and tear production rate) plus an associated three-valued class that gives the appropriate lens prescription for the patient (hard contact lenses, soft contact lenses, no lenses).

The data set contains 24 instances (class distribution: hard contact lenses 4, soft contact lenses 5, no contact lenses 15).

Attribute Information: (the first attribute of the data set is an ID number, and therefore, has not been included in the training set)

- Age of the patient: (1) young, (2) pre-presbyopic, (3) presbyopic

- Spectacle prescription: (1) myope, (2) hypermetrope

- Astigmatic: (1) no, (2) yes

- Tear production rate: (1) reduced, (2) normal

- Class name: (1) the patient should be fitted with hard contact lenses, (2) the patient should be fitted with soft contact lenses, (3) the patient should not be fitted with contact lenses.

- Transform the data set

- Create a Neuroph project

- Create a Training Set

- Create a neural network

- Train the network

- Test the network to make sure that it is trained properly

Several architectures have been tried out during the experiment in order to find the best solution to the given problem.

The last 3 digits of the data set represent the class an instance belongs to. 1 0 0 represent hard contact lenses class, 0 1 0 soft contact lenses class, and 0 0 1 no contact lenses class.
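The transformation described here can be sketched as a one-hot encoding. This is a minimal sketch, assuming each attribute value is turned into its own binary column (3 + 2 + 2 + 2 = 9 inputs) followed by the 3 class digits; the function names and the sample instance are hypothetical and are not taken from the original files.

```python
# Number of possible values for each attribute, in data set order:
# age (3), spectacle prescription (2), astigmatic (2), tear production rate (2).
ATTRIBUTE_SIZES = [3, 2, 2, 2]
NUM_CLASSES = 3  # 1 = hard, 2 = soft, 3 = no contact lenses


def one_hot(value, size):
    """Return a list of `size` zeros with a 1 at position value - 1 (values are 1-based)."""
    if not 1 <= value <= size:
        raise ValueError(f"value {value} outside 1..{size}")
    return [1 if i == value - 1 else 0 for i in range(size)]


def encode_instance(attributes, class_label):
    """Encode one instance as 9 binary inputs followed by 3 binary outputs."""
    row = []
    for value, size in zip(attributes, ATTRIBUTE_SIZES):
        row.extend(one_hot(value, size))
    row.extend(one_hot(class_label, NUM_CLASSES))
    return row


# Hypothetical instance: young (1), myope (1), astigmatic (2), normal tears (2), class 1 (hard).
print(encode_instance([1, 1, 2, 2], 1))
```

With this scheme every row has 12 values, and the last three follow the 1 0 0 / 0 1 0 / 0 0 1 convention described above.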

The original data set can be downloaded here.

The transformed data set can be downloaded here.

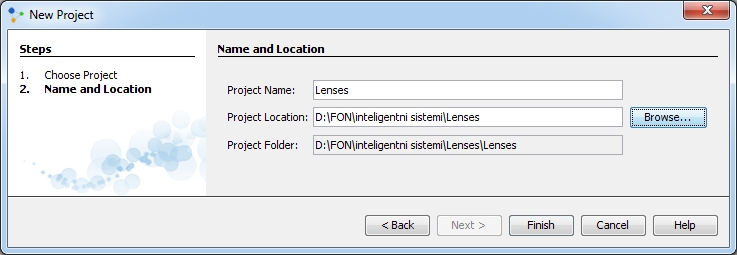

We create a new project in Neuroph Studio by clicking File > New Project. Next, we choose Neuroph project and click the 'Next' button.

Next step is to define project name and location. After that, we click 'Finish' and the created project will appear in Projects window.

During the training, neural network is taken through a number of iterations, until the output of the neural network matches the anticipated output, with a reasonably low error rate.

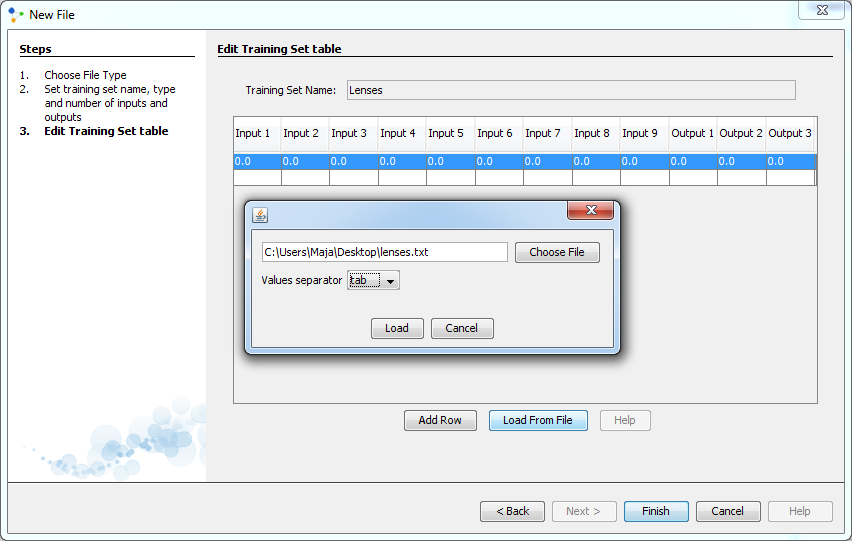

After clicking 'Next', we should insert our data set into training set table. We will load all data directly from a file, by clicking on 'Choose File' and selecting the one where we saved our transformed data set. Values in the file are separated by tab.

By clicking on 'Load', all our data will be loaded into a table. We can see that this table has 12 columns, where the first 9 of them represent inputs, and the last 3 represent outputs from our data set.
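Outside Neuroph Studio, the same split of each row into 9 inputs and 3 outputs could be done by hand. The following is only a sketch of that idea, not what the wizard does internally; `parse_row`, `load_training_set` and the sample line are hypothetical.

```python
NUM_INPUTS = 9
NUM_OUTPUTS = 3


def parse_row(line):
    """Split one tab-separated line into (inputs, outputs) lists of floats."""
    values = [float(v) for v in line.strip().split("\t")]
    if len(values) != NUM_INPUTS + NUM_OUTPUTS:
        raise ValueError(f"expected 12 values, got {len(values)}")
    return values[:NUM_INPUTS], values[NUM_INPUTS:]


def load_training_set(lines):
    """Parse every non-empty line; `lines` can be an open file or a list of strings."""
    return [parse_row(line) for line in lines if line.strip()]


# Hypothetical sample line in the transformed, tab-separated format.
sample = ["1\t0\t0\t1\t0\t0\t1\t0\t1\t1\t0\t0\n"]
training_set = load_training_set(sample)
print(training_set[0])
```

Passing an open file object instead of `sample` would read a whole data file the same way.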

After clicking 'Finish' a new training set will appear in our project.

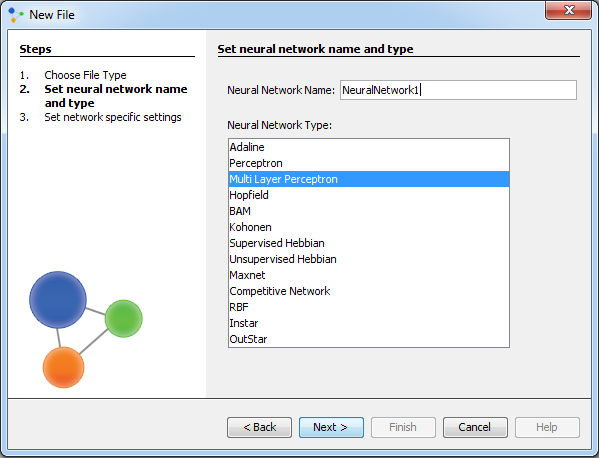

In order to find the best solution to our problem, we will create several neural networks based on this training set, using different sets of parameters.

Standard approaches to validation of neural networks are mostly based on empirical evaluation through simulation and/or experimental testing. There are several methods for supervised training of neural networks. The backpropagation algorithm is the most commonly used training method for artificial neural networks.

Backpropagation is a common method of training artificial neural networks so as to minimize the objective function. It requires a data set of the desired output for many inputs, making up the training set. It is most useful for feed-forward networks (networks that have no feedback, or simply, that have no connections that loop). Main idea is to distribute the error function across the hidden layers, corresponding to their effect on the output.

A multilayer perceptron is a feedforward artificial neural network model that maps sets of input data onto a set of appropriate outputs. It consists of multiple layers of nodes in a directed graph, with each layer fully connected to the next one. Except for the input nodes, each node is a neuron with a nonlinear activation function. The multilayer perceptron uses backpropagation for training. It is a modification of the standard linear perceptron and, unlike the linear perceptron, it can distinguish data that is not linearly separable.
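To make the description of backpropagation and the multilayer perceptron more concrete, here is a minimal sketch of one training step for a network with a single hidden layer of sigmoid neurons. It is a textbook formulation, not Neuroph's implementation; bias neurons and momentum are left out for brevity, and the tiny 2-2-1 network and its weights are hypothetical.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def forward(x, w_hidden, w_out):
    """Return (hidden activations, output activations) for input vector x."""
    hidden = [sigmoid(sum(w * xi for w, xi in zip(row, x))) for row in w_hidden]
    output = [sigmoid(sum(w * h for w, h in zip(row, hidden))) for row in w_out]
    return hidden, output


def squared_error(target, output):
    return 0.5 * sum((t - o) ** 2 for t, o in zip(target, output))


def backprop_step(x, target, w_hidden, w_out, learning_rate):
    """One plain gradient-descent step on the squared error; updates weights in place."""
    hidden, output = forward(x, w_hidden, w_out)
    # Output deltas: error times the sigmoid derivative o * (1 - o).
    delta_out = [(t - o) * o * (1 - o) for t, o in zip(target, output)]
    # Hidden deltas: each hidden neuron receives the output deltas weighted by
    # the connections through which it influenced the outputs.
    delta_hidden = [
        h * (1 - h) * sum(w_out[k][j] * delta_out[k] for k in range(len(w_out)))
        for j, h in enumerate(hidden)
    ]
    for k, row in enumerate(w_out):
        for j in range(len(row)):
            row[j] += learning_rate * delta_out[k] * hidden[j]
    for j, row in enumerate(w_hidden):
        for i in range(len(row)):
            row[i] += learning_rate * delta_hidden[j] * x[i]


# Hypothetical 2-2-1 network and a single training pattern.
w_hidden = [[0.1, -0.2], [0.3, 0.4]]
w_out = [[0.5, -0.5]]
x, target = [1.0, 0.0], [1.0]

error_before = squared_error(target, forward(x, w_hidden, w_out)[1])
backprop_step(x, target, w_hidden, w_out, learning_rate=0.2)
error_after = squared_error(target, forward(x, w_hidden, w_out)[1])
print(error_before, error_after)
```

The hidden deltas are computed before any weight is changed, so the error is propagated back through the same weights that produced the output.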

In the next window we set parameters of the network. Numbers of input and output neurons are the same as in the training set. We decide the number of hidden layers as well as the number of neurons in each layer.

Problems that require more than one hidden layer are rarely encountered. For many practical problems, there is no reason to use more than one hidden layer. One layer can approximate any function that contains a continuous mapping from one finite space to another. Deciding on the number of hidden layers is only a small part of the problem. We must also determine how many neurons each hidden layer will contain. Both the number of hidden layers and the number of neurons in each of these hidden layers must be carefully considered.

Using too few neurons in the hidden layers will result in something called underfitting. Underfitting occurs when there are too few neurons in the hidden layers to adequately detect the signals in a complicated data set.

Using too many neurons in the hidden layers can result in several problems. Firstly, too many neurons in the hidden layers may result in overfitting. Overfitting occurs when the neural network has so much information processing capacity that the limited amount of information contained in the training set is not enough to train all of the neurons in the hidden layers. A second problem can occur even when the training data is sufficient. An inordinately large number of neurons in the hidden layers can increase the time it takes to train the network. The amount of training time can increase to the point that it is impossible to adequately train the neural network.

Obviously, some compromise must be reached between too many and too few neurons in the hidden layers.
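One rough way to reason about this compromise is to compare the number of trainable weights with the 24 available instances. The helper below is a sketch under the assumption of fully connected layers with one bias weight per non-input neuron; `count_weights` is a hypothetical name, and the layer sizes are the architectures tried in this experiment.

```python
def count_weights(layer_sizes, use_bias=True):
    """Count trainable weights in a fully connected feed-forward network.

    layer_sizes lists neurons per layer, e.g. [9, 8, 3] for 9 inputs,
    8 hidden neurons and 3 outputs. A bias adds one weight per non-input neuron.
    """
    total = 0
    for fan_in, fan_out in zip(layer_sizes, layer_sizes[1:]):
        total += (fan_in + (1 if use_bias else 0)) * fan_out
    return total


for sizes in ([9, 8, 3], [9, 5, 3], [9, 4, 3], [9, 3, 3], [9, 2, 3], [9, 3, 2, 3]):
    print(sizes, count_weights(sizes))
```

Under these assumptions, even the smallest network has more weights than there are training instances, which is one reason to prefer fewer hidden neurons on a data set this small.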

We have chosen 1 layer with 8 hidden neurons in this first training attempt. We check 'Use Bias Neurons' option and choose 'Sigmoid' for transfer function in all our networks. For learning rule we choose 'Backpropagation with Momentum'. The momentum is added to speed up the process of learning and to improve the efficiency of the algorithm.

The bias neuron is very important, and a backpropagation network without a bias neuron for the hidden layer may fail to learn. Neural networks are "unpredictable" to a certain extent, so if you add a bias neuron you are more likely to find solutions faster than if you did not use a bias. The bias weights control the shape, orientation and steepness of sigmoidal functions across the data mapping space. A bias input always has the value of 1. Without a bias, if all inputs are 0, the weighted sum reaching every neuron is 0 regardless of the weights, so the output is fixed (0.5 for a sigmoid neuron) and cannot be adjusted by training.
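The effect of the bias can be checked on a single sigmoid neuron. In this sketch (names and weights are hypothetical), an all-zero input gives a net input of 0 whatever the weights are, so without a bias the sigmoid output stays at 0.5; a bias weight shifts it.

```python
import math


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def neuron_output(inputs, weights, bias_weight=None):
    """Sigmoid neuron; the bias input is fixed at 1, so it contributes bias_weight * 1."""
    net = sum(w * x for w, x in zip(weights, inputs))
    if bias_weight is not None:
        net += bias_weight * 1.0
    return sigmoid(net)


zeros = [0.0, 0.0, 0.0]
# Without a bias, the weights cannot influence the output for an all-zero input.
print(neuron_output(zeros, [0.3, -2.0, 5.0]))
print(neuron_output(zeros, [-7.0, 1.0, 0.1]))
# With a bias weight, the output can move away from the fixed value.
print(neuron_output(zeros, [0.3, -2.0, 5.0], bias_weight=2.0))
```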

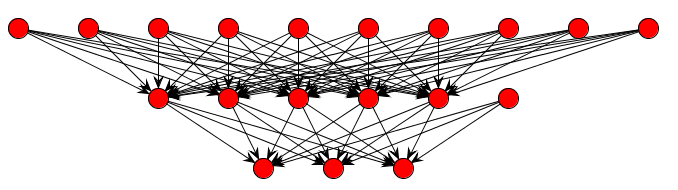

Next, we click 'Finish' and the first neural network is created. In the picture below we can see the graph view of this neural network.

The graph shows the input, output, hidden neurons and how they are connected with each other. Except for the two neurons with activation level 1 (bias activation), all other neurons have an activation level 0. These two neurons represent bias neurons, as we explained above.

After we have created the training set and the neural network, we can train it. Firstly, we select our training set, click 'Train', and then set learning parameters for the training.

Learning rate is a control parameter of training algorithms, which controls the step size when weights are iteratively adjusted.

To help avoid settling into a local minimum, a momentum rate allows the network to potentially skip over local minima. A momentum rate set at the maximum of 1.0 may result in training that is highly unstable and thus may not reach even a local minimum, or the network may take an inordinate amount of training time. If set at a low of 0.0, momentum is not considered and the network is more likely to settle into a local minimum.
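The roles of the learning rate and momentum can be illustrated with the usual textbook update rule, in which each weight change is the gradient step plus a fraction of the previous change. This is a sketch of that rule, not Neuroph's source code; `momentum_update` and the toy error function are hypothetical.

```python
def momentum_update(weight, gradient, previous_delta, learning_rate, momentum):
    """Return (new_weight, delta) for one weight.

    delta = -learning_rate * gradient + momentum * previous_delta
    """
    delta = -learning_rate * gradient + momentum * previous_delta
    return weight + delta, delta


# Minimise the toy error E(w) = w**2 (gradient 2 * w), starting from w = 1.0.
w, delta = 1.0, 0.0
history = []
for step in range(3):
    w, delta = momentum_update(w, 2 * w, delta, learning_rate=0.2, momentum=0.7)
    history.append(w)
    print(step, round(w, 4))
```

In this toy run the accumulated momentum carries the weight past the minimum at 0 by the third step, which is the same mechanism that lets training move through shallow local minima, and also the reason a momentum close to 1.0 can make training unstable.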

When the Total Net Error value drops below the max error, the training is complete. The smaller the error, the better the approximation.
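The stopping rule can be sketched as follows. The error here is a plain mean of squared differences over all output values; this is an assumption, and the exact normalisation Neuroph uses for Total Net Error may differ. The function names and sample outputs are hypothetical.

```python
def mean_squared_error(targets, outputs):
    """Average of squared differences over all output values of all patterns."""
    squared = [
        (t - o) ** 2
        for target_row, output_row in zip(targets, outputs)
        for t, o in zip(target_row, output_row)
    ]
    return sum(squared) / len(squared)


def should_stop(total_error, max_error=0.01):
    """Training is complete once the error drops below the configured maximum."""
    return total_error < max_error


targets = [[1, 0, 0], [0, 0, 1]]
outputs = [[0.9, 0.1, 0.0], [0.1, 0.0, 0.9]]  # hypothetical network outputs
error = mean_squared_error(targets, outputs)
print(error, should_stop(error))
```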

In this case, we set the maximum error to 0.01, learning rate to 0.2 and momentum to 0.7.

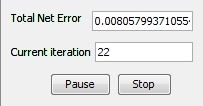

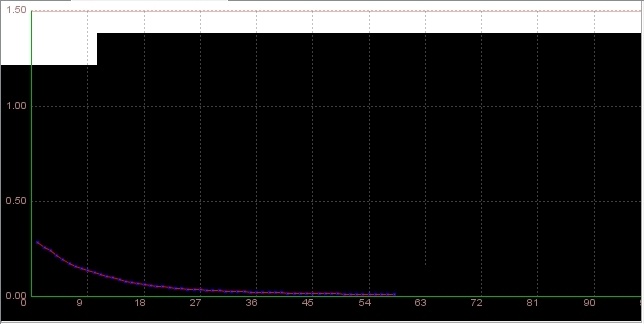

Then we click on the 'Next' button and the training process starts.

After 49 iterations Total Net Error drops down to a specified level of 0.01 which means that training process was successful and that now we can test this neural network.

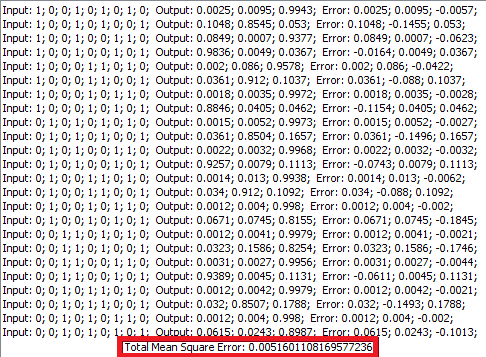

We test the neural network by clicking on the 'Test' button. In the results we can see that the Total Mean Square Error is 0.00516061449608549. That is certainly a very good result, because our goal is to get the total error smaller than 0.01.

Looking over the individual errors we realize that all of them are at the low level, below 0.01. It means that this neural network architecture is acceptable. Still, we will try to find better results by changing learning parameters.
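When reading the individual test results, each output vector can be turned back into a class by taking the output neuron with the highest activation. The sketch below assumes the class order used in the transformed data set (1 0 0 hard, 0 1 0 soft, 0 0 1 no lenses); the function names and the sample output values are hypothetical.

```python
CLASS_NAMES = ["hard contact lenses", "soft contact lenses", "no contact lenses"]


def predicted_class(outputs):
    """Pick the class whose output neuron has the highest activation."""
    best_index = max(range(len(outputs)), key=lambda i: outputs[i])
    return CLASS_NAMES[best_index]


def individual_error(target, outputs):
    """Mean squared error of a single test instance."""
    return sum((t - o) ** 2 for t, o in zip(target, outputs)) / len(target)


# Hypothetical network output for an instance whose desired output is 0 0 1.
outputs = [0.03, 0.05, 0.95]
print(predicted_class(outputs), individual_error([0, 0, 1], outputs))
```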

In our second attempt we will only change the learning rate and see what happens. We will set it to 0.6. Momentum stays the same as before.

After only 22 iterations, the max error was reached.

Now we look at the testing results.

In this attempt the total mean square error is lower than in the previous case. We will now look for different architectures to find out if we can get even better results than this one.

In this attempt we will try to get better results by reducing the number of hidden neurons to 5. It is known that the number of hidden neurons is crucial for the success of network training.

First we create a new neural network. All the parameters are the same as in the first training attempt; we only change the number of hidden neurons.

We will train the network with the same learning parameters as in the first attempt: maximum error 0.01, learning rate 0.2 and momentum 0.7.

It took 58 iterations to reach the max error of 0.01.

Now, we will test this neural network and see testing results for this neural network architecture.

In this case the total mean square error is slightly higher than it was in the last training attempt, but generally the overall result is good. We will try to find even better result with a lower number of hidden neurons.

In this attempt, we will try to achieve a better result by using 4 hidden neurons.

The neural network we have created can now be trained. In the first training attempt with this architecture we set the maximum error to 0.01, the learning rate to 0.2 and momentum to 0.7.

It took 55 iterations for network to train.

After testing the neural network, we see that the total mean square error is 0.005698442819583745. It is very similar to the results from previous attempts, but yet we think that it could be lower.

In this attempt we will use the same network architecture as in the previous training attempt. We will try to get even better results by changing some learning parameters.

We will put 0.6 for the learning rate and 0.7 for momentum, while the max error will stay the same.

Training process stops after 27 iterations.

After testing, we got that in this attempt the total error is lower than it was in previous case with learning rate 0.2.

So we can conclude that 4 neurons are enough, but we will still try to find an architecture with a lower number of neurons that will give us the best result.

In this attempt we will again use the same network architecture as in the previous training attempt. We will try to get even better results by changing some learning parameters.

We will put 0.4 for the learning rate and 0.5 for momentum, while max error will stay the same.

Training process stops after 52 iterations.

After testing, the total error is lower than it was in the case with learning rate 0.2, but higher than in the case with learning rate 0.6 and momentum 0.7.

The conclusion is that with 4 hidden neurons, the best result is achieved when putting the learning rate higher than 0.2. Also, we should not decrease momentum too much in order to get a low total error.

We will create a new neural network with 3 hidden neurons.

In this neural network architecture we have 3 hidden neurons. We will see if that is enough for the network to reach the maximum error of 0.01. For the beginning, learning rate will be 0.2 and momentum 0.7.

Network was successfully trained, and after 64 iterations, the desired error was reached. In the total network error graph we can see that the error decreases continuously throughout the whole training cycle.

After we have finished testing, we can see that the total mean square error in this case is slightly higher than the errors in previous attempts, so we will try to improve that by changing learning parameters and see what happens.

With this training attempt we will try to reduce the total error by changing some of the learning parameters. The limit for the max error will remain 0.01; we will increase the learning rate to 0.4 and decrease momentum to 0.4.

As we can see in the image below, network was successfully trained, and after 73 iterations the total error was reduced to the level below 0.01.

After testing the neural network, we see that in this attempt the total mean square error is 0.0056955553861498635, which is better than the error in the previous attempt. Still, we are going to try to find out whether it is possible to get an even better testing result, with a lower total error, for the same neural network architecture.

In this training attempt we want to see what will happen if we set higher values for both the learning rate and momentum. We will put 0.6 for the learning rate and 0.7 for momentum.

Training process was successful and after 24 iterations, the total error of 0.01 was reached.

This is the best result achieved with 3 hidden neurons, so the conclusion is to increase the learning rate in this architecture.

We will create a new neural network with 2 hidden neurons.

In this neural network architecture we have 2 hidden neurons. We will see if that is enough for the network to reach the maximum error of 0.01. Firstly, we set learning rate to 0.2 and momentum to 0.7.

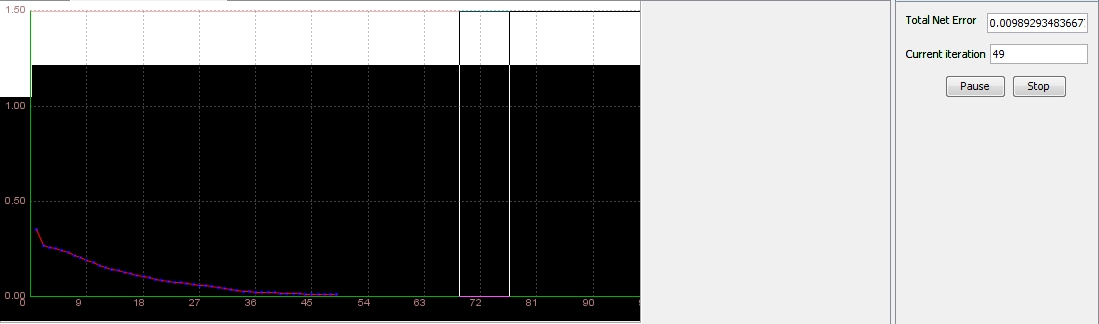

Network was successfully trained, and, after 49 iterations, the desired error was reached.

After we have finished the testing, we see that the total mean square error is quite low. Still, we will try to find out whether there is a combination of learning parameters that will give us the best possible result so far.

In this training attempt we want to see what will happen if we set an even higher value for the learning rate, 0.7, while the momentum stays the same.

Training process was successful and after 53 iterations the desired error was reached.

This was the best result achieved with 2 hidden neurons, and also the best result achieved since the beginning of the experiment!

In this attempt, we will create a different type of neural network. We want to see what will happen if we create a neural network with two hidden layers.

We will put 3 neurons in the first layer, and 2 in the second.

The structure of the new network is shown in the graph below:

We will see if these 2 layers are enough for the network to reach the maximum error of 0.01. We will put 0.2 for the learning rate and 0.7 for momentum.

Training process was successful and after 119 iterations the desired error was reached.

The result achieved with two hidden layers is also acceptable, since the total mean square error is 0.005599376700513674.

All in all, there is no need for using more than one hidden layer, since we did not get better results than those from using only one hidden layer.

The final results of all training attempts are shown in Table 1.

In the experiment, we have trained several architectures of neural networks. The goal was to find out the most important factor in determining the best results.

The selection of an appropriate number of hidden neurons when creating a new neural network proved to be crucial for our aim. One hidden layer was sufficient for the training success in all cases. We tried out the training of different networks, but we wanted to achieve the lowest error with the lowest number of hidden neurons. We used 8, 5, 4, 3 and 2 hidden neurons and we succeeded in getting the best result with 2 neurons. Also, the experiment showed that the success of a neural network is very sensitive to the learning parameters chosen in the training process.

The overall conclusion would be that a higher learning rate usually trains the network faster: raising it from 0.2 to 0.6 with momentum 0.7 cut training from 49 to 22 iterations with 8 hidden neurons, from 55 to 27 with 4, and from 64 to 24 with 3. The exception was the network with 2 hidden neurons, where a learning rate of 0.7 needed 53 iterations against 49 but gave the lowest error. We recommend using a high learning rate and a moderate momentum to optimize the classification performance and the number of iterations.

The final results of our experiment using standard training techniques are given in the table below. The best solution is attempt 11 (2 hidden neurons, learning rate 0.7, momentum 0.7), which reached the lowest total mean square error, 0.0022.

Table 1. Training techniques

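| Training attempt | Number of hidden neurons | Number of hidden layers | Training set | Maximum error | Learning rate | Momentum | Number of iterations | Total mean square error | Network trained |
|---|---|---|---|---|---|---|---|---|---|
| 1 | 8 | 1 | full | 0.01 | 0.2 | 0.7 | 49 | 0.0052 | yes |
| 2 | 8 | 1 | full | 0.01 | 0.6 | 0.7 | 22 | 0.0029 | yes |
| 3 | 5 | 1 | full | 0.01 | 0.2 | 0.7 | 58 | 0.0055 | yes |
| 4 | 4 | 1 | full | 0.01 | 0.2 | 0.7 | 55 | 0.0057 | yes |
| 5 | 4 | 1 | full | 0.01 | 0.6 | 0.7 | 27 | 0.0039 | yes |
| 6 | 4 | 1 | full | 0.01 | 0.4 | 0.5 | 52 | 0.0051 | yes |
| 7 | 3 | 1 | full | 0.01 | 0.2 | 0.7 | 64 | 0.0059 | yes |
| 8 | 3 | 1 | full | 0.01 | 0.4 | 0.4 | 73 | 0.0057 | yes |
| 9 | 3 | 1 | full | 0.01 | 0.6 | 0.7 | 24 | 0.0047 | yes |
| 10 | 2 | 1 | full | 0.01 | 0.2 | 0.7 | 49 | 0.0059 | yes |
| 11 | 2 | 1 | full | 0.01 | 0.7 | 0.7 | 53 | 0.0022 | yes |
| 12 | 3, 2 | 2 | full | 0.01 | 0.2 | 0.7 | 119 | 0.0056 | yes |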
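The comparison of attempts in Table 1 can also be done programmatically. The sketch below copies the values from the table into a list (the tuple layout and variable names are hypothetical) and picks the attempt with the lowest error and the one with the fewest iterations.

```python
# (attempt, hidden neurons, learning rate, momentum, iterations, total MSE), from Table 1.
RESULTS = [
    (1, "8", 0.2, 0.7, 49, 0.0052),
    (2, "8", 0.6, 0.7, 22, 0.0029),
    (3, "5", 0.2, 0.7, 58, 0.0055),
    (4, "4", 0.2, 0.7, 55, 0.0057),
    (5, "4", 0.6, 0.7, 27, 0.0039),
    (6, "4", 0.4, 0.5, 52, 0.0051),
    (7, "3", 0.2, 0.7, 64, 0.0059),
    (8, "3", 0.4, 0.4, 73, 0.0057),
    (9, "3", 0.6, 0.7, 24, 0.0047),
    (10, "2", 0.2, 0.7, 49, 0.0059),
    (11, "2", 0.7, 0.7, 53, 0.0022),
    (12, "3, 2", 0.2, 0.7, 119, 0.0056),
]

lowest_error = min(RESULTS, key=lambda row: row[5])
fewest_iterations = min(RESULTS, key=lambda row: row[4])
print("lowest error:", lowest_error)
print("fewest iterations:", fewest_iterations)
```

The lowest error and the fastest training come from different attempts, so the choice between them depends on whether accuracy or training time matters more.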
