基于Zookeeper的分步式队列系统集成案例

来源:互联网 发布:java spliterator 编辑:程序博客网 时间:2024/06/06 00:51

http://blog.fens.me/tag/hadoop/

Hadoop集群(第9期)_MapReduce初级案例

http://www.cnblogs.com/xia520pi/archive/2012/06/04/2534533.html

3. 架构设计:搭建Zookeeper的分步式协作平台

接下来,我们基于zookeeper来构建一个分步式队列的应用,来解决上面的功能需求。下面内容,排除了ESB的部分,只保留zookeeper进行实现。

- 采购数据,为海量数据,基于Hadoop存储和分析。

- 销售数据,为海量数据,基于Hadoop存储和分析。

- 其他费用支出,为少量数据,基于文件或数据库存储和分析。

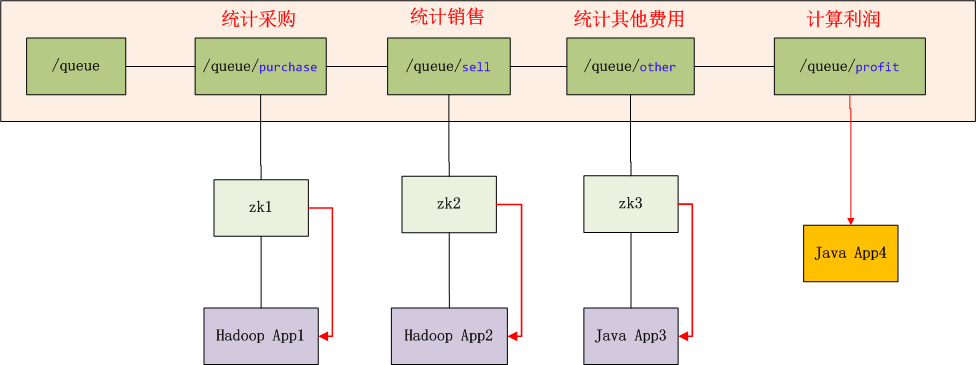

我们设计一个同步队列,这个队列有3个条件节点,分别对应采购(purchase),销售(sell),其他费用(other)3个部分。当3个节点都被创建后,程序会自动触发计算利润,并创建利润(profit)节点。上面3个节点的创建,无顺序要求。每个节点只能被创建一次。

系统环境

- 2个独立的Hadoop集群

- 2个独立的Java应用

- 3个Zookeeper集群节点

图标解释:

- Hadoop App1,Hadoop App2 是2个独立的Hadoop集群应用

- Java App3,Java App4 是2个独立的Java应用

- zk1,zk2,zk3是ZooKeeper集群的3个连接点

- /queue,是znode的队列目录,假设队列长度为3

- /queue/purchase,是znode队列中,1号排对者,由Hadoop App1提交,用于统计采购金额。

- /queue/sell,是znode队列中,2号排对者,由Hadoop App2提交,用于统计销售金额。

- /queue/other,是znode队列中,3号排对者,由Java App3提交,用于统计其他费用支出金额。

- /queue/profit,当znode队列中满了,触发创建利润节点。

- 当/qeueu/profit被创建后,app4被启动,所有zk的连接通知同步程序(红色线),队列已完成,所有程序结束。

补充说明:

- 创建/queue/purchase,/queue/sell,/queue/other目录时,没有前后顺序,程序提交后,/queue目录下会生成对应该子目录

- App1可以通过zk2提交,App2也可通过zk3提交。原则上,找最近路由最近的znode节点提交。

- 每个应用不能重复提出,直到3个任务都提交,计算利润的任务才会被执行。

- /queue/profit被创建后,zk的应用会监听到这个事件,通知应用,队列已完成!

这里的同步队列的架构更详细的设计思路,请参考文章 ZooKeeper实现分布式队列Queue

4. 程序开发:基于Zookeeper的程序设计

最终的功能需求:计算2013年01月的利润。

4.1 实验环境

在真正企业开发时,我们的实验环境应该与需求是一致的,但我的硬件条件有限,因些做了一个简化的环境设置。

- 把zookeeper的完全分步式部署的3台服务器集群节点的,改为一台服务器上3个集群节点。

- 把2个独立Hadoop集群,改为一个集群的2个独立的MapReduce任务。

开发环境:

- Win7 64bit

- JDK 1.6

- Maven3

- Juno Service Release 2

- IP:192.168.1.10

Zookeeper服务器环境:

- Linux Ubuntu 12.04 LTS 64bit

- Java 1.6.0_29

- Zookeeper: 3.4.5

- IP: 192.168.1.201

- 3个集群节点

Hadoop服务器环境:

- Linux Ubuntu 12.04 LTS 64bit

- Java 1.6.0_29

- Hadoop: 1.0.3

- IP: 192.168.1.210

4.2 实验数据

3组实验数据:

- 采购数据,purchase.csv

- 销售数据,sell.csv

- 其他费用数据,other.csv

4.2.1 采购数据集

一共4列,分别对应 产品ID,产品数量,产品单价,采购日期。

1,26,1168,2013-01-082,49,779,2013-02-123,80,850,2013-02-054,69,1585,2013-01-265,88,1052,2013-01-136,84,2363,2013-01-197,64,1410,2013-01-128,53,910,2013-01-119,21,1661,2013-01-1910,53,2426,2013-02-1811,64,2022,2013-01-0712,36,2941,2013-01-2813,99,3819,2013-01-1914,64,2563,2013-02-1615,91,752,2013-02-0516,65,750,2013-02-0417,19,2426,2013-02-2318,19,724,2013-02-0519,87,137,2013-01-2520,86,2939,2013-01-1421,92,159,2013-01-2322,81,2331,2013-03-0123,88,998,2013-01-2024,38,102,2013-02-2225,32,4813,2013-01-1326,36,1671,2013-01-19//省略部分数据4.2.2 销售数据集

一共4列,分别对应 产品ID,销售数量,销售单价,销售日期。

1,14,1236,2013-01-142,19,808,2013-03-063,26,886,2013-02-234,23,1793,2013-02-095,27,1206,2013-01-216,27,2648,2013-01-307,22,1502,2013-01-198,20,1050,2013-01-189,13,1778,2013-01-3010,20,2718,2013-03-1411,22,2175,2013-01-1212,16,3284,2013-02-1213,30,4152,2013-01-3014,22,2770,2013-03-1115,28,778,2013-02-2316,22,874,2013-02-2217,12,2718,2013-03-2218,12,747,2013-02-2319,27,172,2013-02-0720,27,3282,2013-01-2221,28,224,2013-02-0522,26,2613,2013-03-3023,27,1147,2013-01-3124,16,141,2013-03-2025,15,5343,2013-01-2126,16,1887,2013-01-3027,12,2535,2013-01-1228,16,469,2013-01-0729,29,2395,2013-03-3030,17,1549,2013-01-3031,25,4173,2013-03-17//省略部分数据4.2.3 其他费用数据集

一共2列,分别对应 发生日期,发生金额

2013-01-02,5522013-01-03,10922013-01-04,17942013-01-05,4352013-01-06,9602013-01-07,10662013-01-08,13542013-01-09,8802013-01-10,19922013-01-11,9312013-01-12,12092013-01-13,14912013-01-14,8042013-01-15,4802013-01-16,18912013-01-17,1562013-01-18,14392013-01-19,10182013-01-20,15062013-01-21,12162013-01-22,20452013-01-23,4002013-01-24,17952013-01-25,19772013-01-26,10022013-01-27,2262013-01-28,12392013-01-29,7022013-01-30,1396//省略部分数据4.3 程序设计

我们要编写5个文件:

- 计算采购金额,Purchase.java

- 计算销售金额,Sell.java

- 计算其他费用金额,Other.java

- 计算利润,Profit.java

- Zookeeper的调度,ZookeeperJob.java

4.3.1 计算采购金额

采购金额,是基于Hadoop的MapReduce统计计算。

public class Purchase { public static final String HDFS = "hdfs://192.168.1.210:9000"; public static final Pattern DELIMITER = Pattern.compile("[\t,]"); public static class PurchaseMapper extends Mapper { private String month = "2013-01"; private Text k = new Text(month); private IntWritable v = new IntWritable(); private int money = 0; public void map(LongWritable key, Text values, Context context) throws IOException, InterruptedException { System.out.println(values.toString()); String[] tokens = DELIMITER.split(values.toString()); if (tokens[3].startsWith(month)) {// 1月的数据 money = Integer.parseInt(tokens[1]) * Integer.parseInt(tokens[2]);//单价*数量 v.set(money); context.write(k, v); } } } public static class PurchaseReducer extends Reducer { private IntWritable v = new IntWritable(); private int money = 0; @Override public void reduce(Text key, Iterable values, Context context) throws IOException, InterruptedException { for (IntWritable line : values) { // System.out.println(key.toString() + "\t" + line); money += line.get(); } v.set(money); context.write(null, v); System.out.println("Output:" + key + "," + money); } } public static void run(Map path) throws IOException, InterruptedException, ClassNotFoundException { JobConf conf = config(); String local_data = path.get("purchase"); String input = path.get("input"); String output = path.get("output"); // 初始化purchase HdfsDAO hdfs = new HdfsDAO(HDFS, conf); hdfs.rmr(input); hdfs.mkdirs(input); hdfs.copyFile(local_data, input); Job job = new Job(conf); job.setJarByClass(Purchase.class); job.setOutputKeyClass(Text.class); job.setOutputValueClass(IntWritable.class); job.setMapperClass(PurchaseMapper.class); job.setReducerClass(PurchaseReducer.class); job.setInputFormatClass(TextInputFormat.class); job.setOutputFormatClass(TextOutputFormat.class); FileInputFormat.setInputPaths(job, new Path(input)); FileOutputFormat.setOutputPath(job, new Path(output)); job.waitForCompletion(true); } public static JobConf config() {// Hadoop集群的远程配置信息 JobConf conf = new JobConf(Purchase.class); conf.setJobName("purchase"); conf.addResource("classpath:/hadoop/core-site.xml"); conf.addResource("classpath:/hadoop/hdfs-site.xml"); conf.addResource("classpath:/hadoop/mapred-site.xml"); return conf; } public static Map path(){ Map path = new HashMap(); path.put("purchase", "logfile/biz/purchase.csv");// 本地的数据文件 path.put("input", HDFS + "/user/hdfs/biz/purchase");// HDFS的目录 path.put("output", HDFS + "/user/hdfs/biz/purchase/output"); // 输出目录 return path; } public static void main(String[] args) throws Exception { run(path()); }}4.3.2 计算销售金额

销售金额,是基于Hadoop的MapReduce统计计算。

public class Sell { public static final String HDFS = "hdfs://192.168.1.210:9000"; public static final Pattern DELIMITER = Pattern.compile("[\t,]"); public static class SellMapper extends Mapper { private String month = "2013-01"; private Text k = new Text(month); private IntWritable v = new IntWritable(); private int money = 0; public void map(LongWritable key, Text values, Context context) throws IOException, InterruptedException { System.out.println(values.toString()); String[] tokens = DELIMITER.split(values.toString()); if (tokens[3].startsWith(month)) {// 1月的数据 money = Integer.parseInt(tokens[1]) * Integer.parseInt(tokens[2]);//单价*数量 v.set(money); context.write(k, v); } } } public static class SellReducer extends Reducer { private IntWritable v = new IntWritable(); private int money = 0; @Override public void reduce(Text key, Iterable values, Context context) throws IOException, InterruptedException { for (IntWritable line : values) { // System.out.println(key.toString() + "\t" + line); money += line.get(); } v.set(money); context.write(null, v); System.out.println("Output:" + key + "," + money); } } public static void run(Map path) throws IOException, InterruptedException, ClassNotFoundException { JobConf conf = config(); String local_data = path.get("sell"); String input = path.get("input"); String output = path.get("output"); // 初始化sell HdfsDAO hdfs = new HdfsDAO(HDFS, conf); hdfs.rmr(input); hdfs.mkdirs(input); hdfs.copyFile(local_data, input); Job job = new Job(conf); job.setJarByClass(Sell.class); job.setOutputKeyClass(Text.class); job.setOutputValueClass(IntWritable.class); job.setMapperClass(SellMapper.class); job.setReducerClass(SellReducer.class); job.setInputFormatClass(TextInputFormat.class); job.setOutputFormatClass(TextOutputFormat.class); FileInputFormat.setInputPaths(job, new Path(input)); FileOutputFormat.setOutputPath(job, new Path(output)); job.waitForCompletion(true); } public static JobConf config() {// Hadoop集群的远程配置信息 JobConf conf = new JobConf(Purchase.class); conf.setJobName("purchase"); conf.addResource("classpath:/hadoop/core-site.xml"); conf.addResource("classpath:/hadoop/hdfs-site.xml"); conf.addResource("classpath:/hadoop/mapred-site.xml"); return conf; } public static Map path(){ Map path = new HashMap(); path.put("sell", "logfile/biz/sell.csv");// 本地的数据文件 path.put("input", HDFS + "/user/hdfs/biz/sell");// HDFS的目录 path.put("output", HDFS + "/user/hdfs/biz/sell/output"); // 输出目录 return path; } public static void main(String[] args) throws Exception { run(path()); }}4.3.3 计算其他费用金额

其他费用金额,是基于本地文件的统计计算。

public class Other { public static String file = "logfile/biz/other.csv"; public static final Pattern DELIMITER = Pattern.compile("[\t,]"); private static String month = "2013-01"; public static void main(String[] args) throws IOException { calcOther(file); } public static int calcOther(String file) throws IOException { int money = 0; BufferedReader br = new BufferedReader(new FileReader(new File(file))); String s = null; while ((s = br.readLine()) != null) { // System.out.println(s); String[] tokens = DELIMITER.split(s); if (tokens[0].startsWith(month)) {// 1月的数据 money += Integer.parseInt(tokens[1]); } } br.close(); System.out.println("Output:" + month + "," + money); return money; }}4.3.4 计算利润

利润,通过zookeeper分步式自动调度计算利润。

public class Profit { public static void main(String[] args) throws Exception { profit(); } public static void profit() throws Exception { int sell = getSell(); int purchase = getPurchase(); int other = getOther(); int profit = sell - purchase - other; System.out.printf("profit = sell - purchase - other = %d - %d - %d = %d\n", sell, purchase, other, profit); } public static int getPurchase() throws Exception { HdfsDAO hdfs = new HdfsDAO(Purchase.HDFS, Purchase.config()); return Integer.parseInt(hdfs.cat(Purchase.path().get("output") + "/part-r-00000").trim()); } public static int getSell() throws Exception { HdfsDAO hdfs = new HdfsDAO(Sell.HDFS, Sell.config()); return Integer.parseInt(hdfs.cat(Sell.path().get("output") + "/part-r-00000").trim()); } public static int getOther() throws IOException { return Other.calcOther(Other.file); }}4.3.5 Zookeeper调度

调度,通过构建分步式队列系统,自动化程序代替人工操作。

public class ZooKeeperJob { final public static String QUEUE = "/queue"; final public static String PROFIT = "/queue/profit"; final public static String PURCHASE = "/queue/purchase"; final public static String SELL = "/queue/sell"; final public static String OTHER = "/queue/other"; public static void main(String[] args) throws Exception { if (args.length == 0) { System.out.println("Please start a task:"); } else { doAction(Integer.parseInt(args[0])); } } public static void doAction(int client) throws Exception { String host1 = "192.168.1.201:2181"; String host2 = "192.168.1.201:2182"; String host3 = "192.168.1.201:2183"; ZooKeeper zk = null; switch (client) { case 1: zk = connection(host1); initQueue(zk); doPurchase(zk); break; case 2: zk = connection(host2); initQueue(zk); doSell(zk); break; case 3: zk = connection(host3); initQueue(zk); doOther(zk); break; } } // 创建一个与服务器的连接 public static ZooKeeper connection(String host) throws IOException { ZooKeeper zk = new ZooKeeper(host, 60000, new Watcher() { // 监控所有被触发的事件 public void process(WatchedEvent event) { if (event.getType() == Event.EventType.NodeCreated && event.getPath().equals(PROFIT)) { System.out.println("Queue has Completed!!!"); } } }); return zk; } public static void initQueue(ZooKeeper zk) throws KeeperException, InterruptedException { System.out.println("WATCH => " + PROFIT); zk.exists(PROFIT, true); if (zk.exists(QUEUE, false) == null) { System.out.println("create " + QUEUE); zk.create(QUEUE, QUEUE.getBytes(), Ids.OPEN_ACL_UNSAFE, CreateMode.PERSISTENT); } else { System.out.println(QUEUE + " is exist!"); } } public static void doPurchase(ZooKeeper zk) throws Exception { if (zk.exists(PURCHASE, false) == null) { Purchase.run(Purchase.path()); System.out.println("create " + PURCHASE); zk.create(PURCHASE, PURCHASE.getBytes(), Ids.OPEN_ACL_UNSAFE, CreateMode.PERSISTENT); } else { System.out.println(PURCHASE + " is exist!"); } isCompleted(zk); } public static void doSell(ZooKeeper zk) throws Exception { if (zk.exists(SELL, false) == null) { Sell.run(Sell.path()); System.out.println("create " + SELL); zk.create(SELL, SELL.getBytes(), Ids.OPEN_ACL_UNSAFE, CreateMode.PERSISTENT); } else { System.out.println(SELL + " is exist!"); } isCompleted(zk); } public static void doOther(ZooKeeper zk) throws Exception { if (zk.exists(OTHER, false) == null) { Other.calcOther(Other.file); System.out.println("create " + OTHER); zk.create(OTHER, OTHER.getBytes(), Ids.OPEN_ACL_UNSAFE, CreateMode.PERSISTENT); } else { System.out.println(OTHER + " is exist!"); } isCompleted(zk); } public static void isCompleted(ZooKeeper zk) throws Exception { int size = 3; List children = zk.getChildren(QUEUE, true); int length = children.size(); System.out.println("Queue Complete:" + length + "/" + size); if (length >= size) { System.out.println("create " + PROFIT); Profit.profit(); zk.create(PROFIT, PROFIT.getBytes(), Ids.OPEN_ACL_UNSAFE, CreateMode.EPHEMERAL); for (String child : children) {// 清空节点 zk.delete(QUEUE + "/" + child, -1); } } }}5. 运行程序

最后,我们运行整个的程序,包括3个部分。

- zookeeper服务器

- hadoop服务器

- 分步式队列应用

5.1 启动zookeeper服务

启动zookeeper服务器集群:

~ cd toolkit/zookeeper345# 启动zk集群3个节点~ bin/zkServer.sh start conf/zk1.cfg~ bin/zkServer.sh start conf/zk2.cfg~ bin/zkServer.sh start conf/zk3.cfg~ jps4234 QuorumPeerMain5002 Jps4275 QuorumPeerMain4207 QuorumPeerMain查看zookeeper集群中,各节点的状态

# 查看zk1节点状态~ bin/zkServer.sh status conf/zk1.cfgJMX enabled by defaultUsing config: conf/zk1.cfgMode: follower# 查看zk2节点状态,zk2为leader~ bin/zkServer.sh status conf/zk2.cfgJMX enabled by defaultUsing config: conf/zk2.cfgMode: leader# 查看zk3节点状态~ bin/zkServer.sh status conf/zk3.cfgJMX enabled by defaultUsing config: conf/zk3.cfgMode: follower启动zookeeper客户端:

~ bin/zkCli.sh -server 192.168.1.201:2181# 查看zk[zk: 192.168.1.201:2181(CONNECTED) 0] ls /[queue, queue-fifo, zookeeper]# /queue路径无子目录[zk: 192.168.1.201:2181(CONNECTED) 1] ls /queue[]5.2 启动Hadoop服务

~ hadoop/hadoop-1.0.3~ bin/start-all.sh~ jps25979 JobTracker26257 TaskTracker25576 DataNode25300 NameNode12116 Jps25875 SecondaryNameNode5.3 启动分步式队列ZookeeperJob

5.3.1 启动统计采购数据程序,设置启动参数1

只显示用户日志,忽略系统日志。

WATCH => /queue/profit/queue is exist!Delete: hdfs://192.168.1.210:9000/user/hdfs/biz/purchaseCreate: hdfs://192.168.1.210:9000/user/hdfs/biz/purchasecopy from: logfile/biz/purchase.csv to hdfs://192.168.1.210:9000/user/hdfs/biz/purchaseOutput:2013-01,9609887create /queue/purchaseQueue Complete:1/3在zk中查看queue目录

[zk: 192.168.1.201:2181(CONNECTED) 3] ls /queue[purchase]5.3.2 启动统计销售数据程序,设置启动参数2

只显示用户日志,忽略系统日志。

WATCH => /queue/profit/queue is exist!Delete: hdfs://192.168.1.210:9000/user/hdfs/biz/sellCreate: hdfs://192.168.1.210:9000/user/hdfs/biz/sellcopy from: logfile/biz/sell.csv to hdfs://192.168.1.210:9000/user/hdfs/biz/sellOutput:2013-01,2950315create /queue/sellQueue Complete:2/3在zk中查看queue目录

[zk: 192.168.1.201:2181(CONNECTED) 5] ls /queue[purchase, sell]5.3.3 启动统计其他费用数据程序,设置启动参数3

只显示用户日志,忽略系统日志。

WATCH => /queue/profit/queue is exist!Output:2013-01,34193create /queue/otherQueue Complete:3/3create /queue/profitcat: hdfs://192.168.1.210:9000/user/hdfs/biz/sell/output/part-r-000002950315cat: hdfs://192.168.1.210:9000/user/hdfs/biz/purchase/output/part-r-000009609887Output:2013-01,34193profit = sell - purchase - other = 2950315 - 9609887 - 34193 = -6693765Queue has Completed!!!在zk中查看queue目录

[zk: 192.168.1.201:2181(CONNECTED) 6] ls /queue[profit]在最后一步,统计其他费用数据程序运行后,从日志中看到3个条件节点都已满足要求。然后,通过同步的分步式队列自动启动了计算利润的程序,并在日志中打印了2013年1月的利润为-6693765。

本文介绍的源代码,已上传到github: https://github.com/bsspirit/maven_hadoop_template/tree/master/src/main/java/org/conan/myzk/hadoop

通过这个复杂的实验,我们成功地用zookeeper实现了分步式队列,并应用到了业务中。当然,实验中也有一些不是特别的严谨的地方,请同学边做边思考。

转载请注明出处:

http://blog.fens.me/hadoop-zookeeper-case/

- 基于Zookeeper的分步式队列系统集成案例

- 基于Zookeeper的分步式队列系统集成案例

- 基于Zookeeper的分步式队列系统集成案例

- 基于Zookeeper的分步式队列系统集成案例

- 基于Zookeeper的分步式队列系统集成案例

- 基于Zookeeper的分步式队列系统集成案例

- 基于Zookeeper的分步式队列系统集成案例

- 基于Zookeeper的分步式队列系统集成案例

- C# 关于Zookeeper的分步式锁

- 基于ZooKeeper的分布式锁和队列

- 基于ZooKeeper的分布式锁和队列

- 基于ZooKeeper的分布式锁和队列

- 基于ZooKeeper的分布式锁和队列

- 基于ZooKeeper的分布式锁和队列

- 基于SCM的企业信息系统集成

- 【转】基于ZooKeeper的分布式锁和队列

- Mahout分步式程序开发 基于物品的协同过滤ItemCF

- 基于C#分步式聊天系统的在线视频直播系统设计

- linux挂载和卸载移动设备

- 解决Android 4.0以上版本中OptionsMenu菜单不显示ICON图标的问题

- LeetCode刷题笔录Covert Sorted List to Binary Search Tree

- 修改linux ssh远程端口的几种办法

- Java中的ReentrantLock和synchronized两种锁定机制的对比

- 基于Zookeeper的分步式队列系统集成案例

- Appserver配置zend debugger

- 暑期个人赛--第八场--D

- POJ 3260 The Fewest Coins (多重背包 + 完全背包)

- 基于RPM的Linux安装open office

- unexpected inconsistency

- 思科网络技术学院教程学习——客户端-服务器交互实验

- centos 6.4 安装wps

- PHP上传大文件的设置方法