From Waterfall to Agile: The Evolution of Microsoft's Development Model

Source: Internet | Posted: emui 知乎 | Editor: 程序博客网 | Time: 2024/05/16 01:20

Original article: How Microsoft dragged its development practices into the 21st century


In the Web era of development, Waterfalls are finally out. Agile is in.

SEATTLE—For the longest time, Microsoft had something of a poor reputation as a software developer. The issue wasn't so much the quality of the company's software but the way it was developed and delivered. The company's traditional model involved cranking out a new major version of Office, Windows, SQL Server, Exchange, and so on every three or so years.

The releases may have been infrequent, but delays, or at least perceived delays, were not. Microsoft's reputation in this regard never quite matched the reality (the company tended to avoid announcing an official ship date until it knew it would hit that date), but leaks, assumptions, and speculation were routine. Windows 95 was late. Windows 2000 was late. Windows Vista was very late and only came out after the original software was scrapped.

In spite of this, Microsoft became tremendously successful. After all, many of its competitors worked in more or less the same way, releasing paid software upgrades every few years. Microsoft didn't do anything particularly different. Even the delays weren't that unusual, with both Microsoft's competitors and all manner of custom software development projects suffering the same.

There's no singular cause for these periodic releases and the delays that they suffered. Software development is a complex and surprisingly poorly understood business; there's no one "right way" to develop and manage a project. That is, there's no reliable process or methodology that will ensure a competent team can actually produce working, correct software on time or on budget. A system that works well with one team or on one project can easily fail when used on a different team or project.

Nonetheless, computer scientists, software engineers, and developers have tried to formalize and describe different processes for building software. The process historically associated with Microsoft—and the process most known for these long development cycles and their delays—is known as the waterfall process.

The basic premise is that progress goes one way. The requirements for a piece of software are gathered, then the software is designed, then the design is implemented, then the implementation is tested and verified, and then, once it has shipped, it goes into maintenance mode.
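To make the one-way flow concrete, here is a toy Python model of the five phases just listed. The `Phase` enum and the `can_move` and `run_waterfall` functions are hypothetical names for illustration; the model encodes only the rule stated above, that each phase hands off to the next and nothing flows back upstream.

```python
from enum import IntEnum


class Phase(IntEnum):
    """The waterfall phases, in the only order this model allows."""
    REQUIREMENTS = 1
    DESIGN = 2
    IMPLEMENTATION = 3
    VERIFICATION = 4
    MAINTENANCE = 5


def can_move(current: Phase, target: Phase) -> bool:
    """Progress flows one way: only the immediately following phase is reachable."""
    return target == current + 1


def run_waterfall() -> list[Phase]:
    """Walk the phases from requirements to maintenance, never going back."""
    history = [Phase.REQUIREMENTS]
    while history[-1] is not Phase.MAINTENANCE:
        history.append(Phase(history[-1] + 1))
    return history
```

In this model, a design flaw discovered during `VERIFICATION` has no legal route back to `DESIGN`, which is precisely the weakness the criticism of the process centers on.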

The wretched waterfall

The waterfall process has always been regarded with suspicion. Even when first named and described in the 1970s, it was not regarded as an ideal process that organizations should aspire to. Rather, it was a description of a process that organizations used but which had a number of flaws that made it unsuitable to most development tasks.

It has, however, persisted. It's still being commonly used today because it has a kind of intuitive appeal. In industries such as manufacturing and construction, design must be done up front because things like cars and buildings are extremely hard to change once they've been built. In these fields, it's imperative to get the design as correct as possible right from the start. It's the only way to avoid the costs of recalling vehicles or tearing down buildings.

Software is cheaper and easier to change than buildings are, but it's still much more effective to write the right software first than it is to build something and then change it later. In spite of this, the waterfall process is widely criticized. Perhaps the biggest problem is that, unlike cars and buildings, we generally have a very poor understanding of software. While some programs—flight control software, say—have very tight requirements and strict parameters, most are more fluid.

For example, lots of companies develop in-house applications to automate various business processes. In the course of developing these applications, it's often discovered that the old process just isn't that great. Developers will discover that there are redundant steps, or that two processes should be merged into one, or that one should be split into two. Electronic forms that mirror paper forms in their layout and sequence can provide familiarity, but it's often the case that rearranging the forms can be more logical. Processes that were thought to be understood and performed by the book can be found to work a little differently in practice.

Often, these things are only discovered after the development process has begun, either during development or even after deployment to end users.

This presents a great problem when attempting to do all the design work up front. The design can be perfectly well-intentioned, but if the design is wrong or needs to be changed in response to user feedback, or if it turns out not to be solving the problem that people were hoping it would solve (and this is extremely common), the project is doomed to fail. Waiting until the end of the waterfall to discover these problems means pouring a lot of time and money into something that isn't right.

Waterfalls in action: Developing Visual Studio

Microsoft didn't practice waterfall in the purest sense; its software development process was slightly iterative. But it was very waterfall-like.

A good example of how this worked comes from the Visual Studio team. For the last few years, Visual Studio has been on a somewhat quicker release cycle than Windows and Office. Major releases come every two or so years rather than every three.

This two-year cycle was broken into a number of stages. At the start there would be four to six months of planning and design work. The goal was to figure out what features the team wanted to add to the product and how to add them. Next came six to eight weeks of actual coding, after which the project would be "code complete," followed by a four-month "stabilization" cycle of testing and debugging.

During this stage, the test team would file hundreds upon hundreds of bugs, and the developers would have to go through and fix as many as they could. No new development occurred during stabilization, only debugging and bug fixing.

At the conclusion of this stabilization phase, a public beta would be produced. There would then be a second six- to eight-week cycle of development, followed by another four months of stabilization. From this, the finished product would emerge.

With a few more weeks for managing the transitions between the phases of development, some extra time for last-minute fixes to both the beta and the final build, and a few weeks to recover between versions, the result was a two-year development process in which only about four months would be spent writing new code. Twice as long would be spent fixing that code.
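The arithmetic behind that two-year figure can be checked with a short tally. This sketch assumes the ranges given above, converts months to weeks at an average of 52/12 weeks per month, and leaves out the unquantified transition, fix-up, and recovery time; `PHASES` and `months_spent` are illustrative names.

```python
WEEKS_PER_MONTH = 52 / 12  # assumption: an average month, used only for unit conversion

# (phase kind, low estimate, high estimate), in weeks, from the ranges described above.
PHASES = [
    ("planning", 4 * WEEKS_PER_MONTH, 6 * WEEKS_PER_MONTH),
    ("coding", 6, 8),
    ("stabilization", 4 * WEEKS_PER_MONTH, 4 * WEEKS_PER_MONTH),
    ("coding", 6, 8),
    ("stabilization", 4 * WEEKS_PER_MONTH, 4 * WEEKS_PER_MONTH),
]


def months_spent(kind: str) -> tuple[float, float]:
    """Total (low, high) months across every phase of the given kind."""
    low = sum(lo for name, lo, _ in PHASES if name == kind)
    high = sum(hi for name, _, hi in PHASES if name == kind)
    return low / WEEKS_PER_MONTH, high / WEEKS_PER_MONTH


for kind in ("planning", "coding", "stabilization"):
    low, high = months_spent(kind)
    print(f"{kind:>13}: {low:.1f} to {high:.1f} months")
```

Run as written, it reports about 2.8 to 3.7 months of coding against 8 months of stabilization, so "about four months" of new code and "twice as long" fixing it are fair round numbers. The named phases add up to roughly 15 to 18 months; the rest of the two years is the transition and recovery time mentioned above.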

Microsoft's organizational structure tends to reflect this development approach. The company has three relevant roles: the program manager (PM), responsible for specifying and designing features; the developer, responsible for building them; and QA, responsible for making sure the features do what they're supposed to. The three roles have parallel management structures (PMs reporting to PMs, and so on).

Grim, but more or less effective

This process was far from ideal. Consider, for example, what happened when a Visual Studio user installed the beta and a month later found and reported a bug. It was probably too late for anything to be done about it for that release. By the time the bug had been filed and validated, the development process was nearing completion. If the bug was serious enough, it could be addressed in the stabilization phase, but in most cases it would be left unfixed, perhaps for inclusion in the next version.

If bugs slipped through to the release version, they risked not being scheduled for a fix for years unless they were reported very quickly—within the lengthy planning and design stage. Telling customers that their issue would be fixed in three years doesn't make for a healthy relationship.

Bugs weren't the only issue with this kind of process. For example, after years of stagnation, the C++ specification is now being regularly updated with a mix of updates to the main spec itself and smaller specs called Technical Specifications (TS). (TSes add new capabilities in more focused ways; for example, there are TSes in development for networking, filesystem access, parallel programming, and so on.) Over the next couple of years, eight or more of these TSes should be finalized.

Their release isn't synchronized in any way with Microsoft's development cycle. For the two-year product cycle to be able to easily incorporate specs like these, the spec has to be reasonably complete, if not entirely finished, in time for the planning stage. If it misses that planning stage, it's too late for a particular product cycle and has to wait 18 to 24 months for the next planning stage, and then another 24 months for the next release.
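The cost of missing the planning window can be modeled in a few lines. This is a simplified sketch under stated assumptions: a strict 24-month cycle, a planning window covering its first six months, and a release at the end of each cycle. `months_until_shipped` is a hypothetical helper, not anything from Microsoft's tooling.

```python
import math

CYCLE_MONTHS = 24     # assumption: a strict two-year product cycle
PLANNING_MONTHS = 6   # assumption: the upper end of the planning stage


def months_until_shipped(spec_ready: float) -> float:
    """Months between a spec being ready and the first release that includes it.

    Cycle k starts at month k * CYCLE_MONTHS, spends its first
    PLANNING_MONTHS planning, and ships when cycle k + 1 begins.
    """
    k = math.floor(spec_ready / CYCLE_MONTHS)
    if spec_ready - k * CYCLE_MONTHS > PLANNING_MONTHS:
        k += 1  # missed this cycle's planning window; wait for the next one
    release = (k + 1) * CYCLE_MONTHS
    return release - spec_ready
```

`months_until_shipped(6)` gives 18, since a spec that is ready just as planning closes still makes the current release. `months_until_shipped(7)` gives 41: 17 months waiting for the next planning stage plus 24 months until that cycle ships.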

The entire development approach left Visual Studio doomed to be perennially out of date and behind the times.

In addition to making the Visual Studio team enormously unresponsive, this development approach wasn't much good for team morale. A feature developed during the first development phase wouldn't properly get into customer hands for the better part of 18 months. For Windows and Office, with their three-year cycles, the effect is even worse. A developer could be waiting more than two years before the work they did would ever make it to end users. Most developers actually want their software to be used; it's just not that satisfying to know that you've implemented some great new feature that nobody will actually use for years.

With Microsoft's long development cycles, a developer may not even be on the same team—and may not even be at the company—by the time their code makes it to desktops.

The development structure has a similar effect. Spending just four months writing new code and the rest of the time working through a vast backlog of bugs isn't any developer's dream.

And then the world changed

For all these problems and challenges, Microsoft has nonetheless been tremendously successful as a software developer. Before the rise of the World Wide Web, these infrequent releases, troublesome as they may have been, actually made a lot of sense. In those days, distributing software meant shipping out a bunch of floppy disks or CDs and enduring a tedious installation process. Limiting this process such that you only had to suffer through it every few years was not a bad thing, and the waterfall process, or some close approximation to it, was a software industry norm.

However, it wasn't the only development process around, and the rise of the Web has pushed other approaches to the foreground. Web applications change the software delivery model in some important ways. Most significantly, they take "installation" out of the equation. While the application developer does have to deploy updates to servers, end users don't have to take any action. They just visit a site and discover that its appearance or functionality has changed since their last visit.

This also makes some things that are tricky or annoying to do with traditional software very easy. A Web application can roll out new features or designs to a minority of users to gauge the response and verify that they work and then either roll back the feature or give it to everyone, depending on the reaction. A/B testing to compare the efficacy of competing designs is also straightforward. As a result, it's a lot easier for Web developers to quickly collect usage data and make decisions based on that data.
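One common way to implement a staged rollout or an A/B split is to hash each user's ID into a stable bucket, so that the same user always sees the same variant. The sketch below uses SHA-256 from Python's standard `hashlib`; the function names, the 100-bucket granularity, and the `feature:user` key format are assumptions for illustration, and the article does not say how any particular site does this.

```python
import hashlib


def bucket(user_id: str, feature: str, buckets: int = 100) -> int:
    """Map a user to a stable bucket in [0, buckets) for one feature or experiment."""
    key = f"{feature}:{user_id}".encode("utf-8")
    digest = hashlib.sha256(key).digest()
    return int.from_bytes(digest[:8], "big") % buckets


def in_rollout(user_id: str, feature: str, percent: int) -> bool:
    """True if the user is among the `percent`% who get the new feature."""
    return bucket(user_id, feature) < percent


def ab_variant(user_id: str, experiment: str) -> str:
    """Split users evenly between two competing designs, "A" and "B"."""
    return "A" if bucket(user_id, experiment, buckets=2) == 0 else "B"
```

Because buckets are fixed per user and feature, raising `percent` from 5 to 50 only ever adds users to the rollout, and rolling back is a matter of setting it to 0.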

Web applications also changed the prevailing pricing model. Instead of buying a perpetual license every three years, users of Web applications either pay nothing (because the application is ad funded) or pay an ongoing subscription. While shrinkwrap software tends to "need" substantial differences between versions in order to justify each paid upgrade, Web applications have no corresponding incentive to ship only major updates. Incremental updates and piecemeal improvement are just as effective.

These features of the Web make the waterfall process particularly inappropriate. Fortunately, the waterfall process isn't the only one that's out there these days. As the Web rose, so too did Web-suitable development processes.

Agile development for a changing world

The adjective that's used to describe these processes is "agile." There are many different agile development processes, but all retain certain common features. Fundamentally, agile processes are designed around short development cycles, iterative improvement, and the ability to easily respond to change.

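Iterative development processes of one kind or another have been around for almost as long as software development itself. The kinds of practices that have come to be known as "agile" proliferated in the 1990s, and the "agile" label was codified in 2001 when a group of developers published the "Agile Manifesto," which reads:

We are uncovering better ways of developing software by doing it and helping others do it. Through this work we have come to value:

- Individuals and interactions over processes and tools
- Working software over comprehensive documentation
- Customer collaboration over contract negotiation
- Responding to change over following a plan

That is, while there is value in the items on the right, we value the items on the left more.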

Similarly, stacks of documentation are reassuring. Documentation and specifications both tend to feel concrete in a way that, to non-developers, software often does not. How can a customer know that a software company has written the software it asked for if there is no documentation to prove it?

With agile processes, these specs and docs are deprioritized, if not abandoned altogether. Customers or users tend to be regularly involved with the development process, using the software as it is incrementally developed and delivered and providing regular feedback.

The advantages of agile development contrast neatly with the disadvantages of waterfall development. Practices vary, but a development cycle in an agile team will typically be in the range of two to four weeks. While development teams can still have a longer outlook—things they'd like to do in the next six months, year, perhaps even longer—the planning and specific development priorities are handled at a much smaller scale, and this makes them much easier to change.

The result of each of these short iterations should be something that is more or less usable. Though major features will likely need to be divided into multiple iterations, each incremental version of the software should nonetheless be usable. This in turn makes it much easier for feedback to be delivered. Perhaps the layout of a screen is awkward; perhaps the feature doesn't quite do what people expected it to do; perhaps there are bugs. In any case, testers and users alike can react to what they see and have before them and tell the developers accordingly.

The short timescales in turn enable the developers to actually respond to these demands.

Agile at Microsoft: Visual Studio

Perhaps unsurprisingly, the Visual Studio team was among the first at Microsoft to adopt an agile approach. Even as Microsoft stuck to its waterfalls internally, Visual Studio was used by third-party developers who themselves wanted to use agile methods, creating pressure on the Developer Division to build better support for these approaches. Further, these developers were often looking at or switching to other development tools, ones that were updated more frequently and hence able to deliver bug fixes and new features more regularly.

The first version of Visual Studio to provide some meaningful support for agile development was Visual Studio 2010, released in April 2010 and developed over the two previous years. However, the team rapidly got feedback that some of its templates intended for agile developers just weren't useful. They were too generic and not directly useful to anyone.

The Developer Division was simultaneously making its first steps toward providing an online, hosted version of its Team Foundation Server (TFS), called Team Foundation Service (also, infuriatingly, TFS). This cloud service was the first time that division had a good chance to break away from the two- to three-year release cycle, instead moving to a more rapid, iterative process.

These two things—delivering something to customers that was unfit for its purpose and delivering a new online service where rapid iteration is both possible and expected—prompted the team to look at new ways of developing its software.

This move wasn't driven from the top. Within Microsoft, it was up to each team to figure out the best approach to developing software, and the Visual Studio team realized it needed to change. They settled on an agile process called scrum. Microsoft's Aaron Bjork, Principal Group Program Manager on TFS, told Ars about how this was done.

Scrum teams are multidisciplinary groups of around a dozen people, encompassing development, testing, design, and analysis roles. Their development work is divided into short, fixed-length iterations called sprints. The length of a sprint is up to a given team, but generally they're between one and four weeks. Daily meetings are held to report on progress, and these are "stand up" meetings where everyone stands, to ensure that they're kept short. One member of the team, the product owner, represents the customer. The product owner writes user stories that describe a task that a user of the software wants to perform. These stories are then prioritized and added to the backlog of work to do.

The short sprints give teams the flexibility to respond quickly to new customer demands. Scrum has a principle that after each sprint, the software should be compilable and usable, which enables rapid deployment and feedback.

Visual Studio's scrum teams take members from each of the three Microsoft roles: a program manager (who becomes the product owner) and some mix of developers with a dev lead, and QA with a QA lead. Each individual team manages its own backlog. At the start of a sprint, a few items from the backlog are chosen according to their priority, and at the end of each sprint, those items should be complete, with a working, tested implementation.

That's straightforward enough, though it required plenty of adjustment. Bjork told us that initially, teams didn't truly get on board with the sprint concept. They tried having five back-to-back "development" sprints, followed by two "stabilization" sprints. While paying lip service to "agile" with its talk of "sprints," this was essentially the same as the old waterfall process. It suffered the same problems.

Education and discipline solved this issue; teams recognized the importance of building bug fixing and quality work into the sprints rather than treating them as some "other" task as they had done in the past. Fixing bugs and ensuring that the product is always of a shippable quality needed to be integrated into the regular development process.
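The backlog mechanics described above, together with the rule that only working, tested work counts as finished, can be sketched as a small data model. `UserStory`, `ScrumTeam`, the point estimates, and the capacity figure are hypothetical; real scrum teams plan through conversation and judgment rather than a loop, so treat this as an illustration of the flow, not a prescription.

```python
from dataclasses import dataclass, field


@dataclass
class UserStory:
    title: str     # a task a user wants to perform, written by the product owner
    priority: int  # assumed convention: 1 is the most important
    estimate: int  # effort in hypothetical "points"
    done: bool = False


@dataclass
class ScrumTeam:
    backlog: list[UserStory] = field(default_factory=list)

    def plan_sprint(self, capacity: int) -> list[UserStory]:
        """Take stories strictly in priority order until the next one won't fit."""
        chosen, used = [], 0
        for story in sorted(self.backlog, key=lambda s: s.priority):
            if used + story.estimate > capacity:
                break
            chosen.append(story)
            used += story.estimate
        return chosen

    def close_sprint(self, sprint: list[UserStory], tested: set[str]) -> list[UserStory]:
        """A story is done only with a working, tested implementation;
        unfinished stories simply stay in the backlog."""
        for story in sprint:
            story.done = story.title in tested
        self.backlog = [s for s in self.backlog if not s.done]
        return [s for s in sprint if s.done]
```

Anything that is not tested by the end of the sprint stays in the backlog and competes on priority in the next sprint, rather than piling up for a separate stabilization phase.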

Even little things like the sprint length had to be learned from trial and error. The Visual Studio team settled on three-week sprints, as for them that was the Goldilocks length.

The problem of scale

However, these were just teething difficulties. The real complexity for Microsoft is scale. Many of these methodologies are built for small teams. This is unsurprising; most in-house development teams at non-software companies just aren't that big. But Microsoft is a software company. It has thousands of developers (some 3,000 in DevDiv alone) and hundreds of teams. Learning how to be agile while remaining coordinated across an organization of this scale required experimentation.

For this, the team has a set of different time outlooks. Beyond the sprint, there's a six-month "season" and an 18-month "vision." As the time window grows, the certainty of each outlook diminishes. A team has a very clear understanding of what it will do and what it will deliver in the next sprint. The season is a little more flexible: it will generally get the big priorities right, but its details can change. The vision is more malleable still. It could be disrupted by the next "Internet" or "smartphone" revolution, but it still sets the broad goals and direction for the organization.

To keep track of all these things, every three sprints the teams talk directly to the PM, dev, and QA leadership. This reporting doesn't go through the (still extant) PM, dev, and QA hierarchies; instead, each team reports directly, in person. The leadership doesn't care about the specific details of each team's backlog, but it does care about their overall direction, priorities, and any problems they may be having. This communication provides the opportunity to coordinate between teams.

In addition, at the start and end of each sprint, teams send division-wide e-mails to say what they're planning to do and what they have done. This communication makes it easier for interested third parties to get a sense of what other teams are up to.
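The nested outlooks and the review cadence reduce to simple arithmetic. This sketch assumes the three-week sprint the Visual Studio team settled on and an average month of 52/12 weeks; `Horizon`, `sprints_in`, and the one-line summaries of what each outlook commits to are illustrative paraphrases of the text above.

```python
from dataclasses import dataclass

SPRINT_WEEKS = 3            # the Visual Studio team's sprint length
WEEKS_PER_MONTH = 52 / 12   # assumption: an average month
REVIEW_EVERY_SPRINTS = 3    # in-person check-ins with PM, dev, and QA leadership


@dataclass(frozen=True)
class Horizon:
    name: str
    months: float
    holds: str  # what the plan commits to at this distance


HORIZONS = [
    Horizon("sprint", SPRINT_WEEKS / WEEKS_PER_MONTH, "specific, tested backlog items"),
    Horizon("season", 6, "the big priorities; details may move"),
    Horizon("vision", 18, "broad goals and direction"),
]


def sprints_in(h: Horizon) -> float:
    """How many three-week sprints fit into a horizon."""
    return h.months * WEEKS_PER_MONTH / SPRINT_WEEKS


for h in HORIZONS:
    sprints = sprints_in(h)
    reviews = sprints / REVIEW_EVERY_SPRINTS
    print(f"{h.name}: ~{sprints:.1f} sprints, ~{reviews:.1f} leadership reviews; {h.holds}")
```

It reports roughly 8.7 sprints (about three leadership reviews) per season and 26 sprints per vision, which shows how many chances a team gets to change course before the longer outlooks come due.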

Perhaps one of the most important changes the Visual Studio team made was to its office environment. Microsoft traditionally gave each developer a private office, and there was a hierarchy of sorts. At low levels, you'd start with an interior office with no windows (we've seen a few of these, and they're strikingly grim and depressing). Move up through the company and you'd get a window office; move further still and you'd get a corner office.

There is a lot to be said for private offices. Development work can be complex, and distractions during complex tasks are highly unwelcome; by at least some measures, open-plan offices are less productive as a result. But private offices also make collaboration a lot harder. Under the old system, there was no guarantee that a PM would sit near the devs he or she worked with, and the same went for the testers. Informal face-to-face discussions meant traipsing through corridors and up and down stairs (or even moving between buildings) to get the people who worked together into the same place.

For agile development, this is a huge hurdle. Online collaboration is good, but in-person collaboration is a lot better. In person, simple cues, like noticing that a developer has headphones on and so doesn't want to be disturbed, are effective. That's much harder to see online.

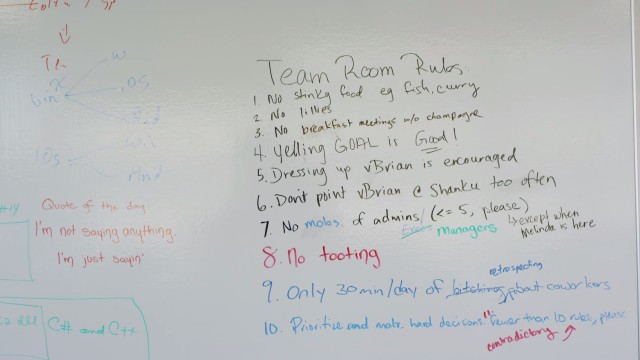

Bjork showed us the Visual Studio team's new office space, and it's very different. Instead of private offices, there are large team rooms that house every member of a team in a few clusters of open-plan desks. The team rooms have small private phone cubicles and meeting spaces, enabling discussions to take place without disturbing others. All have windows. He said that for a lot of developers, giving up the private offices—and one's place in the pecking order for the much-desired corner offices—was scary. But overall, the new environment has been a success. It enables much more ad hoc, informal discussion, making it much easier for PMs, devs, and QA to have the same shared understanding of what they're actually trying to achieve.

The result? Regular releases, regular updates

With the new process in place, the Visual Studio team has been able to build better software and deliver it more frequently. It's now approaching its 70th successful three-week sprint. TFService, now rolled into a broader suite called Visual Studio Online, has a service update every three weeks, putting new capabilities and features into users' hands on a continuous basis. On-premises software hasn't been forgotten either. The sprint-based updates are rolled into quarterly updates for the on-premises TFS.

Visual Studio has similarly received a range of updates on a more or less quarterly basis. No longer is it the case that developers have to wait years at a time for better standards conformance; they get to see, and use, the progress several times each year.
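Rolling three-week sprint updates into quarterly on-premises releases amounts to grouping sprint end dates by calendar quarter. This is a sketch under assumptions: the start date is hypothetical, sprints run back to back with no gaps, and each update is assigned to the quarter in which its sprint ends.

```python
from collections import defaultdict
from datetime import date, timedelta

SPRINT = timedelta(weeks=3)  # one service update per three-week sprint


def sprint_end_dates(start: date, count: int) -> list[date]:
    """The date on which each of `count` back-to-back sprints ends."""
    return [start + SPRINT * (i + 1) for i in range(count)]


def quarter_of(d: date) -> tuple[int, int]:
    """(year, quarter number 1-4) for a calendar date."""
    return d.year, (d.month - 1) // 3 + 1


def roll_up_by_quarter(ends: list[date]) -> dict[tuple[int, int], list[int]]:
    """Group sprint numbers by the calendar quarter in which each sprint ends."""
    quarters: dict[tuple[int, int], list[int]] = defaultdict(list)
    for number, end in enumerate(ends, start=1):
        quarters[quarter_of(end)].append(number)
    return dict(quarters)
```

`roll_up_by_quarter(sprint_end_dates(date(2014, 1, 6), 5))` returns `{(2014, 1): [1, 2, 3, 4], (2014, 2): [5]}`. At this cadence, 70 sprints span 210 weeks, a little over four years.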

The need for regular iterations has also driven improvements to some unsexy parts of the software. The setup and upgrade process for Visual Studio was annoying and complicated, with a large testing matrix to handle upgrades from various different versions. With an iteration every three weeks, this situation was set to become intractable. Because setup and upgrades are done every sprint, the system needed to be more robust and easier to test and manage. The agile process motivated that work, and the result should be that upgrading end-user machines is less disruptive and less prone to failure.
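Why would frequent releases make the upgrade matrix intractable? If every release must support upgrades from every earlier one, the number of (from, to) pairs grows quadratically. The sketch below counts them; the release names are hypothetical, and the windowed policy is shown only as one illustrative way to bound the matrix, not as what the Visual Studio team actually did.

```python
def upgrade_paths(versions: list[str]) -> list[tuple[str, str]]:
    """Every (from, to) pair in which an older release upgrades to a newer one."""
    return [(old, new) for i, new in enumerate(versions) for old in versions[:i]]


def upgrade_paths_window(versions: list[str], window: int) -> list[tuple[str, str]]:
    """Only upgrades from the previous `window` releases are supported and tested."""
    return [
        (old, new)
        for i, new in enumerate(versions)
        for old in versions[max(0, i - window):i]
    ]


releases = [f"r{n}" for n in range(1, 18)]  # ~17 three-week releases in a year
print(len(upgrade_paths(releases)), len(upgrade_paths_window(releases, 2)))
```

For 17 releases (roughly a year of three-week sprints), the full matrix has 136 upgrade paths, while supporting upgrades only from the previous two releases leaves 31.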

The integration of testing and QA into the regular development process, instead of the old test and stabilization phase, means that the code quality is always decent and always shippable. This keeps developers happy, as they're no longer faced with month upon month of endless bug fixing.

Beyond Visual Studio

When DevDiv went down the agile path, it was doing so on its own. Other teams, including Skype/Lync and Microsoft Studios Shared Services, have gone down similar paths for similar reasons. Sometimes they've done things differently from Visual Studio—Skype, for example, uses two-week sprints—but the overall approach, including the need to scale up processes designed for small teams, has been very similar.

Lately we've heard from within the company that there is now pressure from the top to adopt these kinds of practices and become agile as an organization.

Company-wide, there has been a new approach to source code and its management. Microsoft is traditionally viewed as a series of fiefdoms, with each team jealously guarding its own work and not sharing with others. As such, people in one team have historically had little access to other teams; they couldn't see what they were working on or the source code they were producing.

When code did need to be shared—for example, the Xbox team's fork of Windows, or the Azure team's fork of Hyper-V—the sharing was a one-off thing. The new team would take the old team's source code and then develop in parallel. This obviously introduces long-term maintenance problems; the maintainer of each fork needs to somehow incorporate important changes from the main version of the code, which can require complex integration work.

This is changing.

Parallel development, for example, is now frowned upon. While a fork may be appropriate in the short term (the Azure team had particular scaling and management needs that Windows Server 2008's Hyper-V didn't address, for example), the long-term goal is to ensure that all these modifications are folded back into the main codebase. Nowadays, we're told that the Azure Hyper-V is identical to Windows Server 2012's Hyper-V. Xbox One similarly needed modifications to support its usage scenarios, but we're told that these too are being folded back into the main Windows codebase.

Amusingly, we've heard this described by several within the company as "open source." It isn't, of course, but the terminology is striking. That this greater collaboration is viewed as being "open source" highlights in many ways how insular the divisions within the company were and how those borders are now being broken down.

The agile approach of combining development and testing, under the name "combined engineering" (first used in the Bing team), is also spreading. At Bing, the task of creating programmatic tests was moved onto developers, instead of dedicated testers. QA still exists and is still important, but it performs end-user style "real world" testing, not programmatic automated testing. This approach has been successful for Bing, improving the team's ability to ship changes without harming overall software quality.
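In combined engineering, the programmatic tests are written by the developer alongside the code rather than handed to a separate tester. A minimal sketch using Python's standard `unittest` module; `parse_version` is hypothetical product code chosen only to have something to test.

```python
import unittest


def parse_version(text: str) -> tuple[int, ...]:
    """Hypothetical product code: turn "12.0.30501" into (12, 0, 30501)."""
    return tuple(int(part) for part in text.strip().split("."))


class ParseVersionTests(unittest.TestCase):
    # Written by the same developer, in the same change, as parse_version.
    def test_orders_numerically(self):
        # Tuples compare numerically, so 9 sorts before 10, unlike plain strings.
        self.assertLess(parse_version("12.0.9"), parse_version("12.0.10"))

    def test_ignores_surrounding_whitespace(self):
        self.assertEqual(parse_version(" 14.0 "), (14, 0))
```

Tests like these can run automatically on every change, leaving QA free to concentrate on the end-user, "real world" testing described above.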

However, there are costs to this setup. The recent layoffs have been poorly communicated both within Microsoft and beyond, but one victim group appears to have been the dedicated programmatic testers in the Operating Systems Group (OSG), as OSG is following Bing's lead and moving to a combined engineering approach. Prior to these cuts, Testing/QA staff in some parts of the company outnumbered developers by about two to one. Afterward, the ratio was closer to one to one. As a precursor to these layoffs and the shifting roles of development and testing, the OSG renamed its test team to "Quality."
