Lucene - Overview

来源:互联网 发布:php九九乘法表代码视频 编辑:程序博客网 时间:2024/06/05 18:24

Lucene is simple yet powerful java based search library. It can be used in any application to add search capability to it. Lucene is open-source project. It is scalable and high-performance library used to index and search virtually any kind of text. Lucene library provides the core operations which are required by any search application. Indexing and Searching.

How Search Application works?

Any search application does the few or all of the following operations.

Apart from these basic operations, search application can also provide administration user interface providing administrators of the application to control the level of search based on the user profiles. Analytics of search result is another important and advanced aspect of any search application.

Lucene's role in search application

Lucene plays role in steps 2 to step 7 mentioned above and provides classes to do the required operations. In nutshell, lucene works as a heart of any search application and provides the vital operations pertaining to indexing and searching. Acquiring contents and displaying the results is left for the application part to handle. Let's start with first simple search application using lucene search library in next chapter.

Lucene - Environment Setup

Environment Setup

This tutorial will guide you on how to prepare a development environment to start your work with Spring Framework. This tutorial will also teach you how to setup JDK, Tomcat and Eclipse on your machine before you setup Spring Framework:

Step 1 - Setup Java Development Kit (JDK):

You can download the latest version of SDK from Oracle's Java site: Java SE Downloads. You will find instructions for installing JDK in downloaded files, follow the given instructions to install and configure the setup. Finally set PATH and JAVA_HOME environment variables to refer to the directory that contains java and javac, typically java_install_dir/bin and java_install_dir respectively.

If you are running Windows and installed the JDK in C:\jdk1.6.0_15, you would have to put the following line in your C:\autoexec.bat file.

set PATH=C:\jdk1.6.0_15\bin;%PATH%set JAVA_HOME=C:\jdk1.6.0_15

Alternatively, on Windows NT/2000/XP, you could also right-click on My Computer, select Properties, then Advanced, then Environment Variables. Then, you would update the PATH value and press the OK button.

On Unix (Solaris, Linux, etc.), if the SDK is installed in /usr/local/jdk1.6.0_15 and you use the C shell, you would put the following into your .cshrc file.

setenv PATH /usr/local/jdk1.6.0_15/bin:$PATHsetenv JAVA_HOME /usr/local/jdk1.6.0_15

Alternatively, if you use an Integrated Development Environment (IDE) like Borland JBuilder, Eclipse, IntelliJ IDEA, or Sun ONE Studio, compile and run a simple program to confirm that the IDE knows where you installed Java, otherwise do proper setup as given document of the IDE.

Step 2 - Setup Eclipse IDE

All the examples in this tutorial have been written using Eclipse IDE. So I would suggest you should have latest version of Eclipse installed on your machine.

To install Eclipse IDE, download the latest Eclipse binaries from http://www.eclipse.org/downloads/. Once you downloaded the installation, unpack the binary distribution into a convenient location. For example in C:\eclipse on windows, or /usr/local/eclipse on Linux/Unix and finally set PATH variable appropriately.

Eclipse can be started by executing the following commands on windows machine, or you can simply double click on eclipse.exe

%C:\eclipse\eclipse.exe

Eclipse can be started by executing the following commands on Unix (Solaris, Linux, etc.) machine:

$/usr/local/eclipse/eclipse

After a successful startup, if everything is fine then it should display following result:

Step 3 - Setup Lucene Framework Libraries

Now if everything is fine, then you can proceed to setup your Lucene framework. Following are the simple steps to download and install the framework on your machine.

- http://archive.apache.org/dist/lucene/java/3.6.2/

Make a choice whether you want to install Lucene on Windows, or Unix and then proceed to the next step to download .zip file for windows and .tz file for Unix.

Download the suitable version of Lucene framework binaries fromhttp://archive.apache.org/dist/lucene/java/.

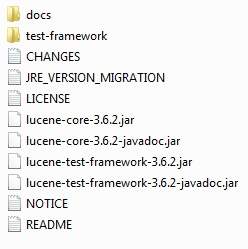

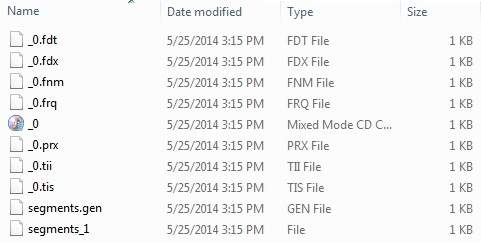

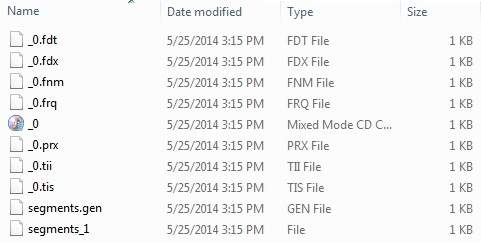

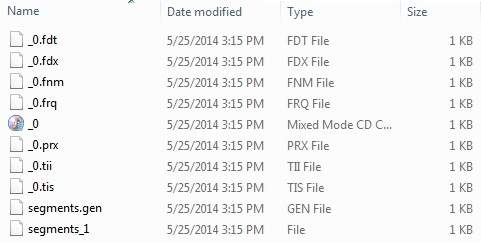

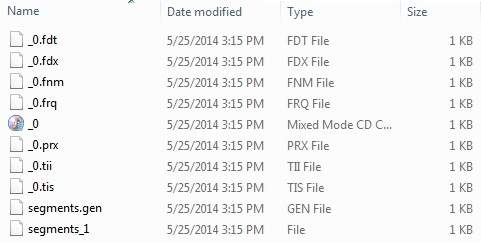

At the time of writing this tutorial, I downloaded lucene-3.6.2.zip on my Windows machine and when you unzip the downloaded file it will give you directory structure inside C:\lucene-3.6.2 as follows.

You will find all the Lucene libraries in the directory C:\lucene-3.6.2. Make sure you set your CLASSPATH variable on this directory properly otherwise you will face problem while running your application. If you are using Eclipse then it is not required to set CLASSPATH because all the setting will be done through Eclipse.

Once you are done with this last step, you are ready to proceed for your first Lucene Example which you will see in the next chapter.

Lucene - First Application

Let us start actual programming with Lucene Framework. Before you start writing your first example using Lucene framework, you have to make sure that you have setup your Lucene environment properly as explained in Lucene - Environment Setup tutorial. I also assume that you have a little bit working knowledge with Eclipse IDE.

So let us proceed to write a simple Search Application which will print number of search result found. We'll also see the list of indexes created during this process.

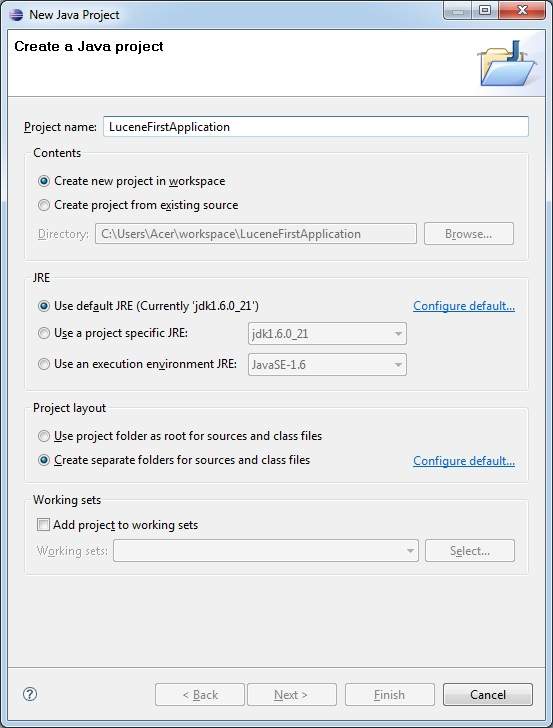

Step 1 - Create Java Project:

The first step is to create a simple Java Project using Eclipse IDE. Follow the option File -> New -> Project and finally select Java Project wizard from the wizard list. Now name your project asLuceneFirstApplication using the wizard window as follows:

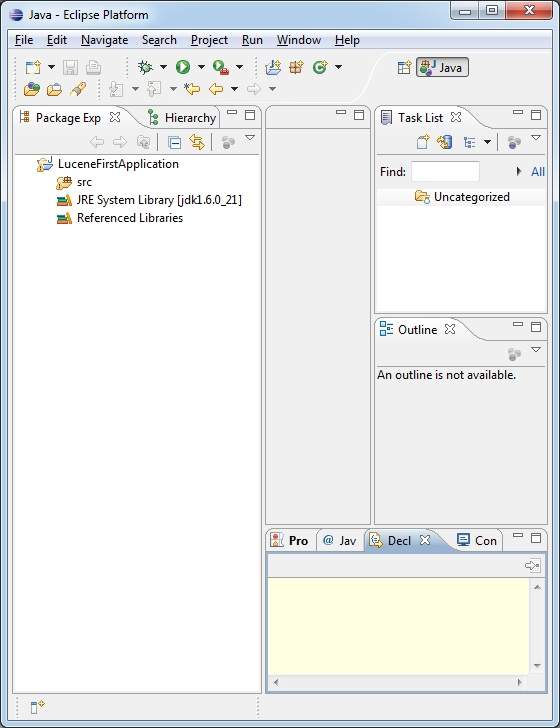

Once your project is created successfully, you will have following content in your Project Explorer:

Step 2 - Add Required Libraries:

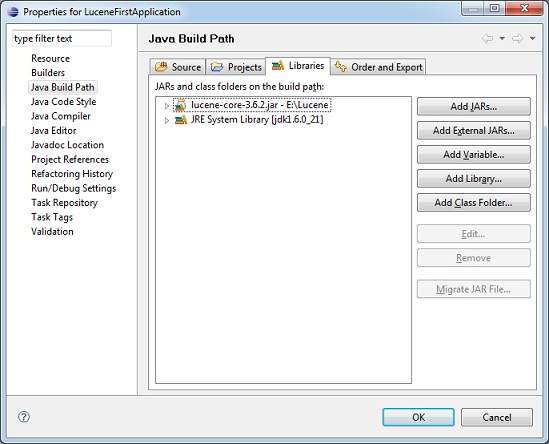

As a second step let us add Lucene core Framework library in our project. To do this, right click on your project name LuceneFirstApplication and then follow the following option available in context menu:Build Path -> Configure Build Path to display the Java Build Path window as follows:

Now use Add External JARs button available under Libraries tab to add the following core JAR from Lucene installation directory:

lucene-core-3.6.2

Step 3 - Create Source Files:

Now let us create actual source files under the LuceneFirstApplication project. First we need to create a package called com.tutorialspoint.lucene. To do this, right click on src in package explorer section and follow the option : New -> Package.

Next we will create LuceneTester.java and other java classes under the com.tutorialspoint.lucene package.

LuceneConstants.java

This class is used to provide various constants to be used across the sample application.

package com.tutorialspoint.lucene;public class LuceneConstants { public static final String CONTENTS="contents"; public static final String FILE_NAME="filename"; public static final String FILE_PATH="filepath"; public static final int MAX_SEARCH = 10;}

TextFileFilter.java

This class is used as a .txt file filter.

package com.tutorialspoint.lucene;import java.io.File;import java.io.FileFilter;public class TextFileFilter implements FileFilter { @Override public boolean accept(File pathname) { return pathname.getName().toLowerCase().endsWith(".txt"); }}

Indexer.java

This class is used to index the raw data so that we can make it searchable using lucene library.

package com.tutorialspoint.lucene;import java.io.File;import java.io.FileFilter;import java.io.FileReader;import java.io.IOException;import org.apache.lucene.analysis.standard.StandardAnalyzer;import org.apache.lucene.document.Document;import org.apache.lucene.document.Field;import org.apache.lucene.index.CorruptIndexException;import org.apache.lucene.index.IndexWriter;import org.apache.lucene.store.Directory;import org.apache.lucene.store.FSDirectory;import org.apache.lucene.util.Version;public class Indexer { private IndexWriter writer; public Indexer(String indexDirectoryPath) throws IOException{ //this directory will contain the indexes Directory indexDirectory = FSDirectory.open(new File(indexDirectoryPath)); //create the indexer writer = new IndexWriter(indexDirectory, new StandardAnalyzer(Version.LUCENE_36),true, IndexWriter.MaxFieldLength.UNLIMITED); } public void close() throws CorruptIndexException, IOException{ writer.close(); } private Document getDocument(File file) throws IOException{ Document document = new Document(); //index file contents Field contentField = new Field(LuceneConstants.CONTENTS, new FileReader(file)); //index file name Field fileNameField = new Field(LuceneConstants.FILE_NAME, file.getName(), Field.Store.YES,Field.Index.NOT_ANALYZED); //index file path Field filePathField = new Field(LuceneConstants.FILE_PATH, file.getCanonicalPath(), Field.Store.YES,Field.Index.NOT_ANALYZED); document.add(contentField); document.add(fileNameField); document.add(filePathField); return document; } private void indexFile(File file) throws IOException{ System.out.println("Indexing "+file.getCanonicalPath()); Document document = getDocument(file); writer.addDocument(document); } public int createIndex(String dataDirPath, FileFilter filter) throws IOException{ //get all files in the data directory File[] files = new File(dataDirPath).listFiles(); for (File file : files) { if(!file.isDirectory() && !file.isHidden() && file.exists() && file.canRead() && filter.accept(file) ){ indexFile(file); } } return writer.numDocs(); }}

Searcher.java

This class is used to search the indexes created by Indexer to search the requested contents.

package com.tutorialspoint.lucene;import java.io.File;import java.io.IOException;import org.apache.lucene.analysis.standard.StandardAnalyzer;import org.apache.lucene.document.Document;import org.apache.lucene.index.CorruptIndexException;import org.apache.lucene.queryParser.ParseException;import org.apache.lucene.queryParser.QueryParser;import org.apache.lucene.search.IndexSearcher;import org.apache.lucene.search.Query;import org.apache.lucene.search.ScoreDoc;import org.apache.lucene.search.TopDocs;import org.apache.lucene.store.Directory;import org.apache.lucene.store.FSDirectory;import org.apache.lucene.util.Version;public class Searcher { IndexSearcher indexSearcher; QueryParser queryParser; Query query; public Searcher(String indexDirectoryPath) throws IOException{ Directory indexDirectory = FSDirectory.open(new File(indexDirectoryPath)); indexSearcher = new IndexSearcher(indexDirectory); queryParser = new QueryParser(Version.LUCENE_36, LuceneConstants.CONTENTS, new StandardAnalyzer(Version.LUCENE_36)); } public TopDocs search( String searchQuery) throws IOException, ParseException{ query = queryParser.parse(searchQuery); return indexSearcher.search(query, LuceneConstants.MAX_SEARCH); } public Document getDocument(ScoreDoc scoreDoc) throws CorruptIndexException, IOException{ return indexSearcher.doc(scoreDoc.doc); } public void close() throws IOException{ indexSearcher.close(); }}

LuceneTester.java

This class is used to test the indexing and search capability of lucene library.

package com.tutorialspoint.lucene;import java.io.IOException;import org.apache.lucene.document.Document;import org.apache.lucene.queryParser.ParseException;import org.apache.lucene.search.ScoreDoc;import org.apache.lucene.search.TopDocs;public class LuceneTester { String indexDir = "E:\\Lucene\\Index"; String dataDir = "E:\\Lucene\\Data"; Indexer indexer; Searcher searcher; public static void main(String[] args) { LuceneTester tester; try { tester = new LuceneTester(); tester.createIndex(); tester.search("Mohan"); } catch (IOException e) { e.printStackTrace(); } catch (ParseException e) { e.printStackTrace(); } } private void createIndex() throws IOException{ indexer = new Indexer(indexDir); int numIndexed; long startTime = System.currentTimeMillis(); numIndexed = indexer.createIndex(dataDir, new TextFileFilter()); long endTime = System.currentTimeMillis(); indexer.close(); System.out.println(numIndexed+" File indexed, time taken: " +(endTime-startTime)+" ms"); } private void search(String searchQuery) throws IOException, ParseException{ searcher = new Searcher(indexDir); long startTime = System.currentTimeMillis(); TopDocs hits = searcher.search(searchQuery); long endTime = System.currentTimeMillis(); System.out.println(hits.totalHits + " documents found. Time :" + (endTime - startTime)); for(ScoreDoc scoreDoc : hits.scoreDocs) { Document doc = searcher.getDocument(scoreDoc); System.out.println("File: " + doc.get(LuceneConstants.FILE_PATH)); } searcher.close(); }}

Step 4 - Data & Index directory creation

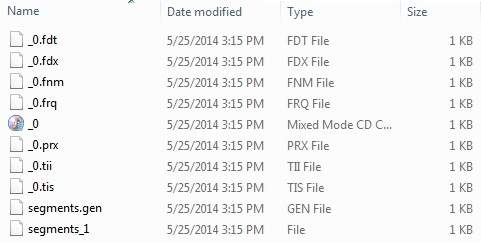

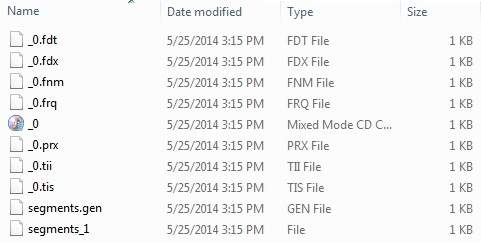

I've used 10 text files named from record1.txt to record10.txt containing simply names and other details of the students and put them in the directory E:\Lucene\Data. Test Data. An index directory path should be created as E:\Lucene\Index. After running this program, you can see the list of index files created in that folder.

Step 5 - Running the Program:

Once you are done with creating source, creating the raw data, data directory and index directory, you are ready for this step which is compiling and running your program. To do this, Keep LuceneTester.Java file tab active and use either Run option available in the Eclipse IDE or use Ctrl + F11 to compile and run your LuceneTester application. If everything is fine with your application, this will print the following message in Eclipse IDE's console:

Indexing E:\Lucene\Data\record1.txtIndexing E:\Lucene\Data\record10.txtIndexing E:\Lucene\Data\record2.txtIndexing E:\Lucene\Data\record3.txtIndexing E:\Lucene\Data\record4.txtIndexing E:\Lucene\Data\record5.txtIndexing E:\Lucene\Data\record6.txtIndexing E:\Lucene\Data\record7.txtIndexing E:\Lucene\Data\record8.txtIndexing E:\Lucene\Data\record9.txt10 File indexed, time taken: 109 ms1 documents found. Time :0File: E:\Lucene\Data\record4.txt

Once you've run the program successfully, you will have following content in your index directory:

Lucene - Indexing Classes

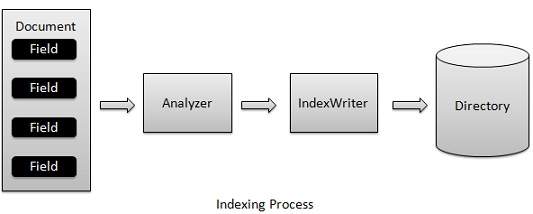

Indexing process is one of the core functionality provided by Lucene. Following diagram illustrates the indexing process and use of classes. IndexWriter is the most important and core component of the indexing process.

We add Document(s) containing Field(s) to IndexWriter which analyzes the Document(s) using theAnalyzer and then creates/open/edit indexes as required and store/update them in a Directory. IndexWriter is used to update or create indexes. It is not used to read indexes.

Indexing Classes:

Following is the list of commonly used classes during indexing process.

This class acts as a core component which creates/updates indexes during indexing process.2Directory

This class represents the storage location of the indexes.3Analyzer

Analyzer class is responsible to analyze a document and get the tokens/words from the text which is to be indexed. Without analysis done, IndexWriter can not create index.4Document

Document represents a virtual document with Fields where Field is object which can contain the physical document's contents, its meta data and so on. Analyzer can understand a Document only.5Field

Field is the lowest unit or the starting point of the indexing process. It represents the key value pair relationship where a key is used to identify the value to be indexed. Say a field used to represent contents of a document will have key as "contents" and the value may contain the part or all of the text or numeric content of the document. Lucene can index only text or numeric contents only.

Lucene - IndexWriter

Introduction

This class acts as a core component which creates/updates indexes during indexing process.

Class declaration

Following is the declaration for org.apache.lucene.index.IndexWriter class:

public class IndexWriter extends Object implements Closeable, TwoPhaseCommit

Field

Following are the fields for org.apache.lucene.index.IndexWriter class:

static int DEFAULT_MAX_BUFFERED_DELETE_TERMS -- Deprecated. use IndexWriterConfig.DEFAULT_MAX_BUFFERED_DELETE_TERMS instead.

static int DEFAULT_MAX_BUFFERED_DOCS -- Deprecated. Use IndexWriterConfig.DEFAULT_MAX_BUFFERED_DOCS instead.

static int DEFAULT_MAX_FIELD_LENGTH -- Deprecated. See IndexWriterConfig.

static double DEFAULT_RAM_BUFFER_SIZE_MB -- Deprecated. Use IndexWriterConfig.DEFAULT_RAM_BUFFER_SIZE_MB instead.

static int DEFAULT_TERM_INDEX_INTERVAL -- Deprecated. Use IndexWriterConfig.DEFAULT_TERM_INDEX_INTERVAL instead.

static int DISABLE_AUTO_FLUSH -- Deprecated. Use IndexWriterConfig.DISABLE_AUTO_FLUSH instead.

static int MAX_TERM_LENGTH -- Absolute hard maximum length for a term.

static String WRITE_LOCK_NAME -- Name of the write lock in the index.

static long WRITE_LOCK_TIMEOUT -- Deprecated. Use IndexWriterConfig.WRITE_LOCK_TIMEOUT instead.

Class constructors

Deprecated. use IndexWriter(Directory, IndexWriterConfig) instead.2IndexWriter(Directory d, Analyzer a, boolean create, IndexWriter.MaxFieldLength mfl)

Deprecated. use IndexWriter(Directory, IndexWriterConfig) instead.3IndexWriter(Directory d, Analyzer a, IndexDeletionPolicy deletionPolicy, IndexWriter.MaxFieldLength mfl)

Deprecated. use IndexWriter(Directory, IndexWriterConfig) instead.4IndexWriter(Directory d, Analyzer a, IndexDeletionPolicy deletionPolicy, IndexWriter.MaxFieldLength mfl, IndexCommit commit)

Deprecated. use IndexWriter(Directory, IndexWriterConfig) instead.5IndexWriter(Directory d, Analyzer a, IndexWriter.MaxFieldLength mfl)

Deprecated. use IndexWriter(Directory, IndexWriterConfig) instead.6IndexWriter(Directory d, IndexWriterConfig conf)

Constructs a new IndexWriter per the settings given in conf.

Class methods

Adds a document to this index.2void addDocument(Document doc, Analyzer analyzer)

Adds a document to this index, using the provided analyzer instead of the value of getAnalyzer().3void addDocuments(Collection<Document> docs)

Atomically adds a block of documents with sequentially assigned document IDs, such that an external reader will see all or none of the documents.4void addDocuments(Collection<Document> docs, Analyzer analyzer)

Atomically adds a block of documents, analyzed using the provided analyzer, with sequentially assigned document IDs, such that an external reader will see all or none of the documents.5void addIndexes(Directory... dirs)

Adds all segments from an array of indexes into this index.6void addIndexes(IndexReader... readers)

Merges the provided indexes into this index.7void addIndexesNoOptimize(Directory... dirs)

Deprecated.use addIndexes(Directory...) instead8void close()

Commits all changes to an index and closes all associated files.9void close(boolean waitForMerges)

Closes the index with or without waiting for currently running merges to finish.10void commit()

Commits all pending changes (added & deleted documents, segment merges, added indexes, etc.) to the index, and syncs all referenced index files, such that a reader will see the changes and the index updates will survive an OS or machine crash or power loss.11void commit(Map<String,String> commitUserData)

Commits all changes to the index, specifying a commitUserData Map (String -> String).12void deleteAll()

Delete all documents in the index.13void deleteDocuments(Query... queries)

Deletes the document(s) matching any of the provided queries.14void deleteDocuments(Query query)

Deletes the document(s) matching the provided query.15void deleteDocuments(Term... terms)

Deletes the document(s) containing any of the terms.16void deleteDocuments(Term term)

Deletes the document(s) containing term.17void deleteUnusedFiles()

Expert: remove any index files that are no longer used.18protected void doAfterFlush()

A hook for extending classes to execute operations after pending added and deleted documents have been flushed to the Directory but before the change is committed (new segments_N file written).19protected void doBeforeFlush()

A hook for extending classes to execute operations before pending added and deleted documents are flushed to the Directory.20protected void ensureOpen()

21protected void ensureOpen(boolean includePendingClose)

Used internally to throw an AlreadyClosedException if this IndexWriter has been closed.22void expungeDeletes()

Deprecated.23void expungeDeletes(boolean doWait)

Deprecated.24protected void flush(boolean triggerMerge, boolean applyAllDeletes)

Flush all in-memory buffered updates (adds and deletes) to the Directory.25protected void flush(boolean triggerMerge, boolean flushDocStores, boolean flushDeletes)

NOTE: flushDocStores is ignored now (hardwired to true); this method is only here for backwards compatibility26void forceMerge(int maxNumSegments)

Forces merge policy to merge segments until there's <= maxNumSegments.27void forceMerge(int maxNumSegments, boolean doWait)

Just like forceMerge(int), except you can specify whether the call should block until all merging completes.28void forceMergeDeletes()

Forces merging of all segments that have deleted documents.29void forceMergeDeletes(boolean doWait)

Just like forceMergeDeletes(), except you can specify whether the call should block until the operation completes.30Analyzer getAnalyzer()

Returns the analyzer used by this index.31IndexWriterConfig getConfig()

Returns the private IndexWriterConfig, cloned from the IndexWriterConfig passed to IndexWriter(Directory, IndexWriterConfig).32static PrintStream getDefaultInfoStream()

Returns the current default infoStream for newly instantiated IndexWriters.33static long getDefaultWriteLockTimeout()

Deprecated.use IndexWriterConfig.getDefaultWriteLockTimeout() instead34Directory getDirectory()

Returns the Directory used by this index.35PrintStream getInfoStream()

Returns the current infoStream in use by this writer.36int getMaxBufferedDeleteTerms()

Deprecated.use IndexWriterConfig.getMaxBufferedDeleteTerms() instead37int getMaxBufferedDocs()

Deprecated.use IndexWriterConfig.getMaxBufferedDocs() instead.38int getMaxFieldLength()

Deprecated.use LimitTokenCountAnalyzer to limit number of tokens.39int getMaxMergeDocs()

Deprecated.use LogMergePolicy.getMaxMergeDocs() directly.40IndexWriter.IndexReaderWarmer getMergedSegmentWarmer()

Deprecated.use IndexWriterConfig.getMergedSegmentWarmer() instead.41int getMergeFactor()

Deprecated.use LogMergePolicy.getMergeFactor() directly.42MergePolicy getMergePolicy()

Deprecated.use IndexWriterConfig.getMergePolicy() instead43MergeScheduler getMergeScheduler()

Deprecated.use IndexWriterConfig.getMergeScheduler() instead44Collection<SegmentInfo> getMergingSegments()

Expert: to be used by a MergePolicy to a void selecting merges for segments already being merged.45MergePolicy.OneMerge getNextMerge()

Expert: the MergeScheduler calls this method to retrieve the next merge requested by the MergePolicy46PayloadProcessorProvider getPayloadProcessorProvider()

Returns the PayloadProcessorProvider that is used during segment merges to process payloads.47double getRAMBufferSizeMB()

Deprecated.use IndexWriterConfig.getRAMBufferSizeMB() instead.48IndexReader getReader()

Deprecated.Please use IndexReader.open(IndexWriter,boolean) instead.49IndexReader getReader(int termInfosIndexDivisor)

Deprecated.Please use IndexReader.open(IndexWriter,boolean) instead. Furthermore, this method cannot guarantee the reader (and its sub-readers) will be opened with the termInfosIndexDivisor setting because some of them may have already been opened according to IndexWriterConfig.setReaderTermsIndexDivisor(int). You should set the requested termInfosIndexDivisor through IndexWriterConfig.setReaderTermsIndexDivisor(int) and use getReader().50int getReaderTermsIndexDivisor()

Deprecated.use IndexWriterConfig.getReaderTermsIndexDivisor() instead.51Similarity getSimilarity()

Deprecated.use IndexWriterConfig.getSimilarity() instead52int getTermIndexInterval()

Deprecated.use IndexWriterConfig.getTermIndexInterval()53boolean getUseCompoundFile()

Deprecated.use LogMergePolicy.getUseCompoundFile()54long getWriteLockTimeout()

Deprecated.use IndexWriterConfig.getWriteLockTimeout()55boolean hasDeletions()

56static boolean isLocked(Directory directory)

Returns true iff the index in the named directory is currently locked.57int maxDoc()

Returns total number of docs in this index, including docs not yet flushed (still in the RAM buffer), not counting deletions.58void maybeMerge()

Expert: asks the mergePolicy whether any merges are necessary now and if so, runs the requested merges and then iterate (test again if merges are needed) until no more merges are returned by the mergePolicy.59void merge(MergePolicy.OneMerge merge)

Merges the indicated segments, replacing them in the stack with a single segment.60void message(String message)

Prints a message to the infoStream (if non-null), prefixed with the identifying information for this writer and the thread that's calling it.61int numDeletedDocs(SegmentInfo info)

Obtain the number of deleted docs for a pooled reader.62int numDocs()

Returns total number of docs in this index, including docs not yet flushed (still in the RAM buffer), and including deletions.63int numRamDocs()

Expert: Return the number of documents currently buffered in RAM.64void optimize()

Deprecated.65void optimize(boolean doWait)

Deprecated.66void optimize(int maxNumSegments)

Deprecated.67void prepareCommit()

Expert: prepare for commit.68void prepareCommit(Map<String,String> commitUserData)

Expert: prepare for commit, specifying commitUserData Map (String -> String).69long ramSizeInBytes()

Expert: Return the total size of all index files currently cached in memory.70void rollback()

Close the IndexWriter without committing any changes that have occurred since the last commit (or since it was opened, if commit hasn't been called).71String segString()

72String segString(Iterable<SegmentInfo> infos)

73String segString(SegmentInfo info)

74static void setDefaultInfoStream(PrintStream infoStream)

If non-null, this will be the default infoStream used by a newly instantiated IndexWriter.75static void setDefaultWriteLockTimeout(long writeLockTimeout)

Deprecated.use IndexWriterConfig.setDefaultWriteLockTimeout(long) instead76void setInfoStream(PrintStream infoStream)

If non-null, information about merges, deletes and a message when maxFieldLength is reached will be printed to this.77void setMaxBufferedDeleteTerms(int maxBufferedDeleteTerms)

Deprecated.use IndexWriterConfig.setMaxBufferedDeleteTerms(int) instead.78void setMaxBufferedDocs(int maxBufferedDocs)

Deprecated.use IndexWriterConfig.setMaxBufferedDocs(int) instead.79void setMaxFieldLength(int maxFieldLength)

Deprecated.use LimitTokenCountAnalyzer instead. Note that the behvaior slightly changed - the analyzer limits the number of tokens per token stream created, while this setting limits the total number of tokens to index. This only matters if you index many multi-valued fields though.80void setMaxMergeDocs(int maxMergeDocs)

Deprecated.use LogMergePolicy.setMaxMergeDocs(int) directly.81void setMergedSegmentWarmer(IndexWriter.IndexReaderWarmer warmer)

Deprecated.use IndexWriterConfig.setMergedSegmentWarmer( org.apache.lucene.index.IndexWriter.IndexReaderWarmer ) instead.82void setMergeFactor(int mergeFactor)

Deprecated.use LogMergePolicy.setMergeFactor(int) directly.83void setMergePolicy(MergePolicy mp)

Deprecated.use IndexWriterConfig.setMergePolicy(MergePolicy) instead.84void setMergeScheduler(MergeScheduler mergeScheduler)

Deprecated.use IndexWriterConfig.setMergeScheduler(MergeScheduler) instead85void setPayloadProcessorProvider(PayloadProcessorProvider pcp)

Sets the PayloadProcessorProvider to use when merging payloads.86void setRAMBufferSizeMB(double mb)

Deprecated.use IndexWriterConfig.setRAMBufferSizeMB(double) instead.87void setReaderTermsIndexDivisor(int divisor)

Deprecated.use IndexWriterConfig.setReaderTermsIndexDivisor(int) instead.88void setSimilarity(Similarity similarity)

Deprecated.use IndexWriterConfig.setSimilarity(Similarity) instead89void setTermIndexInterval(int interval)

Deprecated.use IndexWriterConfig.setTermIndexInterval(int)90void setUseCompoundFile(boolean value)

Deprecated.use LogMergePolicy.setUseCompoundFile(boolean).91void setWriteLockTimeout(long writeLockTimeout)

Deprecated.use IndexWriterConfig.setWriteLockTimeout(long) instead92static void unlock(Directory directory)

Forcibly unlocks the index in the named directory.93void updateDocument(Term term, Document doc)

Updates a document by first deleting the document(s) containing term and then adding the new document.94void updateDocument(Term term, Document doc, Analyzer analyzer)

Updates a document by first deleting the document(s) containing term and then adding the new document.95void updateDocuments(Term delTerm, Collection<Document> docs)

Atomically deletes documents matching the provided delTerm and adds a block of documents with sequentially assigned document IDs, such that an external reader will see all or none of the documents.96void updateDocuments(Term delTerm, Collection<Document> docs, Analyzer analyzer)

Atomically deletes documents matching the provided delTerm and adds a block of documents, analyzed using the provided analyzer, with sequentially assigned document IDs, such that an external reader will see all or none of the documents.97boolean verbose()

Returns true if verbosing is enabled (i.e., infoStream !98void waitForMerges()

Wait for any currently outstanding merges to finish.

Methods inherited

This class inherits methods from the following classes:

java.lang.Object

Lucene - Directory

Introduction

This class represents the storage location of the indexes and generally it is a list of files. These files are called index files. Index files are normally created once and then used for read operation or can be deleted.

Class declaration

Following is the declaration for org.apache.lucene.store.Directory class:

public abstract class Directory extends Object implements Closeable

Field

Following are the fields for org.apache.lucene.store.Directory class:

protected boolean isOpen

protected LockFactory lockFactory -- Holds the LockFactory instance (implements locking for this Directory instance).

Class constructors

Class methods

Attempt to clear (forcefully unlock and remove) the specified lock.2abstract void close()

Closes the store.3static void copy(Directory src, Directory dest, boolean closeDirSrc)

Deprecated. Should be replaced with calls to copy(Directory, String, String) for every file that needs copying. You can use the following code:

IndexFileNameFilter filter = IndexFileNameFilter.getFilter(); for (String file : src.listAll()) { if (filter.accept(null, file)) { src.copy(dest, file, file); } }4void copy(Directory to, String src, String dest)

Copies the file src to Directory to under the new file name dest.5abstract IndexOutput createOutput(String name)

Creates a new, empty file in the directory with the given name.6abstract void deleteFile(String name)

Removes an existing file in the directory.7protected void ensureOpen()

8abstract boolean fileExists(String name)

Returns true iff a file with the given name exists.9abstract long fileLength(String name)

Returns the length of a file in the directory.10abstract long fileModified(String name)

Deprecated.11LockFactory getLockFactory()

Get the LockFactory that this Directory instance is using for its locking implementation.12String getLockID()

Return a string identifier that uniquely differentiates this Directory instance from other Directory instances.13abstract String[] listAll()

Returns an array of strings, one for each file in the directory.14Lock makeLock(String name)

Construct a Lock.15abstract IndexInput openInput(String name)

Returns a stream reading an existing file.16IndexInput openInput(String name, int bufferSize)

Returns a stream reading an existing file, with the specified read buffer size.17void setLockFactory(LockFactory lockFactory)

Set the LockFactory that this Directory instance should use for its locking implementation.18void sync(Collection<String> names)

Ensure that any writes to these files are moved to stable storage.19void sync(String name)

Deprecated. use sync(Collection) instead. For easy migration you can change your code to call sync(Collections.singleton(name))20String toString()

21abstract void touchFile(String name)

Deprecated. Lucene never uses this API; it will be removed in 4.0.

Methods inherited

This class inherits methods from the following classes:

java.lang.Object

Lucene - Analyzer

Introduction

Analyzer class is responsible to analyze a document and get the tokens/words from the text which is to be indexed. Without analysis done, IndexWriter can not create index.

Class declaration

Following is the declaration for org.apache.lucene.analysis.Analyzer class:

public abstract class Analyzer extends Object implements Closeable

Class constructors

Class methods

Frees persistent resources used by this Analyzer2int getOffsetGap(Fieldable field)

Just like getPositionIncrementGap(java.lang.String), except for Token offsets instead.3int getPositionIncrementGap(String fieldName)

Invoked before indexing a Fieldable instance if terms have already been added to that field.4protected Object getPreviousTokenStream()

Used by Analyzers that implement reusableTokenStream to retrieve previously saved TokenStreams for re-use by the same thread.5TokenStream reusableTokenStream(String fieldName, Reader reader)

Creates a TokenStream that is allowed to be re-used from the previous time that the same thread called this method.6protected void setPreviousTokenStream(Object obj)

Used by Analyzers that implement reusableTokenStream to save a TokenStream for later re-use by the same thread.7abstract TokenStream tokenStream(String fieldName, Reader reader)

Creates a TokenStream which tokenizes all the text in the provided Reader.

Methods inherited

This class inherits methods from the following classes:

java.lang.Object

Lucene - Document

Introduction

Document represents a virtual document with Fields where Field is object which can contain the physical document's contents, its meta data and so on. Analyzer can understand a Document only.

Class declaration

Following is the declaration for org.apache.lucene.document.Document class:

public final class Document extends Object implements Serializable

Class constructors

Constructs a new document with no fields.

Class methods

Attempt to clear (forcefully unlock and remove) the specified lock.2void add(Fieldable field)

Adds a field to a document.3String get(String name)

Returns the string value of the field with the given name if any exist in this document, or null.4byte[] getBinaryValue(String name)

Returns an array of bytes for the first (or only) field that has the name specified as the method parameter.5byte[][] getBinaryValues(String name)

Returns an array of byte arrays for of the fields that have the name specified as the method parameter.6float getBoost()

Returns, at indexing time, the boost factor as set by setBoost(float).7Field getField(String name)

Deprecated. Use getFieldable(java.lang.String) instead and cast depending on data type.8Fieldable getFieldable(String name)

Returns a field with the given name if any exist in this document, or null.9Fieldable[] getFieldables(String name)

Returns an array of Fieldables with the given name.10List<Fieldable> getFields()

Returns a List of all the fields in a document.11Field[] getFields(String name)

Deprecated. Use getFieldable(java.lang.String) instead and cast depending on data type.12String[] getValues(String name)

Returns an array of values of the field specified as the method parameter.13void removeField(String name)

Removes field with the specified name from the document.14void removeFields(String name)

Removes all fields with the given name from the document.15void setBoost(float boost)

Sets a boost factor for hits on any field of this document.16String toString()

Prints the fields of a document for human consumption.

Methods inherited

This class inherits methods from the following classes:

java.lang.Object

Lucene - Field

Introduction

Field is the lowest unit or the starting point of the indexing process. It represents the key value pair relationship where a key is used to identify the value to be indexed. Say a field used to represent contents of a document will have key as "contents" and the value may contain the part or all of the text or numeric content of the document.

Lucene can index only text or numeric contents only.This class represents the storage location of the indexes and generally it is a list of files. These files are called index files. Index files are normally created once and then used for read operation or can be deleted.

Class declaration

Following is the declaration for org.apache.lucene.document.Field class:

public final class Field extends AbstractField implements Fieldable, Serializable

Class constructors

Create a field by specifying its name, value and how it will be saved in the index.2Field(String name, byte[] value)

Create a stored field with binary value.3Field(String name, byte[] value, Field.Store store)

Deprecated.4Field(String name, byte[] value, int offset, int length)

Create a stored field with binary value.5Field(String name, byte[] value, int offset, int length, Field.Store store)

Deprecated.6Field(String name, Reader reader)

Create a tokenized and indexed field that is not stored.7Field(String name, Reader reader, Field.TermVector termVector)

Create a tokenized and indexed field that is not stored, optionally with storing term vectors.8Field(String name, String value, Field.Store store, Field.Index index)

Create a field by specifying its name, value and how it will be saved in the index.9Field(String name, String value, Field.Store store, Field.Index index, Field.TermVector termVector)

Create a field by specifying its name, value and how it will be saved in the index.10Field(String name, TokenStream tokenStream)

Create a tokenized and indexed field that is not stored.11Field(String name, TokenStream tokenStream, Field.TermVector termVector)

Create a tokenized and indexed field that is not stored, optionally with storing term vectors.

Class methods

Attempt to clear (forcefully unlock and remove) the specified lock.2Reader readerValue()

The value of the field as a Reader, or null.3void setTokenStream(TokenStream tokenStream)

Expert: sets the token stream to be used for indexing and causes isIndexed() and isTokenized() to return true.4void setValue(byte[] value)

Expert: change the value of this field.5void setValue(byte[] value, int offset, int length)

Expert: change the value of this field.6void setValue(Reader value)

Expert: change the value of this field.7void setValue(String value)

Expert: change the value of this field.8String stringValue()

The value of the field as a String, or null.9TokenStream tokenStreamValue()

The TokesStream for this field to be used when indexing, or null.

Methods inherited

This class inherits methods from the following classes:

org.apache.lucene.document.AbstractField

java.lang.Object

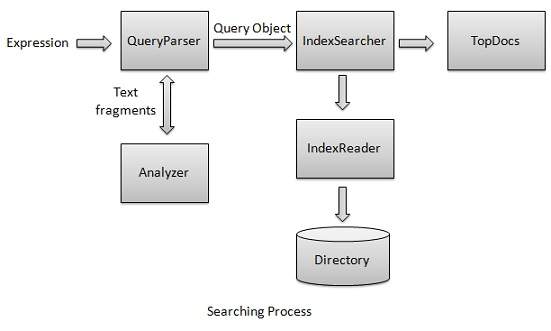

Lucene - Searching Classes

Searching process is again one of the core functionality provided by Lucene. It's flow is similar to that of indexing process. Basic search of lucene can be made using following classes which can also be termed as foundation classes for all search related operations.

Searching Classes:

Following is the list of commonly used classes during searching process.

This class act as a core component which reads/searches indexes created after indexing process. It takes directory instance pointing to the location containing the indexes.2Term

This class is the lowest unit of searching. It is similar to Field in indexing process.3Query

Query is an abstract class and contains various utility methods and is the parent of all types of queries that lucene uses during search process.4TermQuery

TermQuery is the most commonly used query object and is the foundation of many complex queries that lucene can make use of.5TopDocs

TopDocs points to the top N search results which matches the search criteria. It is simple container of pointers to point to documents which are output of search result.

Lucene - IndexSearcher

Introduction

This class acts as a core component which reads/searches indexes during searching process.

Class declaration

Following is the declaration for org.apache.lucene.search.IndexSearcher class:

public class IndexSearcher extends Searcher

Field

Following are the fields for org.apache.lucene.index.IndexWriter class:

protected int[] docStarts

protected IndexReader[] subReaders

protected IndexSearcher[] subSearchers

Class constructors

Deprecated. Use IndexSearcher(IndexReader) instead.2IndexSearcher(Directory path, boolean readOnly)

Deprecated. Use IndexSearcher(IndexReader) instead.3IndexSearcher(IndexReader r)

Creates a searcher searching the provided index.4IndexSearcher(IndexReader r, ExecutorService executor)

Runs searches for each segment separately, using the provided ExecutorService.5IndexSearcher(IndexReader reader, IndexReader[] subReaders, int[] docStarts)

Expert: directly specify the reader, subReaders and their docID starts.6IndexSearcher(IndexReader reader, IndexReader[] subReaders, int[] docStarts, ExecutorService executor)

Expert: directly specify the reader, subReaders and their docID starts, and an ExecutorService.

Class methods

Note that the underlying IndexReader is not closed, if IndexSearcher was constructed with IndexSearcher(IndexReader r).2Weight createNormalizedWeight(Query query)

Creates a normalized weight for a top-level Query.3Document doc(int docID)

Returns the stored fields of document i.4Document doc(int docID, FieldSelector fieldSelector)

Get the Document at the nth position.5int docFreq(Term term)

Returns total docFreq for this term.6Explanation explain(Query query, int doc)

Returns an Explanation that describes how doc scored against query.7Explanation explain(Weight weight, int doc)

Expert: low-level implementation method Returns an Explanation that describes how doc scored against weight.8protected void gatherSubReaders(List allSubReaders, IndexReader r)

9IndexReader getIndexReader()

Return the IndexReader this searches.10Similarity getSimilarity()

Expert: Return the Similarity implementation used by this Searcher.11IndexReader[] getSubReaders()

Returns the atomic subReaders used by this searcher.12int maxDoc()

Expert: Returns one greater than the largest possible document number.13Query rewrite(Query original)

Expert: called to re-write queries into primitive queries.14void search(Query query, Collector results)

Lower-level search API.15void search(Query query, Filter filter, Collector results)

Lower-level search API.16TopDocs search(Query query, Filter filter, int n)

Finds the top n hits for query, applying filter if non-null.17TopFieldDocs search(Query query, Filter filter, int n, Sort sort)

Search implementation with arbitrary sorting.18TopDocs search(Query query, int n)

Finds the top n hits for query.19TopFieldDocs search(Query query, int n, Sort sort)

Search implementation with arbitrary sorting and no filter.20void search(Weight weight, Filter filter, Collector collector)

Lower-level search API.21TopDocs search(Weight weight, Filter filter, int nDocs)

Expert: Low-level search implementation.22TopFieldDocs search(Weight weight, Filter filter, int nDocs, Sort sort)

Expert: Low-level search implementation with arbitrary sorting.23protected TopFieldDocs search(Weight weight, Filter filter, int nDocs, Sort sort, boolean fillFields)

Just like search(Weight, Filter, int, Sort), but you choose whether or not the fields in the returned FieldDoc instances should be set by specifying fillFields.24protected TopDocs search(Weight weight, Filter filter, ScoreDoc after, int nDocs)

Expert: Low-level search implementation.25TopDocs searchAfter(ScoreDoc after, Query query, Filter filter, int n)

Finds the top n hits for query, applying filter if non-null, where all results are after a previous result (after).26TopDocs searchAfter(ScoreDoc after, Query query, int n)

Finds the top n hits for query where all results are after a previous result (after).27void setDefaultFieldSortScoring(boolean doTrackScores, boolean doMaxScore)

By default, no scores are computed when sorting by field (using search(Query,Filter,int,Sort)).28void setSimilarity(Similarity similarity)

Expert: Set the Similarity implementation used by this Searcher.29String toString()

Methods inherited

This class inherits methods from the following classes:

org.apache.lucene.search.Searcher

java.lang.Object

Lucene - Term

Introduction

This class is the lowest unit of searching. It is similar to Field in indexing process.

Class declaration

Following is the declaration for org.apache.lucene.index.Term class:

public final class Term extends Object implements Comparable, Serializable

Class constructors

Constructs a Term with the given field and empty text.2Term(String fld, String txt)

Constructs a Term with the given field and text.

Class methods

Adds a document to this index.2int compareTo(Term other)

Compares two terms, returning a negative integer if this term belongs before the argument, zero if this term is equal to the argument, and a positive integer if this term belongs after the argument.3Term createTerm(String text)

Optimized construction of new Terms by reusing same field as this Term - avoids field.intern() overhead4boolean equals(Object obj)

5String field()

Returns the field of this term, an interned string.6int hashCode()

7String text()

Returns the text of this term.8String toString()

Methods inherited

This class inherits methods from the following classes:

java.lang.Object

Lucene - Query

Introduction

Query is an abstract class and contains various utility methods and is the parent of all types of queries that lucene uses during search process.

Class declaration

Following is the declaration for org.apache.lucene.search.Query class:

public abstract class Query extends Object implements Serializable, Cloneable

Class constructors

Class methods

Returns a clone of this query.2Query combine(Query[] queries)

Expert: called when re-writing queries under MultiSearcher.3Weight createWeight(Searcher searcher)

Expert: Constructs an appropriate Weight implementation for this query.4boolean equals(Object obj)

5void extractTerms(Set<Term> terms)

Expert: adds all terms occurring in this query to the terms set.6float getBoost()

Gets the boost for this clause.7Similarity getSimilarity(Searcher searcher)

Deprecated. Instead of using "runtime" subclassing/delegation, subclass the Weight instead.8int hashCode()

9static Query mergeBooleanQueries(BooleanQuery... queries)

Expert: merges the clauses of a set of BooleanQuery's into a single BooleanQuery.10Query rewrite(IndexReader reader)

Expert: called to re-write queries into primitive queries.11void setBoost(float b)

Sets the boost for this query clause to b.12String toString()

Prints a query to a string.13abstract String toString(String field)

Prints a query to a string, with field assumed to be the default field and omitted.14Weight weight(Searcher searcher)

Deprecated. never ever use this method in Weight implementations. Subclasses of Query should use createWeight(org.apache.lucene.search.Searcher), instead.

Methods inherited

This class inherits methods from the following classes:

java.lang.Object

Lucene - TermQuery

Introduction

TermQuery is the most commonly used query object and is the foundation of many complex queries that lucene can make use of.

Class declaration

Following is the declaration for org.apache.lucene.search.TermQuery class:

public class TermQuery extends Query

Class constructors

Constructs a query for the term t.

Class methods

Adds a document to this index.2Weight createWeight(Searcher searcher)

Expert: Constructs an appropriate Weight implementation for this query.3boolean equals(Object o)

Returns true iff o is equal to this.4void extractTerms(Set<Term> terms)

Expert: adds all terms occurring in this query to the terms set.5Term getTerm()

Returns the term of this query.6int hashCode()

Returns a hash code value for this object.7String toString(String field)

Prints a user-readable version of this query.

Methods inherited

This class inherits methods from the following classes:

org.apache.lucene.search.Query

java.lang.Object

Lucene - TopDocs

Introduction

TopDocs points to the top N search results which matches the search criteria. It is simple container of pointers to point to documents which are output of search result.

Class declaration

Following is the declaration for org.apache.lucene.search.TopDocs class:

public class TopDocs extends Object implements Serializable

Field

Following are the fields for org.apache.lucene.search.TopDocs class:

ScoreDoc[] scoreDocs -- The top hits for the query.

int totalHits -- The total number of hits for the query.

Class constructors

Class methods

Returns the maximum score value encountered.2static TopDocs merge(Sort sort, int topN, TopDocs[] shardHits)

Returns a new TopDocs, containing topN results across the provided TopDocs, sorting by the specified Sort.3void setMaxScore(float maxScore)

Sets the maximum score value encountered.

Methods inherited

This class inherits methods from the following classes:

java.lang.Object

Lucene - Indexing Process

Indexing process is one of the core functionality provided by Lucene. Following diagram illustrates the indexing process and use of classes. IndexWriter is the most important and core component of the indexing process.

We add Document(s) containing Field(s) to IndexWriter which analyzes the Document(s) using theAnalyzer and then creates/open/edit indexes as required and store/update them in a Directory. IndexWriter is used to update or create indexes. It is not used to read indexes.

Now we'll show you a step by step process to get a kick start in understanding of indexing process using a basic example.

Create a document

Create a method to get a lucene document from a text file.

Create various types of fields which are key value pairs containing keys as names and values as contents to be indexed.

Set field to be analyzed or not. In our case, only contents is to be analyzed as it can contain data such as a, am, are, an etc. which are not required in search operations.

Add the newly created fields to the document object and return it to the caller method.

private Document getDocument(File file) throws IOException{ Document document = new Document(); //index file contents Field contentField = new Field(LuceneConstants.CONTENTS, new FileReader(file)); //index file name Field fileNameField = new Field(LuceneConstants.FILE_NAME, file.getName(), Field.Store.YES,Field.Index.NOT_ANALYZED); //index file path Field filePathField = new Field(LuceneConstants.FILE_PATH, file.getCanonicalPath(), Field.Store.YES,Field.Index.NOT_ANALYZED); document.add(contentField); document.add(fileNameField); document.add(filePathField); return document;}

Create a IndexWriter

IndexWriter class acts as a core component which creates/updates indexes during indexing process.

Create object of IndexWriter.

Create a lucene directory which should point to location where indexes are to be stored.

Initialize the IndexWriter object created with the index directory, a standard analyzer having version information and other required/optional parameters.

private IndexWriter writer;public Indexer(String indexDirectoryPath) throws IOException{ //this directory will contain the indexes Directory indexDirectory = FSDirectory.open(new File(indexDirectoryPath)); //create the indexer writer = new IndexWriter(indexDirectory, new StandardAnalyzer(Version.LUCENE_36),true, IndexWriter.MaxFieldLength.UNLIMITED);}

Start Indexing process

private void indexFile(File file) throws IOException{ System.out.println("Indexing "+file.getCanonicalPath()); Document document = getDocument(file); writer.addDocument(document);}

Example Application

Let us create a test Lucene application to test indexing process.

LuceneConstants.java

This class is used to provide various constants to be used across the sample application.

package com.tutorialspoint.lucene;public class LuceneConstants { public static final String CONTENTS="contents"; public static final String FILE_NAME="filename"; public static final String FILE_PATH="filepath"; public static final int MAX_SEARCH = 10;}

TextFileFilter.java

This class is used as a .txt file filter.

package com.tutorialspoint.lucene;import java.io.File;import java.io.FileFilter;public class TextFileFilter implements FileFilter { @Override public boolean accept(File pathname) { return pathname.getName().toLowerCase().endsWith(".txt"); }}

Indexer.java

This class is used to index the raw data so that we can make it searchable using lucene library.

package com.tutorialspoint.lucene;import java.io.File;import java.io.FileFilter;import java.io.FileReader;import java.io.IOException;import org.apache.lucene.analysis.standard.StandardAnalyzer;import org.apache.lucene.document.Document;import org.apache.lucene.document.Field;import org.apache.lucene.index.CorruptIndexException;import org.apache.lucene.index.IndexWriter;import org.apache.lucene.store.Directory;import org.apache.lucene.store.FSDirectory;import org.apache.lucene.util.Version;public class Indexer { private IndexWriter writer; public Indexer(String indexDirectoryPath) throws IOException{ //this directory will contain the indexes Directory indexDirectory = FSDirectory.open(new File(indexDirectoryPath)); //create the indexer writer = new IndexWriter(indexDirectory, new StandardAnalyzer(Version.LUCENE_36),true, IndexWriter.MaxFieldLength.UNLIMITED); } public void close() throws CorruptIndexException, IOException{ writer.close(); } private Document getDocument(File file) throws IOException{ Document document = new Document(); //index file contents Field contentField = new Field(LuceneConstants.CONTENTS, new FileReader(file)); //index file name Field fileNameField = new Field(LuceneConstants.FILE_NAME, file.getName(), Field.Store.YES,Field.Index.NOT_ANALYZED); //index file path Field filePathField = new Field(LuceneConstants.FILE_PATH, file.getCanonicalPath(), Field.Store.YES,Field.Index.NOT_ANALYZED); document.add(contentField); document.add(fileNameField); document.add(filePathField); return document; } private void indexFile(File file) throws IOException{ System.out.println("Indexing "+file.getCanonicalPath()); Document document = getDocument(file); writer.addDocument(document); } public int createIndex(String dataDirPath, FileFilter filter) throws IOException{ //get all files in the data directory File[] files = new File(dataDirPath).listFiles(); for (File file : files) { if(!file.isDirectory() && !file.isHidden() && file.exists() && file.canRead() && filter.accept(file) ){ indexFile(file); } } return writer.numDocs(); }}

LuceneTester.java

This class is used to test the indexing capability of lucene library.

package com.tutorialspoint.lucene;import java.io.IOException;public class LuceneTester { String indexDir = "E:\\Lucene\\Index"; String dataDir = "E:\\Lucene\\Data"; Indexer indexer; public static void main(String[] args) { LuceneTester tester; try { tester = new LuceneTester(); tester.createIndex(); } catch (IOException e) { e.printStackTrace(); } } private void createIndex() throws IOException{ indexer = new Indexer(indexDir); int numIndexed; long startTime = System.currentTimeMillis(); numIndexed = indexer.createIndex(dataDir, new TextFileFilter()); long endTime = System.currentTimeMillis(); indexer.close(); System.out.println(numIndexed+" File indexed, time taken: " +(endTime-startTime)+" ms"); }}

Data & Index directory creation

I've used 10 text files named from record1.txt to record10.txt containing simply names and other details of the students and put them in the directory E:\Lucene\Data. Test Data. An index directory path should be created as E:\Lucene\Index. After running this program, you can see the list of index files created in that folder.

Running the Program:

Once you are done with creating source, creating the raw data, data directory and index directory, you are ready for this step which is compiling and running your program. To do this, Keep LuceneTester.Java file tab active and use either Run option available in the Eclipse IDE or use Ctrl + F11 to compile and run your LuceneTester application. If everything is fine with your application, this will print the following message in Eclipse IDE's console:

Indexing E:\Lucene\Data\record1.txtIndexing E:\Lucene\Data\record10.txtIndexing E:\Lucene\Data\record2.txtIndexing E:\Lucene\Data\record3.txtIndexing E:\Lucene\Data\record4.txtIndexing E:\Lucene\Data\record5.txtIndexing E:\Lucene\Data\record6.txtIndexing E:\Lucene\Data\record7.txtIndexing E:\Lucene\Data\record8.txtIndexing E:\Lucene\Data\record9.txt10 File indexed, time taken: 109 ms

Once you've run the program successfully, you will have following content in your index directory:

Lucene - Indexing Operations

In this chapter, we'll discuss the four major operations of indexing. These operations are useful at various times and are used throughout of a software search application.

Indexing Operations:

Following is the list of commonly used operations during indexing process.

This operation is used in the initial stage of indexing proces to create the indexes on the newly available contents.2Update Document

This operation is used to update indexes to reflect the changes in the updated contents. It is similar to recreating the index.3Delete Document

This operation is used to update indexes to exclude the documents which are not required to be indexed/searched.4Field Options

Field options specifies a way or controls the way in which contents of a field are to be made searchable.

Lucene - Add Document Operation

Add document is one of the core operation as part of indexing process.

We add Document(s) containing Field(s) to IndexWriter where IndexWriter is used to update or create indexes.

Now we'll show you a step by step process to get a kick start in understanding of add document using a basic example.

Add a document to an index.

Create a method to get a lucene document from a text file.

Create various types of fields which are key value pairs containing keys as names and values as contents to be indexed.

Set field to be analyzed or not. In our case, only contents is to be analyzed as it can contain data such as a, am, are, an etc. which are not required in search operations.

Add the newly created fields to the document object and return it to the caller method.

private Document getDocument(File file) throws IOException{ Document document = new Document(); //index file contents Field contentField = new Field(LuceneConstants.CONTENTS, new FileReader(file)); //index file name Field fileNameField = new Field(LuceneConstants.FILE_NAME, file.getName(), Field.Store.YES,Field.Index.NOT_ANALYZED); //index file path Field filePathField = new Field(LuceneConstants.FILE_PATH, file.getCanonicalPath(), Field.Store.YES,Field.Index.NOT_ANALYZED); document.add(contentField); document.add(fileNameField); document.add(filePathField); return document;}

Create a IndexWriter

IndexWriter class acts as a core component which creates/updates indexes during indexing process.

Create object of IndexWriter.

Create a lucene directory which should point to location where indexes are to be stored.

Initialize the IndexWricrter object created with the index directory, a standard analyzer having version information and other required/optional parameters.

private IndexWriter writer;public Indexer(String indexDirectoryPath) throws IOException{ //this directory will contain the indexes Directory indexDirectory = FSDirectory.open(new File(indexDirectoryPath)); //create the indexer writer = new IndexWriter(indexDirectory, new StandardAnalyzer(Version.LUCENE_36),true, IndexWriter.MaxFieldLength.UNLIMITED);}

Add document and start Indexing process

Following two are the ways to add the document.

addDocument(Document) - Adds the document using the default analyzer (specified when index writer is created.)

addDocument(Document,Analyzer) - Adds the document using the provided analyzer.

private void indexFile(File file) throws IOException{ System.out.println("Indexing "+file.getCanonicalPath()); Document document = getDocument(file); writer.addDocument(document);}

Example Application

Let us create a test Lucene application to test indexing process.

LuceneConstants.java

This class is used to provide various constants to be used across the sample application.

package com.tutorialspoint.lucene;public class LuceneConstants { public static final String CONTENTS="contents"; public static final String FILE_NAME="filename"; public static final String FILE_PATH="filepath"; public static final int MAX_SEARCH = 10;}

TextFileFilter.java

This class is used as a .txt file filter.

package com.tutorialspoint.lucene;import java.io.File;import java.io.FileFilter;public class TextFileFilter implements FileFilter { @Override public boolean accept(File pathname) { return pathname.getName().toLowerCase().endsWith(".txt"); }}

Indexer.java

This class is used to index the raw data so that we can make it searchable using lucene library.

package com.tutorialspoint.lucene;import java.io.File;import java.io.FileFilter;import java.io.FileReader;import java.io.IOException;import org.apache.lucene.analysis.standard.StandardAnalyzer;import org.apache.lucene.document.Document;import org.apache.lucene.document.Field;import org.apache.lucene.index.CorruptIndexException;import org.apache.lucene.index.IndexWriter;import org.apache.lucene.store.Directory;import org.apache.lucene.store.FSDirectory;import org.apache.lucene.util.Version;public class Indexer { private IndexWriter writer; public Indexer(String indexDirectoryPath) throws IOException{ //this directory will contain the indexes Directory indexDirectory = FSDirectory.open(new File(indexDirectoryPath)); //create the indexer writer = new IndexWriter(indexDirectory, new StandardAnalyzer(Version.LUCENE_36),true, IndexWriter.MaxFieldLength.UNLIMITED); } public void close() throws CorruptIndexException, IOException{ writer.close(); } private Document getDocument(File file) throws IOException{ Document document = new Document(); //index file contents Field contentField = new Field(LuceneConstants.CONTENTS, new FileReader(file)); //index file name Field fileNameField = new Field(LuceneConstants.FILE_NAME, file.getName(), Field.Store.YES,Field.Index.NOT_ANALYZED); //index file path Field filePathField = new Field(LuceneConstants.FILE_PATH, file.getCanonicalPath(), Field.Store.YES,Field.Index.NOT_ANALYZED); document.add(contentField); document.add(fileNameField); document.add(filePathField); return document; } private void indexFile(File file) throws IOException{ System.out.println("Indexing "+file.getCanonicalPath()); Document document = getDocument(file); writer.addDocument(document); } public int createIndex(String dataDirPath, FileFilter filter) throws IOException{ //get all files in the data directory File[] files = new File(dataDirPath).listFiles(); for (File file : files) { if(!file.isDirectory() && !file.isHidden() && file.exists() && file.canRead() && filter.accept(file) ){ indexFile(file); } } return writer.numDocs(); }}

LuceneTester.java

This class is used to test the indexing capability of lucene library.

package com.tutorialspoint.lucene;import java.io.IOException;public class LuceneTester { String indexDir = "E:\\Lucene\\Index"; String dataDir = "E:\\Lucene\\Data"; Indexer indexer; public static void main(String[] args) { LuceneTester tester; try { tester = new LuceneTester(); tester.createIndex(); } catch (IOException e) { e.printStackTrace(); } } private void createIndex() throws IOException{ indexer = new Indexer(indexDir); int numIndexed; long startTime = System.currentTimeMillis(); numIndexed = indexer.createIndex(dataDir, new TextFileFilter()); long endTime = System.currentTimeMillis(); indexer.close(); System.out.println(numIndexed+" File indexed, time taken: " +(endTime-startTime)+" ms"); }}

Data & Index directory creation

I've used 10 text files named from record1.txt to record10.txt containing simply names and other details of the students and put them in the directory E:\Lucene\Data. Test Data. An index directory path should be created as E:\Lucene\Index. After running this program, you can see the list of index files created in that folder.

Running the Program:

Once you are done with creating source, creating the raw data, data directory and index directory, you are ready for this step which is compiling and running your program. To do this, Keep LuceneTester.Java file tab active and use either Run option available in the Eclipse IDE or use Ctrl + F11 to compile and run your LuceneTester application. If everything is fine with your application, this will print the following message in Eclipse IDE's console:

Indexing E:\Lucene\Data\record1.txtIndexing E:\Lucene\Data\record10.txtIndexing E:\Lucene\Data\record2.txtIndexing E:\Lucene\Data\record3.txtIndexing E:\Lucene\Data\record4.txtIndexing E:\Lucene\Data\record5.txtIndexing E:\Lucene\Data\record6.txtIndexing E:\Lucene\Data\record7.txtIndexing E:\Lucene\Data\record8.txtIndexing E:\Lucene\Data\record9.txt10 File indexed, time taken: 109 ms

Once you've run the program successfully, you will have following content in your index directory:

Lucene - Update Document Operation

Update document is another important operation as part of indexing process.This operation is used when already indexed contents are updated and indexes become invalid. This operation is also known as re-indexing.

We update Document(s) containing Field(s) to IndexWriter where IndexWriter is used to update indexes.

Now we'll show you a step by step process to get a kick start in understanding of update document using a basic example.

Update a document to an index.

Create a method to update a lucene document from an updated text file.

private void updateDocument(File file) throws IOException{ Document document = new Document(); //update indexes for file contents writer.updateDocument( new Term(LuceneConstants.CONTENTS, new FileReader(file)),document); writer.close();}

Create a IndexWriter

IndexWriter class acts as a core component which creates/updates indexes during indexing process.

Create object of IndexWriter.

Create a lucene directory which should point to location where indexes are to be stored.

Initialize the IndexWricrter object created with the index directory, a standard analyzer having version information and other required/optional parameters.

private IndexWriter writer;public Indexer(String indexDirectoryPath) throws IOException{ //this directory will contain the indexes Directory indexDirectory = FSDirectory.open(new File(indexDirectoryPath)); //create the indexer writer = new IndexWriter(indexDirectory, new StandardAnalyzer(Version.LUCENE_36),true, IndexWriter.MaxFieldLength.UNLIMITED);}

Update document and start reindexing process

Following two are the ways to update the document.

updateDocument(Term, Document) - Delete the document containing the term and add the document using the default analyzer (specified when index writer is created.)

updateDocument(Term, Document,Analyzer) - Delete the document containing the term and add the document using the provided analyzer.

private void indexFile(File file) throws IOException{ System.out.println("Updating index for "+file.getCanonicalPath()); updateDocument(file); }

Example Application

Let us create a test Lucene application to test indexing process.

LuceneConstants.java

This class is used to provide various constants to be used across the sample application.

package com.tutorialspoint.lucene;public class LuceneConstants { public static final String CONTENTS="contents"; public static final String FILE_NAME="filename"; public static final String FILE_PATH="filepath"; public static final int MAX_SEARCH = 10;}

TextFileFilter.java

This class is used as a .txt file filter.

package com.tutorialspoint.lucene;import java.io.File;import java.io.FileFilter;public class TextFileFilter implements FileFilter { @Override public boolean accept(File pathname) { return pathname.getName().toLowerCase().endsWith(".txt"); }}

Indexer.java

This class is used to index the raw data so that we can make it searchable using lucene library.

package com.tutorialspoint.lucene;import java.io.File;import java.io.FileFilter;import java.io.FileReader;import java.io.IOException;import org.apache.lucene.analysis.standard.StandardAnalyzer;import org.apache.lucene.document.Document;import org.apache.lucene.document.Field;import org.apache.lucene.index.CorruptIndexException;import org.apache.lucene.index.IndexWriter;import org.apache.lucene.store.Directory;import org.apache.lucene.store.FSDirectory;import org.apache.lucene.util.Version;public class Indexer { private IndexWriter writer; public Indexer(String indexDirectoryPath) throws IOException{ //this directory will contain the indexes Directory indexDirectory = FSDirectory.open(new File(indexDirectoryPath)); //create the indexer writer = new IndexWriter(indexDirectory, new StandardAnalyzer(Version.LUCENE_36),true, IndexWriter.MaxFieldLength.UNLIMITED); } public void close() throws CorruptIndexException, IOException{ writer.close(); } private void updateDocument(File file) throws IOException{ Document document = new Document(); //update indexes for file contents writer.updateDocument( new Term(LuceneConstants.FILE_NAME, file.getName()),document); writer.close(); } private void indexFile(File file) throws IOException{ System.out.println("Updating index: "+file.getCanonicalPath()); updateDocument(file); } public int createIndex(String dataDirPath, FileFilter filter) throws IOException{ //get all files in the data directory File[] files = new File(dataDirPath).listFiles(); for (File file : files) { if(!file.isDirectory() && !file.isHidden() && file.exists() && file.canRead() && filter.accept(file) ){ indexFile(file); } } return writer.numDocs(); }}

LuceneTester.java

This class is used to test the indexing capability of lucene library.

package com.tutorialspoint.lucene;import java.io.IOException;public class LuceneTester { String indexDir = "E:\\Lucene\\Index"; String dataDir = "E:\\Lucene\\Data"; Indexer indexer; public static void main(String[] args) { LuceneTester tester; try { tester = new LuceneTester(); tester.createIndex(); } catch (IOException e) { e.printStackTrace(); } } private void createIndex() throws IOException{ indexer = new Indexer(indexDir); int numIndexed; long startTime = System.currentTimeMillis(); numIndexed = indexer.createIndex(dataDir, new TextFileFilter()); long endTime = System.currentTimeMillis(); indexer.close(); }}

Data & Index directory creation

I've used 10 text files named from record1.txt to record10.txt containing simply names and other details of the students and put them in the directory E:\Lucene\Data. Test Data. An index directory path should be created as E:\Lucene\Index. After running this program, you can see the list of index files created in that folder.

Running the Program:

Once you are done with creating source, creating the raw data, data directory and index directory, you are ready for this step which is compiling and running your program. To do this, Keep LuceneTester.Java file tab active and use either Run option available in the Eclipse IDE or use Ctrl + F11 to compile and run your LuceneTester application. If everything is fine with your application, this will print the following message in Eclipse IDE's console:

Updating index for E:\Lucene\Data\record1.txtUpdating index for E:\Lucene\Data\record10.txtUpdating index for E:\Lucene\Data\record2.txtUpdating index for E:\Lucene\Data\record3.txtUpdating index for E:\Lucene\Data\record4.txtUpdating index for E:\Lucene\Data\record5.txtUpdating index for E:\Lucene\Data\record6.txtUpdating index for E:\Lucene\Data\record7.txtUpdating index for E:\Lucene\Data\record8.txtUpdating index for E:\Lucene\Data\record9.txt10 File indexed, time taken: 109 ms

Once you've run the program successfully, you will have following content in your index directory:

Lucene - Delete Document Operation

Delete document is another important operation as part of indexing process.This operation is used when already indexed contents are updated and indexes become invalid or indexes become very large in size then in order to reduce the size and update the index, delete operations are carried out.

We delete Document(s) containing Field(s) to IndexWriter where IndexWriter is used to update indexes.

Now we'll show you a step by step process to get a kick start in understanding of delete document using a basic example.

Delete a document from an index.

Create a method to delete a lucene document of an obsolete text file.

private void deleteDocument(File file) throws IOException{ //delete indexes for a file writer.deleteDocument(new Term(LuceneConstants.FILE_NAME,file.getName())); writer.commit(); System.out.println("index contains deleted files: "+writer.hasDeletions()); System.out.println("index contains documents: "+writer.maxDoc()); System.out.println("index contains deleted documents: "+writer.numDoc());}

Create a IndexWriter

IndexWriter class acts as a core component which creates/updates indexes during indexing process.

Create object of IndexWriter.

Create a lucene directory which should point to location where indexes are to be stored.

Initialize the IndexWricrter object created with the index directory, a standard analyzer having version information and other required/optional parameters.

private IndexWriter writer;public Indexer(String indexDirectoryPath) throws IOException{ //this directory will contain the indexes Directory indexDirectory = FSDirectory.open(new File(indexDirectoryPath)); //create the indexer writer = new IndexWriter(indexDirectory, new StandardAnalyzer(Version.LUCENE_36),true, IndexWriter.MaxFieldLength.UNLIMITED);}

Delete document and start reindexing process

Following two are the ways to delete the document.

deleteDocuments(Term) - Delete all the documents containing the term.

deleteDocuments(Term[]) - Delete all the documents containing any of the terms in the array.

deleteDocuments(Query) - Delete all the documents matching the query.

deleteDocuments(Query[]) - Delete all the documents matching the query in the array.

deleteAll - Delete all the documents.

private void indexFile(File file) throws IOException{ System.out.println("Deleting index for "+file.getCanonicalPath()); deleteDocument(file); }

Example Application

Let us create a test Lucene application to test indexing process.

LuceneConstants.java

This class is used to provide various constants to be used across the sample application.

package com.tutorialspoint.lucene;public class LuceneConstants { public static final String CONTENTS="contents"; public static final String FILE_NAME="filename"; public static final String FILE_PATH="filepath"; public static final int MAX_SEARCH = 10;}

TextFileFilter.java

This class is used as a .txt file filter.

package com.tutorialspoint.lucene;import java.io.File;import java.io.FileFilter;public class TextFileFilter implements FileFilter { @Override public boolean accept(File pathname) { return pathname.getName().toLowerCase().endsWith(".txt"); }}

Indexer.java

This class is used to index the raw data so that we can make it searchable using lucene library.