openstack metadata


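The metadata server in OpenStack

When a virtual machine boots, information such as the hostname, password, public key, and network info has to be injected into it so that the tenant can manage the machine. OpenStack offers two ways to inject this information: guestfs-inject and metadata-server.

guestfs-inject is quite restricted, chiefly because not every image supports it, and the I release (Icehouse) has already removed it. metadata-server is more flexible. Both approaches, however, depend on the cloud-init component being installed inside the image. Even so, because AWS firmly backs the metadata-server approach, it has become the de facto standard for injecting information into virtual machines.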

cloud-init

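cloud-init is a package that customizes the virtual machines on your IaaS platform at boot time. It lets you reconfigure a virtual machine without reinstalling it; you only add the corresponding configuration items. Many EC2 images ship with cloud-init so that users can customize their own virtual machines. At boot it can set the locale, set the hostname, generate private keys, add the user's SSH public key to .ssh/authorized_keys in the virtual machine, set up ephemeral mount points, and so on.

cloud-init project page: https://launchpad.net/cloud-init

cloud-init documentation: http://cloudinit.readthedocs.org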

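The metadata service

"Metadata" literally means data about data, a concept that is not easy to grasp and that also turns up in many places outside OpenStack. In OpenStack, metadata is a mechanism that lets users set parameters for each instance.

For example, you may want to set a property on an instance, such as its hostname. One important use of metadata is setting the SSH public key of each instance. There are two ways to set the public key:

1. Inject the file into the image when the instance is created

2. After the instance has started, fetch the key through metadata and write it with a script

The second way is more flexible because it can inject a public key for a non-root user.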
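The second approach can be sketched in a few lines of Python. This is only an illustration of "fetch the key, then write it with a script", not what cloud-init actually does internally; the function names `merge_authorized_keys` and `install_key` are hypothetical, and the key string is assumed to have been fetched from the metadata service already.

```python
from pathlib import Path


def merge_authorized_keys(existing: str, new_key: str) -> str:
    """Return authorized_keys content that contains new_key exactly once."""
    key = new_key.strip()
    # Drop empty lines so repeated runs do not accumulate blank lines.
    lines = [line for line in existing.splitlines() if line.strip()]
    if key and key not in (line.strip() for line in lines):
        lines.append(key)
    return "\n".join(lines) + ("\n" if lines else "")


def install_key(home: Path, new_key: str) -> Path:
    """Write new_key into <home>/.ssh/authorized_keys (hypothetical helper).

    For a non-root user the caller would also need to chown the directory
    and file to that user; that step is omitted here.
    """
    ssh_dir = home / ".ssh"
    ssh_dir.mkdir(mode=0o700, exist_ok=True)
    path = ssh_dir / "authorized_keys"
    existing = path.read_text() if path.exists() else ""
    path.write_text(merge_authorized_keys(existing, new_key))
    path.chmod(0o600)
    return path
```

Keeping the merge step a pure function makes the script safe to run on every boot: a key that is already present is not appended a second time.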

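Amazon first introduced the concept of metadata and built the metadata service. The service is reached at 169.254.169.254, which is a link-local address rather than a public IP, and the request that a virtual machine usually sends through cloud-init is: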

http://169.254.169.254/latest/meta-data

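Later, many people built operating-system images customized for Amazon, such as Ubuntu, Fedora, and CentOS, and hard-coded the metadata API address into them. To stay compatible, OpenStack kept the address 169.254.169.254 and maps it to the real API through iptables NAT.

The metadata-server in Nova

The metadata-server is implemented inside the nova-api component. The metadata-related options in nova.conf are listed in the table of metadata configuration options (Table 4.2) later in this article.

The code itself lives under api/metadata.

Using it together with Neutron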

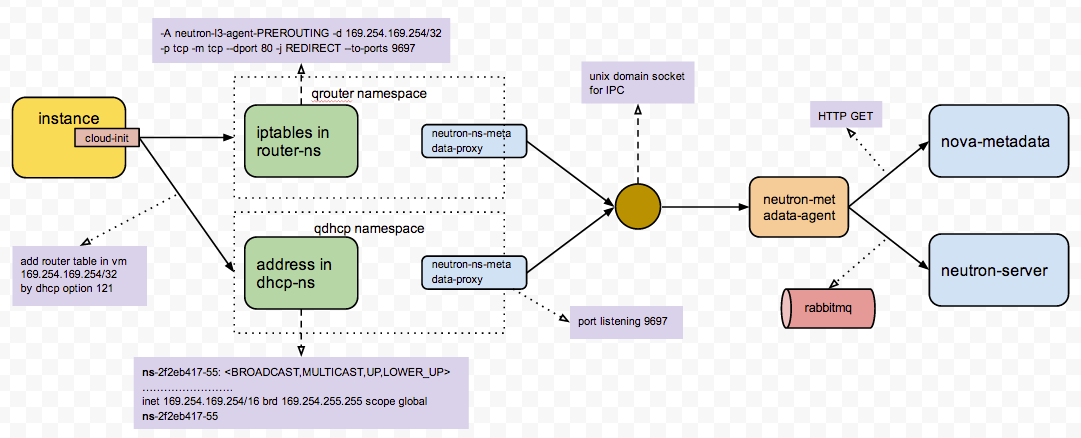

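Neutron is normally used to provide networking for virtual machines, and two Neutron services are involved in metadata: namespace-metadata-proxy and metadata-agent. A cloud-init request is received by the ns-metadata-proxy on the network node. ns-metadata-proxy and metadata-agent communicate over a Unix domain socket, which is how requests arriving at ns-metadata-proxy are handed to metadata-agent. Neutron's metadata-agent does not implement the metadata service itself; it forwards the cloud-init request to the metadata service in nova-api. The flow is shown in the figure above.

1. The virtual machine sends a request

At boot, the virtual machine uses cloud-init to request the metadata service at 169.254.169.254 (http://169.254.169.254/xxxxxx/meta-data/instance-id). The request can then be handled in one of two ways: through the router namespace, as described in the following steps, or through the DHCP namespace, as described at the end of this article.

2. namespace-metadata-proxy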

    ubuntu@ubuntu-test:~$ ip -4 address show dev eth0
    2: eth0: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc pfifo_fast state UP qlen 1000
        inet 10.1.1.2/24 brd 10.1.1.255 scope global eth0
    ubuntu@ubuntu-test:~$ ip route
    default via 10.1.1.1 dev eth0  metric 100
    10.1.1.0/24 dev eth0  proto kernel  scope link  src 10.1.1.2

First, look at the corresponding iptables rules:

    -A quantum-l3-agent-PREROUTING -d 169.254.169.254/32 -p tcp -m tcp --dport 80 -j REDIRECT --to-ports 9697
    -A quantum-l3-agent-INPUT -d 127.0.0.1/32 -p tcp -m tcp --dport 9697 -j ACCEPT

    root@network232:~# ip netns exec qrouter-b147a74b-39bb-4c7a-aed5-19cac4c2df13 ip addr show qr-f7ec0f5c-2e
    12: qr-f7ec0f5c-2e: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UNKNOWN
        link/ether fa:16:3e:81:9c:69 brd ff:ff:ff:ff:ff:ff
        inet 10.1.1.1/24 brd 10.1.1.255 scope global qr-f7ec0f5c-2e
        inet6 fe80::f816:3eff:fe81:9c69/64 scope link
           valid_lft forever preferred_lft forever

    root@network232:~# ip netns exec qrouter-b147a74b-39bb-4c7a-aed5-19cac4c2df13 netstat -nlpt | grep 9697
    tcp        0      0 0.0.0.0:9697            0.0.0.0:*               LISTEN      13249/python

    root@network232:~# ps -f --pid 13249 | fold -s -w 85
    UID        PID  PPID  C STIME TTY          TIME CMD
    root     13249     1  0 16:30 ?        00:00:00 python
    /usr/bin/quantum-ns-metadata-proxy
    --pid_file=/var/lib/quantum/external/pids/b147a74b-39bb-4c7a-aed5-19cac4c2df13.pid
    --router_id=b147a74b-39bb-4c7a-aed5-19cac4c2df13 --state_path=/var/lib/quantum
    --metadata_port=9697 --debug --verbose
    --log-file=quantum-ns-metadata-proxyb147a74b-39bb-4c7a-aed5-19cac4c2df13.log
    --log-dir=/var/log/quantum

    def _spawn_metadata_proxy(self, router_info):
        def callback(pid_file):
            proxy_cmd = ['quantum-ns-metadata-proxy',
                         '--pid_file=%s' % pid_file,
                         '--router_id=%s' % router_info.router_id,
                         '--state_path=%s' % self.conf.state_path,
                         '--metadata_port=%s' % self.conf.metadata_port]
            proxy_cmd.extend(config.get_log_args(
                cfg.CONF, 'quantum-ns-metadata-proxy-%s.log' %
                router_info.router_id))
            return proxy_cmd

        pm = external_process.ProcessManager(
            self.conf,
            router_info.router_id,
            self.root_helper,
            router_info.ns_name())
        pm.enable(callback)

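ns-metadata-proxy does two things with each request:

1. Add the X-Forwarded-For and X-Quantum-Router-ID headers to the request; they carry the virtual machine's fixed IP and the router's ID respectively

2. Proxy the request to the Unix domain socket (/var/lib/quantum/metadata_proxy)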
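The two steps above can be sketched with the Python standard library. This is a minimal illustration and not the quantum-ns-metadata-proxy source: the class `UnixHTTPConnection` and the functions `build_proxy_headers` and `proxy_request` are hypothetical names, and only the header names and the socket path come from the text above.

```python
import http.client
import socket


class UnixHTTPConnection(http.client.HTTPConnection):
    """HTTP connection that talks over a Unix domain socket instead of TCP."""

    def __init__(self, socket_path, timeout=10):
        # The host name is only used for the Host header; no TCP connect happens.
        super().__init__("localhost", timeout=timeout)
        self.socket_path = socket_path

    def connect(self):
        sock = socket.socket(socket.AF_UNIX, socket.SOCK_STREAM)
        sock.settimeout(self.timeout)
        sock.connect(self.socket_path)
        self.sock = sock


def build_proxy_headers(remote_address, router_id):
    """Step 1: headers that identify the VM (fixed IP) and its router."""
    return {
        "X-Forwarded-For": remote_address,
        "X-Quantum-Router-ID": router_id,
    }


def proxy_request(path, remote_address, router_id,
                  socket_path="/var/lib/quantum/metadata_proxy"):
    """Step 2: forward the GET request to the agent's Unix domain socket."""
    conn = UnixHTTPConnection(socket_path)
    try:
        conn.request("GET", path,
                     headers=build_proxy_headers(remote_address, router_id))
        response = conn.getresponse()
        return response.status, response.read()
    finally:
        conn.close()
```

The two headers are needed because the agent cannot otherwise tell instances apart: the same fixed IP may exist behind different routers, so the pair (router ID, fixed IP) is what identifies the caller.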

3. neutron metadata agent

metadata-agent receives the request and hands it to nova-api, which actually implements the metadata server.

    root@network232:~# netstat -lxp | grep metadata
    unix  2      [ ACC ]     STREAM     LISTENING     46859    21025/python        /var/lib/quantum/metadata_proxy

    root@network232:~# ps -f --pid 21025 | fold -s
    UID        PID  PPID  C STIME TTY          TIME CMD
    quantum  21025     1  0 16:31 ?        00:00:00 python
    /usr/bin/quantum-metadata-agent --config-file=/etc/quantum/quantum.conf
    --config-file=/etc/quantum/metadata_agent.ini
    --log-file=/var/log/quantum/metadata-agent.log

4. nova metadata service

The Neutron metadata agent finally forwards the request to the address of the metadata service (the controller) on port 8775. Nova's metadata service is started together with nova-api, which starts two services at once: the OpenStack metadata API and the EC2-compatible API. In other words, a metadata request from a virtual machine on a compute node ultimately reaches the nova-api-metadata service on the controller. What the virtual machine gets back from 169.254.169.254 is the result of the nova-api-metadata service querying nova-conductor; the result is returned to the metadata agent on the network node, which returns it to the metadata proxy, which in turn returns it to the virtual machine.

    root@controller231:~# netstat -nlpt | grep 8775
    tcp        0      0 0.0.0.0:8775            0.0.0.0:*               LISTEN      1714/python

    root@controller231:~# ps -f --pid 1714
    UID        PID  PPID  C STIME TTY          TIME CMD
    nova      1714     1  0 16:40 ?        00:00:01 /usr/bin/python /usr/bin/nova-api --config-file=/etc/nova/nova.conf

Using the metadata service

Note: the following commands are run inside the virtual machine, not on the host.

Basic usage

To retrieve a list of supported versions for the OpenStack metadata API, make a GET request to http://169.254.169.254/openstack:

    $ curl http://169.254.169.254/openstack
    2012-08-10
    2013-04-04
    2013-10-17
    latest

To list supported versions for the EC2-compatible metadata API, make a GET request to http://169.254.169.254:

    $ curl http://169.254.169.254
    1.0
    2007-01-19
    2007-03-01
    2007-08-29
    2007-10-10
    2007-12-15
    2008-02-01
    2008-09-01
    2009-04-04
    latest

If you write a consumer for one of these APIs, always attempt to access the most recent API version supported by your consumer first, then fall back to an earlier version if the most recent one is not available.
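The fallback rule can be sketched as follows. This is an illustrative helper, not part of any OpenStack client; the names `parse_versions` and `pick_version` are hypothetical, and the consumer's list of supported versions is an assumption chosen for the example.

```python
def parse_versions(listing: str) -> list:
    """Split the plain-text version listing returned by the service."""
    return [line.strip() for line in listing.splitlines() if line.strip()]


def pick_version(available, supported_newest_first):
    """Return the newest version the consumer supports that the server offers.

    supported_newest_first is the consumer's own list, ordered from most
    recent to oldest. Returns None when there is no common version.
    """
    offered = set(available)
    for version in supported_newest_first:
        if version in offered:
            return version
    return None


# Listing as returned by: curl http://169.254.169.254/openstack
listing = "2012-08-10\n2013-04-04\n2013-10-17\nlatest\n"

# Hypothetical consumer that knows three versions, one of them newer than
# anything this server offers.
supported = ["2015-10-15", "2013-10-17", "2012-08-10"]
chosen = pick_version(parse_versions(listing), supported)
```

Here `chosen` ends up as `2013-10-17`: the consumer tries its most recent version first, finds that the server does not list it, and falls back to the next one.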

Metadata from the OpenStack API is distributed in JSON format. To retrieve the metadata, make a GET request to http://169.254.169.254/openstack/2012-08-10/meta_data.json:

    $ curl http://169.254.169.254/openstack/2012-08-10/meta_data.json
    {"uuid": "d8e02d56-2648-49a3-bf97-6be8f1204f38", "availability_zone": "nova", "hostname": "test.novalocal", "launch_index": 0, "meta": {"priority": "low", "role": "webserver"}, "public_keys": {"mykey": "ssh-rsa AAAAB3NzaC1yc2EAAAADAQABAAAAgQDYVEprvtYJXVOBN0XNKVVRNCRX6BlnNbI+USLGais1sUWPwtSg7z9K9vhbYAPUZcq8c/s5S9dg5vTHbsiyPCIDOKyeHba4MUJq8Oh5b2i71/3BISpyxTBH/uZDHdslW2a+SrPDCeuMMoss9NFhBdKtDkdG9zyi0ibmCP6yMdEX8Q== Generated by Nova\n"}, "name": "test"}

Instances also retrieve user data (passed as the user_data parameter in the API call or by the --user_data flag in the nova boot command) through the metadata service, by making a GET request to http://169.254.169.254/openstack/2012-08-10/user_data:

    $ curl http://169.254.169.254/openstack/2012-08-10/user_data
    #!/bin/bash
    echo 'Extra user data here'

The metadata service has an API that is compatible with version 2009-04-04 of the Amazon EC2 metadata service. This means that virtual machine images designed for EC2 will work properly with OpenStack.

The EC2 API exposes a separate URL for each metadata element. Retrieve a listing of these elements by making a GET query to http://169.254.169.254/2009-04-04/meta-data/:

    $ curl http://169.254.169.254/2009-04-04/meta-data/
    ami-id
    ami-launch-index
    ami-manifest-path
    block-device-mapping/
    hostname
    instance-action
    instance-id
    instance-type
    kernel-id
    local-hostname
    local-ipv4
    placement/
    public-hostname
    public-ipv4
    public-keys/
    ramdisk-id
    reservation-id
    security-groups

    $ curl http://169.254.169.254/2009-04-04/meta-data/block-device-mapping/
    ami

    $ curl http://169.254.169.254/2009-04-04/meta-data/placement/
    availability-zone

    $ curl http://169.254.169.254/2009-04-04/meta-data/public-keys/
    0=mykey

Instances can retrieve the public SSH key (identified by keypair name when a user requests a new instance) by making a GET request to http://169.254.169.254/2009-04-04/meta-data/public-keys/0/openssh-key:

    $ curl http://169.254.169.254/2009-04-04/meta-data/public-keys/0/openssh-key
    ssh-rsa AAAAB3NzaC1yc2EAAAADAQABAAAAgQDYVEprvtYJXVOBN0XNKVVRNCRX6BlnNbI+USLGais1sUWPwtSg7z9K9vhbYAPUZcq8c/s5S9dg5vTHbsiyPCIDOKyeHba4MUJq8Oh5b2i71/3BISpyxTBH/uZDHdslW2a+SrPDCeuMMoss9NFhBdKtDkdG9zyi0ibmCP6yMdEX8Q== Generated by Nova

Instances can retrieve user data by making a GET request to http://169.254.169.254/2009-04-04/user-data:

    $ curl http://169.254.169.254/2009-04-04/user-data
    #!/bin/bash
    echo 'Extra user data here'

The metadata service is implemented by either the nova-api service or the nova-api-metadata service. Note that the nova-api-metadata service is generally only used when running in multi-host mode, as it retrieves instance-specific metadata. If you are running the nova-api service, you must have metadata as one of the elements listed in the enabled_apis configuration option in /etc/nova/nova.conf. The default enabled_apis configuration setting includes the metadata service, so you should not need to modify it.

Hosts access the service at 169.254.169.254:80, and this is translated to metadata_host:metadata_port by an iptables rule established by the nova-network service. In multi-host mode, you can set metadata_host to 127.0.0.1.

For instances to reach the metadata service, the nova-network service must configure iptables to NAT port 80 of the 169.254.169.254 address to the IP address specified in metadata_host (this defaults to $my_ip, which is the IP address of the nova-network service) and port specified in metadata_port (which defaults to 8775) in /etc/nova/nova.conf.

Note: The metadata_host configuration option must be an IP address, not a host name.

The default Compute service settings assume that nova-network and nova-api are running on the same host. If this is not the case, in the /etc/nova/nova.conf file on the host running nova-network, set the metadata_host configuration option to the IP address of the host where nova-api is running.

Table 4.2. Description of metadata configuration options

| Configuration option = Default value | Description |
|---|---|
| [DEFAULT] | |
| metadata_cache_expiration = 15 | (IntOpt) Time in seconds to cache metadata; 0 to disable metadata caching entirely (not recommended). Increasing this should improve response times of the metadata API when under heavy load. Higher values may increase memory usage and result in longer times for host metadata changes to take effect. |
| metadata_host = $my_ip | (StrOpt) The IP address for the metadata API server |
| metadata_listen = 0.0.0.0 | (StrOpt) The IP address on which the metadata API will listen. |
| metadata_listen_port = 8775 | (IntOpt) The port on which the metadata API will listen. |
| metadata_manager = nova.api.manager.MetadataManager | (StrOpt) OpenStack metadata service manager |
| metadata_port = 8775 | (IntOpt) The port for the metadata API port |
| metadata_workers = None | (IntOpt) Number of workers for metadata service. The default will be the number of CPUs available. |
| vendordata_driver = nova.api.metadata.vendordata_json.JsonFileVendorData | (StrOpt) Driver to use for vendor data |
| vendordata_jsonfile_path = None | (StrOpt) File to load JSON formatted vendor data from |
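To make the translation from 169.254.169.254:80 to metadata_host:metadata_port concrete, the sketch below builds the argument list of an equivalent iptables DNAT rule. It is an assumption-laden illustration rather than the rule nova-network really installs: the function name `metadata_dnat_rule` is hypothetical, and the chain name defaults to the generic PREROUTING chain because the chain nova-network uses is not stated in this article.

```python
import ipaddress


def metadata_dnat_rule(metadata_host, metadata_port=8775, chain="PREROUTING"):
    """Build iptables arguments that NAT 169.254.169.254:80 to the real API.

    metadata_host must be an IPv4 address, mirroring the note above that a
    host name is not accepted; a host name raises ValueError here.
    """
    ipaddress.IPv4Address(metadata_host)  # validation only
    return [
        "-t", "nat", "-A", chain,
        "-d", "169.254.169.254/32",
        "-p", "tcp", "-m", "tcp", "--dport", "80",
        "-j", "DNAT",
        "--to-destination", "%s:%d" % (metadata_host, metadata_port),
    ]


# 192.0.2.10 is a documentation address standing in for the nova-api host.
rule = " ".join(metadata_dnat_rule("192.0.2.10"))
```

With the defaults from the table, `rule` reads `-t nat -A PREROUTING -d 169.254.169.254/32 -p tcp -m tcp --dport 80 -j DNAT --to-destination 192.0.2.10:8775`.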

Getting the OpenStack information of a virtual machine

    $ curl http://169.254.169.254/openstack/2012-08-10/meta_data.json
    {"uuid": "d8e02d56-2648-49a3-bf97-6be8f1204f38", "availability_zone": "nova", "hostname": "test.novalocal", "launch_index": 0, "meta": {"priority": "low", "role": "webserver"}, "public_keys": {"mykey": "ssh-rsa AAAAB3NzaC1yc2EAAAADAQABAAAAgQDYVEprvtYJXVOBN0XNKVVRNCRX6BlnNbI+USLGais1sUWPwtSg7z9K9vhbYAPUZcq8c/s5S9dg5vTHbsiyPCIDOKyeHba4MUJq8Oh5b2i71/3BISpyxTBH/uZDHdslW2a+SrPDCeuMMoss9NFhBdKtDkdG9zyi0ibmCP6yMdEX8Q== Generated by Nova\n"}, "name": "test"}
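The same request can be made from a script inside the instance. The sketch below uses only the Python standard library; `fetch_metadata` and `summarize` are hypothetical helper names, and the field names are taken from the JSON response shown above.

```python
import json
import urllib.request

METADATA_URL = "http://169.254.169.254/openstack/2012-08-10/meta_data.json"


def fetch_metadata(url=METADATA_URL, timeout=5):
    """GET meta_data.json and decode it (only works inside an instance)."""
    with urllib.request.urlopen(url, timeout=timeout) as response:
        return json.loads(response.read().decode("utf-8"))


def summarize(meta):
    """Pick out the fields a boot script typically cares about."""
    return {
        "uuid": meta["uuid"],
        "hostname": meta["hostname"],
        # "meta" holds the user-defined key/value pairs of the instance.
        "custom": dict(meta.get("meta", {})),
        # "public_keys" maps keypair name to the OpenSSH public key text.
        "key_names": sorted(meta.get("public_keys", {})),
    }


# Shortened copy of the response above, so the example runs anywhere.
sample = json.loads(
    '{"uuid": "d8e02d56-2648-49a3-bf97-6be8f1204f38", '
    '"hostname": "test.novalocal", '
    '"meta": {"priority": "low", "role": "webserver"}, '
    '"public_keys": {"mykey": "ssh-rsa AAAA... Generated by Nova\\n"}, '
    '"name": "test"}'
)
summary = summarize(sample)
```

Inside a running instance, `summarize(fetch_metadata())` would produce the same structure from the live service.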

Getting the EC2 information of a virtual machine

    $ curl http://169.254.169.254/1.0/meta-data
    ami-id
    ami-launch-index
    ami-manifest-path
    hostname
    instance-id
    local-ipv4
    reservation-id

    $ curl http://169.254.169.254/1.0/meta-data/ami-id
    ami-0000005d
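Because the EC2-style API exposes one URL per element, a consumer usually walks the listing. The sketch below parses such listings; it assumes, as in the 2009-04-04 listing shown earlier in this article, that entries ending in "/" are sub-listings and that public-keys/ returns lines of the form index=name. The function names are hypothetical.

```python
def parse_listing(text):
    """Split a meta-data listing into leaf elements and sub-listings."""
    leaves, subdirs = [], []
    for line in text.splitlines():
        entry = line.strip()
        if not entry:
            continue
        if entry.endswith("/"):
            subdirs.append(entry.rstrip("/"))
        else:
            leaves.append(entry)
    return leaves, subdirs


def parse_public_keys(text):
    """Turn lines such as '0=mykey' into {0: 'mykey'}."""
    keys = {}
    for line in text.splitlines():
        index, separator, name = line.strip().partition("=")
        if separator:
            keys[int(index)] = name
    return keys


# Part of the 2009-04-04 listing shown earlier.
leaves, subdirs = parse_listing(
    "ami-id\nblock-device-mapping/\nhostname\nplacement/\npublic-keys/\n"
)
keys = parse_public_keys("0=mykey\n")
```

Each name in `leaves` can be fetched directly by appending it to the meta-data URL, while each name in `subdirs` has to be listed again first.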

Getting user-data

    $ curl http://169.254.169.254/2009-04-04/user-data
    #!/bin/bash
    echo 'Extra user data here'

New endpoint in Havana

    curl http://169.254.169.254/openstack/2013-10-17/vendor_data.json

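Problems you may run into:

The metadata service's default port is 8775, which is the same port on which nova-api serves its metadata API.

Appendix:

Neutron also provides a namespace-metadata-proxy in the DHCP namespace that can serve metadata requests. Before using it, add the following option to the DHCP agent's configuration file: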

    # The DHCP server can assist with providing metadata support on isolated
    # networks. Setting this value to True will cause the DHCP server to append
    # specific host routes to the DHCP request. The metadata service will only
    # be activated when the subnet gateway_ip is None. The guest instance must
    # be configured to request host routes via DHCP (Option 121).
    enable_isolated_metadata = True

    root@controller231:/usr/lib/python2.7/dist-packages# quantum subnet-create testnet01 172.17.17.0/24 --no-gateway --name=sub_no_gateway
    Created a new subnet:
    +------------------+--------------------------------------------------+
    | Field            | Value                                            |
    +------------------+--------------------------------------------------+
    | allocation_pools | {"start": "172.17.17.1", "end": "172.17.17.254"} |
    | cidr             | 172.17.17.0/24                                   |
    | dns_nameservers  |                                                  |
    | enable_dhcp      | True                                             |
    | gateway_ip       |                                                  |
    | host_routes      |                                                  |
    | id               | 34168195-f101-4be4-8ca8-c9d07b58d41a             |
    | ip_version       | 4                                                |
    | name             | sub_no_gateway                                   |
    | network_id       | 3d42a0d4-a980-4613-ae76-a2cddecff054             |
    | tenant_id        | 6fbe9263116a4b68818cf1edce16bc4f                 |
    +------------------+--------------------------------------------------+

    root@network232:~# ip netns | grep qdhcp
    qdhcp-9daeac7c-a98f-430f-8e38-67f9c044e299
    qdhcp-3d42a0d4-a980-4613-ae76-a2cddecff054

    root@network232:~# ip netns exec qdhcp-3d42a0d4-a980-4613-ae76-a2cddecff054 ip -4 a
    11: tap332ce137-ec: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UNKNOWN
        inet 10.1.1.3/24 brd 10.1.1.255 scope global tap332ce137-ec
        inet 10.0.0.2/24 brd 10.0.0.255 scope global tap332ce137-ec
    14: lo: <LOOPBACK,UP,LOWER_UP> mtu 16436 qdisc noqueue state UNKNOWN
        inet 127.0.0.1/8 scope host lo
    21: tap21b5c483-84: <BROADCAST,MULTICAST,UP,LOWER_UP> mtu 1500 qdisc noqueue state UNKNOWN
        inet 10.1.1.3/24 brd 10.1.1.255 scope global tap21b5c483-84
        inet 169.254.169.254/16 brd 169.254.255.255 scope global tap21b5c483-84
        inet 10.0.10.2/24 brd 10.0.10.255 scope global tap21b5c483-84
        inet 10.0.2.2/24 brd 10.0.2.255 scope global tap21b5c483-84
        inet 172.17.17.1/24 brd 172.17.17.255 scope global tap21b5c483-84

    root@network232:~# ip netns exec qdhcp-3d42a0d4-a980-4613-ae76-a2cddecff054 route -n
    Kernel IP routing table
    Destination     Gateway         Genmask         Flags Metric Ref    Use Iface
    10.0.0.0        0.0.0.0         255.255.255.0   U     0      0        0 tap332ce137-ec
    10.0.2.0        0.0.0.0         255.255.255.0   U     0      0        0 tap21b5c483-84
    10.0.10.0       0.0.0.0         255.255.255.0   U     0      0        0 tap21b5c483-84
    10.1.1.0        0.0.0.0         255.255.255.0   U     0      0        0 tap332ce137-ec
    10.1.1.0        0.0.0.0         255.255.255.0   U     0      0        0 tap21b5c483-84
    169.254.0.0     0.0.0.0         255.255.0.0     U     0      0        0 tap21b5c483-84
    172.17.17.0     0.0.0.0         255.255.255.0   U     0      0        0 tap21b5c483-84

    root@network232:~# ip netns exec qdhcp-3d42a0d4-a980-4613-ae76-a2cddecff054 netstat -4 -anpt
    Active Internet connections (servers and established)
    Proto Recv-Q Send-Q Local Address           Foreign Address         State       PID/Program name
    tcp        0      0 0.0.0.0:80              0.0.0.0:*               LISTEN      7035/python
    tcp        0      0 10.1.1.3:53             0.0.0.0:*               LISTEN      14592/dnsmasq
    tcp        0      0 169.254.169.254:53      0.0.0.0:*               LISTEN      14592/dnsmasq
    tcp        0      0 10.0.10.2:53            0.0.0.0:*               LISTEN      14592/dnsmasq
    tcp        0      0 10.0.2.2:53             0.0.0.0:*               LISTEN      14592/dnsmasq
    tcp        0      0 172.17.17.1:53          0.0.0.0:*               LISTEN      14592/dnsmasq

    root@network232:~# ps -f --pid 7035 | fold -s -w 82
    UID        PID  PPID  C STIME TTY          TIME CMD
    root      7035     1  0 Jun17 ?        00:00:00 python
    /usr/bin/quantum-ns-metadata-proxy
    --pid_file=/var/lib/quantum/external/pids/3d42a0d4-a980-4613-ae76-a2cddecff054.pid
    --network_id=3d42a0d4-a980-4613-ae76-a2cddecff054 --state_path=/var/lib/quantum
    --metadata_port=80 --debug --verbose
    --log-file=quantum-ns-metadata-proxy3d42a0d4-a980-4613-ae76-a2cddecff054.log
    --log-dir=/var/log/quantum

    root@network232:~# ps -f --pid 14592 | fold -s -w 82
    UID        PID  PPID  C STIME TTY          TIME CMD
    nobody   14592     1  0 15:34 ?        00:00:00 dnsmasq --no-hosts --no-resolv
    --strict-order --bind-interfaces --interface=tap21b5c483-84 --except-interface=lo
    --pid-file=/var/lib/quantum/dhcp/3d42a0d4-a980-4613-ae76-a2cddecff054/pid
    --dhcp-hostsfile=/var/lib/quantum/dhcp/3d42a0d4-a980-4613-ae76-a2cddecff054/host
    --dhcp-optsfile=/var/lib/quantum/dhcp/3d42a0d4-a980-4613-ae76-a2cddecff054/opts
    --dhcp-script=/usr/bin/quantum-dhcp-agent-dnsmasq-lease-update --leasefile-ro
    --dhcp-range=set:tag0,10.0.2.0,static,120s
    --dhcp-range=set:tag1,172.17.17.0,static,120s
    --dhcp-range=set:tag2,10.0.10.0,static,120s
    --dhcp-range=set:tag3,10.1.1.0,static,120s --conf-file= --domain=openstacklocal

    root@network232:~# cat /var/lib/quantum/dhcp/3d42a0d4-a980-4613-ae76-a2cddecff054/opts
    tag:tag0,option:router,10.0.2.1
    tag:tag1,option:classless-static-route,169.254.169.254/32,172.17.17.1
    tag:tag1,option:router
    tag:tag2,option:dns-server,8.8.8.7,8.8.8.8
    tag:tag2,option:router,10.0.10.1
    tag:tag3,option:dns-server,8.8.8.7,8.8.8.8
    tag:tag3,option:router,10.1.1.1

    ip route add 169.254.169.254/32 via 172.17.17.1

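Once the virtual machine has this static route, requests to 169.254.169.254:80 are sent to the namespace metadata proxy in the DHCP namespace on the network node. The proxy adds the X-Quantum-Network-ID and X-Forwarded-For headers to the message, carrying the network ID and the instance's fixed IP respectively, and sends it over the Unix domain socket to quantum-metadata-agent. From there the flow is the same as in steps 3 and 4 above.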
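The static route reaches the guest through DHCP option 121 (classless static routes). As a worked example, the sketch below encodes the route from the opts file the way RFC 3442 describes: the prefix length, then only the significant octets of the destination, then the router address. The function name `encode_classless_route` is hypothetical, and this shows only the wire format, not how dnsmasq is implemented.

```python
import ipaddress


def encode_classless_route(destination, router):
    """Encode one route for DHCP option 121 (RFC 3442).

    Layout: 1 byte prefix length, ceil(prefix / 8) destination octets,
    4 bytes router address.
    """
    network = ipaddress.IPv4Network(destination)
    significant_octets = (network.prefixlen + 7) // 8
    return (
        bytes([network.prefixlen])
        + network.network_address.packed[:significant_octets]
        + ipaddress.IPv4Address(router).packed
    )


# The route dnsmasq hands out for the gateway-less subnet above.
payload = encode_classless_route("169.254.169.254/32", "172.17.17.1")
```

For this route the payload is nine bytes: 32, then 169 254 169 254, then 172 17 17 1. A guest that does not request option 121 never receives the route, which is why the configuration comment above insists that the instance must ask for host routes via DHCP.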

References:

http://www.sebastien-han.fr/blog/2012/07/11/play-with-openstack-instance-metadata/

http://blog.csdn.net/lynn_kong/article/details/9115033

http://blog.csdn.net/gtt116/article/details/17997053

http://www.cnblogs.com/fbwfbi/p/3590571.html

http://www.choudan.net/2014/02/24/OpenStack-Metadata-%E8%AE%BF%E9%97%AE%E9%97%AE%E9%A2%98.html

http://docs.openstack.org/admin-guide-cloud/content/section_metadata-service.html
