1) SLAM using Kinect 2) SfM: CMVS, PMVS, Bundler 3) VXL

Source: Internet  Editor: 程序博客网  Time: 2024/05/01 09:30

(2)http://www.di.ens.fr/cmvs/ http://download.csdn.net/detail/chlele0105/5607187#comment http://www.cs.cornell.edu/~snavely/bundler/ http://blog.csdn.net/gisstar/article/details/6776041 http://blog.csdn.net/zzzblog/article/details/17166869

(3)http://vxl.sourceforge.net/

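VXL (the Vision-something-Libraries) is a collection of libraries for computer vision research and implementation. It evolved from TargetJr and the IUE, with the aim of being a light, fast, and consistent system. It is portable to many platforms. http://blog.csdn.net/houston11235/article/details/8146344 http://blog.csdn.net/stereohomology/article/details/27207505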

Included libraries

◆ Numerical containers and algorithms: vnl

◆ Image handling: vil

◆ Geometry: vgl

◆ Streaming I/O: vsl

◆ Basic templates: vbl

◆ Utilities: vul

Project home page: http://www.open-open.com/lib/view/home/1328931840421

I am planning to use the Kinect for indoor robot navigation, and have collected some of the better papers from recent years: "基于Kinect系统的场景建模与机器人自主导航" (Scene Modeling and Autonomous Robot Navigation Based on the Kinect System), "Mobile Robots Navigation in Indoor Environments Using Kinect", "Using a Depth Camera for Indoor Robot Localization and Navigation", "Depth Camera Based Indoor Mobile Robot Localization and Navigation", and "Using the Kinect as a Navigation Sensor for Mobile Robotics".

by Top Liu

Scene Modeling and Autonomous Robot Navigation Based on the Kinect System (基于Kinect系统的场景建模与机器人自主导航)

Download: http://robot.sia.cn/CN/article/downloadArticleFile.do?attachType=PDF&id=15382

International papers:

1.Mobile Robots Navigation in Indoor Environments Using Kinect

This paper appears in:

Critical Embedded Systems (CBSEC), 2012 Second Brazilian

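For the convenience of readers without an IEEE account, I have uploaded it to Baidu Wenku. The papers below can be downloaded directly by clicking on them.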

2.Using a Depth Camera for Indoor Robot Localization and Navigation

Abstract: Depth cameras are a rich source of information for robot indoor localization and safe navigation. The recent availability of the low-cost Kinect sensor provides a valid alternative to other available sensors, namely laser-range finders. This paper presents the first results of the application of a Kinect sensor on a wheeled indoor service robot for elderly assistance. The robot makes use of a metric map of the environment’s walls and uses the depth information of the Kinect camera to detect the walls and localize itself in the environment. In our approach an error minimization method is used providing real-time efficient robot pose estimation. Furthermore, the depth camera provides information about the obstacles surrounding the robot, allowing the application of path-finding algorithms such as D* Lite achieving safe and robust navigation. Using the proposed solution, we were able to adapt a robotic soccer robot developed at the University of Aveiro to successfully navigate in a domestic environment, across different rooms without colliding with obstacles in the environment.
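To make the idea of localizing against a wall map by error minimization more concrete, here is a minimal sketch. It is not the method used in the paper: the wall map as a list of 2D line segments, the function names, and the simple compass (pattern) search are all assumptions chosen for illustration. The error is the sum of squared distances from the measured points, transformed by a candidate pose, to their nearest wall.

```python
import math

def point_segment_distance(p, a, b):
    """Euclidean distance from 2D point p to the segment a-b."""
    ax, ay = a
    bx, by = b
    px, py = p
    dx, dy = bx - ax, by - ay
    length_sq = dx * dx + dy * dy
    if length_sq == 0:
        t = 0.0
    else:
        # Clamp the projection parameter so the closest point stays on the segment.
        t = max(0.0, min(1.0, ((px - ax) * dx + (py - ay) * dy) / length_sq))
    return math.hypot(px - (ax + t * dx), py - (ay + t * dy))

def pose_error(pose, points_robot, walls):
    """Sum of squared distances from the transformed points to the nearest wall."""
    x, y, theta = pose
    c, s = math.cos(theta), math.sin(theta)
    total = 0.0
    for px, py in points_robot:
        world = (x + c * px - s * py, y + s * px + c * py)
        total += min(point_segment_distance(world, a, b) for a, b in walls) ** 2
    return total

def localize(initial_pose, points_robot, walls, step=0.1, tol=1e-5, max_iter=10000):
    """Compass search over (x, y, theta); an illustrative stand-in optimizer."""
    pose = list(initial_pose)
    best = pose_error(pose, points_robot, walls)
    for _ in range(max_iter):
        if step < tol:
            break
        improved = False
        for i in range(3):
            for delta in (step, -step):
                candidate = list(pose)
                candidate[i] += delta
                err = pose_error(candidate, points_robot, walls)
                if err < best:
                    pose, best, improved = candidate, err, True
        if not improved:
            step /= 2  # no direction helps at this scale, so refine the step
    return tuple(pose), best
```

A search like this only refines a reasonable initial guess (for example, from odometry); it can settle in a wrong local minimum if the starting pose is far from the truth or the walls are ambiguous.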

3.Depth Camera Based Indoor Mobile Robot Localization and Navigation

Abstract: The sheer volume of data generated by depth cameras provides a challenge to process in real time, in particular when used for indoor mobile robot localization and navigation. We introduce the Fast Sampling Plane Filtering (FSPF) algorithm to reduce the volume of the 3D point cloud by sampling points from the depth image, and classifying local grouped sets of points as belonging to planes in 3D (the “plane filtered” points) or points that do not correspond to planes within a specified error margin (the “outlier” points). We then introduce a localization algorithm based on an observation model that down-projects the plane filtered points on to 2D, and assigns correspondences for each point to lines in the 2D map. The full sampled point cloud (consisting of both plane filtered as well as outlier points) is processed for obstacle avoidance for autonomous navigation. All our algorithms process only the depth information, and do not require additional RGB data. The FSPF, localization and obstacle avoidance algorithms run in real time at full camera frame rates (30Hz) with low CPU requirements (16%). We provide experimental results demonstrating the effectiveness of our approach for indoor mobile robot localization and navigation. We further compare the accuracy and robustness in localization using depth cameras with FSPF vs. alternative approaches that simulate laser rangefinder scans from the 3D data.
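The sampling-and-classification step described in this abstract can be sketched roughly as follows. This is a simplified illustration, not the authors' FSPF implementation: the pinhole intrinsics (`fx`, `fy`, `cx`, `cy`), the window size, the thresholds, and all function names are assumptions. The sketch picks a random pixel, fits a plane through it and two nearby pixels, then samples more nearby points and labels them as plane-filtered or outlier depending on how many lie within the error margin.

```python
import math
import random

def backproject(u, v, depth, fx, fy, cx, cy):
    """Pinhole back-projection of pixel (u, v) with depth in metres to 3D."""
    return ((u - cx) * depth / fx, (v - cy) * depth / fy, depth)

def plane_from_points(p0, p1, p2):
    """Return (unit normal n, offset d) with n.p = d, or None if degenerate."""
    a = [p1[i] - p0[i] for i in range(3)]
    b = [p2[i] - p0[i] for i in range(3)]
    n = (a[1] * b[2] - a[2] * b[1],
         a[2] * b[0] - a[0] * b[2],
         a[0] * b[1] - a[1] * b[0])
    norm = math.sqrt(sum(c * c for c in n))
    if norm < 1e-9:
        return None  # the three points coincide or are collinear
    n = tuple(c / norm for c in n)
    return n, sum(n[i] * p0[i] for i in range(3))

def plane_filter(depth_image, fx, fy, cx, cy, n_samples=200, window=5,
                 local_samples=20, max_error=0.02, min_inlier_fraction=0.8,
                 rng=None):
    """Split sampled 3D points into (plane_points, outlier_points)."""
    rng = rng or random.Random(0)
    height, width = len(depth_image), len(depth_image[0])

    def point_at(u, v):
        depth = depth_image[v][u]
        # Treat non-positive depth as an invalid reading.
        return backproject(u, v, depth, fx, fy, cx, cy) if depth > 0 else None

    def sample_near(u0, v0):
        u = min(width - 1, max(0, u0 + rng.randint(-window, window)))
        v = min(height - 1, max(0, v0 + rng.randint(-window, window)))
        return point_at(u, v)

    plane_points, outlier_points = [], []
    for _ in range(n_samples):
        u0, v0 = rng.randrange(width), rng.randrange(height)
        p0, p1, p2 = point_at(u0, v0), sample_near(u0, v0), sample_near(u0, v0)
        if p0 is None or p1 is None or p2 is None:
            continue
        plane = plane_from_points(p0, p1, p2)
        if plane is None:
            continue
        normal, d = plane
        local = [p for p in (sample_near(u0, v0) for _ in range(local_samples))
                 if p is not None]
        inliers = [p for p in local
                   if abs(sum(normal[i] * p[i] for i in range(3)) - d) <= max_error]
        if local and len(inliers) >= min_inlier_fraction * len(local):
            plane_points.extend(inliers)
        else:
            outlier_points.extend(local)
    return plane_points, outlier_points
```

Because only a fixed number of neighbourhoods is sampled, the cost does not grow with the full image resolution, which is the point of sampling from the depth image instead of processing every pixel.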

4.Using a Depth Camera for Indoor Robot Localization and Navigation

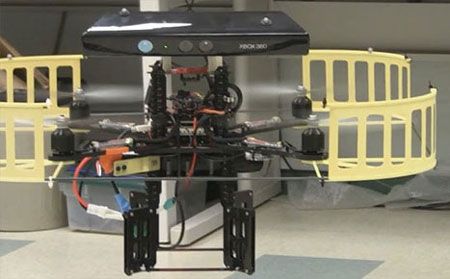

5.Using the Kinect as a Navigation Sensor for Mobile Robotics

ABSTRACT

Localisation and mapping are the key requirements in mobile robotics to accomplish navigation. Frequently laser scanners are used, but they are expensive and only provide 2D mapping capabilities. In this paper we investigate the suitability of the Xbox Kinect optical sensor for navigation and simultaneous localisation and mapping. We present a prototype which uses the Kinect to capture 3D point cloud data of the external environment. The data is used in a 3D SLAM to create 3D models of the environment and localise the robot in the environment. By projecting the 3D point cloud into a 2D plane, we then use the Kinect sensor data for a 2D SLAM algorithm. We compare the performance of Kinect-based 2D and 3D SLAM algorithm with traditional solutions and show that the use of the Kinect sensor is viable. However, its smaller field of view and depth range and the higher processing requirements for the resulting sensor data limit its range of applications in practice.
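The projection of a 3D point cloud into a 2D plane mentioned in this abstract can be illustrated with a short sketch that turns camera-frame points into a laser-style range scan. The details are assumptions for illustration and are not taken from the paper: the axis convention (x right, y down, z forward), the height band, the 57-degree default field of view, and the function name are all hypothetical choices.

```python
import math

def pointcloud_to_scan(points, fov_deg=57.0, n_beams=64,
                       min_height=-0.1, max_height=0.1, max_range=4.0):
    """Project camera-frame points (x right, y down, z forward) to a 2D scan.

    Returns one range per beam; beams with no point stay at infinity.
    """
    half_fov = math.radians(fov_deg) / 2
    scan = [math.inf] * n_beams
    for x, y, z in points:
        # Keep only points in front of the camera and inside the height band.
        if z <= 0 or not (min_height <= y <= max_height):
            continue
        bearing = math.atan2(x, z)
        if abs(bearing) > half_fov:
            continue
        distance = math.hypot(x, z)  # range measured in the horizontal plane
        if distance > max_range:
            continue
        beam = min(n_beams - 1, int((bearing + half_fov) / (2 * half_fov) * n_beams))
        scan[beam] = min(scan[beam], distance)  # the closest obstacle wins
    return scan
```

The resulting list of ranges has the same shape as a planar laser scan, so it could in principle be fed to a 2D SLAM front end, at the cost of the narrower field of view and shorter range that the abstract points out.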

Mobile Autonomous Robot using the Kinect
