Spark Components: GraphX Study 2 -- Working with triplets

Source: Internet | Editor: 程序博客网 | Time: 2024/06/05 08:35

More code: https://github.com/xubo245/SparkLearning

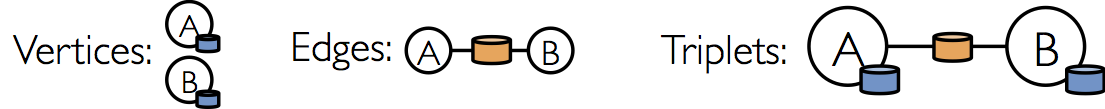

1. Explanation:
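`graph.triplets` returns an `RDD[EdgeTriplet[VD, ED]]`. `EdgeTriplet` extends `Edge` with the fields `srcAttr` and `dstAttr`, so each element carries an edge's `srcId`, `dstId` and `attr` together with the attributes of both endpoint vertices. The GraphX programming guide describes the triplet view as logically a join of the vertex and edge properties. The sketch below is a minimal plain-Scala model of that join. It assumes ordinary `Map`/`Seq` collections in place of RDDs so it runs without Spark. `TripletModel`, `Triplet`, `triplets` and `facts` are hypothetical names for illustration, not GraphX API, and the local `Edge` case class only mimics `org.apache.spark.graphx.Edge`.

```scala
// Plain-Scala model of GraphX's triplets view (no Spark required).
// TripletModel, Triplet, triplets and facts are hypothetical names, not the GraphX API.
object TripletModel {
  type VertexId = Long

  // Stand-in for GraphX's Edge(srcId, dstId, attr)
  final case class Edge[ED](srcId: VertexId, dstId: VertexId, attr: ED)

  // Stand-in for EdgeTriplet: an edge plus the attributes of both endpoints
  final case class Triplet[VD, ED](srcId: VertexId, srcAttr: VD, attr: ED,
                                   dstId: VertexId, dstAttr: VD)

  // Join every edge with its source and destination vertex attributes.
  // Endpoints missing from `vertices` get `default`, mirroring the
  // default vertex attribute passed to Graph(vertices, edges, default).
  def triplets[VD, ED](vertices: Map[VertexId, VD], edges: Seq[Edge[ED]],
                       default: VD): Seq[Triplet[VD, ED]] =
    edges.map { e =>
      Triplet(e.srcId, vertices.getOrElse(e.srcId, default), e.attr,
              e.dstId, vertices.getOrElse(e.dstId, default))
    }

  // Same vertex and edge data as the GraphX program
  val users: Map[VertexId, (String, String)] = Map(
    (3L, ("rxin", "student")), (7L, ("jgonzal", "postdoc")),
    (5L, ("franklin", "prof")), (2L, ("istoica", "prof")))

  val relationships: Seq[Edge[String]] = Seq(
    Edge(3L, 7L, "collab"), Edge(5L, 3L, "advisor"),
    Edge(2L, 5L, "colleague"), Edge(5L, 7L, "pi"))

  val defaultUser = ("John Doe", "Missing")

  // Builds the same sentence as the `facts` RDD in the GraphX program
  def facts(ts: Seq[Triplet[(String, String), String]]): Seq[String] =
    ts.map(t => t.srcAttr._1 + " is the " + t.attr + " of " + t.dstAttr._1)

  def main(args: Array[String]): Unit =
    facts(triplets(users, relationships, defaultUser)).foreach(println)
}
```

Because every edge endpoint in this example exists in `users`, `defaultUser` is never used here. An edge pointing at an unknown vertex id, such as `Edge(7L, 9L, ...)`, would get `("John Doe", "Missing")` as its `dstAttr`. In real GraphX the join runs distributed across partitions, so the order of `collect` results need not match the input order.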

2. Code:

```scala
/**
 * @author xubo
 * ref http://spark.apache.org/docs/1.5.2/graphx-programming-guide.html
 * time 20160503
 */
package org.apache.spark.graphx.learning

import org.apache.spark._
import org.apache.spark.graphx._
// To make some of the examples work we will also need RDD
import org.apache.spark.rdd.RDD

object gettingStart {
  def main(args: Array[String]): Unit = {
    val conf = new SparkConf().setAppName("gettingStart").setMaster("local[4]")
    // Build the SparkContext (the official guide assumes it already exists)
    val sc = new SparkContext(conf)
    // Create an RDD for the vertices: (id, (name, position))
    val users: RDD[(VertexId, (String, String))] =
      sc.parallelize(Array((3L, ("rxin", "student")), (7L, ("jgonzal", "postdoc")),
        (5L, ("franklin", "prof")), (2L, ("istoica", "prof"))))
    // Create an RDD for edges
    val relationships: RDD[Edge[String]] =
      sc.parallelize(Array(Edge(3L, 7L, "collab"), Edge(5L, 3L, "advisor"),
        Edge(2L, 5L, "colleague"), Edge(5L, 7L, "pi")))
    // Define a default user in case there are relationships with a missing user
    val defaultUser = ("John Doe", "Missing")
    // Build the initial Graph
    val graph = Graph(users, relationships, defaultUser)
    // Count all users which are postdocs
    println(graph.vertices.filter { case (id, (name, pos)) => pos == "postdoc" }.count)
    // Count all the edges where src > dst
    println(graph.edges.filter(e => e.srcId > e.dstId).count)
    // Another method: pattern-match on the Edge case class
    println(graph.edges.filter { case Edge(src, dst, prop) => src > dst }.count)
    // Reverse: count all the edges where src < dst
    println(graph.edges.filter { case Edge(src, dst, prop) => src < dst }.count)
    // Use the triplets view to create an RDD of facts.
    val facts: RDD[String] = graph.triplets.map(triplet =>
      triplet.srcAttr._1 + " is the " + triplet.attr + " of " + triplet.dstAttr._1)
    facts.collect.foreach(println(_))
    // Use the triplets view again, this time also printing vertex ids and both attributes
    println("\ntriplets:")
    val facts2: RDD[String] = graph.triplets.map(triplet =>
      triplet.srcId + "(" + triplet.srcAttr._1 + " " + triplet.srcAttr._2 + ")" +
        " is the" + triplet.attr + " of " +
        triplet.dstId + "(" + triplet.dstAttr._1 + " " + triplet.dstAttr._2 + ")")
    facts2.collect.foreach(println(_))
    // Release Spark resources
    sc.stop()
  }
}
```

3. Results:

```
2016-05-03 19:18:48 WARN MetricsSystem:71 - Using default name DAGScheduler for source because spark.app.id is not set.
1
1
1
3
rxin is the collab of jgonzal
franklin is the advisor of rxin
istoica is the colleague of franklin
franklin is the pi of jgonzal

triplets:
3(rxin student) is thecollab of 7(jgonzal postdoc)
5(franklin prof) is theadvisor of 3(rxin student)
2(istoica prof) is thecolleague of 5(franklin prof)
5(franklin prof) is thepi of 7(jgonzal postdoc)
```

In the second block, `is thecollab` and the similar outputs have no space because the string literal `" is the"` in `facts2` has no trailing space.
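The four counts printed before the facts (1, 1, 1, 3) can be checked by hand. Only jgonzal is a postdoc. Only `Edge(5L, 3L, "advisor")` has `srcId > dstId`, and the second and third lines print that same query written two ways. The remaining three edges have `srcId < dstId`. The following plain-Scala sketch (no Spark) recomputes those numbers over ordinary collections. `CountCheck` and its fields are hypothetical names for illustration.

```scala
// Plain-Scala re-check of the four counts printed by the GraphX program.
// CountCheck is a hypothetical name; the data is copied from the example graph.
object CountCheck {
  // (srcId, dstId, attr) for the four relationships
  val edges: Seq[(Long, Long, String)] = Seq(
    (3L, 7L, "collab"), (5L, 3L, "advisor"), (2L, 5L, "colleague"), (5L, 7L, "pi"))

  // Vertex positions from the users RDD
  val positions: Map[Long, String] =
    Map(3L -> "student", 7L -> "postdoc", 5L -> "prof", 2L -> "prof")

  val postdocs: Int = positions.values.count(_ == "postdoc")
  val srcGreater: Int = edges.count { case (src, dst, _) => src > dst }
  val srcLess: Int = edges.count { case (src, dst, _) => src < dst }

  def main(args: Array[String]): Unit =
    // Same order as the GraphX program, which prints the src > dst count twice
    Seq(postdocs, srcGreater, srcGreater, srcLess).foreach(println)
}
```

Since the graph has no self-loops (`srcId == dstId`), the `src > dst` and `src < dst` counts together cover all four edges: 1 + 3 = 4.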

References

[1] http://spark.apache.org/docs/1.5.2/graphx-programming-guide.html

[2] https://github.com/xubo245/SparkLearning
