Hive 安装部署及测试

来源:互联网 发布:全球环境污染数据统计 编辑:程序博客网 时间:2024/04/29 03:35

标签(空格分隔): hive

1) Hive 如何配置与Hadoop 关联,进行创建表,加载数据测试

2) 在Linux下安装MySQL数据库

3) 配置Hive元数据存储在MySQL中,查看相关元数据表信息

4) 熟悉基本的DML和DDL语句(创建数据库、表及加载数据和基本查询语句Select)

hadoop,spark,kafka交流群:459898801

1,相关文档及环境要求

1.1,相关文档

- 官网:http://hive.apache.org

- 文档:hive home getting start

- 下载:download

1.2,环境要求:

- java 1.7

- Hadoop 2.x (preferred)

- 本文配置的环境是centos6.4

2,安装hive

2.1 解压eapache-hive-0.13.1-bin.tar.gz

$tar -zxvf apache-hive-0.13.1-bin.tar.gz ./2.2 设置HIVE_HOME为hive安装目录

$ cd hive-0.13.1$ export HIVE_HOME={{pwd}}2.3 添加HIVE_HOME到系统PATH中

$ export PATH=$HIVE_HOME/bin:$PATH3,运行hive

3.1 创建相关目录

$bin/hdfs dfs -mkdir /tmp$bin/hdfs dfs -mkdir -p /user/hive/warehouse$bin/hdfs dfs -chmod g+w /tmp$bin/hdfs dfs -chmod g+w /user/hive/warehouse3.2 修改配置文件

在hive-env.xml文件中添加如下内容:

# Set HADOOP_HOME to point to a specific hadoop install directoryHADOOP_HOME=/opt/modules/hadoop-2.5.0/# Hive Configuration Directory can be controlled by:export HIVE_CONF_DIR=/opt/modules/hive-0.13.1/conf3.3 运行hive命令界面

(1).进入hive shell

//hive安装目录$bin/hive(2).创建table 名为student。

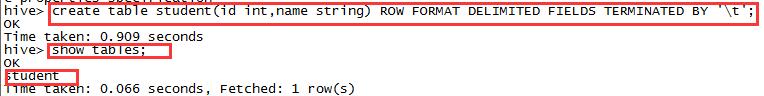

>use default;>create table student(id int,name string) ROW FORMAT DELIMITED FIELDS TERMINATED BY '\t';>show tables;执行结束后:

(2).上传数据

$vi stu.txt//内容:1001 sean1002 jike1003 tony//上传数据到表中>load data local inpath '/opt/datas/stu.txt' into table student;(3).查看表中数据

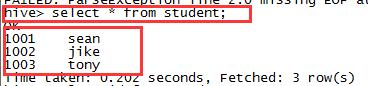

>select * from student;执行结束后:

(4).查看表中某一个列的数据

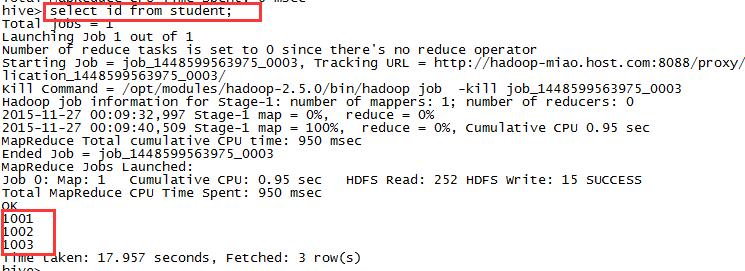

>select id from student;执行结束后:

3.4 运行 HiveServer2 and Beeline

//hive安装目录$bin/hiveserver2$bin/beeline -u jdbc:hive2://$HS2_HOST:$HS2_PORT4,安装mySQL

4.1 联网安装,使用yum

设置自动获取IP地址4.2 替换系统镜像源

$ cd /etc/yum.repos.d $ sudo mv CentOS-Base.repo CentOS-Base.repo.bak $ sudo touch CentOS6-Base-163.repo $ sudo vi CentOS6-Base-163.repo //安装完成后执行 $ sudo yum clean all4.3 安装MYSQL

$ sudo yum list|grep mysql $ sudo yum install mysql-server -y4.4 启动Mysql

$ sudo service mysqld status $ sudo service mysqld start4.5 设置密码

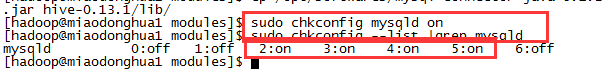

# /usr/bin/mysqladmin -u root password '123456'4.8 设置开机启动mysqld

$sudo chkconfig mysqld on$sudo chkconfig --list |grep mysqld执行结束后

4.7 测试

$ mysql -uroot -p123456 > show databases ; > use test ; > show tables ;5,hive常用属性配置

5.1 Hive hive-site.xml配置

首先创建vi hive-site.xml,并输入任意字符。

<configuration> <property> <name>javax.jdo.option.ConnectionURL</name> <value>jdbc:mysql://hadoop-miao.host.com/metastore_db?createDatabaseIfNotExist=true</value> <description>JDBC connect string for a JDBC metastore</description> </property> <property> <name>javax.jdo.option.ConnectionDriverName</name> <value>com.mysql.jdbc.Driver</value> <description>Driver class name for a JDBC metastore</description> </property> <property> <name>javax.jdo.option.ConnectionUserName</name> <value>root</value> <description>username to use against metastore database</description> </property> <property> <name>javax.jdo.option.ConnectionPassword</name> <value>123456</value> <description>password to use against metastore database</description> </property>5.2 添加数据库驱动

$tar -zxf mysql-connector-java-5.1.27.tar.gz$cd mysql-connector-java-5.1.27$cp mysql-connector-java-5.1.27-bin.jar /opt/modules/hive-0.13.1/lib/5.3 配置用户链接权限

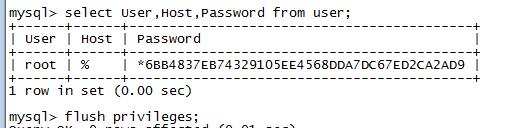

$mysql -uroot -p123456>use mysql;>select User,Host,Password from user;>update user set Host='%' where User = 'root' and Host='localhost';>delete from user where user='root' and host='127.0.0.1';>delete from user where user='root' and host='miaodonghua.host';>delete from user where host='localhost';>delete from user where host='hadoop-miao.host.com';>flush privileges;//查看修改结果>select User,Host,Password from user;执行结束后

5.4 Hive数据仓库位置配置

默认是:/user/hive/warehouse,可按照如下条件修改:

<property> <name>hive.metastore.warehouse.dir</name> <value>/user/hadoop/warehouse</value> <description>location of default database for the warehouse</description> </property> 注意权限: $bin/hdfs dfs -mkdir -p /user/hive/warehouse $bin/hdfs dfs -chmod g+w /user/hive/warehouse5.5 Hive运行日志信息位置

打开hive-log4j.properties,由模板hive-log4j.properties.template修改$vi $HIVE_HOME/conf/log4j.properties//系统/tmp目录hive.log.dir=${java.io.tmpdir}/${user.name}hive.log.file=hive.log可修改为:hive.log.dir=/opt/modules/hive-0.13.1/logshive.log.file=hive.log5.6 指定hive运行时显示的log日志的级别

$HIVE_HOME/conf/log4j.properties 默认是hive.root.logger=INFO,DRFA 可修改为hive.root.logger=DEBUG,DRFA DEBUG调试时可用5.7 在cli命令行上显示当前数据库,以及查询表的行头信息

$HIVE_HOME/conf/hive-site.xml //添加如下内容 <property> <name>hive.cli.print.header</name> <value>true</value> </property> <property> <name>hive.cli.print.current.db</name> <value>true</value> </property>5.8 在启动hive时设置配置属性信息

$bin/hive --hiveconf <property=value>eg:$bin/hive --hiveconf hive.cli.print.current.db=false注意:此种方式设置的属性值,仅仅在当前回话session生效.5.9 hive配置优先级的关系如下:

--hiveconf > hive-site.xml > hive-default.xml5.10 查看当前所有的配置信息

> set ; > set hive.cli.print.header ; ## 获取属性值 > set hive.cli.print.header = false ; ## 设置属性值总共四种方式设置属性值:

set > --hiveconf > hive-site.xml > hive-default.xml6,Hive Shell常用操作

6.1 Hive 类sql语句常用操作

(1).创建表并查询

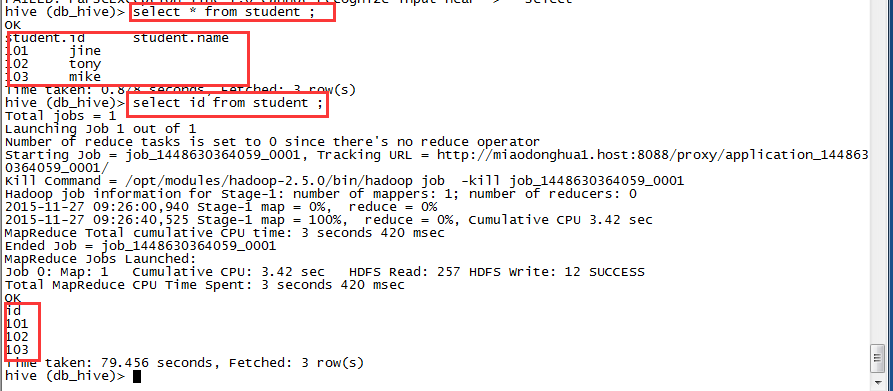

>show databases ;>create database db_hive ; >use db_hive ;>create table student(id int, name string) ROW FORMAT DELIMITED FIELDS TERMINATED BY '\t' ; >load data inpath '/user/hive/warehouse/student/stu.txt' into table student ;>select * from student ;>select id from student ;上述指令执行成功后,secureCRT结果如下:

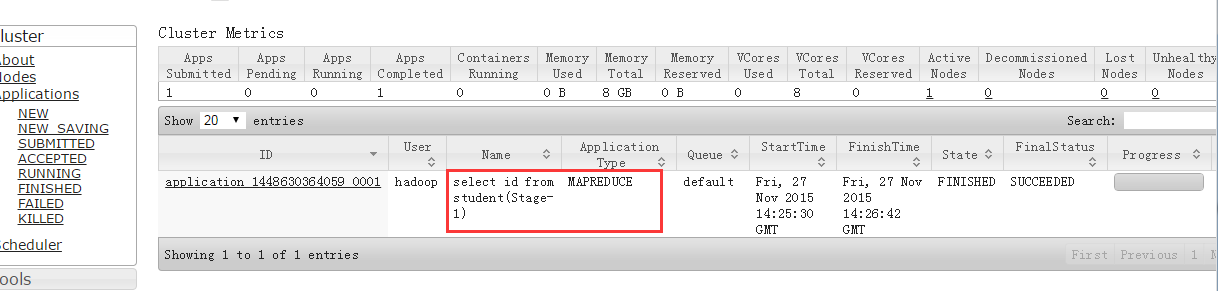

可以在浏览器miaodonghua1.host:8088端口查看:

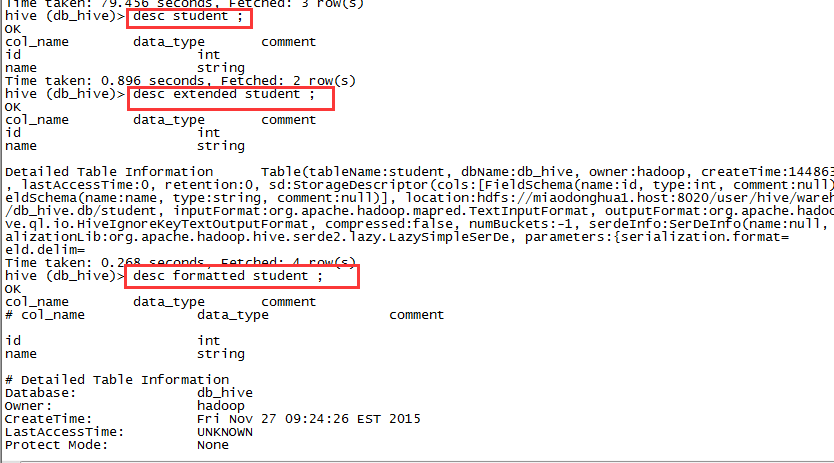

(2).查看表的描述信息

>desc student ;>desc extended student ;>desc formatted student ;执行成功后:

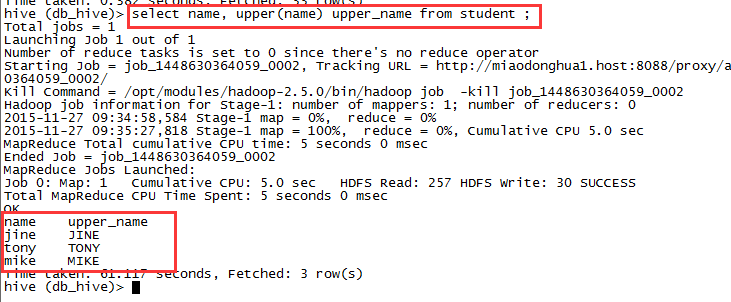

(3).函数的使用

>show functions ;>desc function upper ;>desc function extended upper ;>select name, upper(name) upper_name from student ;执行结束后:

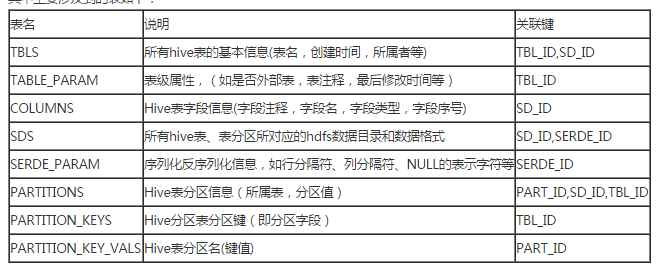

6.2 在mysql中查看hive元数据信息

hive元数据信息具体如下:

>use metastore;>select * from TBLS;>select * from COLUMN_NAME_V2;>select * from DBS6.3 Hive Shell常用操作

(1).查看命令帮助

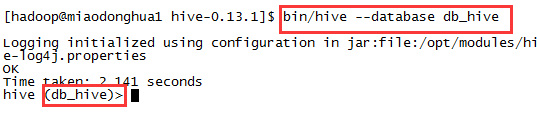

$ bin/hive -helpusage: hive -d,--define <key=value> Variable subsitution to apply to hive commands. e.g. -d A=B or --define A=B --database <databasename> Specify the database to use -e <quoted-query-string> SQL from command line -f <filename> SQL from files -H,--help Print help information -h <hostname> connecting to Hive Server on remote host --hiveconf <property=value> Use value for given property --hivevar <key=value> Variable subsitution to apply to hive commands. e.g. --hivevar A=B -i <filename> Initialization SQL file -p <port> connecting to Hive Server on port number -S,--silent Silent mode in interactive shell -v,--verbose Verbose mode (echo executed SQL to the console)(2).登录指定数据库

$bin/hive --database db_hive

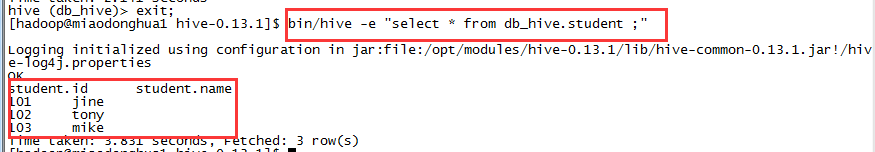

(3).直接查询指定数据库的指定表

$bin/hive -e "select * from db_hive.student ;"

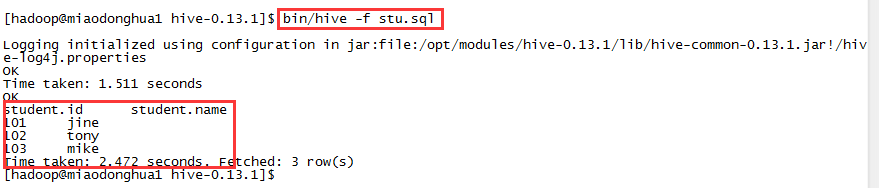

(4).执行hive脚本

$bin/hive -f stu.sql--stu.sql脚本内容 use db_hive ;select *from student ;

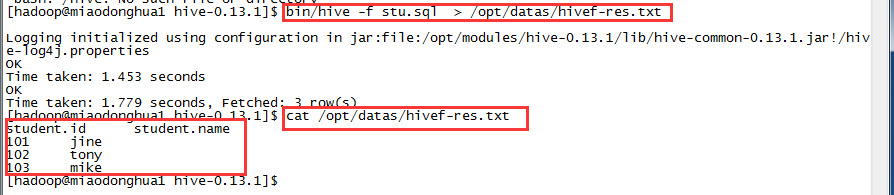

(5).重定向输出结果

$bin/hive -f stu.sql > /opt/datas/hivef-res.txt

6.4 Hive常用交互式操作

>quit/exit ;//退出>set key=value ;//修改特定变量的值 //注意: 如果变量名拼写错误,不会报错>set/set -v;//>! <command>;//从Hive shell执行一个shell命令代表执行本地命令>dfs <dfs command> ;//从Hive shell执行一个dfs命令>query string ;//执行一个Hive查询,然后输出结果到标准输出错误修改

<property> <name>hive.metastore.uris</name> <value>thrift://miaodonghua1.host:9083</value></property>配置这个要是不启动metastore服务,会报如下错误:

Logging initialized using configuration in file:/opt/cdh2.3.6/hive-0.13.1-cdh5.3.6/conf/hive-log4j.propertiesException in thread "main" java.lang.RuntimeException: java.lang.RuntimeException: Unable to instantiate org.apache.hadoop.hive.metastore.HiveMetaStoreClient at org.apache.hadoop.hive.ql.session.SessionState.start(SessionState.java:371) at org.apache.hadoop.hive.cli.CliDriver.run(CliDriver.java:689) at org.apache.hadoop.hive.cli.CliDriver.main(CliDriver.java:633) at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method) at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:57) at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43) at java.lang.reflect.Method.invoke(Method.java:606) at org.apache.hadoop.util.RunJar.main(RunJar.java:212)Caused by: java.lang.RuntimeException: Unable to instantiate org.apache.hadoop.hive.metastore.HiveMetaStoreClient at org.apache.hadoop.hive.metastore.MetaStoreUtils.newInstance(MetaStoreUtils.java:1426) at org.apache.hadoop.hive.metastore.RetryingMetaStoreClient.<init>(RetryingMetaStoreClient.java:63) at org.apache.hadoop.hive.metastore.RetryingMetaStoreClient.getProxy(RetryingMetaStoreClient.java:73) at org.apache.hadoop.hive.ql.metadata.Hive.createMetaStoreClient(Hive.java:2625) at org.apache.hadoop.hive.ql.metadata.Hive.getMSC(Hive.java:2644) at org.apache.hadoop.hive.ql.session.SessionState.start(SessionState.java:365) ... 7 moreCaused by: java.lang.reflect.InvocationTargetException at sun.reflect.NativeConstructorAccessorImpl.newInstance0(Native Method) at sun.reflect.NativeConstructorAccessorImpl.newInstance(NativeConstructorAccessorImpl.java:57) at sun.reflect.DelegatingConstructorAccessorImpl.newInstance(DelegatingConstructorAccessorImpl.java:45) at java.lang.reflect.Constructor.newInstance(Constructor.java:526) at org.apache.hadoop.hive.metastore.MetaStoreUtils.newInstance(MetaStoreUtils.java:1424) ... 12 moreCaused by: MetaException(message:Could not connect to meta store using any of the URIs provided. Most recent failure: org.apache.thrift.transport.TTransportException: java.net.ConnectException: Connection refused at org.apache.thrift.transport.TSocket.open(TSocket.java:185) at org.apache.hadoop.hive.metastore.HiveMetaStoreClient.open(HiveMetaStoreClient.java:351) at org.apache.hadoop.hive.metastore.HiveMetaStoreClient.<init>(HiveMetaStoreClient.java:219) at sun.reflect.NativeConstructorAccessorImpl.newInstance0(Native Method) at sun.reflect.NativeConstructorAccessorImpl.newInstance(NativeConstructorAccessorImpl.java:57) at sun.reflect.DelegatingConstructorAccessorImpl.newInstance(DelegatingConstructorAccessorImpl.java:45) at java.lang.reflect.Constructor.newInstance(Constructor.java:526) at org.apache.hadoop.hive.metastore.MetaStoreUtils.newInstance(MetaStoreUtils.java:1424) at org.apache.hadoop.hive.metastore.RetryingMetaStoreClient.<init>(RetryingMetaStoreClient.java:63) at org.apache.hadoop.hive.metastore.RetryingMetaStoreClient.getProxy(RetryingMetaStoreClient.java:73) at org.apache.hadoop.hive.ql.metadata.Hive.createMetaStoreClient(Hive.java:2625) at org.apache.hadoop.hive.ql.metadata.Hive.getMSC(Hive.java:2644) at org.apache.hadoop.hive.ql.session.SessionState.start(SessionState.java:365) at org.apache.hadoop.hive.cli.CliDriver.run(CliDriver.java:689) at org.apache.hadoop.hive.cli.CliDriver.main(CliDriver.java:633) at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method) at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:57) at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43) at java.lang.reflect.Method.invoke(Method.java:606) at org.apache.hadoop.util.RunJar.main(RunJar.java:212)Caused by: java.net.ConnectException: Connection refused at java.net.PlainSocketImpl.socketConnect(Native Method) at java.net.AbstractPlainSocketImpl.doConnect(AbstractPlainSocketImpl.java:339) at java.net.AbstractPlainSocketImpl.connectToAddress(AbstractPlainSocketImpl.java:200) at java.net.AbstractPlainSocketImpl.connect(AbstractPlainSocketImpl.java:182) at java.net.SocksSocketImpl.connect(SocksSocketImpl.java:392) at java.net.Socket.connect(Socket.java:579) at org.apache.thrift.transport.TSocket.open(TSocket.java:180) ... 19 more) at org.apache.hadoop.hive.metastore.HiveMetaStoreClient.open(HiveMetaStoreClient.java:398) at org.apache.hadoop.hive.metastore.HiveMetaStoreClient.<init>(HiveMetaStoreClient.java:219) ... 17 morehive配置的三种模式

0 0

- Hive 安装部署及测试

- 十五、Hive 安装部署及测试

- hive的安装部署及测试,MySql安装

- (一)Hive的安装部署及测试

- Hive on Tez部署及验证测试

- HIVE安装及简单测试

- Hive 安装及测试一

- Hive 安装及测试二

- Hive 安装及测试三

- Hive 的安装部署和测试

- Hive安装、配置及基本测试

- Hive llap服务安装说明及测试

- Hive on CDH4部署、调错及测试

- Hive on CDH4部署、调错及测试

- Hive介绍及部署

- Hive安装与部署

- hive安装部署

- Hive安装部署详解

- Functional Programming Using Fsharp个人答案

- EventBus3.0 使用个人总结

- 初探OpenMP

- 几种排序算法总结

- EXPORT_SYMBOL

- Hive 安装部署及测试

- poj 2063 完全背包

- css之盒子模型案例

- SSD中没有efi分区的解决办法即固态硬盘不能设置uefi引导的解决办法

- Ruby中的变量定义

- 2016百度之星总结帖

- Codeforces 623A Graph and String (构造)

- shader内置函数

- NetBeans8.1开发Spring MVC的基础代码