6-DEADLOCKS

Source: Internet; Published under: unity3d Shanghai training; Editor: Programmer Blog Network (程序博客网); Time: 2024/04/29 16:21

Please indicate the source: http://blog.csdn.net/gaoxiangnumber1

Welcome to my github: https://github.com/gaoxiangnumber1

- Computer systems are full of resources that can be used by only one process at a time. Consequently, all operating systems have the ability to (temporarily) grant a process exclusive access to certain resources.

- A process may need exclusive access to several resources. Suppose two processes each want to record a scanned document on a Blu-ray disc.

  - Process A requests the scanner and is granted it.

  - Process B requests the Blu-ray recorder and is also granted it.

Now:

  - A asks for the Blu-ray recorder, but the request is suspended until B releases it.

  - B asks for the scanner.

At this point both processes are blocked and will remain so forever. This situation is called a deadlock.

- Deadlocks can also occur across machines. If devices (such as Blu-ray recorders) can be reserved remotely (i.e., from the user's home machine), deadlocks of the same kind as described above can occur.

6.1 RESOURCES

- A resource can be a hardware device (e.g., a Blu-ray drive) or a piece of information (e.g., a record in a database). A computer will normally have many different resources that a process can acquire.

- For some resources, several identical instances may be available, such as three Blu-ray drives. When several copies of a resource are available, any one of them can be used to satisfy any request for the resource. In short, a resource is anything that must be acquired, used, and released over the course of time.

6.1.1 Preemptable and Nonpreemptable Resources

- There are two kinds of resources: preemptable and nonpreemptable.

- A preemptable resource is one that can be taken away from the process owning it with no ill effects.

- Memory is a preemptable resource. Consider a system with 1 GB of user memory, one printer, and two 1-GB processes that each want to print something.

  - Process A requests and gets the printer, then starts to compute the values to print. Before it has finished the computation, it exceeds its time quantum and is swapped out to disk.

  - Process B now runs and tries, unsuccessfully as it turns out, to acquire the printer.

- Potentially, we now have a deadlock situation, because A has the printer and B has the memory, and neither one can proceed without the resource held by the other. But it is possible to preempt (take away) the memory from B by swapping it out and swapping A in. Now A can run, do its printing, and then release the printer. No deadlock occurs.

- A nonpreemptable resource is one that cannot be taken away from its current owner without potentially causing failure. If a process has begun to burn a Blu-ray, suddenly taking the Blu-ray recorder away from it and giving it to another process will result in a garbled Blu-ray. Blu-ray recorders are not preemptable at an arbitrary moment.

- Whether a resource is preemptable depends on the context. On a standard PC, memory is preemptable because pages can always be swapped out to disk to recover it. However, on a smartphone that does not support swapping or paging, deadlocks can’t be avoided by just swapping out a memory hog.

- In general, deadlocks involve nonpreemptable resources. Potential deadlocks that involve preemptable resources can usually be resolved by reallocating resources from one process to another. Thus, our treatment will focus on nonpreemptable resources.

- The abstract sequence of events required to use a resource is given below.

  - Request the resource.

  - Use the resource.

  - Release the resource.

- If the resource is not available when it is requested, the requesting process is forced to wait. In some operating systems, the process is automatically blocked when a resource request fails, and awakened when it becomes available. In other systems, the request fails with an error code, and it is up to the calling process to wait a little while and try again.

- A process whose resource request has just been denied will normally sit in a tight loop requesting the resource, then sleeping, then trying again. Although this process is not blocked, for all intents and purposes it is as good as blocked, because it cannot do any useful work. In our further treatment, we will assume that when a process is denied a resource request, it is put to sleep.
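A minimal Python sketch of that poll-and-sleep loop, assuming a `threading.Lock` stands in for the resource and `acquire(blocking=False)` plays the role of a request that fails with an error code instead of blocking. The helper name `acquire_with_retry` and its `delay`/`max_tries` parameters are hypothetical, chosen only for illustration.

```python
import threading
import time


def acquire_with_retry(lock: threading.Lock, delay: float = 0.01, max_tries: int = 100) -> bool:
    """Request a resource, sleep, and try again (hypothetical helper).

    Returns True once the lock is obtained, or False if it is still
    unavailable after max_tries attempts.
    """
    for _ in range(max_tries):
        if lock.acquire(blocking=False):  # the request fails at once instead of blocking
            return True
        time.sleep(delay)                 # wait a little while before retrying
    return False


# Usage: another thread holds the resource and releases it after 50 ms.
resource = threading.Lock()
resource.acquire()
threading.Timer(0.05, resource.release).start()
print(acquire_with_retry(resource))  # True
```

While it polls, the caller does no useful work, which is why the text treats it as effectively blocked.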

- The nature of requesting a resource is highly system dependent. In some systems, a request system call is provided to allow processes to explicitly ask for resources. In others, the only resources that the operating system knows about are special files that only one process can have open at a time. These are opened by the usual open call. If the file is already in use, the caller is blocked until its current owner closes it.

6.1.2 Resource Acquisition

- For some kinds of resources, such as records in a database system, it is up to the user processes rather than the system to manage resource usage themselves.

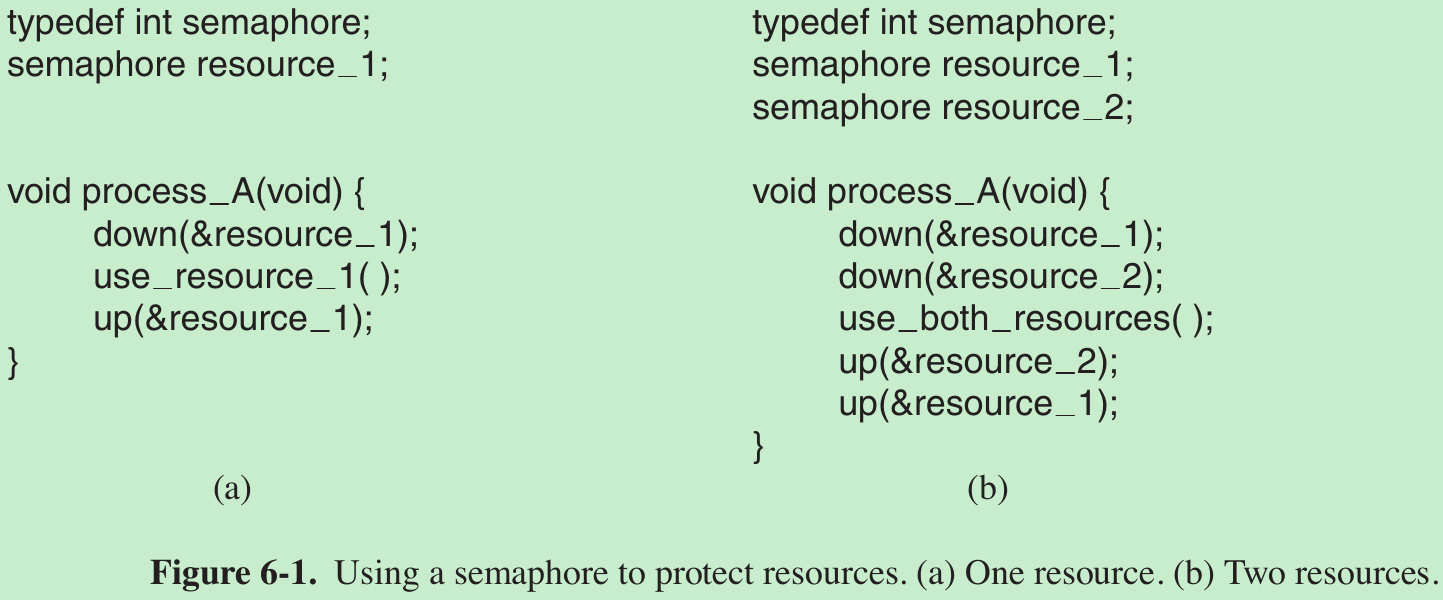

- One way of allowing this is to associate a semaphore with each resource. These semaphores are all initialized to 1. Mutexes can be used equally well. The three steps listed above are then implemented as a down on the semaphore to acquire the resource, the use of the resource, and finally an up on the semaphore to release it. These steps are shown in Fig. 6-1(a).

- Sometimes processes need two or more resources. They can be acquired sequentially, as shown in Fig. 6-1(b). If more than two resources are needed, they are just acquired one after another.
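A Python rendering of Fig. 6-1, assuming `threading.Semaphore(1)` stands in for each semaphore and `acquire`/`release` for down/up. The function names and the `log` list are hypothetical; the comments mirror the figure's pseudocode.

```python
import threading

# One semaphore per resource, initialized to 1 (a mutex would work equally well).
resource_1 = threading.Semaphore(1)
resource_2 = threading.Semaphore(1)
log: list[str] = []


def use_one_resource() -> None:
    """Fig. 6-1(a): down, use, up."""
    resource_1.acquire()          # down(&resource_1)
    try:
        log.append("using resource 1")
    finally:
        resource_1.release()      # up(&resource_1)


def use_two_resources() -> None:
    """Fig. 6-1(b): acquire the resources one after another, then release both."""
    resource_1.acquire()          # down(&resource_1)
    resource_2.acquire()          # down(&resource_2)
    try:
        log.append("using both resources")
    finally:
        resource_2.release()      # up(&resource_2)
        resource_1.release()      # up(&resource_1)


use_one_resource()
use_two_resources()
print(log)
```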

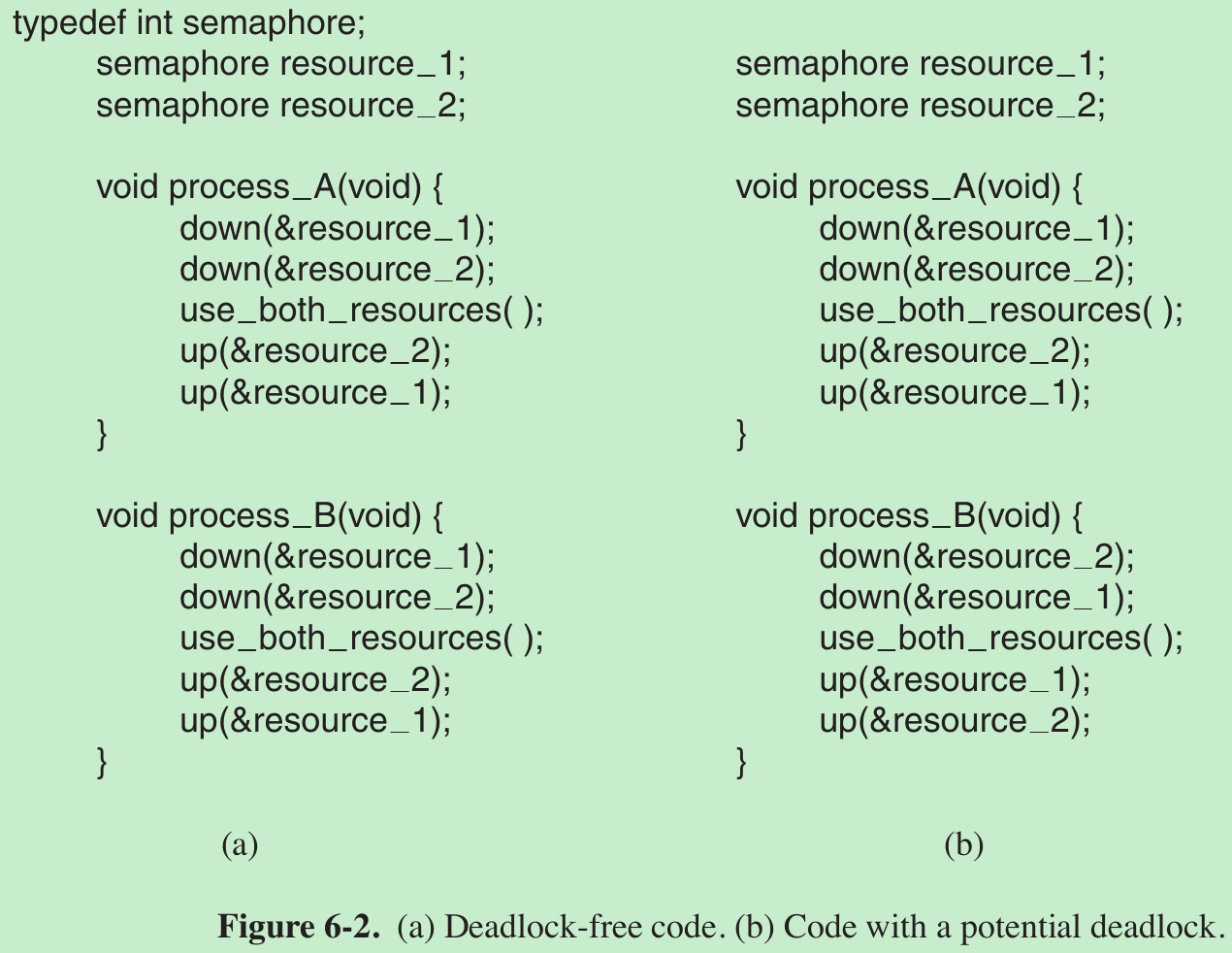

- Consider a situation with two processes, A and B, and two resources. Two scenarios are depicted in Fig. 6-2.

- In Fig. 6-2(a), both processes ask for the resources in the same order. One of the processes will acquire the first resource before the other one. That process will then successfully acquire the second resource and do its work. If the other process attempts to acquire resource 1 before it has been released, the other process will block until it becomes available.

- In Fig. 6-2(b), they ask for them in a different order. It is possible that process A acquires resource 1 and process B acquires resource 2. Each one will now block when trying to acquire the other one. Neither process will ever run again. So this situation is a deadlock.
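The two scenarios of Fig. 6-2 can be reproduced in Python, assuming `threading.Lock` objects stand in for the two resources and threads for the processes. In the second function a `threading.Barrier` forces the unlucky interleaving, and a timeout replaces the infinite wait so the demo reports the deadlock instead of hanging. The function names are hypothetical.

```python
import threading


def same_order() -> list[str]:
    """Fig. 6-2(a): both processes take resource 1 first, then resource 2."""
    r1, r2 = threading.Lock(), threading.Lock()
    finished: list[str] = []

    def process(name: str) -> None:
        with r1:            # whoever gets r1 first also gets r2; the other waits
            with r2:
                finished.append(name)

    threads = [threading.Thread(target=process, args=(n,)) for n in ("A", "B")]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return sorted(finished)


def different_order(timeout: float = 0.2) -> dict[str, bool]:
    """Fig. 6-2(b): A takes r1 then r2, B takes r2 then r1.

    Returns, for each process, whether it obtained its second resource.
    """
    r1, r2 = threading.Lock(), threading.Lock()
    both_hold_first = threading.Barrier(2)   # force the unlucky interleaving
    both_have_tried = threading.Barrier(2)   # nobody lets go before both have tried
    got_second: dict[str, bool] = {}

    def process(name: str, first: threading.Lock, second: threading.Lock) -> None:
        with first:
            both_hold_first.wait()                 # now A holds r1 and B holds r2
            ok = second.acquire(timeout=timeout)   # without a timeout: wait forever
            got_second[name] = ok
            both_have_tried.wait()
            if ok:
                second.release()

    a = threading.Thread(target=process, args=("A", r1, r2))
    b = threading.Thread(target=process, args=("B", r2, r1))
    a.start()
    b.start()
    a.join()
    b.join()
    return got_second


print(same_order())                        # ['A', 'B']
print(sorted(different_order().items()))   # [('A', False), ('B', False)]
```

Because neither thread releases its first lock until both have tried for their second, both attempts fail every time: the deadlock of Fig. 6-2(b), made visible by the timeout.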

6.2 INTRODUCTION TO DEADLOCKS

- Deadlock definition:

A set of processes is deadlocked if each process in the set is waiting for an event that only another process in the set can cause.

- Because all the processes are waiting, none of them will cause any event that could wake up any of the other members of the set, and all the processes continue to wait forever.

- For this model, we assume that processes are single threaded and that no interrupts are possible to wake up a blocked process. The no-interrupts condition is needed to prevent an otherwise deadlocked process from being awakened by an alarm, and then causing events that release other processes in the set.

- In most cases, the event that each process is waiting for is the release of some resource currently possessed by another member of the set. In other words, each member of the set of deadlocked processes is waiting for a resource that is owned by a deadlocked process. This result holds for any kind of resource, including both hardware and software. This kind of deadlock is called a resource deadlock.

6.2.1 Conditions for Resource Deadlocks

- Four conditions must hold for there to be a (resource) deadlock:

  - Mutual exclusion condition. Each resource is either currently assigned to exactly one process or is available.

  - Hold-and-wait condition. Processes currently holding resources that were granted earlier can request new resources.

  - No-preemption condition. Resources previously granted cannot be forcibly taken away from a process. They must be explicitly released by the process holding them.

  - Circular wait condition. There must be a circular list of two or more processes, each of which is waiting for a resource held by the next member of the chain.

6.2.2 Deadlock Modeling

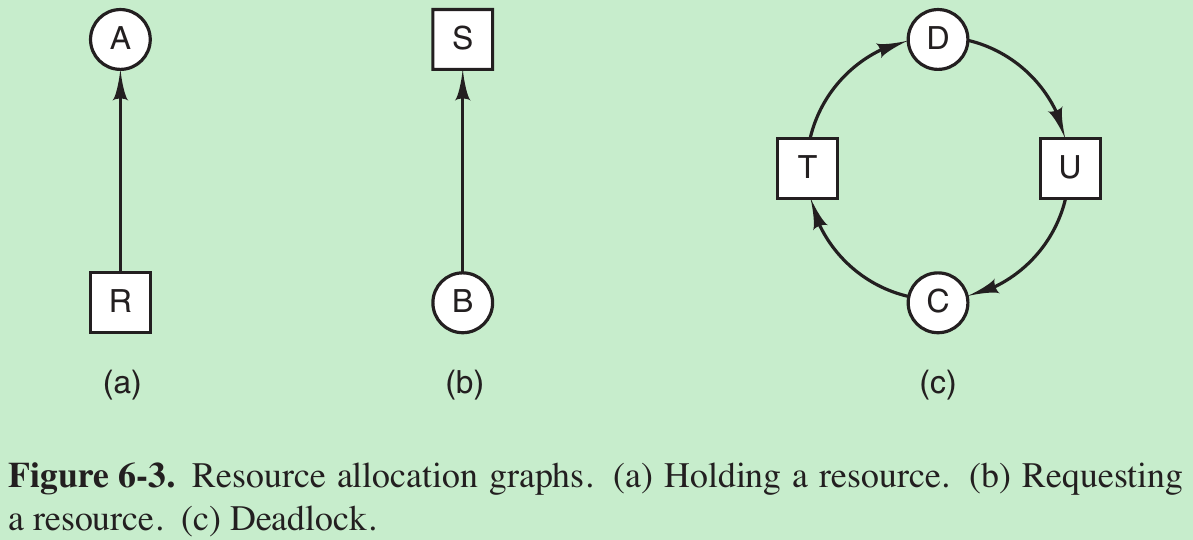

- Use directed graphs to model these four conditions:

  - circles: processes;

  - squares: resources;

  - resource -> process arc: the resource has previously been requested by, granted to, and is currently held by that process;

  - process -> resource arc: the process is currently blocked waiting for that resource.

- A cycle in the graph means that there is a deadlock involving the processes and resources in the cycle (assuming that there is one resource of each kind).

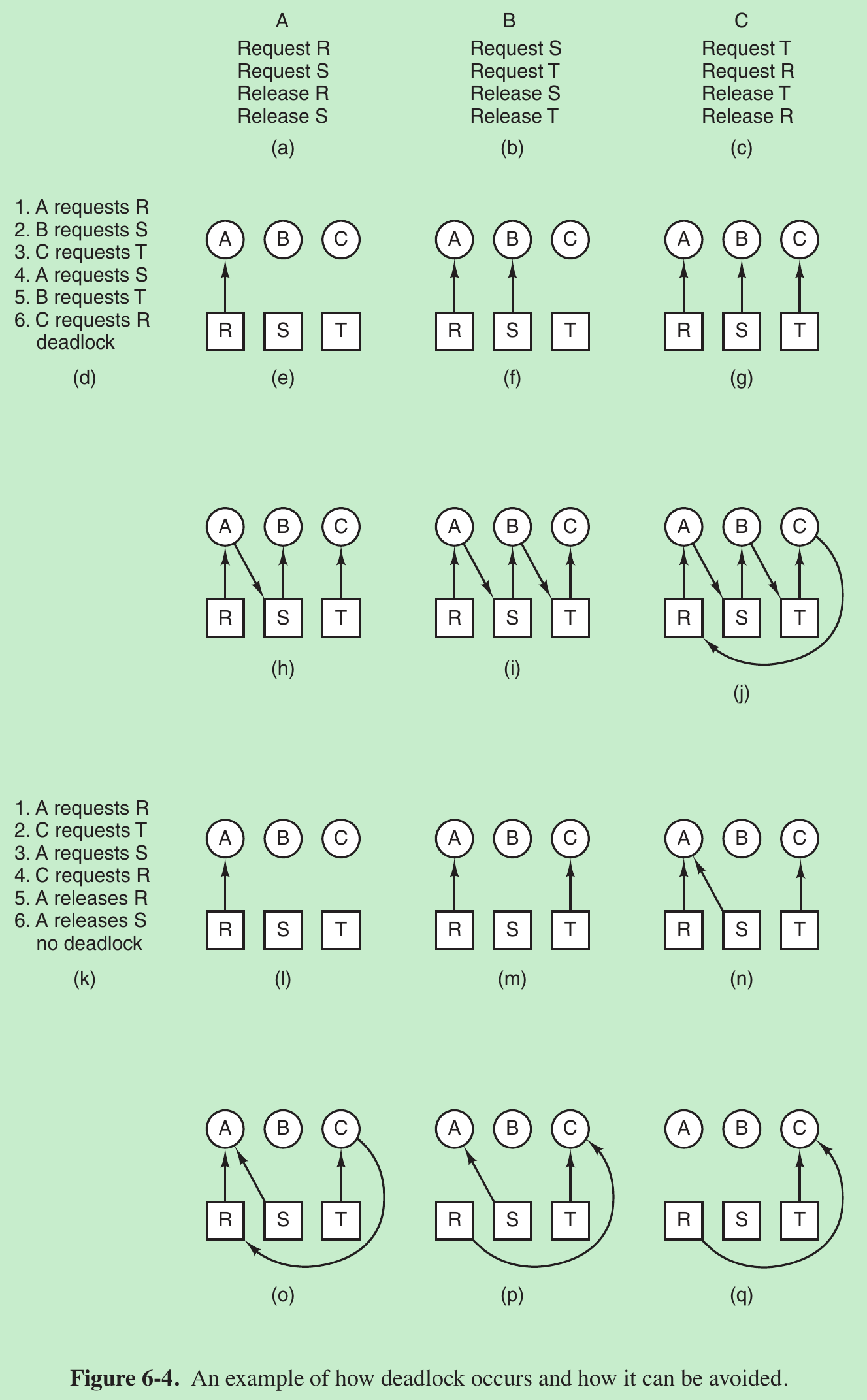

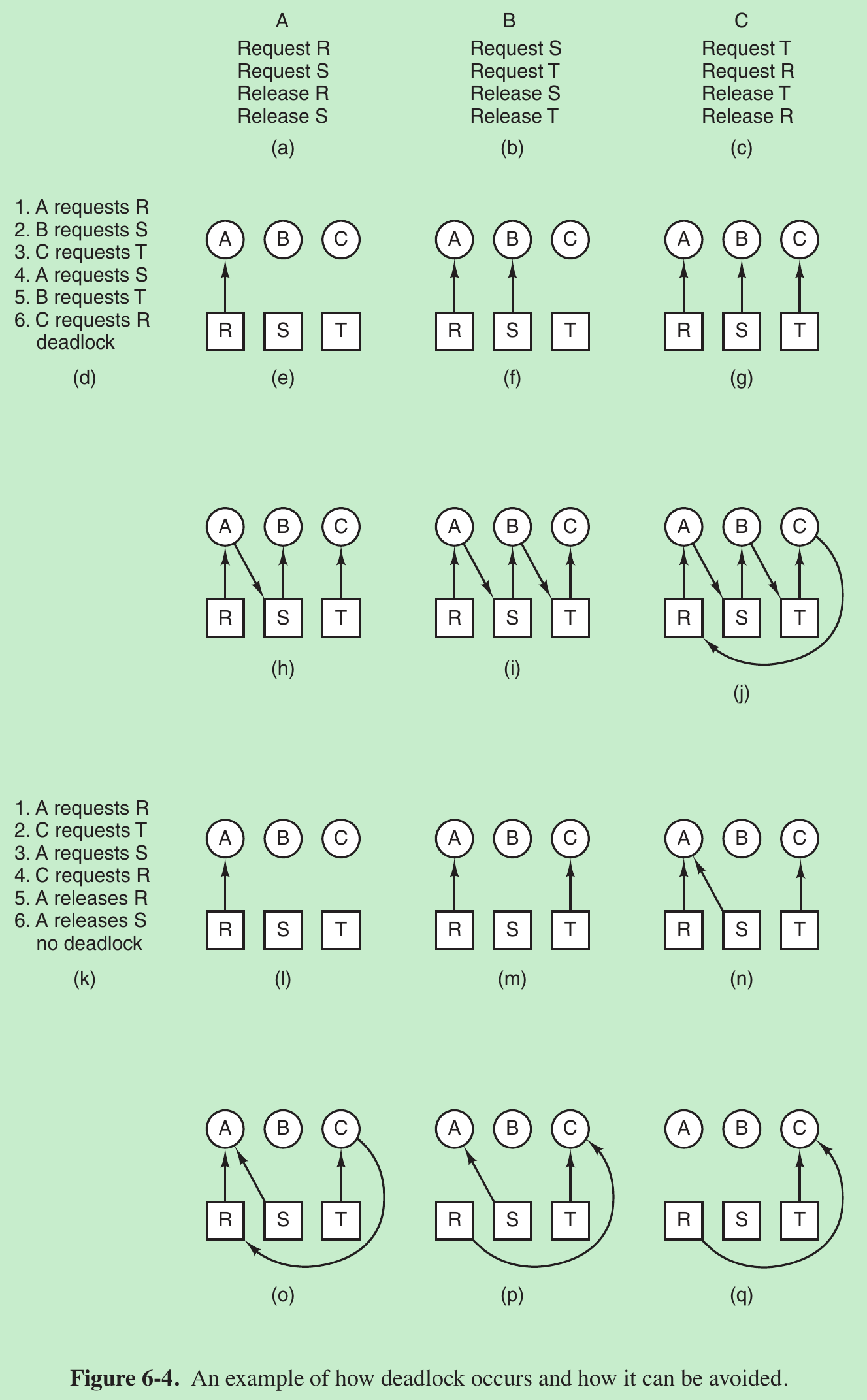

- Imagine that we have three processes, A, B and C, and three resources, R, S and T. The requests and releases of the three processes are given in Fig. 6-4(a)–(c). The operating system is free to run any unblocked process at any instant, so it could decide to run A until A finished all its work, then run B to completion, and finally run C.

- This ordering does not lead to any deadlocks but it also has no parallelism at all. In addition to requesting and releasing resources, processes compute and do I/O. When the processes are run sequentially, there is no possibility that while one process is waiting for I/O, another can use the CPU. Thus, running the processes strictly sequentially may not be optimal.

- Suppose the processes do both I/O and computing. The resource requests might occur in the order of Fig. 6-4(d). If these six requests are carried out in that order, the six resulting resource graphs are as shown in Fig. 6-4(e)–(j).

- However, the operating system is not required to run the processes in any special order. In particular, if granting a particular request might lead to deadlock, the operating system can suspend the process without granting the request (i.e., not schedule the process) until it is safe.

- In Fig. 6-4, if the operating system knew about the impending deadlock, it could suspend B instead of granting it S. By running only A and C, we would get the requests and releases of Fig. 6-4(k) instead of Fig. 6-4(d).

- This sequence leads to the resource graphs of Fig. 6-4(l)–(q), which do not lead to deadlock. After step (q), process B can be granted S because A is finished and C has everything it needs. Even if B blocks when requesting T, no deadlock can occur. B will just wait until C is finished.
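The following Python sketch replays both request orders, assuming they are the ones printed in Fig. 6-4(d) and 6-4(k) of the book (A uses R then S, B uses S then T, C uses T then R). It keeps one instance per resource, blocks a process whose request cannot be met, hands a released resource to the first waiter, and reports processes left on a wait-for cycle. The names `replay` and `deadlocked` are hypothetical.

```python
Step = tuple[str, str, str]  # (process, "request" or "release", resource)


def deadlocked(holder: dict[str, str], waiting: dict[str, str]) -> set[str]:
    """Processes on a wait-for cycle, assuming one instance per resource."""
    found: set[str] = set()
    for start in waiting:
        chain: list[str] = []
        p = start
        while p in waiting and p not in chain:
            chain.append(p)
            p = holder[waiting[p]]      # the process holding what p wants
        if p in chain:                  # we came back around: a cycle
            found.update(chain[chain.index(p):])
    return found


def replay(steps: list[Step]) -> set[str]:
    """Carry out the steps in order and return the deadlocked processes."""
    holder: dict[str, str] = {}         # resource -> process holding it
    waiting: dict[str, str] = {}        # blocked process -> resource it wants
    queue: dict[str, list[str]] = {}    # resource -> blocked processes, FIFO
    for proc, action, res in steps:
        assert proc not in waiting, f"{proc} is blocked and cannot run"
        if action == "request":
            if res in holder:
                waiting[proc] = res
                queue.setdefault(res, []).append(proc)
            else:
                holder[res] = proc
        else:                           # release: wake the first waiter, if any
            del holder[res]
            if queue.get(res):
                nxt = queue[res].pop(0)
                holder[res] = nxt
                del waiting[nxt]
    return deadlocked(holder, waiting)


fig_d = [("A", "request", "R"), ("B", "request", "S"), ("C", "request", "T"),
         ("A", "request", "S"), ("B", "request", "T"), ("C", "request", "R")]
fig_k = [("A", "request", "R"), ("C", "request", "T"), ("A", "request", "S"),
         ("C", "request", "R"), ("A", "release", "R"), ("A", "release", "S")]
print(sorted(replay(fig_d)))  # ['A', 'B', 'C']
print(sorted(replay(fig_k)))  # []
```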

- Four strategies are used for dealing with deadlocks:

  - Just ignore the problem.

  - Detection and recovery. Let deadlocks occur, detect them, and take action.

  - Dynamic avoidance by careful resource allocation.

  - Prevention, by structurally negating one of the four conditions.

6.3 THE OSTRICH ALGORITHM

- The simplest approach is the ostrich algorithm: stick your head in the sand and pretend there is no problem. Engineers ask how often the problem is expected, how often the system crashes for other reasons, and how serious a deadlock is. If deadlocks occur on the average once every five years, but system crashes due to hardware failures and operating system bugs occur once a week, most engineers would not be willing to pay a large penalty in performance or convenience to eliminate deadlocks.

6.4 DEADLOCK DETECTION AND RECOVERY

- When the detection-and-recovery technique is used, the system does not attempt to prevent deadlocks from occurring. Instead, it lets them occur, tries to detect when this happens, and then takes some action to recover after the fact.

6.4.1 Deadlock Detection with One Resource of Each Type

- Assume there is only one resource of each type. In other words, we are excluding systems with two printers for the moment.

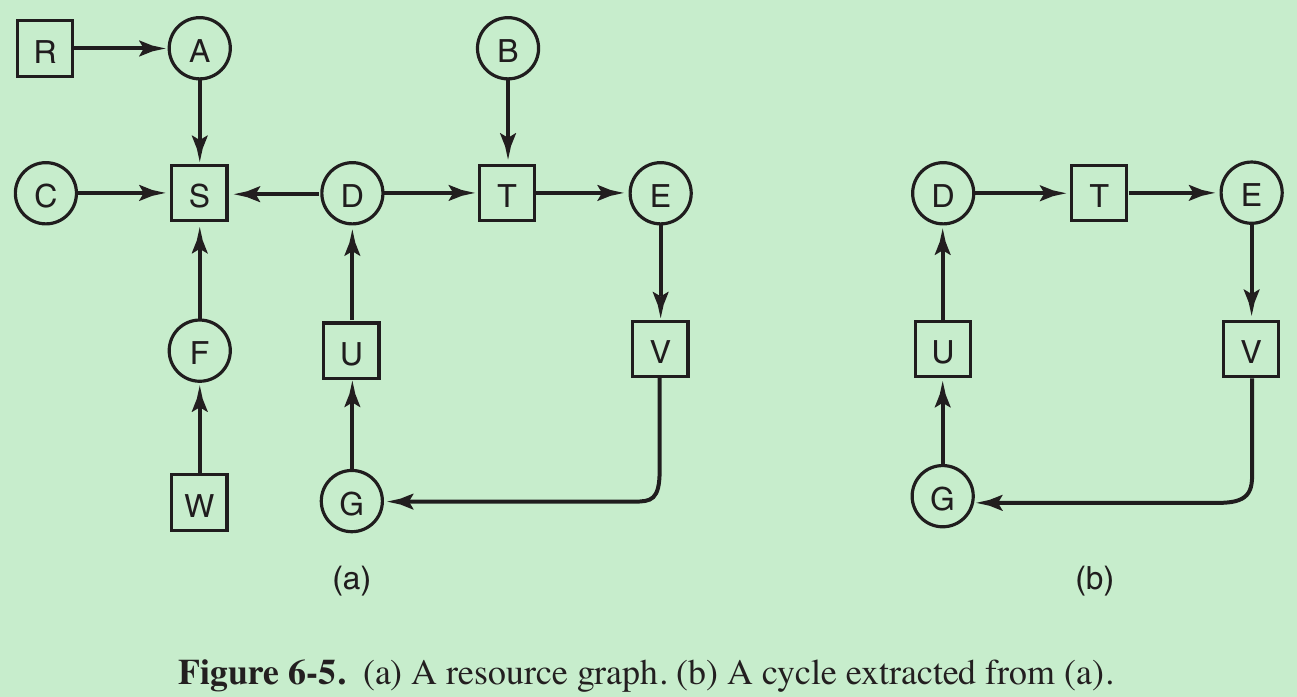

- Consider a system with seven processes, A through G, and six resources, R through W. The state of which resources are currently owned and which ones are currently being requested is as follows:

- Process A holds R and wants S.

- Process B holds nothing but wants T.

- Process C holds nothing but wants S.

- Process D holds U and wants S and T.

- Process E holds T and wants V.

- Process F holds W and wants S.

- Process G holds V and wants U.

- The resource graph for this state is shown in Fig. 6-5(a). It contains one cycle, shown in Fig. 6-5(b): D -> T -> E -> V -> G -> U -> D. From this cycle, we can see that processes D, E, and G are all deadlocked. Processes A, C, and F are not deadlocked because S can be allocated to any one of them, which then finishes and returns it. Then the other two can take it in turn and also complete.

- The following algorithm uses one dynamic data structure, L, a list of nodes, as well as a list of arcs. During the algorithm, to prevent repeated inspections, arcs will be marked to indicate that they have already been inspected. The algorithm operates by carrying out the following steps as specified:

  1. For each node, N, in the graph, perform the following five steps with N as the starting node.

  2. Initialize L to the empty list, and designate all the arcs as unmarked.

  3. Add the current node to the end of L and check to see if the node now appears in L two times. If it does, the graph contains a cycle (listed in L) and the algorithm terminates.

  4. From the given node, see if there are any unmarked outgoing arcs. If so, go to step 5; if not, go to step 6.

  5. Pick an unmarked outgoing arc at random and mark it. Then follow it to the new current node and go to step 3.

  6. If this node is the initial node, no cycle is reachable from N, and the search starting at N terminates. Otherwise, we have now reached a dead end. Remove it from L and go back to the previous node, that is, the one that was current just before this one, make that one the current node, and go to step 4 (going to step 3 would add that node to L a second time and falsely report a cycle).

- What this algorithm does is take each node as the root of what it hopes will be a tree, and do a depth-first search on it.

  - If it comes back to a node it has already encountered, then it has found a cycle.

  - If it exhausts all the arcs from any given node, it backtracks to the previous node.

  - If it backtracks to the root and cannot go further, the subgraph reachable from the current node does not contain any cycles. If this property holds for all nodes, the entire graph is cycle free, so the system is not deadlocked.
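A Python sketch of this depth-first search, run on the state of Fig. 6-5. The graph is a dictionary from each node to the targets of its outgoing arcs, with processes and resources as nodes of the same graph. Two deliberate simplifications, both assumptions: the sketch follows the first unmarked arc rather than a random one, and it checks for a repeated node just before appending rather than just after. `find_cycle` is a hypothetical name.

```python
from typing import Optional


def find_cycle(graph: dict[str, list[str]]) -> Optional[list[str]]:
    """Depth-first search from every node; return a cycle as a node list, or None."""
    for root in graph:                               # step 1: each node as the start
        path = [root]                                # steps 2-3: L holds the root
        marked: set[tuple[str, str]] = set()         # step 2: all arcs unmarked
        while path:
            node = path[-1]
            # Step 4: look for an unmarked outgoing arc (first one, not a random one).
            target = next((t for t in graph.get(node, []) if (node, t) not in marked), None)
            if target is None:
                path.pop()                           # step 6: dead end, backtrack
                continue
            marked.add((node, target))               # step 5: mark the arc and follow it
            if target in path:                       # step 3: node would appear twice in L
                return path[path.index(target):] + [target]
            path.append(target)
    return None


# Fig. 6-5(a): resource -> process means "held by"; process -> resource means "wants".
fig_6_5 = {
    "R": ["A"], "A": ["S"],
    "B": ["T"],
    "C": ["S"],
    "U": ["D"], "D": ["S", "T"],
    "T": ["E"], "E": ["V"],
    "W": ["F"], "F": ["S"],
    "V": ["G"], "G": ["U"],
}
cycle = find_cycle(fig_6_5)
print(cycle)  # ['T', 'E', 'V', 'G', 'U', 'D', 'T']
```

The processes on the returned cycle (D, E, and G) are exactly the deadlocked ones identified above.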

6.4.2 Deadlock Detection with Multiple Resources of Each Type

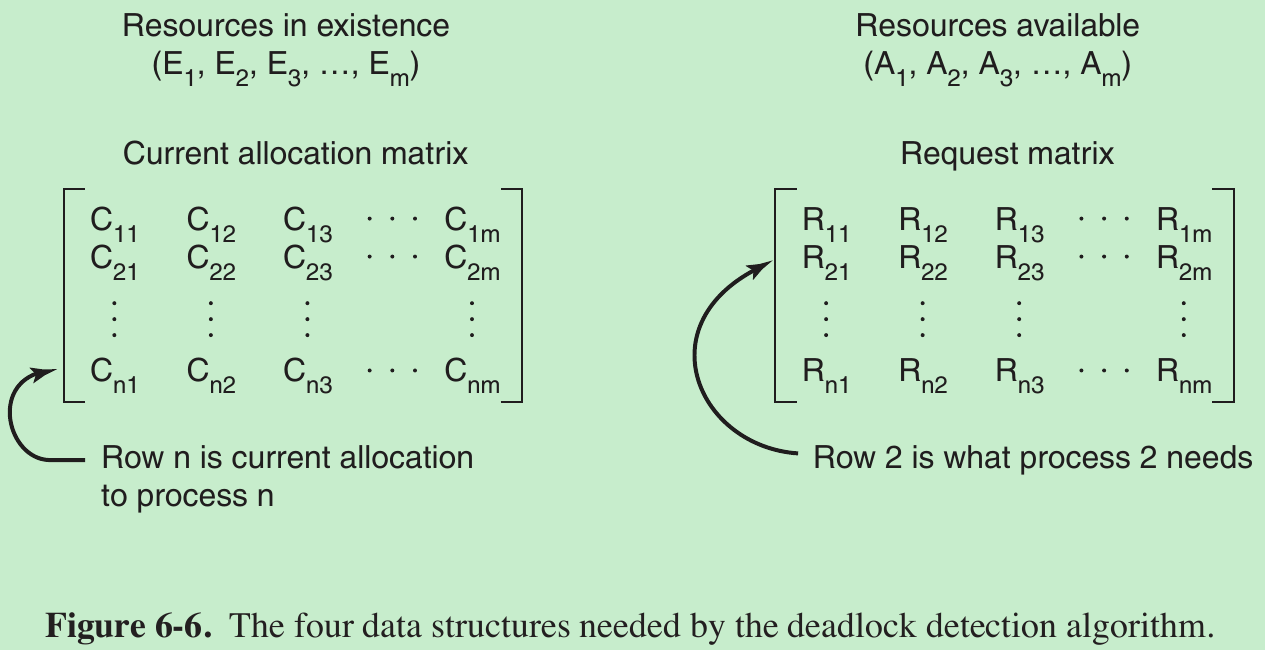

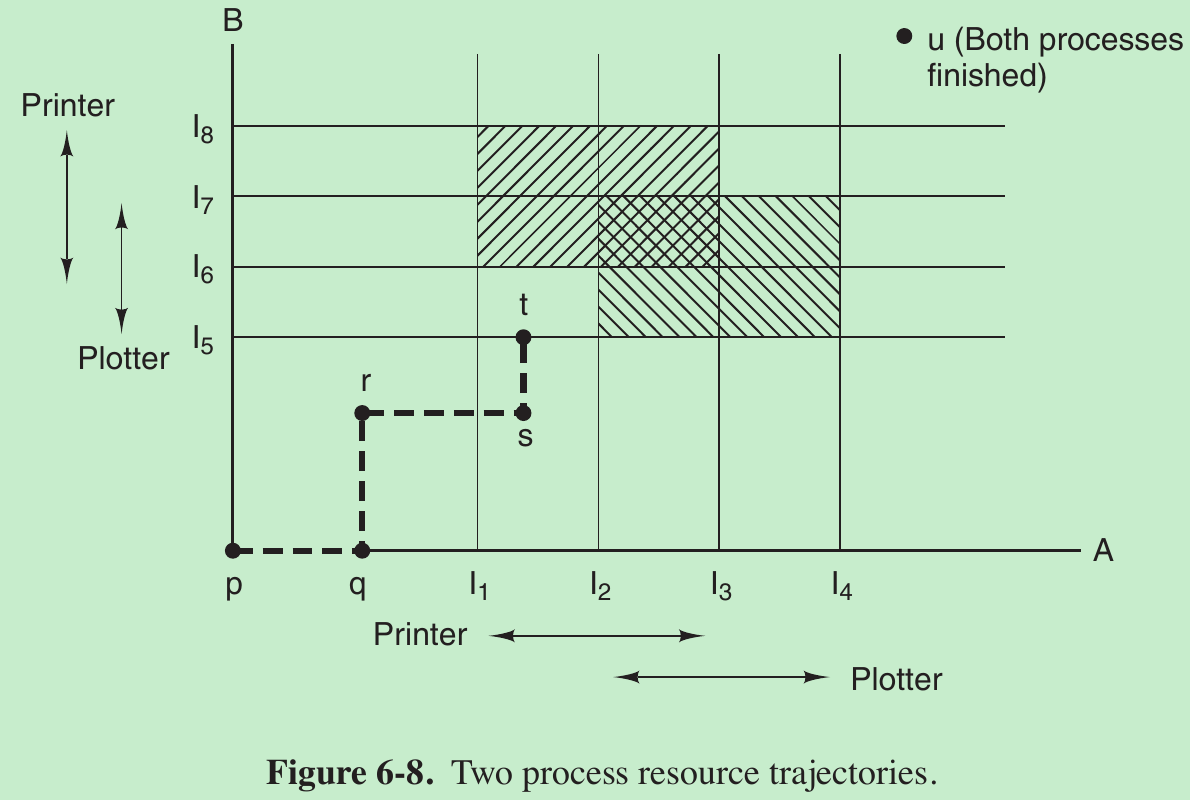

- When multiple copies of some of the resources exist, a different approach is needed: a matrix-based algorithm for detecting deadlock among n processes, P1 through Pn.

- Let the number of resource classes be m, with E1 resources of class 1, E2 resources of class 2, and generally, Ei resources of class i (1 ≤ i ≤ m). E is the existing resource vector. It gives the total number of instances of each resource in existence. For example, if class 1 is tape drives, then E1 = 2 means the system has two tape drives.

- At any instant, some of the resources are assigned and are not available. Let A be the available resource vector, with Ai giving the number of instances of resource i that are currently available (i.e., unassigned). If both of our two tape drives are assigned, A1 will be 0.

- We also need two arrays: C, the current allocation matrix, and R, the request matrix. Cij is the number of instances of resource j that are held by process i, so the ith row of C tells how many instances of each resource class Pi currently holds. Rij is the number of instances of resource j that Pi wants.

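- An important invariant holds for these four data structures. In particular, every resource is either allocated or is available. This observation means that if we add up all the instances of resource j that have been allocated, and add to this all the instances that are available, the result is the number of instances of that resource class that exist:

$$\sum_{i=1}^{n} C_{ij} + A_j = E_j$$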

- The deadlock detection algorithm is based on comparing vectors. Define the relation A ≤ B on two vectors A and B to mean that each element of A is less than or equal to the corresponding element of B. A ≤ B holds if and only if Ai ≤ Bi for 1 ≤ i ≤ m.

- Each process is initially unmarked. As the algorithm progresses, processes will be marked, indicating that they are able to complete and are thus not deadlocked. When the algorithm terminates, any unmarked processes are known to be deadlocked. This algorithm assumes that all processes keep all acquired resources until they exit.

- The deadlock detection algorithm can now be given as follows.

  1. Look for an unmarked process, Pi, for which the ith row of R ≤ A.

  2. If such a process is found, add the ith row of C to A, mark the process, and go back to step 1.

  3. If no such process exists, the algorithm terminates. When the algorithm finishes, all the unmarked processes, if any, are deadlocked.

- What the algorithm does is look for a process that can be run to completion. Such a process is characterized as having resource demands that can be met by the currently available resources. The selected process is then run until it finishes, at which time it returns the resources it is holding to the pool of available resources. It is then marked as completed. If all the processes are ultimately able to run to completion, none of them are deadlocked. If some of them can never finish, they are deadlocked. Although the algorithm is nondeterministic (because it may run the processes in any feasible order), the result is always the same.

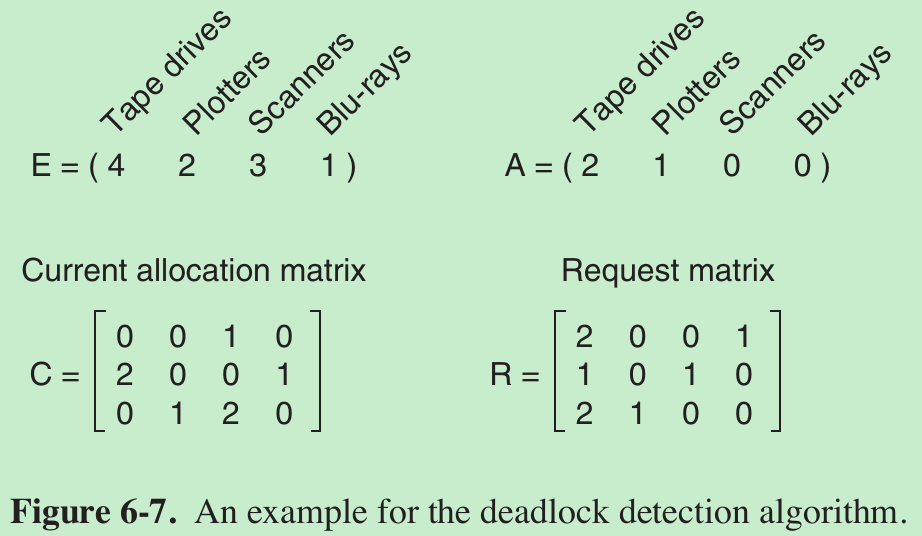

- Example: 3 processes and 4 resource classes (tape drives, plotters, scanners, and Blu-ray drives).

  - Process 1 has 1 scanner.

  - Process 2 has 2 tape drives and 1 Blu-ray drive.

  - Process 3 has 1 plotter and 2 scanners.

Each process needs additional resources, as shown by the R matrix.

- To run the deadlock detection algorithm, we look for a process whose resource request can be satisfied. The first request cannot be satisfied because no Blu-ray drive is available, and the second cannot be satisfied because no scanner is free. The third can be satisfied, so process 3 runs and eventually returns all its resources, giving

A = (2 2 2 0)

- At this point process 2 can run and return its resources, giving

A = (4 2 2 1)

- Now the remaining process can run. There is no deadlock in the system.

- Now suppose that process 3 needs 1 Blu-ray drive as well as the 2 tape drives and the plotter. None of the requests can be satisfied, so the entire system will eventually be deadlocked.
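A Python sketch of the matrix-based detection algorithm, run on this example. The column order (tape drives, plotters, scanners, Blu-ray drives), the E vector, and the R matrix are assumptions taken from the book's figure for this example (Fig. 6-7); they are consistent with the A vectors in the walk-through above, but check them against the image. `detect_deadlock` is a hypothetical name, and it reports processes by their 1-based numbers.

```python
def detect_deadlock(available: list[int], allocation: list[list[int]],
                    request: list[list[int]]) -> list[int]:
    """Matrix-based detection; returns 1-based numbers of deadlocked processes."""
    work = list(available)                   # copy of A, grows as processes finish
    marked = [False] * len(allocation)
    progress = True
    while progress:
        progress = False
        for i in range(len(allocation)):
            # Step 1: an unmarked process whose row of R is <= A.
            if not marked[i] and all(r <= a for r, a in zip(request[i], work)):
                # Step 2: it can finish, so add its row of C back into A.
                work = [a + c for a, c in zip(work, allocation[i])]
                marked[i] = True
                progress = True
    # Step 3: whoever is still unmarked is deadlocked.
    return [i + 1 for i, m in enumerate(marked) if not m]


# Columns (assumed order): tape drives, plotters, scanners, Blu-ray drives.
E = [4, 2, 3, 1]
A = [2, 1, 0, 0]
C = [[0, 0, 1, 0],    # process 1: 1 scanner
     [2, 0, 0, 1],    # process 2: 2 tape drives, 1 Blu-ray drive
     [0, 1, 2, 0]]    # process 3: 1 plotter, 2 scanners
R = [[2, 0, 0, 1],
     [1, 0, 1, 0],
     [2, 1, 0, 0]]

# Invariant: allocated + available = existing, for every resource class j.
assert all(sum(row[j] for row in C) + A[j] == E[j] for j in range(len(E)))

print(detect_deadlock(A, C, R))            # []: no deadlock
R_variant = [R[0], R[1], [2, 1, 0, 1]]     # process 3 also wants a Blu-ray drive
print(detect_deadlock(A, C, R_variant))    # [1, 2, 3]
```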

- When to look for deadlocks?

One possibility is to check every time a resource request is made. This detects deadlocks as early as possible, but it is expensive in terms of CPU time. An alternative strategy is to check every k minutes, or perhaps only when the CPU utilization has dropped below some threshold. The reason for considering the CPU utilization is that if enough processes are deadlocked, there will be few runnable processes, and the CPU will often be idle.

6.4.3 Recovery from Deadlock

Recovery through Preemption

- It may be possible to temporarily take a resource away from its current owner and give it to another process. In many cases, manual intervention may be required, especially in batch-processing operating systems running on mainframes.

- For example, to take a laser printer away from its owner, the operator can collect all the sheets already printed and put them in a pile. Then the process can be suspended (marked as not runnable). At this point the printer can be assigned to another process. When that process finishes, the pile of printed sheets can be put back in the printer’s output tray and the original process restarted.

- The ability to take a resource away from a process, have another process use it, and then give it back without the process noticing it is highly dependent on the nature of the resource. Recovering this way is frequently difficult or impossible. Choosing the process to suspend depends largely on which ones have resources that can easily be taken back.

Recovery through Rollback

- If the system designers and machine operators know that deadlocks are likely, they can arrange to have processes checkpointed periodically. Checkpointing a process means that its state is written to a file so that it can be restarted later.

- The checkpoint contains not only the memory image, but also the resource state, in other words, which resources are currently assigned to the process. To be most effective, new checkpoints should not overwrite old ones but should be written to new files, so as the process executes, a whole sequence accumulates.

- When a deadlock is detected, it is easy to see which resources are needed. To do the recovery, a process that owns a needed resource is rolled back to a point in time before it acquired that resource by starting at one of its earlier checkpoints.

- All the work done since the checkpoint is lost (e.g., output printed since the checkpoint must be discarded, since it will be printed again). In effect, the process is reset to an earlier moment when it did not have the resource, which is now assigned to one of the deadlocked processes. If the restarted process tries to acquire the resource again, it will have to wait until it becomes available.

Recovery through Killing Processes

- The crudest but simplest way to break a deadlock is to kill one or more processes. One possibility is to kill a process in the cycle. The other processes may then be able to continue. If this does not help, it can be repeated until the cycle is broken.

- A process not in the cycle can be chosen as the victim in order to release its resources. In this approach, the process to be killed is carefully chosen because it is holding resources that some process in the cycle needs. For example, one process might hold a printer and want a plotter, with another process holding a plotter and wanting a printer. These two are deadlocked. A third process may hold another identical printer and another identical plotter and be running. Killing the third process will release these resources and break the deadlock involving the first two.

- It is best to kill a process that can be rerun from the beginning with no ill effects. For example, a compilation can always be rerun because all it does is read a source file and produce an object file. If it is killed partway through, the first run has no influence on the second run.

- A process that updates a database cannot always be run a second time safely. If the process adds 1 to some field of a table in the database, running it once, killing it, and then running it again will add 2 to the field, which is incorrect.

6.5 DEADLOCK AVOIDANCE

- Up until now, we have assumed that when a process asks for resources, it asks for them all at once. But in most systems, resources are requested one at a time. The system must be able to decide whether granting a resource is safe or not and make the allocation only when it is safe.

6.5.1 Resource Trajectories

the concept of safety

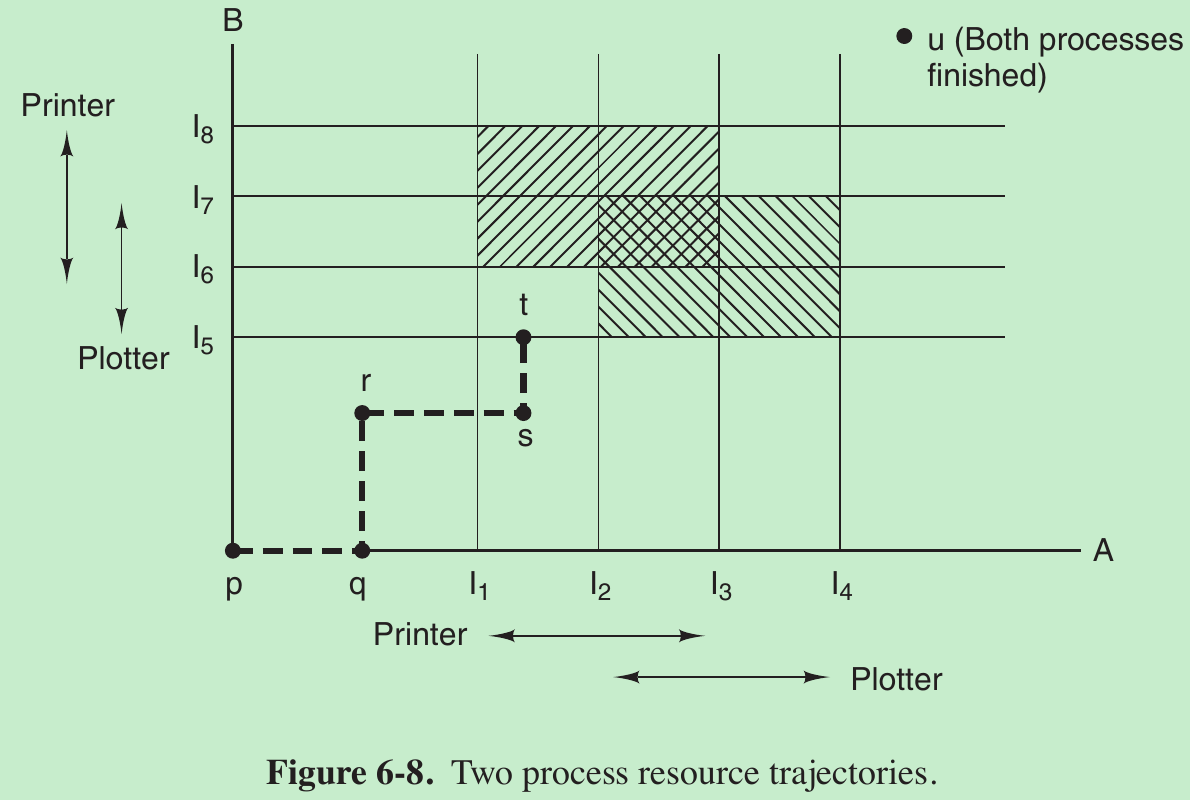

- In Fig. 6-8 we see a model for dealing with two processes (A and B) and two resources (a printer and a plotter).

- The horizontal axis represents the number of instructions executed by process A. The vertical axis represents the number of instructions executed by process B.

- At I1 A requests a printer; at I2 it needs a plotter. The printer and plotter are released at I3 and I4, respectively. Process B needs the plotter from I5 to I7 and the printer from I6 to I8.

- Every point in the diagram represents a joint state of the two processes.

- Initially, the state is at p, with neither process having executed any instructions. If the scheduler chooses to run A first, we get to the point q, in which A has executed some number of instructions, but B has executed none.

- At point q the trajectory becomes vertical, indicating that the scheduler has chosen to run B. With a single processor, all paths must be horizontal or vertical, never diagonal. Furthermore, motion is always to the north or east, never to the south or west because processes cannot run backward in time, of course.

- When A crosses the I1 line on the path from r to s, it requests and is granted the printer. When B reaches point t, it requests the plotter.

- The region with ‘ / ’ lines represents both processes having the printer. The mutual exclusion rule makes it impossible to enter this region. The region shaded the other way represents both processes having the plotter and is equally impossible.

- If the system enters the box bounded by I1 and I2 on the sides and I5 and I6 top and bottom, it will eventually deadlock when it gets to the intersection of I2 and I6. At this point, A is requesting the plotter and B is requesting the printer, and both are already assigned. The entire box is unsafe and must not be entered. At point t the only safe thing to do is run process A until it gets to I4. Beyond that, any trajectory to u will do.

- Note that at point t, B is requesting a resource. The system must decide whether to grant it or not. If the grant is made, the system will enter an unsafe region and eventually deadlock. To avoid the deadlock, B should be suspended until A has requested and released the plotter.

6.5.2 Safe and Unsafe States

- The deadlock avoidance algorithms use the information of Fig. 6-6. At any instant of time, there is a current state consisting of E, A, C, and R.

- A state is said to be safe if there is some scheduling order in which every process can run to completion even if all of them suddenly request their maximum number of resources immediately.

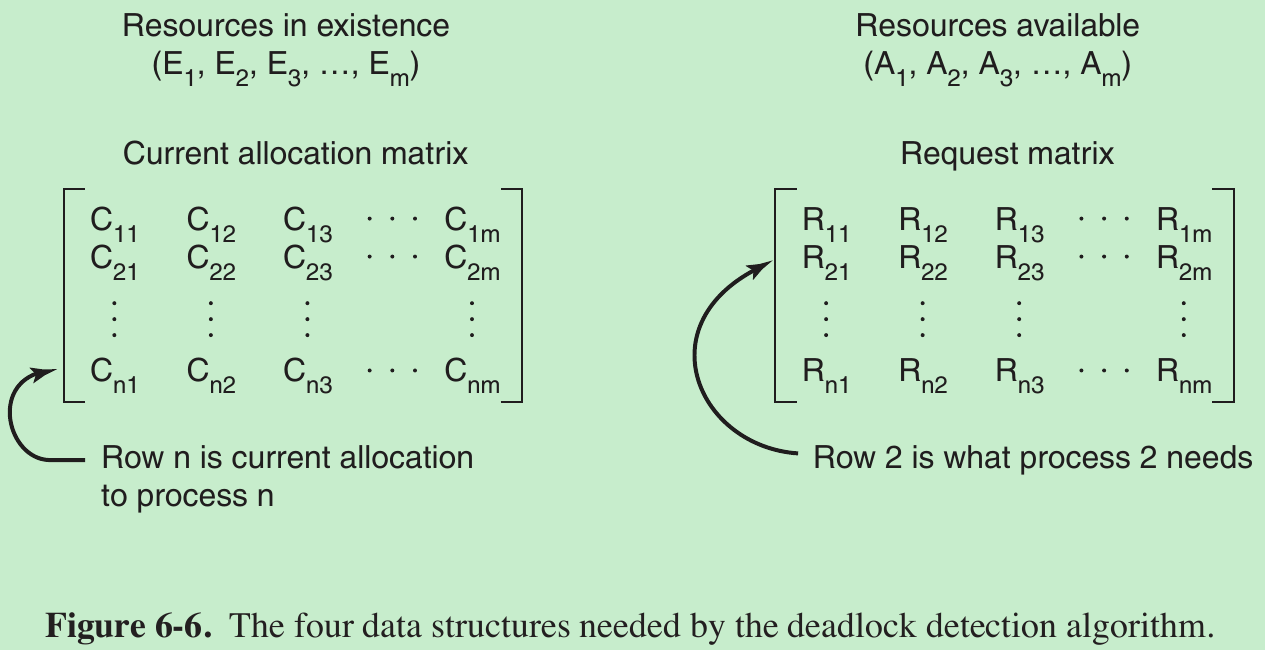

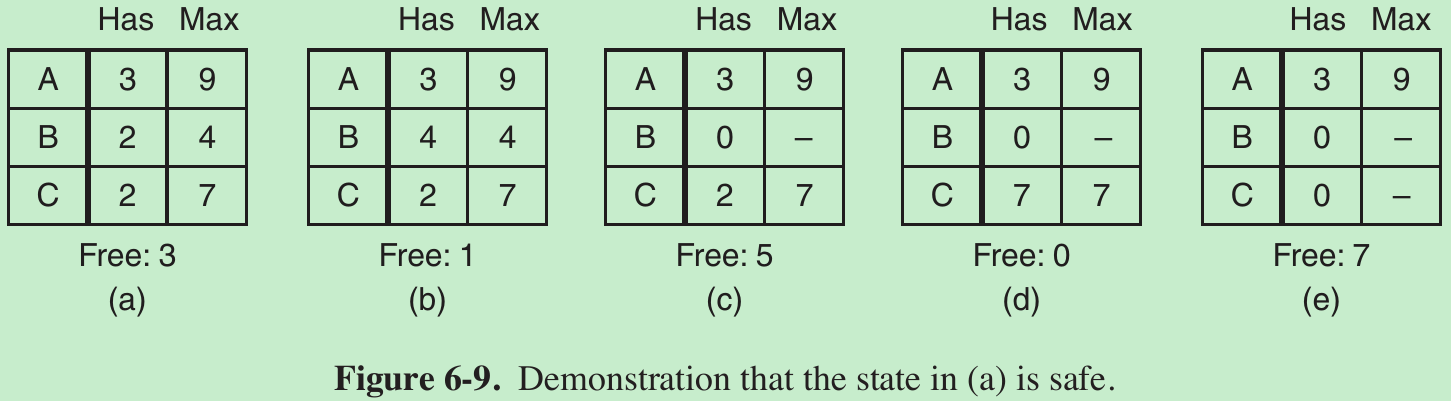

- Fig. 6-9(a): We have a state in which A has 3 instances of the resource but may need as many as 9 eventually. B currently has 2 and may need 4 in total; C has 2 and may need 7. A total of 10 instances of the resource exist, so with 7 already allocated, 3 are still free.

- The state of Fig. 6-9(a) is safe because there exists a sequence of allocations that allows all processes to complete. The scheduler can run B exclusively, until it asks for and gets two more instances of the resource, leading to the state of Fig. 6-9(b). When B completes, we get the state of Fig. 6-9(c). Then the scheduler can run C, leading eventually to Fig. 6-9(d). When C completes, we get Fig. 6-9(e). Now A can get the six instances of the resource it needs and also complete. Thus, the state of Fig. 6-9(a) is safe because the system can avoid deadlock.

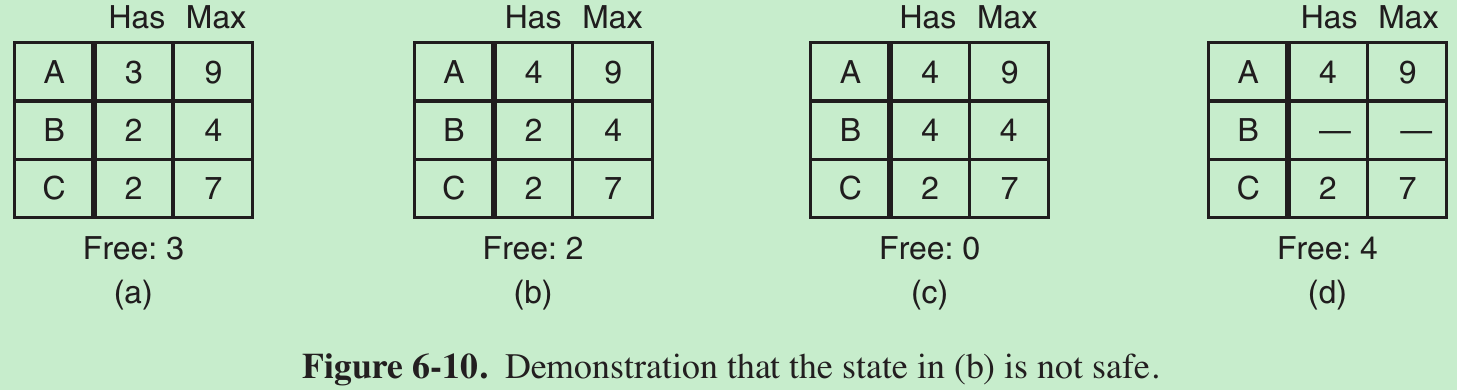

- Suppose we have the initial state shown in Fig. 6-10(a), but this time A requests and gets another resource, giving Fig. 6-10(b). The scheduler could run B until it asked for all its resources, as shown in Fig. 6-10(c). Eventually, B completes and we get the state of Fig. 6-10(d). Now the system is stuck: only 4 instances are free, and each of the remaining processes, A and C, needs 5 more. There is no sequence that guarantees completion. Thus, the allocation decision that moved the system from Fig. 6-10(a) to Fig. 6-10(b) went from a safe to an unsafe state. Running A or C next starting at Fig. 6-10(b) does not work either. So A's request should not have been granted.

- An unsafe state is not a deadlocked state. Starting at Fig. 6-10(b), the system can run for a while. In fact, one process can even complete. It is possible that A might release a resource before asking for any more, allowing C to complete and avoiding deadlock altogether. So the difference between a safe state and an unsafe state is that from a safe state the system can guarantee that all processes will finish; from an unsafe state, no such guarantee can be given.
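The safety test for a single resource type fits in a few lines of Python. This is a sketch under the assumption that each process's maximum need is known in advance; when a process is carried to completion it takes its remaining units and then returns everything, so the free pool grows by what it held. The name `is_safe` is hypothetical, and the numbers are those of Fig. 6-9(a) and Fig. 6-10(b) as described above.

```python
def is_safe(free: int, has: dict[str, int], max_need: dict[str, int]) -> bool:
    """One resource type: can every process finish in some order?"""
    done: set[str] = set()
    while len(done) < len(has):
        # Any unfinished process whose remaining need fits in the free pool.
        runnable = [p for p in has if p not in done and max_need[p] - has[p] <= free]
        if not runnable:
            return False          # no ordering guarantees completion: unsafe
        p = runnable[0]
        # p receives max_need[p] - has[p] units, finishes, and returns max_need[p],
        # so the pool gains exactly has[p].
        free += has[p]
        done.add(p)
    return True


max_need = {"A": 9, "B": 4, "C": 7}
print(is_safe(3, {"A": 3, "B": 2, "C": 2}, max_need))  # True  (Fig. 6-9(a))
print(is_safe(2, {"A": 4, "B": 2, "C": 2}, max_need))  # False (Fig. 6-10(b))
```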

6.5.3 The Banker’s Algorithm for a Single Resource

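- The banker's algorithm is modeled on the way a small-town banker might deal with a group of customers to whom he has granted lines of credit. What the algorithm does is check to see if granting the request leads to an unsafe state. If so, the request is denied. If granting the request leads to a safe state, it is carried out.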

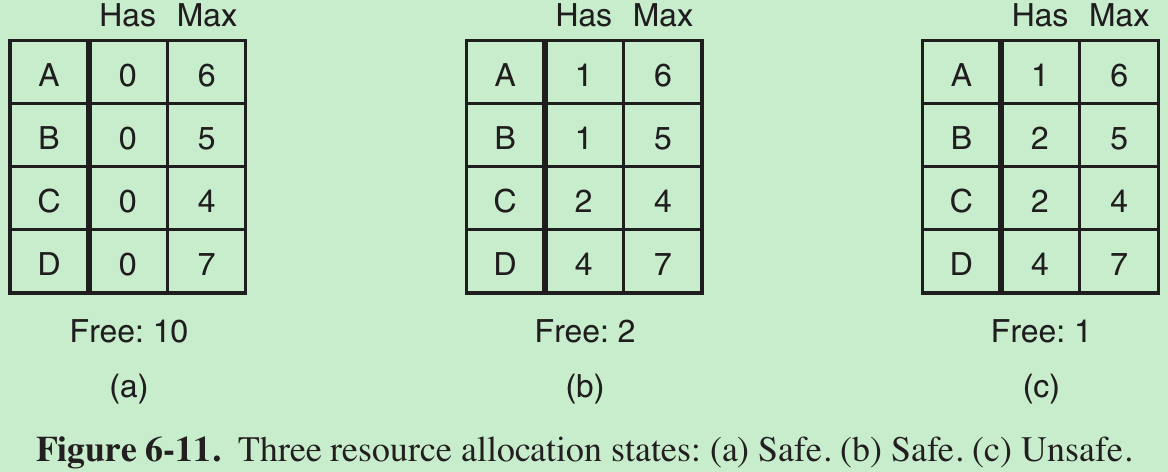

- In Fig. 6-11(a) we see four customers, A, B, C, and D, each of whom has been granted a certain number of credit units. The banker knows that not all customers will need their maximum credit immediately, so he has reserved only 10 units rather than 22 to service them. (In this analogy, customers are processes, units are, say, tape drives, and the banker is the operating system.)

- The customers go about their respective businesses, making loan requests from time to time (i.e., asking for resources). At a certain moment, the situation is as shown in Fig. 6-11(b). This state is safe because with two units left, the banker can delay any requests except C’s, thus letting C finish and release all four of his resources. With four units in hand, the banker can let either D or B have the necessary units, and so on.

- Consider what would happen if a request from B for one more unit were granted in Fig. 6-11(b). We would have the situation of Fig. 6-11(c), which is unsafe. If all the customers suddenly asked for their maximum loans, the banker could not satisfy any of them, and we would have a deadlock. An unsafe state does not have to lead to deadlock, since a customer might not need the entire credit line available, but the banker cannot count on this behavior.

- The banker’s algorithm considers each request as it occurs, seeing whether granting it leads to a safe state. If it does, the request is granted; otherwise, it is postponed until later.

- To see if a state is safe, the banker checks to see if he has enough resources to satisfy some customer. If so, those loans are assumed to be repaid, and the customer now closest to the limit is checked, and so on. If all loans can eventually be repaid, the state is safe and the initial request can be granted.
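A Python sketch of the single-resource banker, following the "customer closest to the limit" check described above. The credit lines (A 6, B 5, C 4, D 7) and the loans in Fig. 6-11(b) (A 1, B 1, C 2, D 4, with 2 units free) are assumed from the book's figure; they agree with the totals in the text (22 units of credit, 10 in reserve). The names `is_safe` and `banker_request` are hypothetical.

```python
def is_safe(free: int, has: dict[str, int], credit: dict[str, int]) -> bool:
    """Let the customer closest to its limit finish and repay, then repeat."""
    pending = set(has)
    while pending:
        closest = min(pending, key=lambda c: credit[c] - has[c])
        if credit[closest] - has[closest] > free:
            return False              # nobody can be carried to completion
        free += has[closest]          # loan granted in full, then repaid in full
        pending.remove(closest)
    return True


def banker_request(customer: str, units: int, free: int, has: dict[str, int],
                   credit: dict[str, int]) -> tuple[bool, int, dict[str, int]]:
    """Grant the request only if the resulting state is safe; otherwise postpone it."""
    if has[customer] + units > credit[customer]:
        raise ValueError(f"{customer} asked for more than its credit line")
    if units > free:
        return False, free, has
    trial = dict(has)
    trial[customer] += units
    if is_safe(free - units, trial, credit):
        return True, free - units, trial
    return False, free, has           # granting would lead to an unsafe state


credit = {"A": 6, "B": 5, "C": 4, "D": 7}
state_b = {"A": 1, "B": 1, "C": 2, "D": 4}                 # Fig. 6-11(b), 2 units free
print(is_safe(2, state_b, credit))                         # True
print(banker_request("B", 1, 2, state_b, credit)[0])       # False: would give Fig. 6-11(c)
print(banker_request("C", 1, 2, state_b, credit)[0])       # True
```

Checking only the customer with the smallest remaining need is enough: if that customer cannot be satisfied, no other customer can be either.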

6.5.4 The Banker’s Algorithm for Multiple Resources

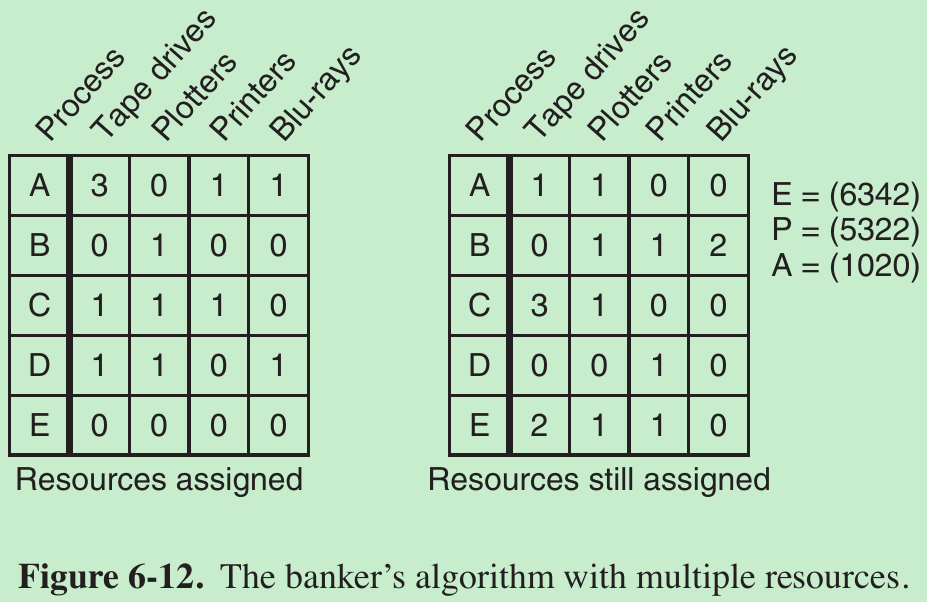

- In Fig. 6-12, the left matrix shows how many of each resource are currently assigned to each of the five processes. The right matrix shows how many resources each process still needs in order to complete.

- The three vectors in the figure are:

  - E: existing resources

  - P: possessed resources

  - A: available resources

- Algorithm for checking whether a state is safe (assuming processes keep all resources until they exit):

  1. Look for a row, R, whose unmet resource needs are all ≤ A. If no such row exists, the system will eventually deadlock since no process can run to completion.

  2. Assume the process of the chosen row requests all the resources it needs (which is guaranteed to be possible) and finishes. Mark that process as terminated and add all of its resources to the A vector.

  3. Repeat steps 1 and 2 until either all processes are marked terminated (in which case the initial state was safe) or no process is left whose resource needs can be met (in which case the system was not safe). If several processes are eligible to be chosen in step 1, it does not matter which one is selected: the pool of available resources either gets larger, or at worst, stays the same.
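A Python sketch of the multiple-resource safety check, plus a hypothetical `try_grant` helper that grants a request only when the resulting state stays safe. The matrices are assumed to be those printed in Fig. 6-12 of the book (processes A to E in rows 0 to 4, four resource classes); verify them against the image. The two requests at the end, first B and then E each asking for one unit of the third class, are an illustrative scenario.

```python
def is_safe(available: list[int], allocated: list[list[int]],
            still_needed: list[list[int]]) -> bool:
    work = list(available)
    finished = [False] * len(allocated)
    while not all(finished):
        # Step 1: find a row whose unmet needs are all <= A.
        for i, need in enumerate(still_needed):
            if not finished[i] and all(n <= w for n, w in zip(need, work)):
                break
        else:
            return False                     # no such row: the state is unsafe
        # Step 2: it gets what it needs, finishes, and returns everything it holds.
        work = [w + a for w, a in zip(work, allocated[i])]
        finished[i] = True
    return True                              # step 3: every process could finish


def try_grant(proc: int, request: list[int], available: list[int],
              allocated: list[list[int]], still_needed: list[list[int]]) -> bool:
    """Grant `request` to row `proc` only if the new state is safe (mutates on success)."""
    if any(r > n for r, n in zip(request, still_needed[proc])):
        raise ValueError("request exceeds the process's declared maximum")
    if any(r > a for r, a in zip(request, available)):
        return False                         # not enough free right now
    new_avail = [a - r for a, r in zip(available, request)]
    new_alloc = [row[:] for row in allocated]
    new_need = [row[:] for row in still_needed]
    new_alloc[proc] = [x + r for x, r in zip(new_alloc[proc], request)]
    new_need[proc] = [x - r for x, r in zip(new_need[proc], request)]
    if not is_safe(new_avail, new_alloc, new_need):
        return False                         # postpone: granting would be unsafe
    available[:], allocated[:], still_needed[:] = new_avail, new_alloc, new_need
    return True


# Fig. 6-12 (assumed values): rows are processes A-E.
allocated = [[3, 0, 1, 1], [0, 1, 0, 0], [1, 1, 1, 0], [1, 1, 0, 1], [0, 0, 0, 0]]
still_needed = [[1, 1, 0, 0], [0, 1, 1, 2], [3, 1, 0, 0], [0, 0, 1, 0], [2, 1, 1, 0]]
E = [6, 3, 4, 2]
P = [sum(col) for col in zip(*allocated)]    # [5, 3, 2, 2]
available = [e - p for e, p in zip(E, P)]    # [1, 0, 2, 0]

print(is_safe(available, allocated, still_needed))                      # True
print(try_grant(1, [0, 0, 1, 0], available, allocated, still_needed))   # True (B)
print(try_grant(4, [0, 0, 1, 0], available, allocated, still_needed))   # False (E)
```

Granting E's request would leave A = (1 0 0 0), from which no row of still-needed resources can be met, so it is postponed.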

6.6 DEADLOCK PREVENTION

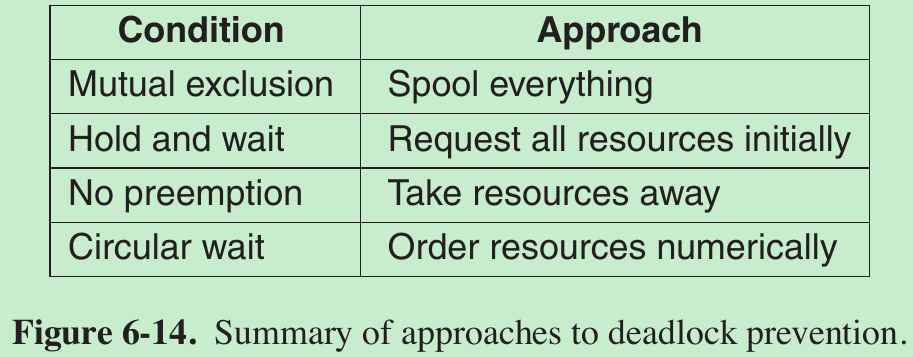

- Recall the four conditions for a resource deadlock from Section 6.2.1: mutual exclusion, hold-and-wait, no preemption, and circular wait. Deadlock prevention works by ensuring that at least one of them can never be satisfied, which makes deadlocks structurally impossible.

6.6.1 Attacking the Mutual-Exclusion Condition

- If no resource were assigned exclusively to a single process, we would never have deadlocks. For data, the simplest method is to make data read only, so that processes can use the data concurrently.

- However, allowing two processes to write on the printer at the same time will lead to chaos. By spooling printer output, several processes can generate output at the same time. In this model, the only process that actually requests the physical printer is the printer daemon. Since the daemon never requests any other resources, we can eliminate deadlock for the printer.

- If the daemon is programmed to begin printing even before all the output is spooled, the printer might lie idle if an output process decides to wait several hours after the first burst of output. For this reason, daemons are normally programmed to print only after the complete output file is available. However, this decision itself could lead to deadlock.

- What would happen if two processes each filled up one half of the available spooling space with output and neither was finished producing its full output? In this case, we would have two processes that had each finished part, but not all, of their output, and could not continue. Neither process will ever finish, so we would have a deadlock on the disk.

- Nevertheless, one idea is frequently applicable: avoid assigning a resource unless absolutely necessary, and try to make sure that as few processes as possible may actually claim the resource.
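A minimal sketch of the spooling idea, assuming Python threads stand in for processes and a `queue.Queue` stands in for the spool area on disk. Only the hypothetical `printer_daemon` touches the printer, and each user process spools its complete output as one job, matching the print-only-after-complete policy described above. All names here are illustrative.

```python
import queue
import threading

printed = []           # stands in for the physical printer's output
spool = queue.Queue()  # completed jobs waiting to be printed

def printer_daemon():
    """The only code that uses the printer; it requests no other resource."""
    while True:
        job = spool.get()
        if job is None:          # sentinel: shut the daemon down
            break
        owner, pages = job
        printed.append((owner, len(pages)))  # pretend to drive the printer

def user_process(name, n_pages):
    # Build the complete output first, then spool it as a single job,
    # so the daemon never starts on a partially written file.
    pages = [f"{name} page {i}" for i in range(n_pages)]
    spool.put((name, pages))

daemon = threading.Thread(target=printer_daemon)
daemon.start()
users = [threading.Thread(target=user_process, args=(n, 3)) for n in ("A", "B", "C")]
for t in users:
    t.start()
for t in users:
    t.join()
spool.put(None)  # queued after every job, so all jobs are printed first
daemon.join()
print(sorted(printed))  # [('A', 3), ('B', 3), ('C', 3)]
```

The queue here is unbounded and jobs are spooled whole, so this sketch does not model the finite spool area whose exhaustion causes the disk deadlock described above.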

6.6.2 Attacking the Hold-and-Wait Condition

- If we can prevent processes that hold resources from waiting for more resources, we can eliminate deadlocks.

- One way to achieve this goal is to require all processes to request all their resources before starting execution. If everything is available, the process will be allocated whatever it needs and can run to completion. If one or more resources are busy, nothing will be allocated and the process will just wait.

- A problem with this approach is that many processes do not know how many resources they will need until they have started running. In fact, if they knew, the banker’s algorithm could be used.

- Another problem is that resources will not be used optimally with this approach. For example, consider a process that reads data from an input tape, analyzes it for an hour, and then writes an output tape and plots the results. If all resources must be requested in advance, the process will tie up the output tape drive and the plotter for an hour.

- A different way to break the hold-and-wait condition is to require a process requesting a resource to first temporarily release all the resources it currently holds. Then it tries to get everything it needs all at once.
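Both approaches to the hold-and-wait condition can be sketched with a small allocator that grants a whole set of resources atomically or not at all. This is an illustrative sketch that assumes threads stand in for processes; the class and method names (`AllOrNothingAllocator`, `request_all`, `release_all`, `grow`) are hypothetical. `grow` implements the second approach: release everything held, then request the old and new resources together.

```python
import threading

class AllOrNothingAllocator:
    """Grants a set of resources all at once or not at all (hypothetical sketch)."""

    def __init__(self, resources):
        self._free = set(resources)
        self._cond = threading.Condition()

    def request_all(self, wanted):
        """Block, holding nothing, until every resource in `wanted` is free."""
        wanted = set(wanted)
        with self._cond:
            self._cond.wait_for(lambda: wanted <= self._free)
            self._free -= wanted
        return wanted

    def release_all(self, held):
        with self._cond:
            self._free |= set(held)
            self._cond.notify_all()

    def grow(self, held, extra):
        """Second approach: give back everything held, then request old + new together."""
        self.release_all(held)
        return self.request_all(set(held) | set(extra))


alloc = AllOrNothingAllocator({"tape_in", "tape_out", "plotter"})
held = alloc.request_all({"tape_in"})
held = alloc.grow(held, {"tape_out", "plotter"})
print(sorted(held))  # ['plotter', 'tape_in', 'tape_out']
alloc.release_all(held)
```

While a caller waits inside `request_all` it holds nothing, which is what breaks hold-and-wait. In `grow`, another process may grab the released resources before they are granted again, so the caller can end up waiting longer than if it had kept them.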

6.6.3 Attacking the No-Preemption Condition

- If a process has been assigned the printer and is in the middle of printing its output, forcibly taking away the printer because a needed plotter is not available is tricky at best and impossible at worst. However, some resources can be virtualized to avoid this situation.

- Spooling printer output to the disk and allowing only the printer daemon access to the real printer eliminates deadlocks involving the printer, although it creates a potential for deadlock over disk space. With large disks, though, running out of disk space is unlikely.

- However, not all resources can be virtualized like this. For example, records in databases or tables inside the operating system must be locked to be used and therein lies the potential for deadlock.

6.6.4 Attacking the Circular Wait Condition

- The circular wait can be eliminated in several ways. One way is to have a rule saying that a process is entitled only to a single resource at any moment. If it needs a second one, it must release the first one. For a process that needs to copy a huge file from a tape to a printer, this restriction is unacceptable.

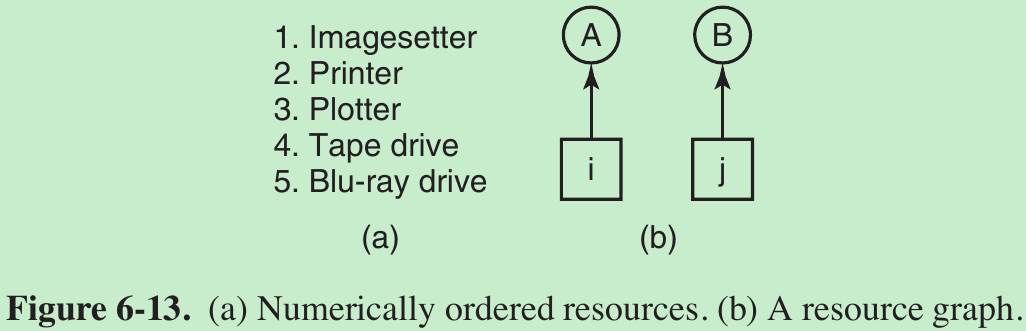

- Another way to avoid the circular wait is to provide a global numbering of all the resources, as shown in Fig. 6-13(a). Now the rule is this: processes can request resources whenever they want to, but all requests must be made in numerical order. A process may request first a printer and then a tape drive, but it may not request first a plotter and then a printer.

- With this rule, the resource allocation graph can never have cycles. To see why, consider the two processes in Fig. 6-13(b), where A holds resource i and B holds resource j.

- A deadlock is possible only if A requests resource j and B requests resource i. Since i and j are distinct resources, they have different numbers. If i > j, A is not allowed to request j, because j is lower than the resource it already holds. If i < j, B is not allowed to request i, for the same reason. Either way, deadlock is impossible.

- A variation of this algorithm is to drop the requirement that resources be acquired in strictly increasing sequence and merely insist that no process request a resource lower than what it is already holding. If a process initially requests 9 and 10 and then releases both of them, it is starting all over, so there is no reason to prohibit it from now requesting resource 1.

- Although numerically ordering the resources eliminates the problem of deadlocks, it may be impossible to find an ordering that satisfies everyone. When the resources include process-table slots, disk spooler space, locked database records, and other abstract resources, the number of potential resources and different uses may be so large that no ordering could possibly work.
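A sketch of resource ordering with threads and locks. It assumes a hypothetical numbering printer = 1, plotter = 2, tape drive = 3, chosen to be consistent with the examples in the text rather than copied from Fig. 6-13(a). It enforces the relaxed variant: a thread may never request a resource numbered at or below the highest one it currently holds, but after releasing everything it may start again from the bottom. Raising an error instead of blocking on a violation is a choice of this sketch.

```python
import threading

class NumberedResources:
    """Resources with global numbers; each thread must request in increasing order."""

    def __init__(self, names):
        # Position in `names` is the global number: first name = 1, and so on.
        self.number = {name: i for i, name in enumerate(names, start=1)}
        self._locks = {name: threading.Lock() for name in names}
        self._held = threading.local()   # per-thread list of held resources

    def _stack(self):
        if not hasattr(self._held, "stack"):
            self._held.stack = []
        return self._held.stack

    def acquire(self, name):
        stack = self._stack()
        # stack[-1] is the highest-numbered resource held, since requests only go up.
        if stack and self.number[name] <= self.number[stack[-1]]:
            raise RuntimeError(f"ordering violation: {name} after {stack[-1]}")
        self._locks[name].acquire()
        stack.append(name)

    def release_all(self):
        stack = self._stack()
        while stack:
            self._locks[stack.pop()].release()


res = NumberedResources(["printer", "plotter", "tape_drive"])
res.acquire("printer")
res.acquire("tape_drive")        # allowed: 1 then 3
res.release_all()                # starting over, so low numbers are allowed again
res.acquire("plotter")
try:
    res.acquire("printer")       # 2 then 1: refused
except RuntimeError as err:
    print(err)                   # ordering violation: printer after plotter
res.release_all()
```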

6.7 OTHER ISSUES

6.7.1 Two-Phase Locking

- In database systems, an operation that occurs frequently is requesting locks on several records and then updating all the locked records. When multiple processes are running at the same time, there is a danger of deadlock. The approach often used is called two-phase locking.

- In the first phase, the process tries to lock all the records it needs, one at a time. If it succeeds, it begins the second phase, performing its updates and releasing the locks. No real work is done in the first phase. If during the first phase, some record is needed that is already locked, the process just releases all its locks and starts the first phase all over.

- In a certain sense, this approach is similar to requesting all the resources needed in advance, or at least before anything irreversible is done. In some versions of two-phase locking, there is no release and restart if a locked record is encountered during the first phase. In these versions, deadlock can occur.

- However, this strategy is not applicable in general. For example, in real-time systems and process control systems, it is not acceptable to terminate a process partway through because a resource is not available and then start all over again.

- Neither is it acceptable to start over if the process has read or written messages to the network, updated files, or anything else that cannot be safely repeated. The algorithm works only in those situations where the programmer has very carefully arranged things so that the program can be stopped at any point during the first phase and restarted. Many applications cannot be structured this way.
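A minimal sketch of the release-and-restart version of two-phase locking, assuming an in-memory dictionary of records with one `threading.Lock` per record (a hypothetical layout, not a database API). `Lock.acquire(blocking=False)` plays the role of "try to lock the record". The short random pause before restarting is an addition of this sketch, not part of the algorithm as described; it makes repeated mutual restarts unlikely.

```python
import random
import threading
import time

def two_phase_update(records, locks, keys, update):
    """Lock every record in `keys`, then update them; restart phase one on conflict.

    records: dict key -> value; locks: dict key -> threading.Lock.
    Assumes `keys` contains no duplicates.
    """
    while True:
        # Phase 1: lock the needed records one at a time; no real work is done here.
        acquired = []
        for key in keys:
            if not locks[key].acquire(blocking=False):
                break                      # record already locked by someone else
            acquired.append(key)
        if len(acquired) == len(keys):
            break                          # every lock obtained: go to phase 2
        for key in acquired:               # release everything and start over
            locks[key].release()
        time.sleep(random.uniform(0, 0.001))  # assumed random pause before retrying
    # Phase 2: perform the updates, then release the locks.
    try:
        for key in keys:
            records[key] = update(records[key])
    finally:
        for key in keys:
            locks[key].release()


records = {"alice": 100, "bob": 50}
locks = {key: threading.Lock() for key in records}
two_phase_update(records, locks, ["alice", "bob"], lambda v: v + 10)
print(records)  # {'alice': 110, 'bob': 60}
```

Restarting is safe here only because phase one has no side effects; as noted above, the same loop would be wrong if anything irreversible happened before all locks were held.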

6.7.2 Communication Deadlocks

- Resource deadlock is a problem of competition synchronization. The processes involved are independent: each would complete service if its execution were not interleaved with that of competing processes.

- A process locks resources in order to prevent inconsistent resource states caused by interleaved access to resources. Interleaved access to locked resources enables resource deadlock.

- Another kind of deadlock can occur in communication systems (e.g., networks), in which two or more processes communicate by sending messages.

- A common arrangement is that process A sends a request message to process B, and then blocks until B sends back a reply message. Suppose that the request message gets lost. A is blocked waiting for the reply. B is blocked waiting for a request asking it to do something. We have a deadlock.

- This situation is called a communication deadlock, which is an anomaly of cooperation synchronization. The processes in this type of deadlock could not complete service if executed independently.

- Communication deadlocks cannot be prevented by ordering the resources (since there are no resources) or avoided by careful scheduling (since there are no moments when a request could be postponed).

- The usual technique for breaking communication deadlocks is timeouts. Whenever a message is sent to which a reply is expected, a timer is started. If the timer goes off before the reply arrives, the sender assumes that the message has been lost and sends it again (and again and again if needed). In this way, the deadlock is broken.
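A sketch of request/reply with a timeout and retransmission. The `LossyLink` class is a hypothetical stand-in for an unreliable network that silently drops the first few messages, and `queue.Queue.get(timeout=...)` acts as the timer: it raises `queue.Empty` if no reply arrives in time. The retry limit is an assumption of this sketch.

```python
import queue

class LossyLink:
    """Hypothetical link that silently drops the first `drops` messages."""

    def __init__(self, server, drops):
        self.server = server
        self.drops = drops

    def send(self, request, reply_box):
        if self.drops > 0:
            self.drops -= 1          # message lost in transit
            return
        reply_box.put(self.server(request))

def call_with_timeout(link, request, timeout=0.05, max_tries=5):
    """Send a request and wait for the reply; resend whenever the timer expires."""
    reply_box = queue.Queue()
    for attempt in range(1, max_tries + 1):
        link.send(request, reply_box)
        try:
            return attempt, reply_box.get(timeout=timeout)
        except queue.Empty:
            continue  # timer went off: assume the message was lost and resend
    raise TimeoutError(f"no reply after {max_tries} tries")


link = LossyLink(server=lambda req: req.upper(), drops=2)
print(call_with_timeout(link, "ping"))  # (3, 'PING')
```

If it is the reply rather than the request that gets lost, the receiver sees the same request twice, so this scheme suits requests that can be safely repeated.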

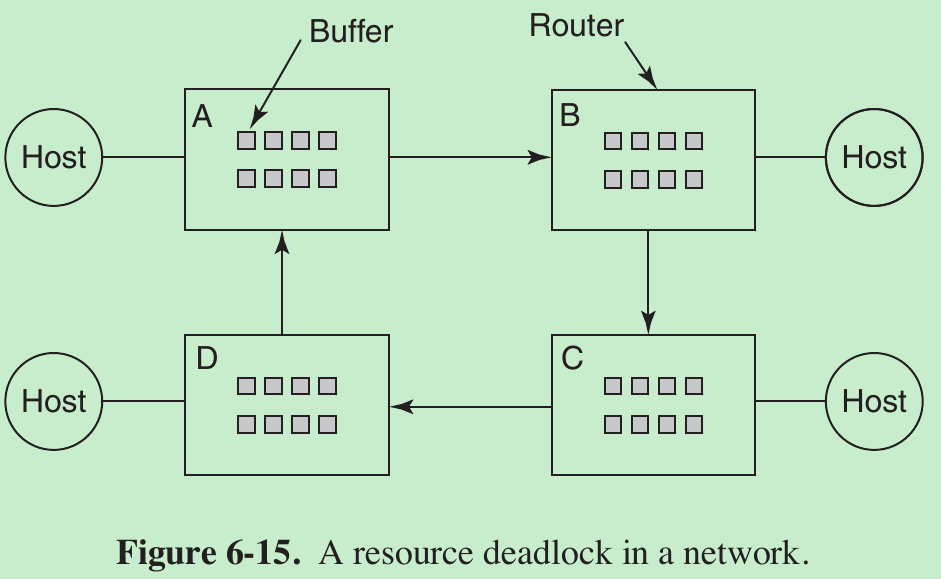

- Resource deadlocks can also occur in communication systems or networks.

- Consider the network of Fig. 6-15. The Internet consists of two kinds of computers: hosts and routers. Hosts do work for people. A router is a communications computer that moves packets of data from the source to the destination. Each host is connected to one or more routers.

- When a packet comes into a router from one of its hosts, it is put into a buffer for subsequent transmission to another router and then to another until it gets to the destination. These buffers are resources and there are a finite number of them.

- For simplicity, suppose each router in Fig. 6-15 has only eight buffers. Suppose also that all the packets at router A need to go to B, all the packets at B need to go to C, all the packets at C need to go to D, and all the packets at D need to go to A. No packet can move because there is no free buffer at the other end, so we have a classical resource deadlock, albeit in the middle of a communications system.
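The buffer deadlock can be checked with a few lines. This is an illustrative model, not router code: each router's buffers are a list of next-hop destinations, and a packet can move only if its destination has a free buffer. The eight-buffer limit and the A to B to C to D to A pattern come from the example above; the function names are hypothetical.

```python
BUFFERS_PER_ROUTER = 8          # the simplified figure used in the text
ROUTERS = "ABCD"

def next_router(router):
    """A sends to B, B to C, C to D, and D back to A."""
    return ROUTERS[(ROUTERS.index(router) + 1) % len(ROUTERS)]

def make_full_ring():
    """Every router's buffers are full of packets bound for the next router."""
    return {r: [next_router(r)] * BUFFERS_PER_ROUTER for r in ROUTERS}

def movable(buffers):
    """(source, destination) pairs for packets whose destination has a free buffer."""
    return [(src, dst)
            for src, pending in buffers.items()
            for dst in pending
            if len(buffers[dst]) < BUFFERS_PER_ROUTER]


buffers = make_full_ring()
print(movable(buffers))      # []: every destination is full, so nothing moves
buffers["B"].pop()           # suppose B hands one packet to a local host
print(movable(buffers)[0])   # ('A', 'B'): one free buffer lets A make progress
```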

6.7.3 Livelock

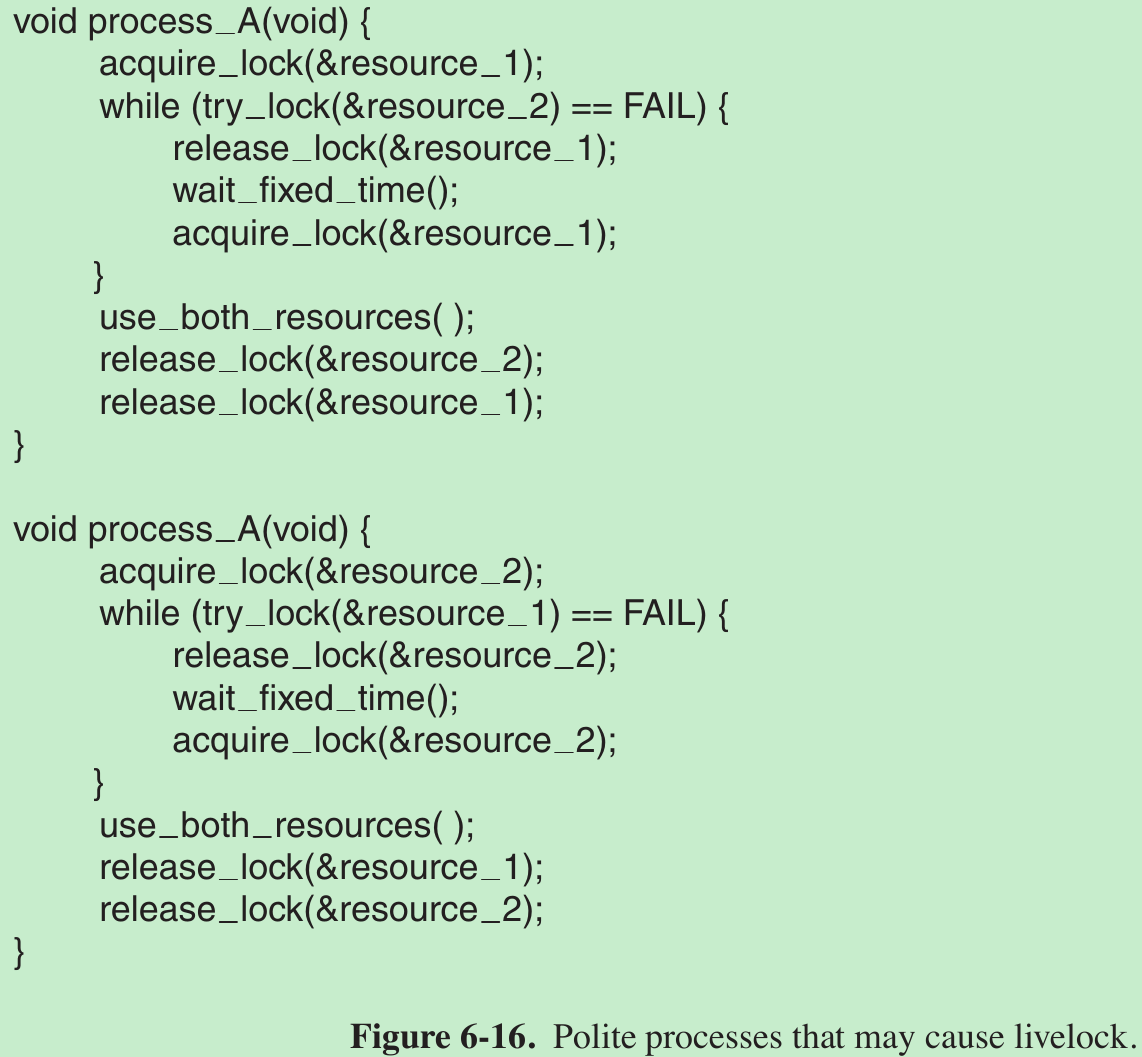

- Consider an atomic primitive try_lock in which the calling process tests a mutex and either grabs it or returns failure. In other words, it never blocks. Programmers can use it together with acquire_lock, which also tries to grab the lock but blocks if the lock is not available. Now imagine a pair of processes running in parallel that use two resources, as shown in Fig. 6-16.

- Each one needs two resources and uses the try_lock primitive to try to acquire the necessary locks. If the attempt fails, the process gives up the lock it holds and tries again.

- Process A runs and acquires resource 1, while process B runs and acquires resource 2. Next, each tries to acquire the other lock and fails. To be polite, each gives up the lock it is currently holding and tries again. This procedure repeats until a user (or some other entity) puts one of these processes out of its misery.

- No process is blocked, and we could even say that things are happening, so this is not a deadlock. Still, no progress is possible, so we do have something equivalent: a livelock.
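A sketch of the polite two-lock pattern in Python, following the description above: a blocking acquire of the first lock, then a non-blocking try on the second, releasing the first on failure. Here `Lock.acquire()` plays the role of acquire_lock and `Lock.acquire(blocking=False)` the role of try_lock. The random pause before retrying is an assumption of this sketch, not something the text prescribes; it breaks the lockstep that produces the livelock, in the same spirit as the random wait suggested for failed forks below.

```python
import random
import threading
import time

def acquire_both(first, second, max_backoff=0.001):
    """Polite try_lock loop, plus an assumed random pause before each retry."""
    while True:
        first.acquire()                       # acquire_lock: may block
        if second.acquire(blocking=False):    # try_lock: never blocks
            return                            # caller now holds both locks
        first.release()                       # be polite: give up what we hold
        time.sleep(random.uniform(0, max_backoff))  # randomized backoff


resource_1, resource_2 = threading.Lock(), threading.Lock()

def process(first, second, rounds):
    for _ in range(rounds):
        acquire_both(first, second)
        # ... use both resources here ...
        second.release()
        first.release()

# Process A locks resource 1 first, process B locks resource 2 first.
a = threading.Thread(target=process, args=(resource_1, resource_2, 100))
b = threading.Thread(target=process, args=(resource_2, resource_1, 100))
a.start()
b.start()
a.join()
b.join()
```

With a fixed wait, two processes that start in step can stay in step forever; randomizing the wait makes it very unlikely that they keep colliding, though it does not strictly guarantee progress.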

- In some systems, the total number of processes allowed is determined by the number of entries in the process table. Thus, process-table slots are finite resources. If a fork fails because the table is full, a reasonable approach for the program doing the fork is to wait a random time and try again.

- Now suppose that a UNIX system has 100 process slots. Ten programs are running, each of which needs to create 12 children. After each process has created 9 processes, the 10 original processes and the 90 new processes have exhausted the table. Each of the 10 original processes now sits in an endless loop forking and failing—a livelock.

- Most operating systems, including UNIX and Windows, basically ignore the problem on the assumption that most users would prefer an occasional livelock to a rule restricting all users to one process, one open file, and one of everything. Eliminating the problem would be costly, mostly in terms of putting inconvenient restrictions on processes.

6.7.4 Starvation

- In a dynamic system, requests for resources happen all the time. Some policy is needed to make a decision about who gets which resource when. This policy may lead to some processes never getting service even though they are not deadlocked.

- Consider allocation of the printer. Imagine that the system uses some algorithm to ensure that allocating the printer does not lead to deadlock. Now suppose that several processes all want it at once. Who should get it?

- One possible policy is to give it to the process with the smallest file to print. This approach maximizes the number of satisfied customers. Now consider what happens in a busy system when one process has a huge file to print. Every time the printer is free, the system will look around and choose the process with the shortest file. If there is a constant stream of processes with short files, the process with the huge file will never be allocated the printer. It will simply starve to death.

- Starvation can be avoided by using a first-come, first-served resource allocation policy. With this approach, the process waiting the longest gets served next. In due course of time, any given process will eventually become the oldest and thus get the needed resource.
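A small simulation contrasting the two printer policies. Jobs are hypothetical `(name, pages)` pairs, and a new one-page job arrives every time the printer is handed out, modeling the "constant stream of processes with short files" above. The function names are illustrative.

```python
from collections import deque

def shortest_first(waiting):
    """Give the printer to the job with the fewest pages (can starve big jobs)."""
    job = min(waiting, key=lambda j: j[1])
    waiting.remove(job)
    return job

def first_come_first_served(waiting):
    """Give the printer to the job that has waited longest."""
    return waiting.popleft()

def simulate(policy, rounds=10):
    waiting = deque([("huge", 1000), ("s0", 1)])
    served = []
    for i in range(1, rounds + 1):
        served.append(policy(waiting)[0])
        waiting.append((f"s{i}", 1))   # another short job arrives
    return served


print("huge" in simulate(shortest_first))          # False: the huge job starves
print(simulate(first_come_first_served)[0])        # huge: it waited longest
```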
