Nelder–Mead method

Source: Internet. Editor: 程序博客网. Time: 2024/06/08 18:40

http://people.sc.fsu.edu/~jburkardt/m_src/nelder_mead/nelder_mead.html


Figure: Nelder–Mead simplex search over the Rosenbrock banana function (above) and Himmelblau's function (below).

- See simplex algorithm for Dantzig's algorithm for the problem of linear optimization.

The Nelder–Mead method (also called the downhill simplex method or amoeba method) is a commonly used nonlinear optimization technique. It is a well-defined numerical method for twice-differentiable, unimodal problems. However, the Nelder–Mead technique is only a heuristic: it can converge to non-stationary points[1] on problems that alternative methods can solve.[2]

The Nelder–Mead technique was proposed by John Nelder & Roger Mead (1965) and is a technique for minimizing an objective function in a many-dimensional space.

Contents

- 1 Overview

- 2 One possible variation of the NM algorithm

- 3 See also

- 4 References

- 4.1 Further reading

- 5 External links

Overview

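The method uses the concept of a simplex, which is a special polytope of N + 1 vertices in N dimensions. Examples of simplices include a line segment on a line, a triangle in a plane, and a tetrahedron in three-dimensional space.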

The method approximates a local optimum of a problem with N variables when the objective function varies smoothly and is unimodal.

For example, a suspension bridge engineer has to choose how thick each strut, cable, and pier must be. Clearly these all link together, but it is not easy to visualize the impact of changing any specific element. The engineer can use the Nelder–Mead method to generate trial designs which are then tested on a large computer model. As each run of the simulation is expensive, it is important to make good decisions about where to look.

Nelder–Mead generates a new test position by extrapolating the behavior of the objective function measured at each test point arranged as a simplex. The algorithm then chooses to replace one of these test points with the new test point and so the technique progresses. The simplest step is to replace the worst point with a point reflected through the centroid of the remaining N points. If this point is better than the best current point, then we can try stretching exponentially out along this line. On the other hand, if this new point isn't much better than the previous value, then we are stepping across a valley, so we shrink the simplex towards a better point.
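As a minimal illustration of the basic move described above, the sketch below reflects the worst vertex of a triangle through the centroid of the other two. The function names and the test function `bowl` are illustrative choices, not taken from the original article.

```python
def centroid(points):
    """Component-wise mean of a list of equal-length points."""
    count = len(points)
    return [sum(p[i] for p in points) / count for i in range(len(points[0]))]


def reflect_worst(simplex, f):
    """Return the worst vertex reflected through the centroid of the others."""
    ordered = sorted(simplex, key=f)      # best first, worst last
    worst = ordered[-1]
    c = centroid(ordered[:-1])            # centroid of the remaining N points
    return [2.0 * ci - wi for ci, wi in zip(c, worst)]


def bowl(p):
    """Hypothetical smooth test function with its minimum at the origin."""
    return p[0] ** 2 + p[1] ** 2


triangle = [[1.0, 1.0], [2.0, 1.0], [2.0, 3.0]]
reflected = reflect_worst(triangle, bowl)
print(reflected, bowl(reflected))
```

Here the worst vertex is (2, 3); its mirror image across the centroid of the other two vertices lands at a point with a much lower function value, which is the situation in which the method would go on to try an expansion.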

Unlike modern optimization methods, the Nelder–Mead heuristic can converge to a non-stationary point unless the problem satisfies stronger conditions than are necessary for modern methods.[3] Modern improvements over the Nelder–Mead heuristic have been known since 1979.[4]

Many variations exist depending on the actual nature of the problem being solved. A common variant uses a constant-size, small simplex that roughly follows the gradient direction (which gives steepest descent). Visualize a small triangle on an elevation map flip-flopping its way down a valley to a local bottom. This method is also known as the Flexible Polyhedron Method. This, however, tends to perform poorly against the method described in this article because it makes small, unnecessary steps in areas of little interest.

One possible variation of the NM algorithm

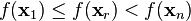

- 1. Order according to the values at the vertices:

- 2. Calculate xo, the center of gravity of all points except xn + 1.

- 3. Reflection

- Compute reflected point

- If the reflected point is better than the second worst, but not better than the best, i.e.:

,

, - then obtain a new simplex by replacing the worst point xn + 1 with the reflected point xr, and go to step 1.

- Compute reflected point

- 4. Expansion

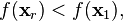

- If the reflected point is the best point so far,

- then compute the expanded point

- If the expanded point is better than the reflected point,

- then obtain a new simplex by replacing the worst point xn + 1 with the expanded point xe, and go to step 1.

- Else obtain a new simplex by replacing the worst point xn + 1 with the reflected point xr, and go to step 1.

- If the expanded point is better than the reflected point,

- Else (i.e. reflected point is not better than second worst) continue at step 5.

- If the reflected point is the best point so far,

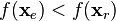

- 5. Contraction

- Here, it is certain that

- Compute contracted point

- If the contracted point is better than the worst point, i.e.

- then obtain a new simplex by replacing the worst point xn + 1 with the contracted point xc, and go to step 1.

- If the contracted point is better than the worst point, i.e.

- Else go to step 6.

- Here, it is certain that

- 6. Reduction

- For all but the best point, replace the point with

. go to step 1.

. go to step 1.

Note: α, γ, ρ and σ are respectively the reflection, expansion, contraction and shrink coefficients. Standard values are α = 1, γ = 2, ρ = 1/2 and σ = 1/2.
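The six steps above can be sketched in plain Python as follows. This is an illustrative sketch rather than a reference implementation: the function name `nelder_mead`, the stopping rule based on simplex size, and the `tol` and `max_iter` parameters are assumptions added here, and the update formulas are the usual ones for the standard coefficients α = 1, γ = 2, ρ = 1/2, σ = 1/2.

```python
def nelder_mead(f, simplex, alpha=1.0, gamma=2.0, rho=0.5, sigma=0.5,
                tol=1e-8, max_iter=2000):
    """Minimize f from a starting simplex: n + 1 points, each a list of n floats.

    For brevity f is re-evaluated freely; real code would cache the values.
    """
    simplex = [list(v) for v in simplex]
    n = len(simplex) - 1
    for _ in range(max_iter):
        # Step 1: order the vertices so that f(x_1) <= ... <= f(x_{n+1}).
        simplex.sort(key=f)
        best, second_worst, worst = simplex[0], simplex[-2], simplex[-1]
        # Assumed stopping rule (not from the text): stop once the simplex is tiny.
        if max(abs(a - b) for v in simplex[1:] for a, b in zip(v, best)) < tol:
            break
        # Step 2: centroid x_o of all vertices except the worst.
        xo = [sum(v[i] for v in simplex[:-1]) / n for i in range(n)]
        d = [o - w for o, w in zip(xo, worst)]      # direction x_o - x_{n+1}
        # Step 3: reflection.
        xr = [o + alpha * di for o, di in zip(xo, d)]
        if f(best) <= f(xr) < f(second_worst):
            simplex[-1] = xr
            continue
        # Step 4: expansion, tried only when x_r beats the current best.
        if f(xr) < f(best):
            xe = [o + gamma * di for o, di in zip(xo, d)]
            simplex[-1] = xe if f(xe) < f(xr) else xr
            continue
        # Step 5: contraction (here f(x_r) >= f(x_n)).
        xc = [w + rho * di for w, di in zip(worst, d)]
        if f(xc) < f(worst):
            simplex[-1] = xc
            continue
        # Step 6: reduction, shrinking every vertex except the best towards it.
        simplex = [best] + [[b + sigma * (a - b) for a, b in zip(v, best)]
                            for v in simplex[1:]]
    return min(simplex, key=f)


def rosenbrock(p):
    """Rosenbrock banana function; its global minimum is at (1, 1)."""
    x, y = p
    return (1.0 - x) ** 2 + 100.0 * (y - x * x) ** 2


start = [[-1.2, 1.0], [-0.2, 1.0], [-1.2, 2.0]]   # arbitrary starting triangle
print(nelder_mead(rosenbrock, start))
```

Because the best vertex is only ever replaced by a better one, the returned point is never worse than the best vertex of the starting simplex, although, as the article notes, convergence to a true minimum is not guaranteed in general.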

For the reflection, since $x_{n+1}$ is the vertex with the highest associated value among the vertices, we can expect to find a lower value at the reflection of $x_{n+1}$ through the opposite face, which is formed by all vertices $x_i$ except $x_{n+1}$.

For the expansion, if the reflection point $x_r$ is the new minimum among the vertices, we can expect to find interesting values along the direction from $x_o$ to $x_r$.

Concerning the contraction: if $f(x_r) > f(x_n)$, we can expect that a better value will be inside the simplex formed by all the vertices $x_i$.

The initial simplex is important. An initial simplex that is too small can lead to a purely local search, so the NM method can more easily get stuck. The simplex should therefore be chosen according to the nature of the problem.
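One simple way to build a starting simplex, shown here only as an assumed convention and not as something prescribed by the article, is to take a starting guess and offset it by a chosen step along each coordinate axis. The helper name `initial_simplex` and its `step` parameter are hypothetical; the step is where knowledge about the scale of the problem would enter.

```python
def initial_simplex(x0, step=1.0):
    """Build n + 1 vertices: x0 itself plus x0 shifted by `step` along each axis."""
    simplex = [list(x0)]
    for i in range(len(x0)):
        vertex = list(x0)
        vertex[i] += step      # a larger step gives a wider, less local first search
        simplex.append(vertex)
    return simplex


print(initial_simplex([1.0, 2.0], step=0.5))
```

For a problem in N variables this yields the N + 1 vertices a simplex requires. A step that is very small relative to the scale of the variables reproduces the overly local search the paragraph above warns about.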

See also

- Conjugate gradient method

- Levenberg–Marquardt algorithm

- Direct Search Algorithm

- Broyden–Fletcher–Goldfarb–Shanno or BFGS method

- Differential evolution

References

- [1] Powell; McKinnon.

- [2] Yu; Kolda et al.; Lewis et al.

- [3] Powell; McKinnon.

- [4] Yu; Kolda et al.; Lewis et al.

- Nelder, J. A.; Mead, R. 1965. "A simplex method for function minimization". Computer Journal 7: 308–313.

- Kolda, Tamara G.; Lewis, Robert Michael; Torczon, Virginia. 2003. "Optimization by direct search: new perspectives on some classical and modern methods". SIAM Rev. 45 (3): 385–482.

- Lewis, Robert Michael; Shepherd, Anne; Torczon, Virginia. 2007. "Implementing generating set search methods for linearly constrained minimization". SIAM J. Sci. Comput. 29 (6): 2507–2530.

- McKinnon, K. I. M. 1999. "Convergence of the Nelder–Mead simplex method to a non-stationary point". SIAM J. Optimization 9: 148–158.

- Powell, Michael J. D. 1973. "On Search Directions for Minimization Algorithms". Mathematical Programming 4: 193–201.

- Yu, Wen Ci. 1979. "Positive basis and a class of direct search techniques". Scientia Sinica [Zhongguo Kexue]: 53–68.

- Yu, Wen Ci. 1979. "The convergent property of the simplex evolutionary technique". Scientia Sinica [Zhongguo Kexue]: 69–77.

Further reading

- Avriel, Mordecai (2003). Nonlinear Programming: Analysis and Methods. Dover Publishing. ISBN 0-486-43227-0.

- Coope, I. D.; C.J. Price, 2002. “Positive bases in numerical optimization”, Computational Optimization & Applications, Vol. 21, No. 2, pp. 169–176, 2002.

- Numerical Recipes

External links

- Nelder–Mead (Simplex) Method

- Nelder–Mead Search for a Minimum

- John Burkardt: Nelder–Mead code in Matlab
