Developing Spark Applications with Maven in IntelliJ IDEA

Source: Internet | Editor: 程序博客网 | Time: 2024/06/05 18:41

Setting Up a Spark Development Environment with IDEA and Maven

I. Install the JDK

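Use JDK 1.7 or later if possible, and set the environment variables (e.g. JAVA_HOME and PATH). The installation steps are omitted.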

II. Install Maven

I chose Maven 3.3.3. The installation steps are omitted.

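Edit the conf/settings.xml file in the Maven installation directory:

```xml
<!-- Change the directory where Maven stores its local repository -->
<localRepository>D:\maven-repository\repository</localRepository>
```

III. Install IDEA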

The installation steps are omitted.

IV. Create the Spark Project

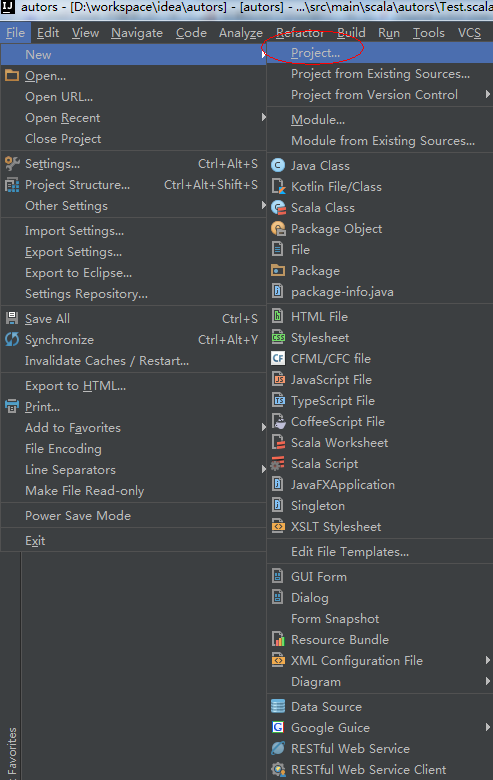

1. Create a new Spark project.

2. Choose Maven and create the project from an archetype.

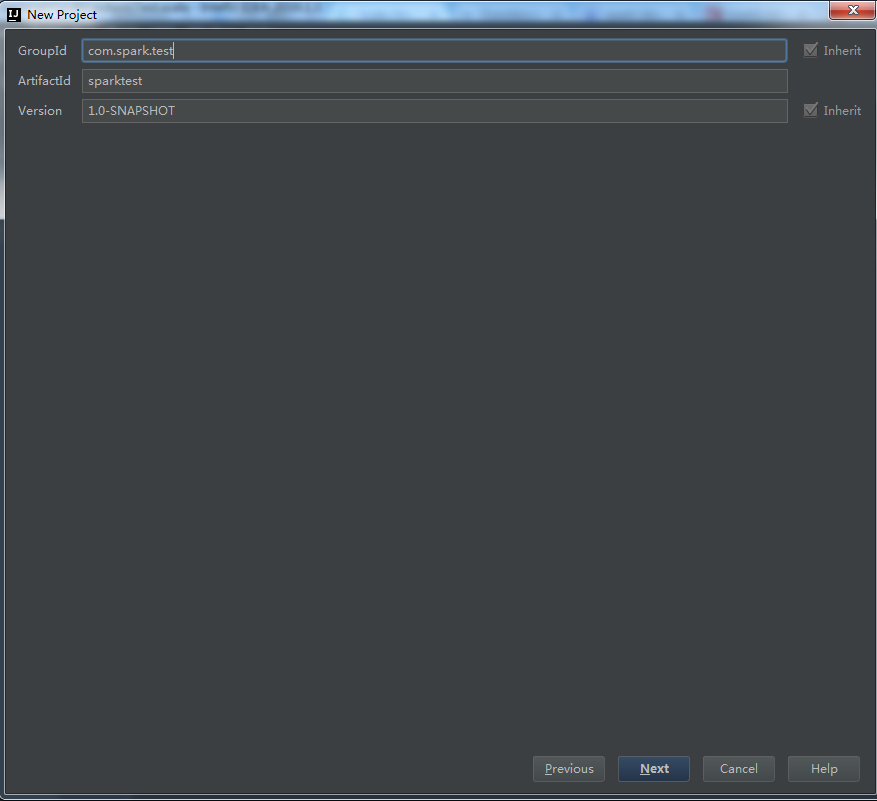

3. Fill in the project's GroupId and the other coordinates.

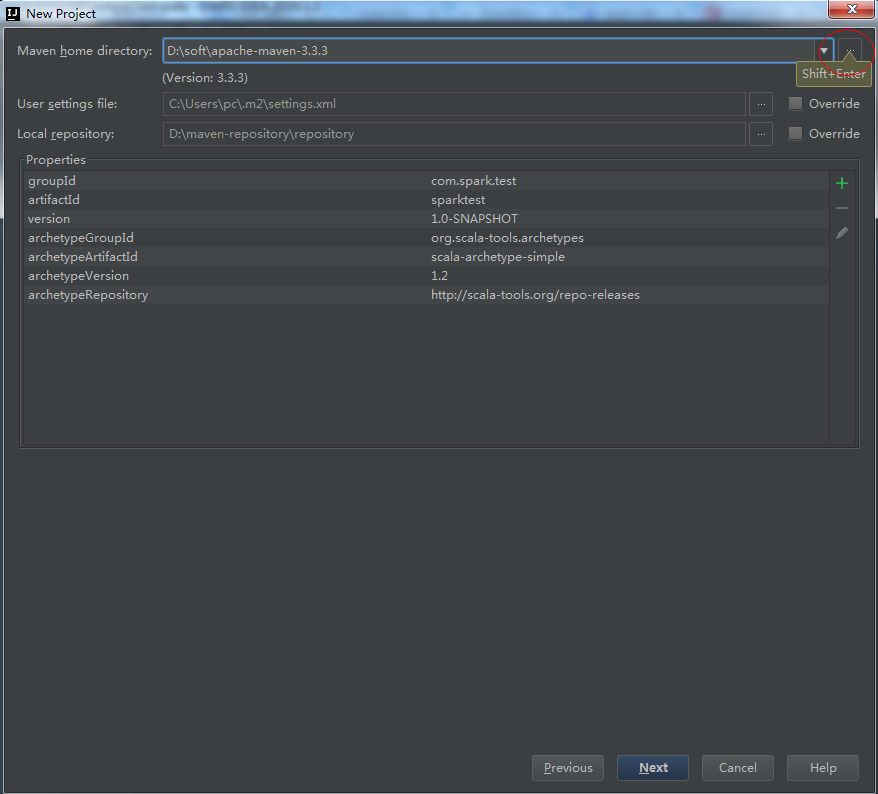

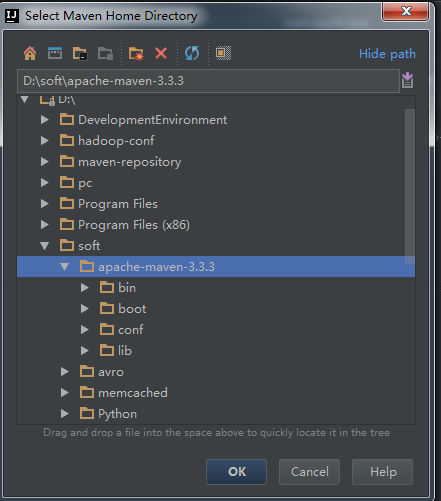

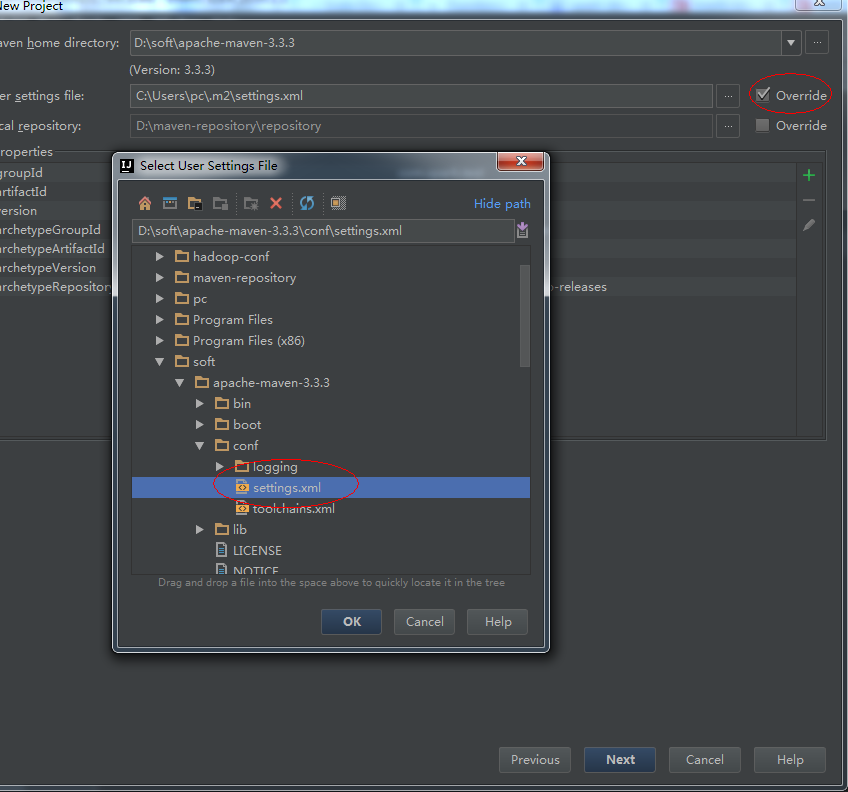

4. Select the locally installed Maven and its settings file.

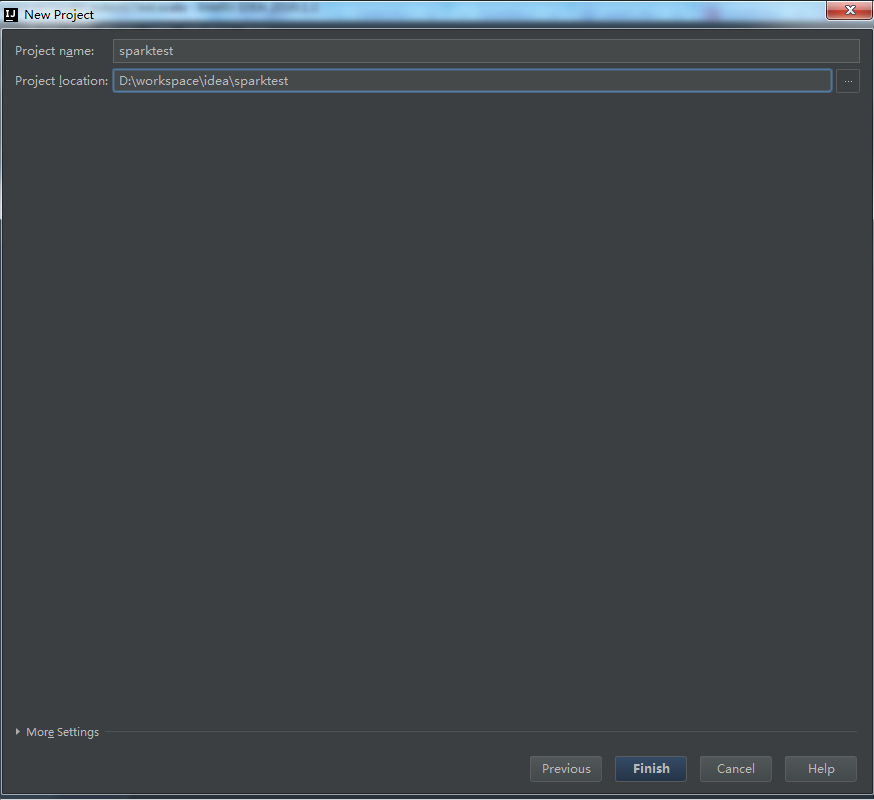

5. Click Next.

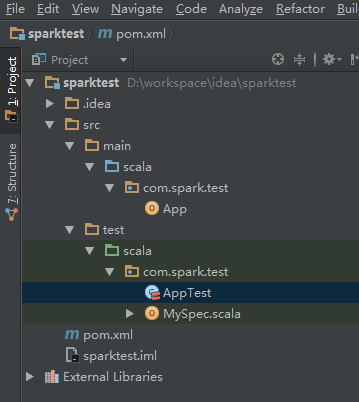

6. Once the project has been created, check its structure:

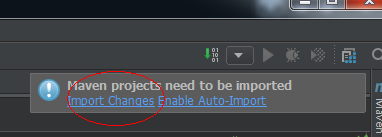

7. Enable automatic updating (auto-import) of the Maven pom file.

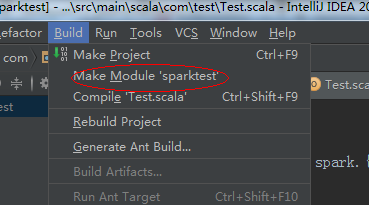

8. Build the project.

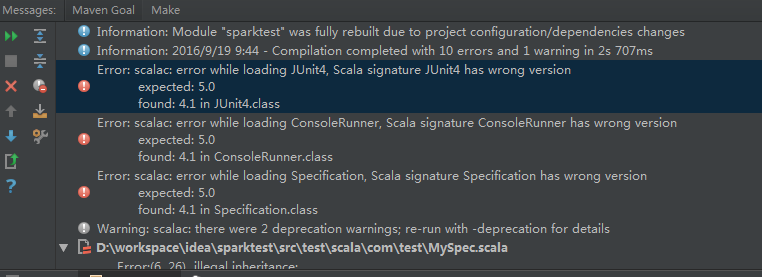

If the following error appears, it is caused by the JUnit version. You can delete the test classes and remove the JUnit dependency from pom.xml:

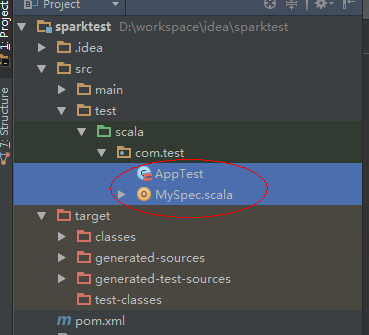

That is, delete these two Scala classes:

and the JUnit dependency in pom.xml:

```xml
<dependency>
    <groupId>junit</groupId>
    <artifactId>junit</artifactId>
    <version>4.12</version>
</dependency>
```

9. Refresh the Maven dependencies.

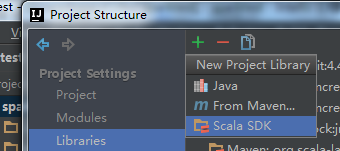

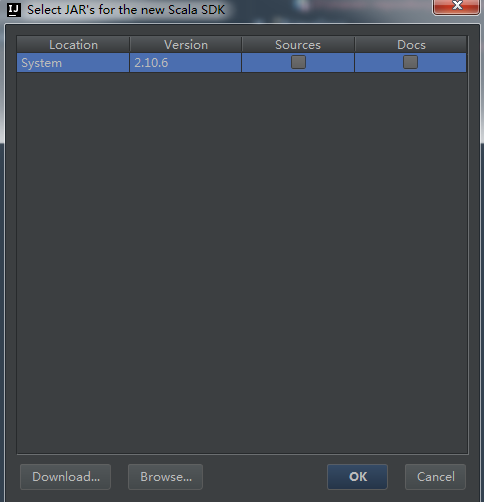

10. Add the JDK and the Scala SDK libraries to the project.

11. Add the required dependencies, including Hadoop and Spark, to pom.xml:

```xml
<dependency>
    <groupId>commons-logging</groupId>
    <artifactId>commons-logging</artifactId>
    <version>1.1.1</version>
    <type>jar</type>
</dependency>
<dependency>
    <groupId>org.apache.commons</groupId>
    <artifactId>commons-lang3</artifactId>
    <version>3.1</version>
</dependency>
<dependency>
    <groupId>log4j</groupId>
    <artifactId>log4j</artifactId>
    <version>1.2.9</version>
</dependency>
<dependency>
    <groupId>junit</groupId>
    <artifactId>junit</artifactId>
    <version>4.12</version>
</dependency>
<dependency>
    <groupId>org.apache.hadoop</groupId>
    <artifactId>hadoop-client</artifactId>
    <version>2.7.1</version>
</dependency>
<dependency>
    <groupId>org.apache.hadoop</groupId>
    <artifactId>hadoop-common</artifactId>
    <version>2.7.1</version>
</dependency>
<dependency>
    <groupId>org.apache.hadoop</groupId>
    <artifactId>hadoop-hdfs</artifactId>
    <version>2.7.1</version>
</dependency>
<dependency>
    <groupId>org.apache.spark</groupId>
    <artifactId>spark-core_2.10</artifactId>
    <version>1.5.1</version>
</dependency>
<dependency>
    <groupId>org.apache.spark</groupId>
    <artifactId>spark-sql_2.10</artifactId>
    <version>1.5.1</version>
</dependency>
```

Then refresh the Maven dependencies.

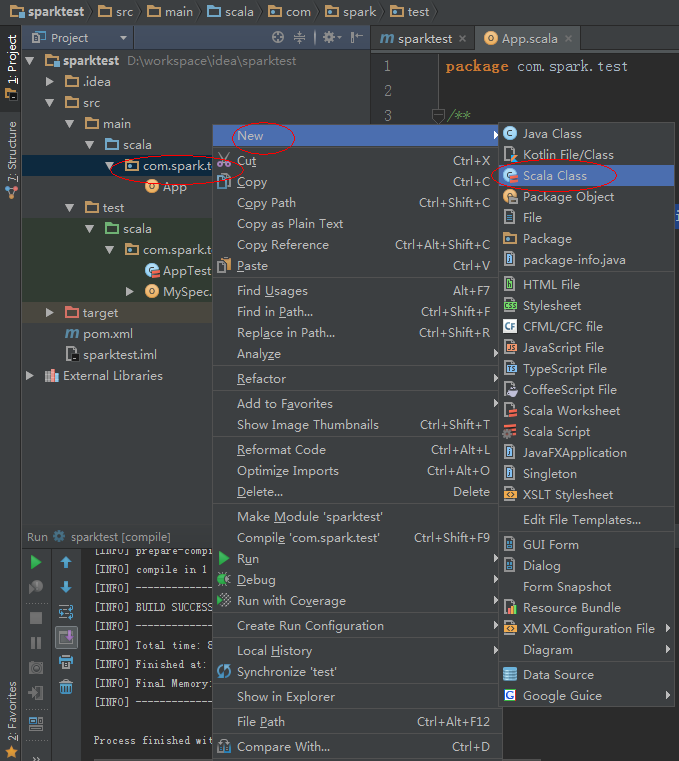

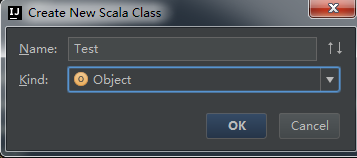
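The spark-*_2.10 artifacts above are compiled for Scala 2.10, so the Scala SDK added in step 10 should also be a 2.10.x release. Mixing Scala binary versions typically fails only at runtime (for example with a NoSuchMethodError). The following is a small sketch for checking this from inside IDEA. ScalaVersionCheck is a hypothetical name, and the expected version is hard-coded here, so it has to be kept in sync with pom.xml by hand:

```scala
object ScalaVersionCheck {
  // Binary version implied by the "_2.10" suffix of the Spark artifacts in pom.xml
  // (assumption: update this by hand if the pom changes)
  val ExpectedBinaryVersion = "2.10"

  /** Reduces a full version such as "2.10.6" to its binary version "2.10". */
  def binaryVersion(full: String): String =
    full.split('.').take(2).mkString(".")

  def main(args: Array[String]): Unit = {
    // versionNumberString is the version of the Scala library on the classpath
    val actual = binaryVersion(scala.util.Properties.versionNumberString)
    if (actual == ExpectedBinaryVersion)
      println(s"Scala $actual matches the _$ExpectedBinaryVersion Spark artifacts")
    else
      println(s"Mismatch: running Scala $actual, but pom.xml uses _$ExpectedBinaryVersion artifacts")
  }
}
```

Run it as an ordinary application in the same module. It reports the Scala library that is actually on the run classpath, which is what matters for the Spark jars.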

12. Create a new Scala Object.

The test code is:

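```scala
package com.test

import org.apache.spark.{SparkConf, SparkContext}

object Test {
  def main(args: Array[String]): Unit = {
    println("Hello World!")
    // Run Spark locally with a single thread; "test" is the application name
    val sparkConf = new SparkConf().setMaster("local").setAppName("test")
    val sparkContext = new SparkContext(sparkConf)
  }
}
```

Run it.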

If the following error is reported:

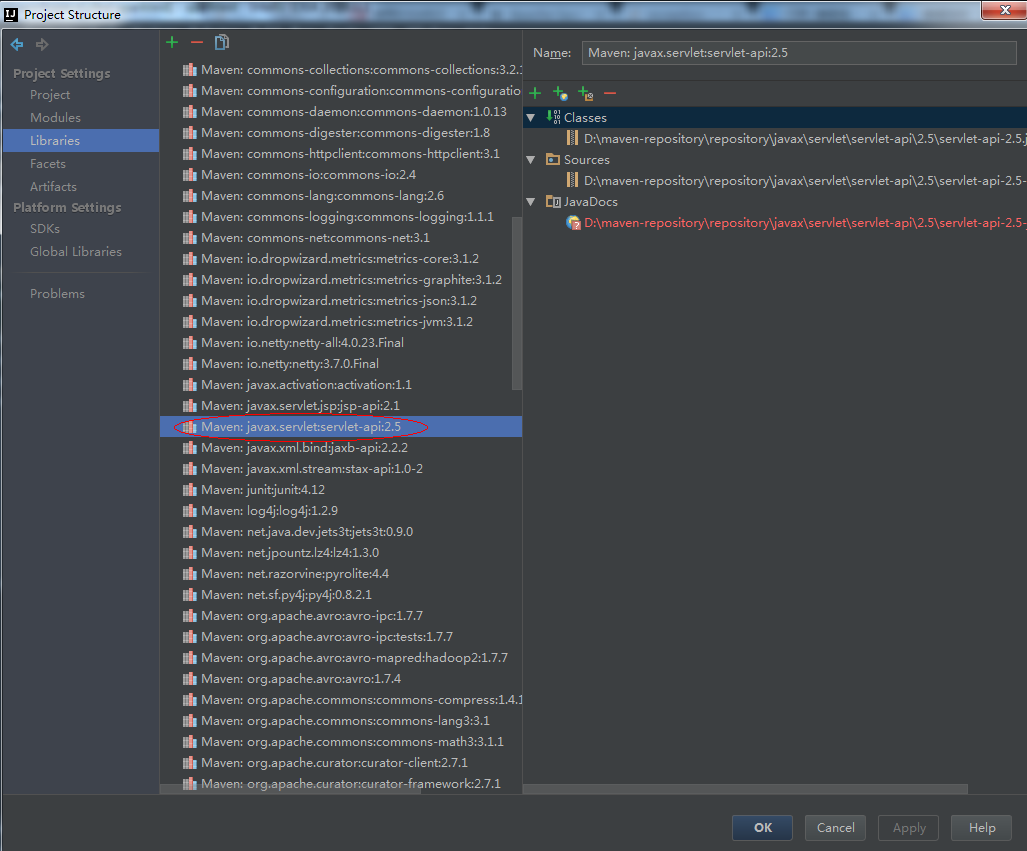

```
java.lang.SecurityException: class "javax.servlet.FilterRegistration"'s signer information does not match signer information of other classes in the same package
	at java.lang.ClassLoader.checkCerts(ClassLoader.java:952)
	at java.lang.ClassLoader.preDefineClass(ClassLoader.java:666)
	at java.lang.ClassLoader.defineClass(ClassLoader.java:794)
	at java.security.SecureClassLoader.defineClass(SecureClassLoader.java:142)
	at java.net.URLClassLoader.defineClass(URLClassLoader.java:449)
	at java.net.URLClassLoader.access$100(URLClassLoader.java:71)
	at java.net.URLClassLoader$1.run(URLClassLoader.java:361)
	at java.net.URLClassLoader$1.run(URLClassLoader.java:355)
	at java.security.AccessController.doPrivileged(Native Method)
	at java.net.URLClassLoader.findClass(URLClassLoader.java:354)
	at java.lang.ClassLoader.loadClass(ClassLoader.java:425)
	at sun.misc.Launcher$AppClassLoader.loadClass(Launcher.java:308)
	at java.lang.ClassLoader.loadClass(ClassLoader.java:358)
	at org.spark-project.jetty.servlet.ServletContextHandler.<init>(ServletContextHandler.java:136)
	at org.spark-project.jetty.servlet.ServletContextHandler.<init>(ServletContextHandler.java:129)
	at org.spark-project.jetty.servlet.ServletContextHandler.<init>(ServletContextHandler.java:98)
	at org.apache.spark.ui.JettyUtils$.createServletHandler(JettyUtils.scala:110)
	at org.apache.spark.ui.JettyUtils$.createServletHandler(JettyUtils.scala:101)
	at org.apache.spark.ui.WebUI.attachPage(WebUI.scala:78)
	at org.apache.spark.ui.WebUI$$anonfun$attachTab$1.apply(WebUI.scala:62)
	at org.apache.spark.ui.WebUI$$anonfun$attachTab$1.apply(WebUI.scala:62)
	at scala.collection.mutable.ResizableArray$class.foreach(ResizableArray.scala:59)
	at scala.collection.mutable.ArrayBuffer.foreach(ArrayBuffer.scala:47)
	at org.apache.spark.ui.WebUI.attachTab(WebUI.scala:62)
	at org.apache.spark.ui.SparkUI.initialize(SparkUI.scala:61)
	at org.apache.spark.ui.SparkUI.<init>(SparkUI.scala:74)
	at org.apache.spark.ui.SparkUI$.create(SparkUI.scala:190)
	at org.apache.spark.ui.SparkUI$.createLiveUI(SparkUI.scala:141)
	at org.apache.spark.SparkContext.<init>(SparkContext.scala:466)
	at com.test.Test$.main(Test.scala:13)
	at com.test.Test.main(Test.scala)
	at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)
	at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:57)
	at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)
	at java.lang.reflect.Method.invoke(Method.java:606)
	at com.intellij.rt.execution.application.AppMain.main(AppMain.java:144)
```

This error means that classes in the javax.servlet package are being loaded from two different jars with different signers. To fix it, simply delete the servlet-api 2.5 jar:

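The better approach is to remove the dependency that pulls it in from pom.xml, namely:

```xml
<dependency>
    <groupId>org.apache.hadoop</groupId>
    <artifactId>hadoop-client</artifactId>
    <version>2.7.1</version>
</dependency>
```

The final dependency list in pom.xml is: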

```xml
<dependencies>
    <dependency>
        <groupId>org.apache.hadoop</groupId>
        <artifactId>hadoop-common</artifactId>
        <version>2.7.1</version>
    </dependency>
    <dependency>
        <groupId>org.apache.hadoop</groupId>
        <artifactId>hadoop-hdfs</artifactId>
        <version>2.7.1</version>
    </dependency>
    <dependency>
        <groupId>org.apache.spark</groupId>
        <artifactId>spark-core_2.10</artifactId>
        <version>1.5.1</version>
    </dependency>
    <dependency>
        <groupId>org.apache.spark</groupId>
        <artifactId>spark-sql_2.10</artifactId>
        <version>1.5.1</version>
    </dependency>
    <dependency>
        <groupId>org.apache.spark</groupId>
        <artifactId>spark-hive_2.10</artifactId>
        <version>1.5.1</version>
    </dependency>
    <dependency>
        <groupId>org.apache.spark</groupId>
        <artifactId>spark-streaming_2.10</artifactId>
        <version>1.5.2</version>
    </dependency>
    <dependency>
        <groupId>org.apache.spark</groupId>
        <artifactId>spark-mllib_2.10</artifactId>
        <version>1.5.2</version>
    </dependency>
    <dependency>
        <groupId>com.databricks</groupId>
        <artifactId>spark-avro_2.10</artifactId>
        <version>2.0.1</version>
    </dependency>
</dependencies>
```
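As an alternative to dropping hadoop-client entirely, Maven can keep it and exclude only the conflicting servlet jar. The pom fragment below is a sketch, not something tested against this exact setup. It assumes the offending jar is javax.servlet:servlet-api, which you can confirm with `mvn dependency:tree -Dincludes=javax.servlet`. If other directly declared Hadoop artifacts (such as hadoop-common) also bring it in, they need the same exclusion. The fragment also moves versions into properties, because the list above mixes Spark 1.5.1 and 1.5.2 modules. The property names are arbitrary choices, not anything Maven or Spark requires.

```xml
<properties>
    <!-- arbitrary property names: one place to keep all Spark modules on the same release -->
    <spark.version>1.5.1</spark.version>
    <scala.binary.version>2.10</scala.binary.version>
    <hadoop.version>2.7.1</hadoop.version>
</properties>

<dependencies>
    <!-- keep hadoop-client, but stop it from pulling in servlet-api 2.5 -->
    <dependency>
        <groupId>org.apache.hadoop</groupId>
        <artifactId>hadoop-client</artifactId>
        <version>${hadoop.version}</version>
        <exclusions>
            <exclusion>
                <groupId>javax.servlet</groupId>
                <artifactId>servlet-api</artifactId>
            </exclusion>
        </exclusions>
    </dependency>
    <dependency>
        <groupId>org.apache.spark</groupId>
        <artifactId>spark-core_${scala.binary.version}</artifactId>
        <version>${spark.version}</version>
    </dependency>
    <!-- the other spark-* modules follow the same pattern -->
</dependencies>
```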

The following error, if reported, is not a problem. The program still runs:

```
java.io.IOException: Could not locate executable null\bin\winutils.exe in the Hadoop binaries.
	at org.apache.hadoop.util.Shell.getQualifiedBinPath(Shell.java:356)
	at org.apache.hadoop.util.Shell.getWinUtilsPath(Shell.java:371)
	at org.apache.hadoop.util.Shell.<clinit>(Shell.java:364)
	at org.apache.hadoop.util.StringUtils.<clinit>(StringUtils.java:80)
	at org.apache.hadoop.security.SecurityUtil.getAuthenticationMethod(SecurityUtil.java:611)
	at org.apache.hadoop.security.UserGroupInformation.initialize(UserGroupInformation.java:272)
	at org.apache.hadoop.security.UserGroupInformation.ensureInitialized(UserGroupInformation.java:260)
	at org.apache.hadoop.security.UserGroupInformation.loginUserFromSubject(UserGroupInformation.java:790)
	at org.apache.hadoop.security.UserGroupInformation.getLoginUser(UserGroupInformation.java:760)
	at org.apache.hadoop.security.UserGroupInformation.getCurrentUser(UserGroupInformation.java:633)
	at org.apache.spark.util.Utils$$anonfun$getCurrentUserName$1.apply(Utils.scala:2084)
	at org.apache.spark.util.Utils$$anonfun$getCurrentUserName$1.apply(Utils.scala:2084)
	at scala.Option.getOrElse(Option.scala:120)
	at org.apache.spark.util.Utils$.getCurrentUserName(Utils.scala:2084)
	at org.apache.spark.SparkContext.<init>(SparkContext.scala:311)
	at com.test.Test$.main(Test.scala:13)
	at com.test.Test.main(Test.scala)
	at sun.reflect.NativeMethodAccessorImpl.invoke0(Native Method)
	at sun.reflect.NativeMethodAccessorImpl.invoke(NativeMethodAccessorImpl.java:57)
	at sun.reflect.DelegatingMethodAccessorImpl.invoke(DelegatingMethodAccessorImpl.java:43)
	at java.lang.reflect.Method.invoke(Method.java:606)
	at com.intellij.rt.execution.application.AppMain.main(AppMain.java:144)
```

The normal output of a successful run looks like this:

```
Hello World!
Using Spark's default log4j profile: org/apache/spark/log4j-defaults.properties
16/09/19 11:21:29 INFO SparkContext: Running Spark version 1.5.1
16/09/19 11:21:29 ERROR Shell: Failed to locate the winutils binary in the hadoop binary path
java.io.IOException: Could not locate executable null\bin\winutils.exe in the Hadoop binaries.
	... (same stack trace as shown above)
16/09/19 11:21:29 WARN NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable
16/09/19 11:21:30 INFO SecurityManager: Changing view acls to: pc
16/09/19 11:21:30 INFO SecurityManager: Changing modify acls to: pc
16/09/19 11:21:30 INFO SecurityManager: SecurityManager: authentication disabled; ui acls disabled; users with view permissions: Set(pc); users with modify permissions: Set(pc)
16/09/19 11:21:30 INFO Slf4jLogger: Slf4jLogger started
16/09/19 11:21:31 INFO Remoting: Starting remoting
16/09/19 11:21:31 INFO Remoting: Remoting started; listening on addresses :[akka.tcp://sparkDriver@192.168.51.143:52500]
16/09/19 11:21:31 INFO Utils: Successfully started service 'sparkDriver' on port 52500.
16/09/19 11:21:31 INFO SparkEnv: Registering MapOutputTracker
16/09/19 11:21:31 INFO SparkEnv: Registering BlockManagerMaster
16/09/19 11:21:31 INFO DiskBlockManager: Created local directory at C:\Users\pc\AppData\Local\Temp\blockmgr-f9ea7f8c-68f9-4f9b-a31e-b87ec2e702a4
16/09/19 11:21:31 INFO MemoryStore: MemoryStore started with capacity 966.9 MB
16/09/19 11:21:31 INFO HttpFileServer: HTTP File server directory is C:\Users\pc\AppData\Local\Temp\spark-64cccfb4-46c8-4266-92c1-14cfc6aa2cb3\httpd-5993f955-0d92-4233-b366-c9a94f7122bc
16/09/19 11:21:31 INFO HttpServer: Starting HTTP Server
16/09/19 11:21:31 INFO Utils: Successfully started service 'HTTP file server' on port 52501.
16/09/19 11:21:31 INFO SparkEnv: Registering OutputCommitCoordinator
16/09/19 11:21:31 INFO Utils: Successfully started service 'SparkUI' on port 4040.
16/09/19 11:21:31 INFO SparkUI: Started SparkUI at http://192.168.51.143:4040
16/09/19 11:21:31 WARN MetricsSystem: Using default name DAGScheduler for source because spark.app.id is not set.
16/09/19 11:21:31 INFO Executor: Starting executor ID driver on host localhost
16/09/19 11:21:31 INFO Utils: Successfully started service 'org.apache.spark.network.netty.NettyBlockTransferService' on port 52520.
16/09/19 11:21:31 INFO NettyBlockTransferService: Server created on 52520
16/09/19 11:21:31 INFO BlockManagerMaster: Trying to register BlockManager
16/09/19 11:21:31 INFO BlockManagerMasterEndpoint: Registering block manager localhost:52520 with 966.9 MB RAM, BlockManagerId(driver, localhost, 52520)
16/09/19 11:21:31 INFO BlockManagerMaster: Registered BlockManager
16/09/19 11:21:31 INFO SparkContext: Invoking stop() from shutdown hook
16/09/19 11:21:32 INFO SparkUI: Stopped Spark web UI at http://192.168.51.143:4040
16/09/19 11:21:32 INFO DAGScheduler: Stopping DAGScheduler
16/09/19 11:21:32 INFO MapOutputTrackerMasterEndpoint: MapOutputTrackerMasterEndpoint stopped!
16/09/19 11:21:32 INFO MemoryStore: MemoryStore cleared
16/09/19 11:21:32 INFO BlockManager: BlockManager stopped
16/09/19 11:21:32 INFO BlockManagerMaster: BlockManagerMaster stopped
16/09/19 11:21:32 INFO OutputCommitCoordinator$OutputCommitCoordinatorEndpoint: OutputCommitCoordinator stopped!
16/09/19 11:21:32 INFO SparkContext: Successfully stopped SparkContext
16/09/19 11:21:32 INFO ShutdownHookManager: Shutdown hook called
16/09/19 11:21:32 INFO ShutdownHookManager: Deleting directory C:\Users\pc\AppData\Local\Temp\spark-64cccfb4-46c8-4266-92c1-14cfc6aa2cb3

Process finished with exit code 0
```

The development environment is now set up.
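The winutils error is harmless for this test. You can silence it by pointing Hadoop at a directory that contains bin\winutils.exe, either through the `hadoop.home.dir` system property or through the `HADOOP_HOME` environment variable. Either must be in place before the SparkContext is created, because Hadoop's Shell class reads the location when it is first loaded. The following is a minimal sketch. It assumes you have separately obtained a winutils.exe that matches your Hadoop version and placed it under a hypothetical `D:\hadoop\bin`. `WinutilsSetup` and `configureHadoopHome` are illustrative names:

```scala
import java.io.File

object WinutilsSetup {
  /** Sets hadoop.home.dir and returns the winutils.exe file if it actually exists there. */
  def configureHadoopHome(hadoopHome: String): Option[File] = {
    System.setProperty("hadoop.home.dir", hadoopHome)
    val winutils = new File(new File(hadoopHome, "bin"), "winutils.exe")
    if (winutils.isFile) Some(winutils) else None
  }

  def main(args: Array[String]): Unit = {
    // Hypothetical default location; pass a different directory as the first argument
    val home = if (args.nonEmpty) args(0) else "D:\\hadoop"
    configureHadoopHome(home) match {
      case Some(f) => println(s"winutils found at ${f.getPath}")
      case None    => println(s"warning: no bin\\winutils.exe under $home")
    }
    // Create the SparkConf and SparkContext only after this point.
  }
}
```

In the test program, the same `System.setProperty` call can simply be placed as the first statement of `main`.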

V. Package a Jar

1. Create a new Scala Object.

The code is:

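```scala
package com.test

import org.apache.spark.{SparkConf, SparkContext}

/**
 * Created by pc on 2016/9/20.
 */
object WorldCount {
  def main(args: Array[String]): Unit = {
    val dataFile = args(0) // input path, e.g. /test/data.txt on HDFS
    val output = args(1)   // output directory; it must not exist yet
    // No setMaster here: the master URL is supplied by spark-submit
    val sparkConf = new SparkConf().setAppName("WorldCount")
    val sparkContext = new SparkContext(sparkConf)
    val lines = sparkContext.textFile(dataFile)
    // Split each line on commas, pair every word with 1, then sum the 1s per word
    val counts = lines.flatMap(_.split(",")).map(s => (s, 1)).reduceByKey((a, b) => a + b)
    counts.saveAsTextFile(output)
    sparkContext.stop()
  }
}
```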
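Before packaging, it can help to check the counting logic without a cluster. The following sketch mirrors the same flatMap / map / reduceByKey pipeline on plain Scala collections. `LocalWordCount` and `countWords` are hypothetical names. `groupBy` followed by a sum stands in for the shuffle that `reduceByKey` performs, so this only checks the logic, not Spark's behavior.

```scala
object LocalWordCount {
  // Same steps as the Spark job, applied to an in-memory Seq instead of an RDD
  def countWords(lines: Seq[String]): Map[String, Int] =
    lines
      .flatMap(_.split(","))   // same comma split as WorldCount
      .map(word => (word, 1))  // pair each token with 1
      .groupBy(_._1)           // stand-in for the shuffle done by reduceByKey
      .map { case (word, pairs) => (word, pairs.map(_._2).sum) }

  def main(args: Array[String]): Unit = {
    val sample = Seq("spark,hadoop,spark", "hadoop,scala")
    // Sorted only to make the printed order stable
    countWords(sample).toSeq.sortBy(_._1).foreach(println)
  }
}
```

For the sample input this prints `(hadoop,2)`, `(scala,1)` and `(spark,2)`. These are the same pairs that `saveAsTextFile` would write, though Spark writes them to part files in no particular order.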

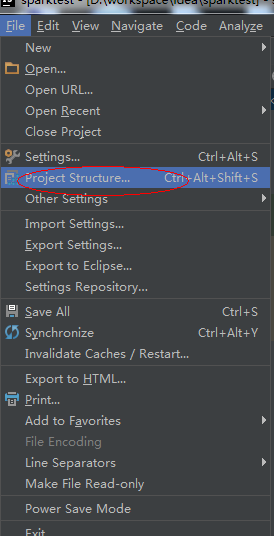

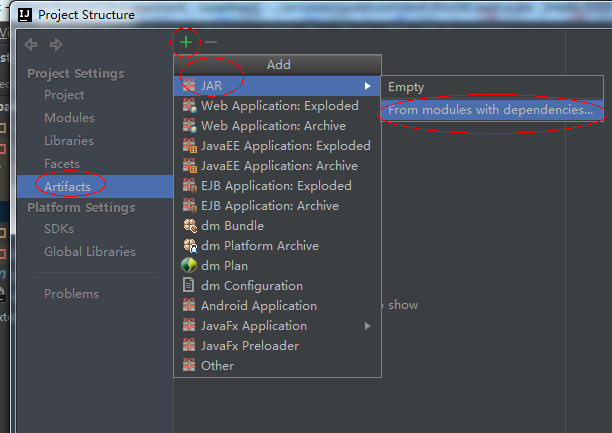

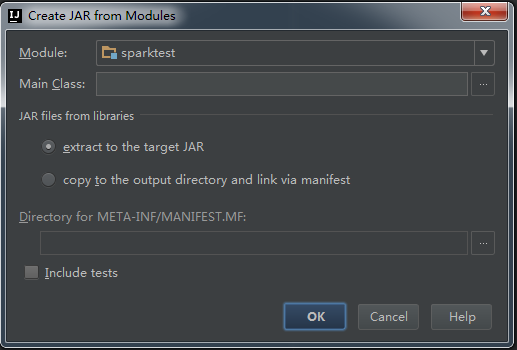

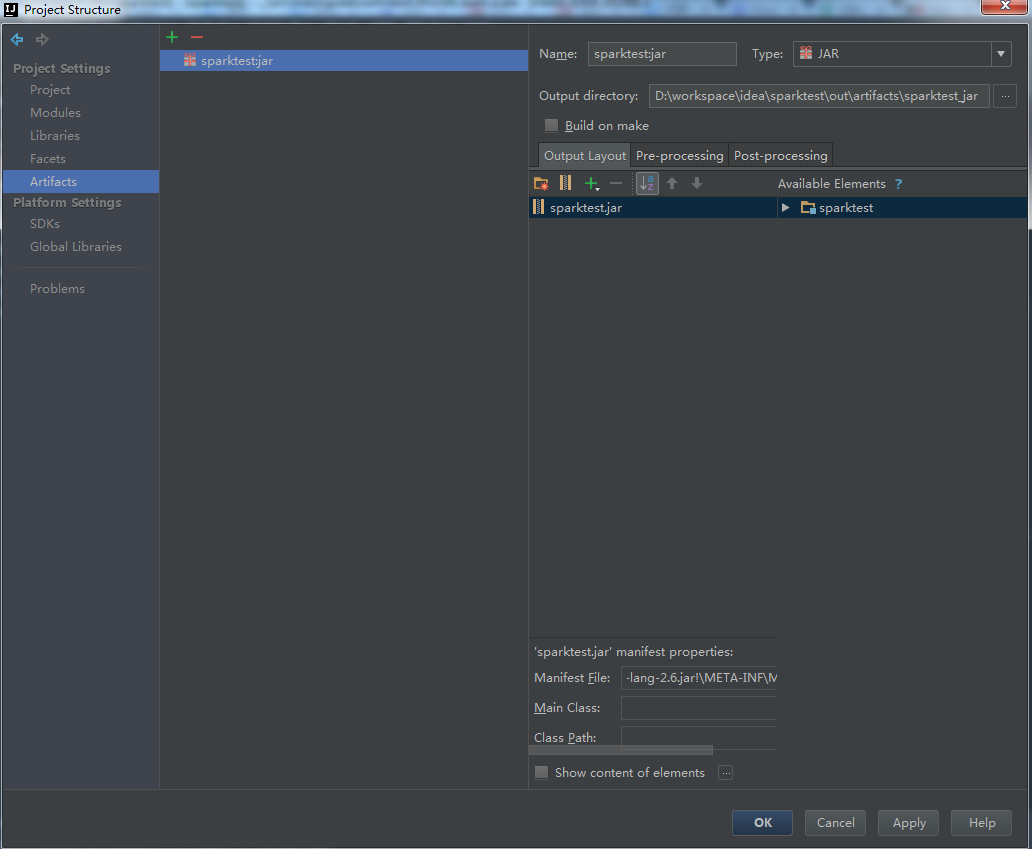

2. File -> Project Structure

3. Click OK.

You can set the output directory for the jar:

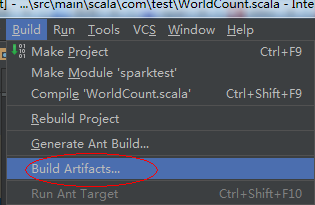

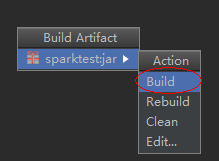

4. Build -> Build Artifacts

5. Run the job:

Put the test file in the /test/ directory on HDFS, then submit the job:

```
spark-submit --class com.test.WorldCount --master spark://192.168.18.151:7077 sparktest.jar /test/data.txt /test/test-01
```

6. If the following error occurs:

```
Exception in thread "main" java.lang.SecurityException: Invalid signature file digest for Manifest main attributes
	at sun.security.util.SignatureFileVerifier.processImpl(SignatureFileVerifier.java:240)
	at sun.security.util.SignatureFileVerifier.process(SignatureFileVerifier.java:193)
	at java.util.jar.JarVerifier.processEntry(JarVerifier.java:305)
	at java.util.jar.JarVerifier.update(JarVerifier.java:216)
	at java.util.jar.JarFile.initializeVerifier(JarFile.java:345)
	at java.util.jar.JarFile.getInputStream(JarFile.java:412)
	at sun.misc.JarIndex.getJarIndex(JarIndex.java:137)
	at sun.misc.URLClassPath$JarLoader$1.run(URLClassPath.java:674)
	at sun.misc.URLClassPath$JarLoader$1.run(URLClassPath.java:666)
	at java.security.AccessController.doPrivileged(Native Method)
	at sun.misc.URLClassPath$JarLoader.ensureOpen(URLClassPath.java:665)
	at sun.misc.URLClassPath$JarLoader.<init>(URLClassPath.java:638)
	at sun.misc.URLClassPath$3.run(URLClassPath.java:366)
	at sun.misc.URLClassPath$3.run(URLClassPath.java:356)
	at java.security.AccessController.doPrivileged(Native Method)
	at sun.misc.URLClassPath.getLoader(URLClassPath.java:355)
	at sun.misc.URLClassPath.getLoader(URLClassPath.java:332)
	at sun.misc.URLClassPath.getResource(URLClassPath.java:198)
	at java.net.URLClassLoader$1.run(URLClassLoader.java:358)
	at java.net.URLClassLoader$1.run(URLClassLoader.java:355)
	at java.security.AccessController.doPrivileged(Native Method)
	at java.net.URLClassLoader.findClass(URLClassLoader.java:354)
	at java.lang.ClassLoader.loadClass(ClassLoader.java:425)
	at java.lang.ClassLoader.loadClass(ClassLoader.java:358)
	at java.lang.Class.forName0(Native Method)
	at java.lang.Class.forName(Class.java:270)
	at org.apache.spark.util.Utils$.classForName(Utils.scala:173)
	at org.apache.spark.deploy.SparkSubmit$.org$apache$spark$deploy$SparkSubmit$$runMain(SparkSubmit.scala:641)
	at org.apache.spark.deploy.SparkSubmit$.doRunMain$1(SparkSubmit.scala:180)
	at org.apache.spark.deploy.SparkSubmit$.submit(SparkSubmit.scala:205)
	at org.apache.spark.deploy.SparkSubmit$.main(SparkSubmit.scala:120)
	at org.apache.spark.deploy.SparkSubmit.main(SparkSubmit.scala)
```

This happens because signature files copied from signed dependency jars into the merged jar no longer match its contents. To fix it, open the jar with WinRAR and delete every file in the META-INF directory except MANIFEST.MF, the .RSA files, and the maven directory.
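The manual WinRAR step can also be scripted. The following sketch copies a jar entry by entry with `java.util.zip` and drops the top-level signature files under META-INF. It is a variation on the step above, not the author's exact procedure. It removes *.SF, *.DSA, *.RSA and *.EC, which is the set usually filtered out when building fat jars, and it keeps everything else, including MANIFEST.MF and the maven directory. `StripJarSignatures` and `strip` are hypothetical names. The sketch writes a new file rather than modifying the original.

```scala
import java.io.{File, FileOutputStream, InputStream, OutputStream}
import java.util.zip.{ZipEntry, ZipFile, ZipOutputStream}

object StripJarSignatures {
  // Signature files that break verification once several jars are merged into one
  private val SignatureSuffixes = Seq(".SF", ".DSA", ".RSA", ".EC")

  def isSignatureEntry(name: String): Boolean = {
    val upper = name.toUpperCase
    upper.startsWith("META-INF/") &&
      !upper.stripPrefix("META-INF/").contains("/") && // top-level META-INF files only
      SignatureSuffixes.exists(s => upper.endsWith(s))
  }

  private def copy(in: InputStream, out: OutputStream): Unit = {
    val buf = new Array[Byte](8192)
    var n = in.read(buf)
    while (n != -1) {
      out.write(buf, 0, n)
      n = in.read(buf)
    }
  }

  /** Copies src to dst without signature files; returns the names that were dropped. */
  def strip(src: File, dst: File): Seq[String] = {
    val removed = scala.collection.mutable.ArrayBuffer.empty[String]
    val zip = new ZipFile(src)
    try {
      val out = new ZipOutputStream(new FileOutputStream(dst))
      try {
        val entries = zip.entries()
        while (entries.hasMoreElements) {
          val entry = entries.nextElement()
          if (isSignatureEntry(entry.getName)) removed += entry.getName
          else {
            // A fresh ZipEntry lets ZipOutputStream recompute sizes and CRC
            out.putNextEntry(new ZipEntry(entry.getName))
            if (!entry.isDirectory) {
              val in = zip.getInputStream(entry)
              try copy(in, out) finally in.close()
            }
            out.closeEntry()
          }
        }
      } finally out.close()
    } finally zip.close()
    removed.toList
  }

  def main(args: Array[String]): Unit = {
    // Usage: StripJarSignatures <input.jar> <output.jar>
    strip(new File(args(0)), new File(args(1))).foreach(n => println(s"removed $n"))
  }
}
```

The cleaned jar is then passed to spark-submit in place of the original.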
