READING NOTE: Deep Image Matting

TITLE: Deep Image Matting

AUTHOR: Ning Xu, Brian Price, Scott Cohen, Thomas Huang

ASSOCIATION: Beckman Institute for Advanced Science and Technology, University of Illinois at Urbana-Champaign, Adobe Research

FROM: arXiv:1703.03872

CONTRIBUTIONS

- A novel deep-learning-based algorithm is proposed that predicts the alpha matte of an image using both low-level features and high-level context.

METHOD

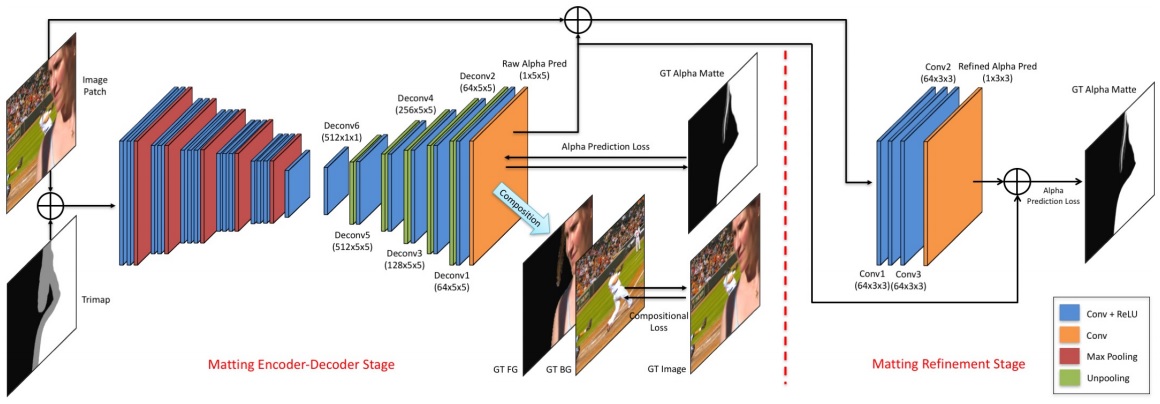

The proposed deep model has two parts.

- The first part is a CNN-based encoder-decoder network, similar to the typical FCN networks used for semantic segmentation. It takes the RGB image and its corresponding trimap as input and outputs the alpha matte of the image.

- The second part is a small convolutional network that refines the output of the first part. Its input is the original image together with the alpha matte predicted by the first part.
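Both parts consume the RGB image plus one extra single-channel map (the trimap for the first part, the predicted alpha for the second), so their inputs can be assembled the same way. The following is a minimal NumPy sketch, assuming channels-last float arrays; `stack_channels` is a hypothetical helper, and encoding the unknown trimap region as 0.5 is an assumed convention, not something the note specifies.

```python
import numpy as np

def stack_channels(image: np.ndarray, extra: np.ndarray) -> np.ndarray:
    """Concatenate an (H, W, 3) RGB image with an (H, W) map into an (H, W, 4) array."""
    if image.ndim != 3 or image.shape[-1] != 3:
        raise ValueError("image must have shape (H, W, 3)")
    if extra.shape != image.shape[:2]:
        raise ValueError("extra map must have shape (H, W)")
    # Add a trailing channel axis to the map, then append it after the RGB channels.
    return np.concatenate([image, extra[..., None]], axis=-1)

H, W = 4, 5
image = np.random.rand(H, W, 3).astype(np.float32)

# First part: image + trimap (hypothetical encoding: 0 = background, 0.5 = unknown, 1 = foreground).
trimap = np.full((H, W), 0.5, dtype=np.float32)
x1 = stack_channels(image, trimap)

# Second part: image + alpha predicted by the first part (zeros used here as a placeholder).
alpha_pred = np.zeros((H, W), dtype=np.float32)
x2 = stack_channels(image, alpha_pred)

print(x1.shape, x2.shape)  # (4, 5, 4) (4, 5, 4)
```

Frameworks such as PyTorch expect channels-first tensors, so a real pipeline would transpose to (4, H, W) before feeding the network.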

The method is illustrated in the figure above.

Matting encoder-decoder stage

The first network is trained with two losses: the alpha-prediction loss and the compositional loss.

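Alpha-prediction loss is the absolute difference between the ground truth alpha values and the predicted alpha values at each pixel. Because the absolute value is not differentiable at zero, it is approximated as

$$\mathcal{L}_{\alpha}^{i} = \sqrt{\left(\alpha_p^i - \alpha_g^i\right)^2 + \epsilon^2}, \qquad \alpha_p^i, \alpha_g^i \in [0, 1]$$

where $\alpha_p^i$ is the output of the prediction layer at pixel $i$, $\alpha_g^i$ is the ground truth alpha value at pixel $i$, and $\epsilon$ is a small constant ($10^{-6}$ in the paper).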
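The per-pixel alpha-prediction loss is a smoothed absolute difference, and it translates directly into NumPy. This is a minimal sketch: `alpha_prediction_loss` is a hypothetical name, and it returns the per-pixel loss map rather than a reduced scalar.

```python
import numpy as np

def alpha_prediction_loss(alpha_pred, alpha_gt, eps=1e-6):
    """Per-pixel smoothed absolute difference: sqrt((alpha_p - alpha_g)^2 + eps^2)."""
    alpha_pred = np.asarray(alpha_pred, dtype=np.float64)
    alpha_gt = np.asarray(alpha_gt, dtype=np.float64)
    return np.sqrt((alpha_pred - alpha_gt) ** 2 + eps ** 2)

loss_map = alpha_prediction_loss([[0.2, 0.9]], [[0.0, 1.0]])
print(loss_map)  # values very close to 0.2 and 0.1
```

The `eps` term keeps the gradient defined when the prediction equals the ground truth; for differences much larger than `eps`, the value is essentially $|\alpha_p - \alpha_g|$.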

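Compositional loss is the absolute difference between the ground truth RGB colors and the predicted RGB colors, where the predicted colors are composited from the ground truth foreground, the ground truth background and the predicted alpha matte, i.e. $c_p^i = \alpha_p^i F^i + (1 - \alpha_p^i) B^i$. The loss is defined as

$$\mathcal{L}_{c}^{i} = \sqrt{\left(c_p^i - c_g^i\right)^2 + \epsilon^2}$$

where $c$ denotes an RGB channel, $p$ denotes the image composited with the predicted alpha, and $g$ denotes the image composited with the ground truth alpha.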
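The compositional loss needs a compositing step before the comparison. The sketch below assumes float arrays with foreground `fg` and background `bg` of shape (H, W, 3) and alpha of shape (H, W); the function names are hypothetical, and it obtains the ground truth color by compositing with the ground truth alpha, which matches the input image when training data is synthesized this way.

```python
import numpy as np

def composite(fg, bg, alpha):
    """Alpha compositing C = alpha * F + (1 - alpha) * B; alpha (H, W) is broadcast over RGB."""
    a = np.asarray(alpha, dtype=np.float64)[..., None]
    return a * np.asarray(fg, dtype=np.float64) + (1.0 - a) * np.asarray(bg, dtype=np.float64)

def compositional_loss(alpha_pred, alpha_gt, fg, bg, eps=1e-6):
    """Per-pixel, per-channel smoothed absolute difference between two composites."""
    c_pred = composite(fg, bg, alpha_pred)  # colors produced by the predicted alpha
    c_gt = composite(fg, bg, alpha_gt)      # colors produced by the ground truth alpha
    return np.sqrt((c_pred - c_gt) ** 2 + eps ** 2)

fg = np.ones((1, 2, 3))   # white foreground
bg = np.zeros((1, 2, 3))  # black background
loss = compositional_loss([[0.5, 1.0]], [[1.0, 1.0]], fg, bg)
print(loss.shape)  # (1, 2, 3)
```

Unlike the alpha-prediction loss, an alpha error here is penalized in proportion to how different the foreground and background colors are at that pixel.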

Since only the alpha values inside the unknown region of the trimap need to be inferred, each pixel's loss terms are weighted according to the pixel's location, which makes the network focus on the important areas. Specifically,

$$w_i = \begin{cases} 1, & \text{if pixel } i \text{ lies inside the unknown region of the trimap} \\ 0, & \text{otherwise} \end{cases}$$
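Putting the two losses and the location weights together gives a single training objective. This is a self-contained sketch under several assumptions that the note does not state: the trimap is a uint8 array with 128 marking the unknown region, the compositional loss is averaged over RGB, the weighted sums are normalized by the number of unknown pixels, and the two losses are combined with an equal weight `w_l = 0.5`. `weighted_matting_loss` is a hypothetical name.

```python
import numpy as np

def unknown_mask(trimap, unknown_value=128):
    """w_i = 1 where the trimap marks pixel i as unknown, 0 elsewhere."""
    return (np.asarray(trimap) == unknown_value).astype(np.float64)

def weighted_matting_loss(alpha_pred, alpha_gt, fg, bg, trimap,
                          w_l=0.5, eps=1e-6, unknown_value=128):
    w = unknown_mask(trimap, unknown_value)                      # (H, W)
    a_p = np.asarray(alpha_pred, dtype=np.float64)
    a_g = np.asarray(alpha_gt, dtype=np.float64)
    fg = np.asarray(fg, dtype=np.float64)
    bg = np.asarray(bg, dtype=np.float64)

    # Alpha-prediction loss per pixel.
    l_alpha = np.sqrt((a_p - a_g) ** 2 + eps ** 2)               # (H, W)

    # Compositional loss per pixel, averaged over the RGB channels.
    c_p = a_p[..., None] * fg + (1.0 - a_p[..., None]) * bg
    c_g = a_g[..., None] * fg + (1.0 - a_g[..., None]) * bg
    l_comp = np.sqrt((c_p - c_g) ** 2 + eps ** 2).mean(axis=-1)  # (H, W)

    # Keep only unknown-region pixels; guard against an empty unknown region.
    n = max(w.sum(), 1.0)
    return w_l * (w * l_alpha).sum() / n + (1.0 - w_l) * (w * l_comp).sum() / n

trimap = np.array([[0, 128], [128, 255]], dtype=np.uint8)
alpha_gt = np.array([[0.0, 1.0], [0.0, 1.0]])
alpha_pred = np.array([[0.9, 0.5], [0.5, 1.0]])  # the 0.9 error sits in a known (background) pixel
fg, bg = np.ones((2, 2, 3)), np.zeros((2, 2, 3))
loss = weighted_matting_loss(alpha_pred, alpha_gt, fg, bg, trimap)
print(round(float(loss), 4))  # 0.5
```

The large error at the top-left pixel does not contribute because that pixel is marked as known background in the trimap.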

Matting refinement stage

The input to the second stage is the concatenation of an image patch and its alpha prediction from the first stage, which gives a 4-channel input. This part is trained after the first part has converged, using only the alpha-prediction loss. After the refinement part has also converged, the whole network is fine-tuned end to end.
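The three-phase training schedule described above can be written down as a small data structure. This is an illustrative sketch only: the part names and the `Phase` class are hypothetical, freezing the encoder-decoder during the refinement phase is an assumption, and the losses used during joint fine-tuning are left as `None` because the note does not specify them.

```python
from dataclasses import dataclass
from typing import Optional, Tuple

# Hypothetical names for the two sub-networks.
PARTS = ("encoder_decoder", "refinement")

@dataclass(frozen=True)
class Phase:
    name: str
    trainable: Tuple[str, ...]          # sub-networks updated in this phase
    losses: Optional[Tuple[str, ...]]   # losses optimized; None = not specified in the note

SCHEDULE = (
    # Phase 1: train the encoder-decoder with both losses until it converges.
    Phase("encoder-decoder", ("encoder_decoder",), ("alpha_prediction", "compositional")),
    # Phase 2: train the refinement part with the alpha-prediction loss only.
    Phase("refinement", ("refinement",), ("alpha_prediction",)),
    # Phase 3: fine-tune both parts jointly.
    Phase("joint fine-tuning", PARTS, None),
)

def frozen_parts(phase: Phase) -> Tuple[str, ...]:
    """Sub-networks whose parameters stay fixed during the given phase."""
    return tuple(p for p in PARTS if p not in phase.trainable)

for phase in SCHEDULE:
    print(f"{phase.name}: train {phase.trainable}, freeze {frozen_parts(phase)}, losses {phase.losses}")
```

Each phase would run until its own convergence criterion is met before moving on to the next one.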

SOME IDEAS

- The trimap is a very strong prior. The question is how to get it.
