Reading Note: ShuffleNet: An Extremely Efficient Convolutional Neural Network for Mobile Devices

Source: Internet  Published: 传智播客大数据百度云  Editor: 程序博客网  Time: 2024/06/05 11:20

TITLE: ShuffleNet: An Extremely Efficient Convolutional Neural Network for Mobile Devices

AUTHOR: Xiangyu Zhang, Xinyu Zhou, Mengxiao Lin, Jian Sun

ASSOCIATION: Megvii Inc (Face++)

FROM: arXiv:1707.01083

CONTRIBUTIONS

- Two operations, pointwise group convolution and channel shuffle, are proposed to greatly reduce computation cost while maintaining accuracy.
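The first operation, pointwise (1×1) group convolution, can be sketched in NumPy under a few assumptions: a single unbatched feature map and no bias. `pointwise_group_conv` is a hypothetical helper, not code from the paper. It shows that splitting the channels into g groups divides both the weight count and the mult-adds of a 1×1 layer by g.

```python
import numpy as np


def pointwise_group_conv(x, weights):
    """1x1 group convolution on a single (C_in, H, W) feature map.

    `weights` holds one (C_out // g, C_in // g) matrix per group, so group k
    only reads its own contiguous slice of C_in // g input channels.
    """
    g = len(weights)
    c_in = x.shape[0]
    assert c_in % g == 0, "input channels must split evenly into groups"
    cin_g = c_in // g
    outputs = []
    for k, w_k in enumerate(weights):
        x_k = x[k * cin_g:(k + 1) * cin_g]  # this group's input channels
        # A 1x1 convolution is a matrix product over the channel axis.
        outputs.append(np.einsum("oc,chw->ohw", w_k, x_k))
    return np.concatenate(outputs, axis=0)  # (C_out, H, W)


rng = np.random.default_rng(0)
c_in, c_out, g = 12, 12, 3
x = rng.standard_normal((c_in, 8, 8))
weights = [rng.standard_normal((c_out // g, c_in // g)) for _ in range(g)]
y = pointwise_group_conv(x, weights)

grouped_params = sum(w_k.size for w_k in weights)  # c_in * c_out / g
dense_params = c_in * c_out                         # ordinary 1x1 conv
print(y.shape, grouped_params, dense_params)        # (12, 8, 8) 48 144
```

The grouped layer is equivalent to a dense 1×1 convolution whose weight matrix is block-diagonal. That equivalence also shows the drawback: no output channel ever sees input channels outside its own group.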

MobileNet Architecture

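In MobileNet and other works, efficient depthwise separable convolutions or group convolutions strike an excellent trade-off between representation capability and computational cost. However, neither design fully takes the 1×1 convolutions (called pointwise convolutions in MobileNet) into account, and these layers require considerable computation.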

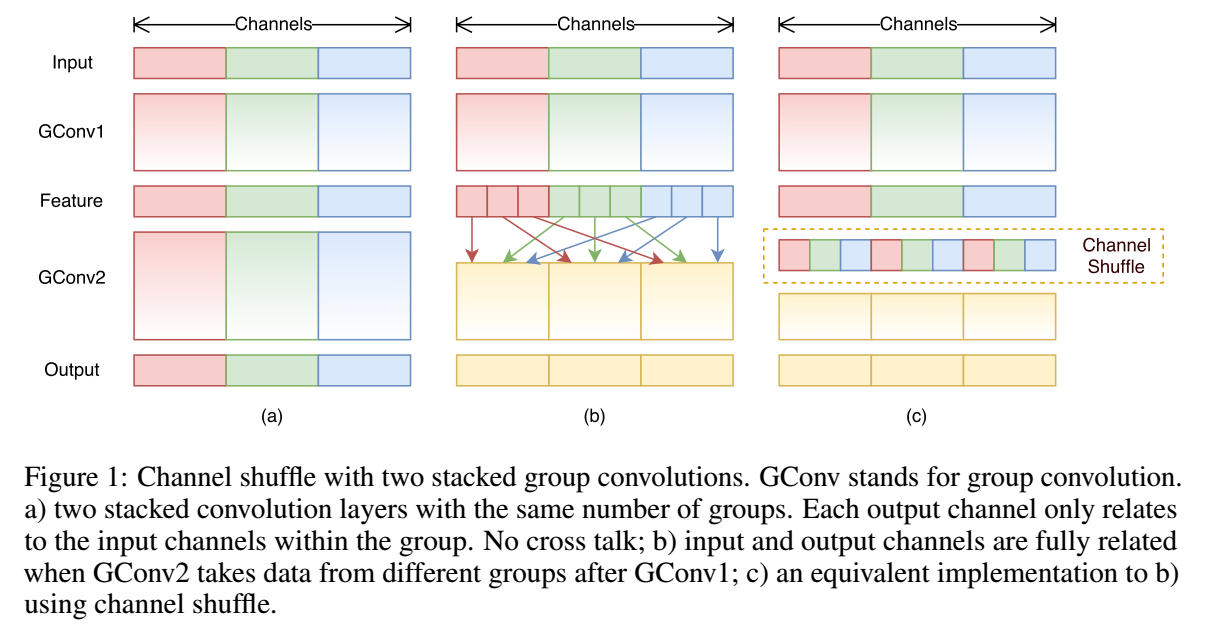
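The hypothetical helper below shows why the 1×1 layers dominate. It counts the mult-adds of one depthwise separable layer, assuming stride 1, same padding and no bias. The layer size is only an illustration and is not taken from the paper.

```python
def depthwise_separable_cost(h, w, c_in, c_out, k=3):
    """Mult-adds of a k x k depthwise conv followed by a 1x1 pointwise conv
    (stride 1, same padding, so the output is also h x w)."""
    depthwise = h * w * c_in * k * k   # one k x k filter per input channel
    pointwise = h * w * c_in * c_out   # dense 1x1 conv mixing all channels
    return depthwise, pointwise


# Illustrative layer: 14 x 14 spatial size, 512 -> 512 channels.
dw, pw = depthwise_separable_cost(14, 14, 512, 512)
print(dw, pw, round(pw / (dw + pw), 3))  # 903168 51380224 0.983
```

With equal input and output widths, the pointwise share is c / (c + k²). For this layer that is 512 / 521, so the dense 1×1 convolution accounts for roughly 98% of the layer's cost.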

Channel Shuffle for Group Convolutions

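To address this issue, a straightforward solution is to apply group convolutions on the 1×1 layers as well. However, when several group convolutions are stacked, the outputs of each group depend only on the inputs of that same group, which blocks information flow between channel groups. Channel shuffle fixes this by letting each group in the next layer receive channels from every group of the previous layer.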

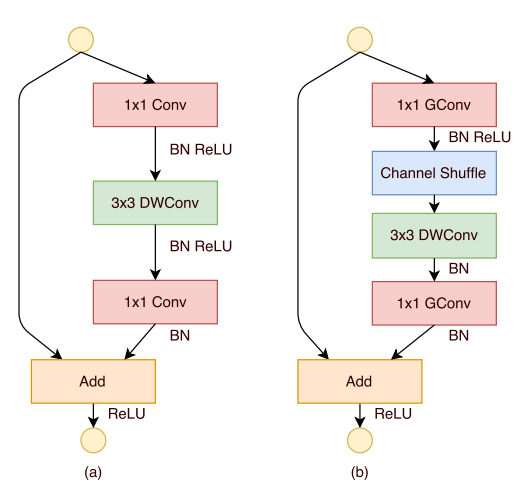
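Channel shuffle can be sketched in NumPy as the reshape, transpose and flatten described in the ShuffleNet paper. The g·n channels are viewed as a (g, n) grid, the grid is transposed, and the result is flattened back to g·n channels. `channel_shuffle` is a hypothetical helper that assumes an (N, C, H, W) layout. Tracing the channel indices makes the effect visible.

```python
import numpy as np


def channel_shuffle(x, groups):
    """Shuffle the channels of an (N, C, H, W) array across `groups` groups."""
    n_batch, c, h, w = x.shape
    assert c % groups == 0, "channels must be divisible by the number of groups"
    per_group = c // groups
    # View the channel axis as (groups, per_group) ...
    x = x.reshape(n_batch, groups, per_group, h, w)
    # ... swap those two axes ...
    x = x.transpose(0, 2, 1, 3, 4)
    # ... and flatten back to C channels (reshape copies the non-contiguous view).
    return x.reshape(n_batch, c, h, w)


# Label each of 9 channels with its index: 3 groups of 3 channels each.
x = np.arange(9).reshape(1, 9, 1, 1)
order = channel_shuffle(x, groups=3).ravel().tolist()
print(order)  # [0, 3, 6, 1, 4, 7, 2, 5, 8]
```

A group in the next group convolution reads one consecutive triple of these channels. After the shuffle, each triple holds one channel from each original group (0-2, 3-5, 6-8). The operation has no parameters and only permutes memory.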

ShuffleNet Unit

The following figure shows the ShuffleNet Unit.

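In the figure, (a) is the building block in ResNeXt, and (b) is the building block in ShuffleNet. Assume an input of size $c \times h \times w$, $m$ bottleneck channels and $g$ groups. A ResNet unit requires $hw(2cm + 9m^2)$ FLOPs and a ResNeXt unit requires $hw(2cm + 9m^2/g)$ FLOPs. A ShuffleNet unit requires only $hw(2cm/g + 9m)$ FLOPs.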

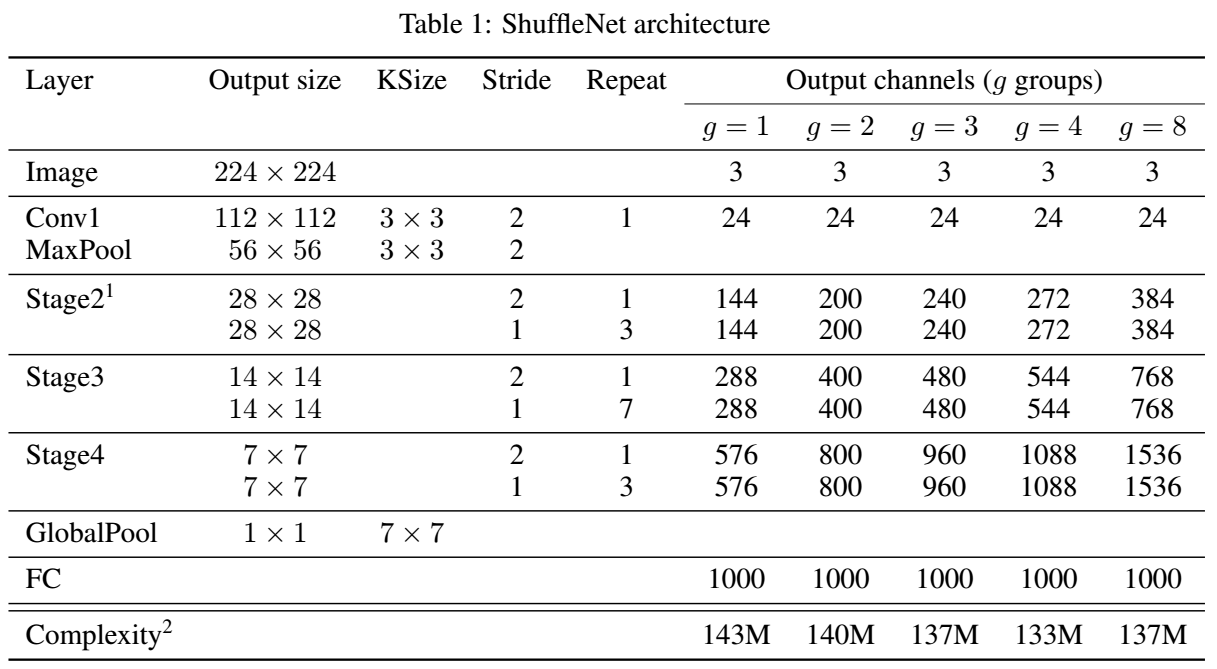
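The ShuffleNet paper gives per-unit cost estimates for a c×h×w input, bottleneck width m and g groups:

- ResNet: hw(2cm + 9m²)
- ResNeXt: hw(2cm + 9m²/g)
- ShuffleNet: hw(2cm/g + 9m)

The hypothetical helper below simply evaluates these expressions. The sizes are assumed for illustration.

```python
def unit_flops(h, w, c, m, g):
    """Per-unit FLOPs estimates quoted in the ShuffleNet paper.

    Input feature map: c x h x w; bottleneck width: m; groups: g.
    The 2cm term covers the two 1x1 layers, the m-dependent term the 3x3 layer.
    """
    hw = h * w
    return {
        "ResNet": hw * (2 * c * m + 9 * m * m),
        "ResNeXt": hw * (2 * c * m + 9 * m * m / g),  # grouped 3x3 only
        "ShuffleNet": hw * (2 * c * m / g + 9 * m),   # grouped 1x1 + depthwise 3x3
    }


# Illustrative sizes (assumed): 28 x 28 input, c = 240, m = 60, g = 3.
for name, flops in unit_flops(28, 28, 240, 60, 3).items():
    print(f"{name:>10}: {flops / 1e6:.2f} MFLOPs")
```

For these sizes, the ShuffleNet estimate is roughly a quarter of ResNeXt's and a sixth of ResNet's. Because its cost grows only linearly in m, a fixed budget allows wider feature maps.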

Network Architecture

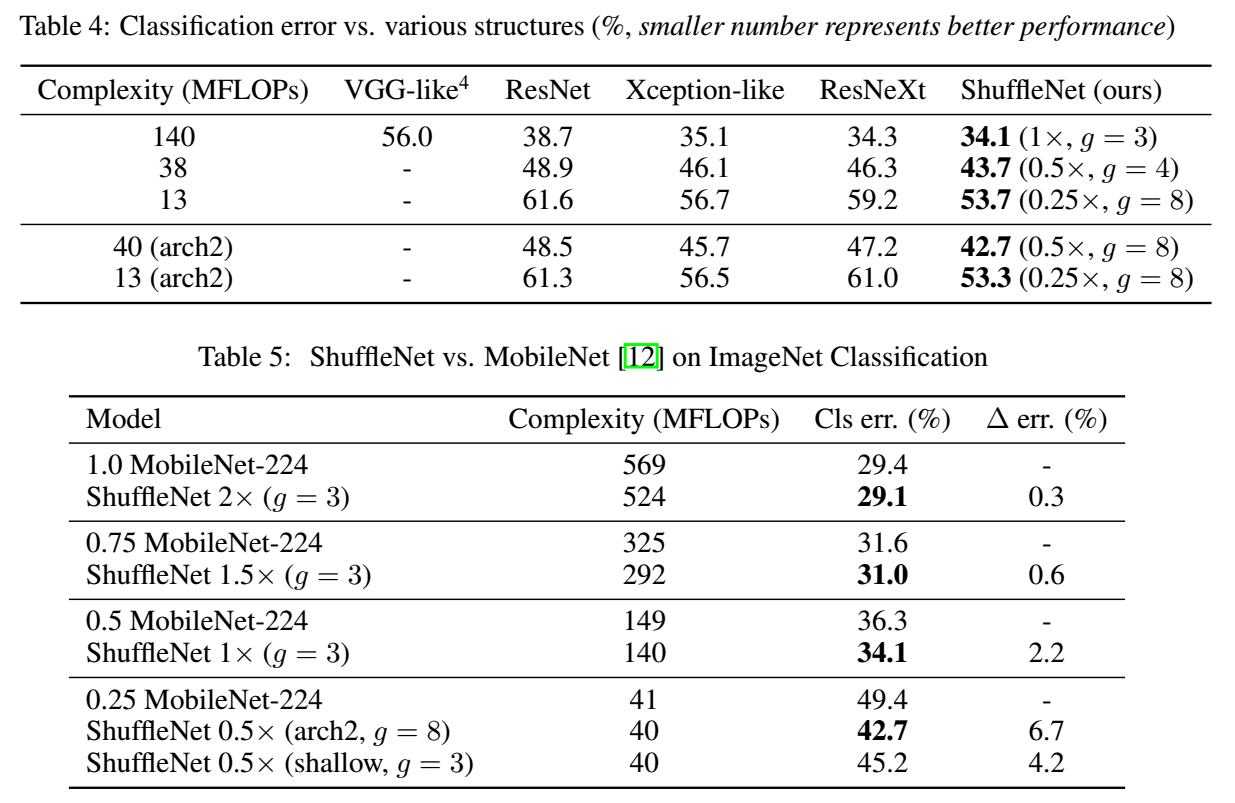

Comparison
