Deep Auto-encoder

Source: Internet  Editor: 程序博客网  Time: 2024/06/01 22:45

This blog post contains my study notes on the course videos that Professor Hung-yi Lee (李宏毅) published on YouTube.

Video: ML Lecture 16: Unsupervised Learning - Auto-encoder

- Auto-encoder

- Encoder and Decoder

- Starting from PCA

- Application: Text Retrieval

- Vector Space Model and Bag-of-Words Model

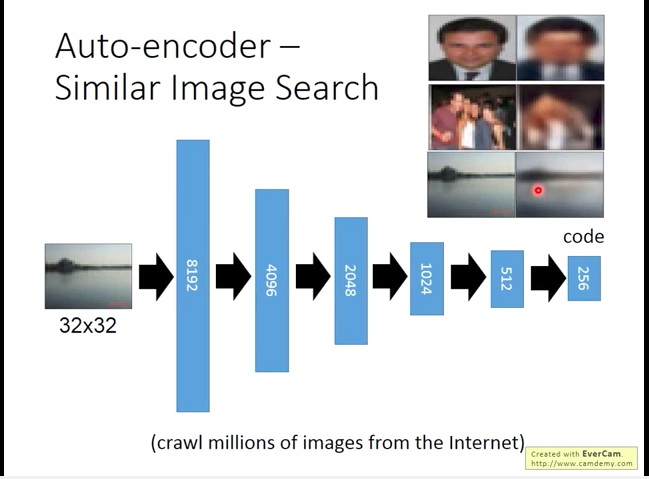

- Application: Similar Image Search

- Pre-training DNN

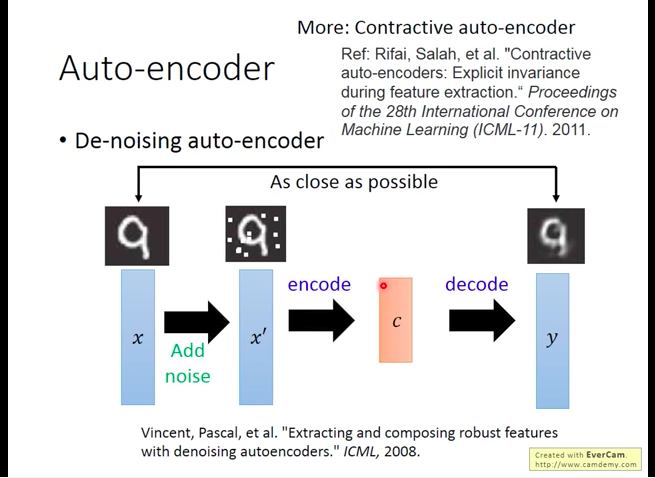

- De-noising auto-encoder (contractive auto-encoder)

- Restricted Boltzmann Machine (RBM): different from a DNN, it just looks similar

- Deep Belief Network (DBN): different from a DNN, it just looks similar

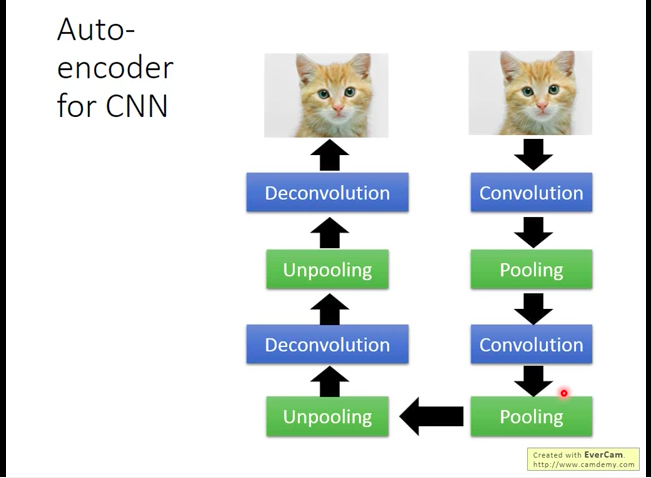

- Auto-encoder for CNN

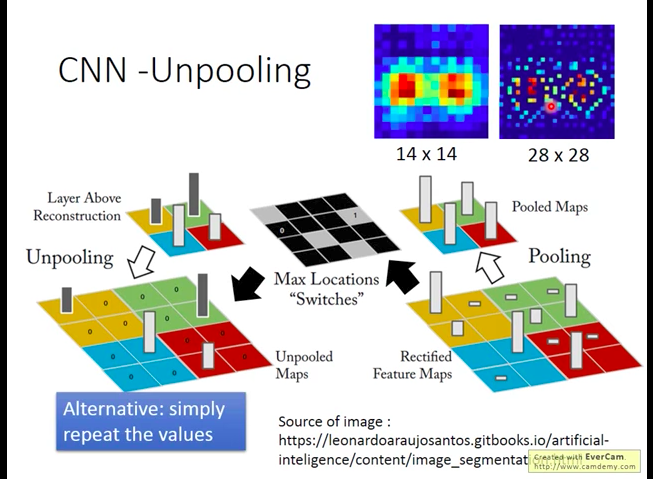

- Unpooling

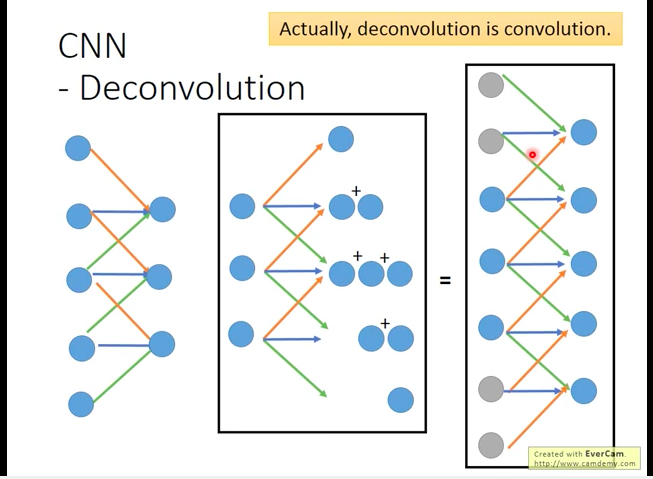

- Deconvolution

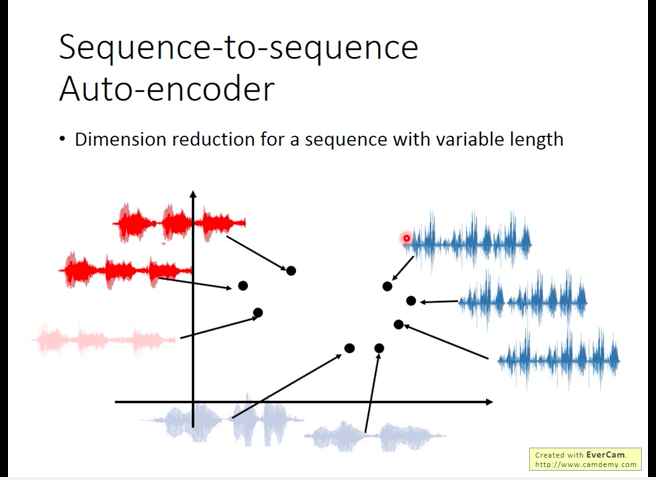

- Sequence-to-Sequence Auto-encoder

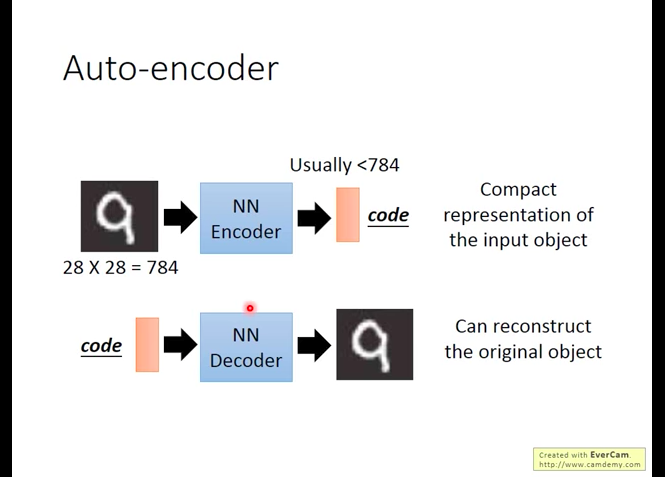

Auto-encoder

Encoder and Decoder

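Neither the encoder nor the decoder can be trained on its own: the encoder has no target for the code it outputs, and the decoder has no input code to start from. Linked together, however, they can be trained jointly, with the input itself serving as the target of the output.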

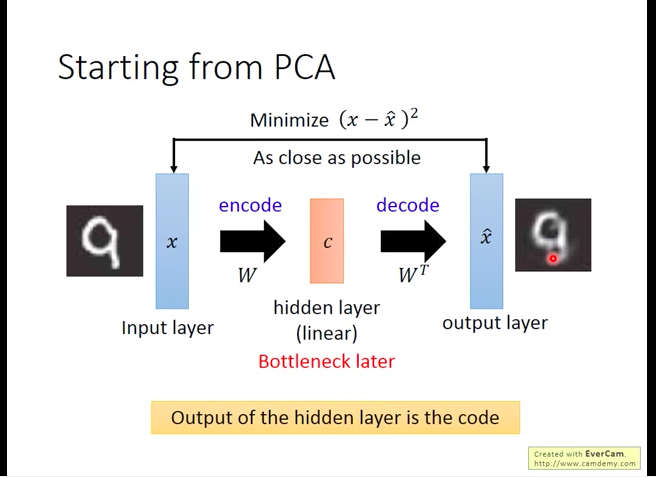
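To make the joint training concrete, here is a minimal sketch in plain Python. It assumes a purely linear encoder and decoder (no activation functions) and plain SGD on the squared reconstruction error; `train_linear_autoencoder` and `reconstruction_error` are hypothetical helper names, not from the lecture. Note that the only training signal is the input itself.

```python
import random

def train_linear_autoencoder(data, code_dim, lr=0.01, epochs=200, seed=0):
    """Train encoder W (code_dim x d) and decoder V (d x code_dim) *together*
    with SGD on the reconstruction loss 0.5 * ||V W x - x||^2."""
    rng = random.Random(seed)
    d = len(data[0])
    W = [[rng.uniform(-0.5, 0.5) for _ in range(d)] for _ in range(code_dim)]
    V = [[rng.uniform(-0.5, 0.5) for _ in range(code_dim)] for _ in range(d)]
    for _ in range(epochs):
        for x in data:
            c = [sum(W[k][j] * x[j] for j in range(d)) for k in range(code_dim)]  # encode
            y = [sum(V[i][k] * c[k] for k in range(code_dim)) for i in range(d)]  # decode
            e = [y[i] - x[i] for i in range(d)]                                  # error
            # Gradient w.r.t. the code (V^T e), computed before V is updated.
            g = [sum(V[i][k] * e[i] for i in range(d)) for k in range(code_dim)]
            for i in range(d):
                for k in range(code_dim):
                    V[i][k] -= lr * e[i] * c[k]          # dL/dV = e c^T
            for k in range(code_dim):
                for j in range(d):
                    W[k][j] -= lr * g[k] * x[j]          # dL/dW = (V^T e) x^T
    return W, V

def reconstruction_error(data, W, V):
    """Average squared error of x -> code -> reconstruction."""
    total = 0.0
    for x in data:
        c = [sum(w * xj for w, xj in zip(row, x)) for row in W]
        y = [sum(v * ck for v, ck in zip(row, c)) for row in V]
        total += sum((yi - xi) ** 2 for yi, xi in zip(y, x))
    return total / len(data)

# Toy data on the line x2 = 2 * x1, so a 1-dimensional code is enough.
data = [[t / 10, 2 * t / 10] for t in range(-10, 11)]
W0, V0 = train_linear_autoencoder(data, code_dim=1, epochs=0)   # untrained weights
W, V = train_linear_autoencoder(data, code_dim=1)
print(reconstruction_error(data, W0, V0), reconstruction_error(data, W, V))
```

The encoder receives its gradient only through the decoder (`g = V^T e`), which is exactly why the two halves have to be trained as one network.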

Starting from PCA

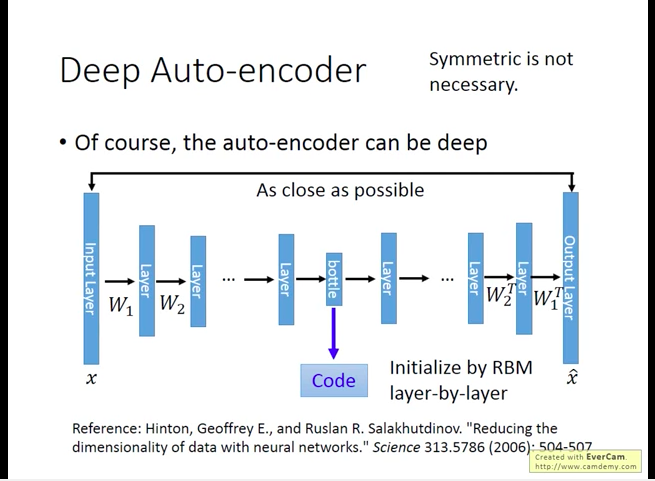

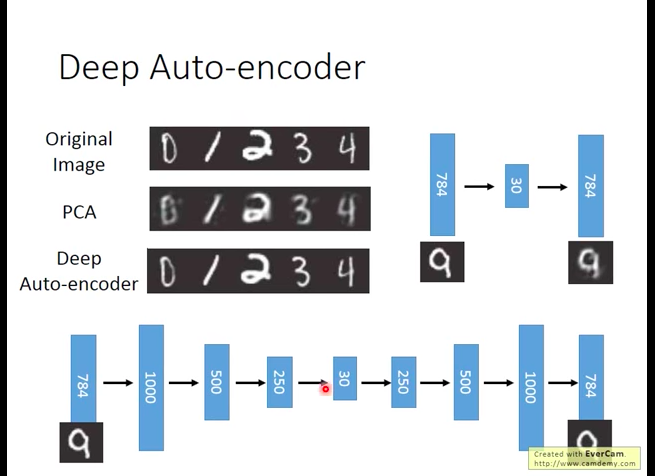

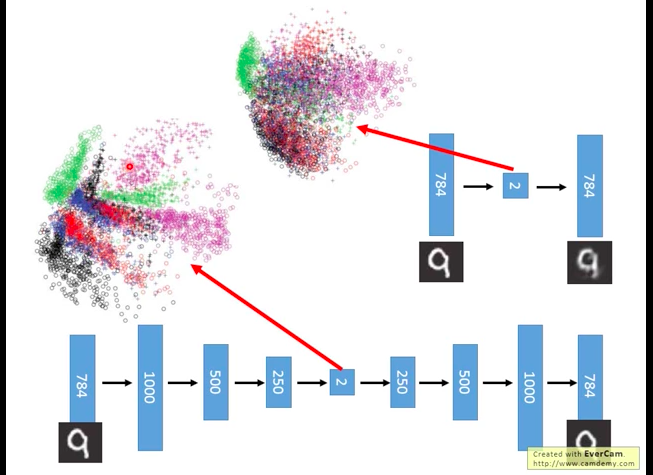

PCA can be viewed as an auto-encoder with only one (linear) hidden layer, so we can deepen it into a deep auto-encoder.
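The PCA view can be written out as follows. This is a sketch assuming mean-centered data $x$ and a $k$-row weight matrix $W$ with orthonormal rows: $W$ acts as the encoder, $W^{\top}$ as the decoder, and the code $c$ is the single linear hidden layer.

```latex
\begin{aligned}
c &= W x, \qquad \hat{x} = W^{\top} c = W^{\top} W x, \\
W^{\star} &= \arg\min_{W W^{\top} = I} \; \sum_{n} \bigl\lVert x^{n} - W^{\top} W x^{n} \bigr\rVert_2^2 .
\end{aligned}
```

The rows of the minimizer span the top-$k$ eigenvectors of the data covariance, which is what PCA computes; a deep auto-encoder replaces $Wx$ and $W^{\top}c$ with several nonlinear layers.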

Hinton (2006) proposed the deep auto-encoder design shown here, which achieves good results.

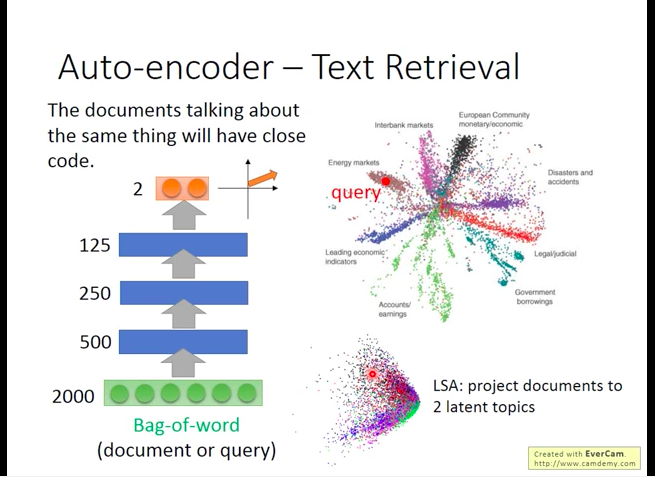

Application: Text Retrieval

Idea: compress each article into a code, so that articles (and queries) can be compared by their codes.

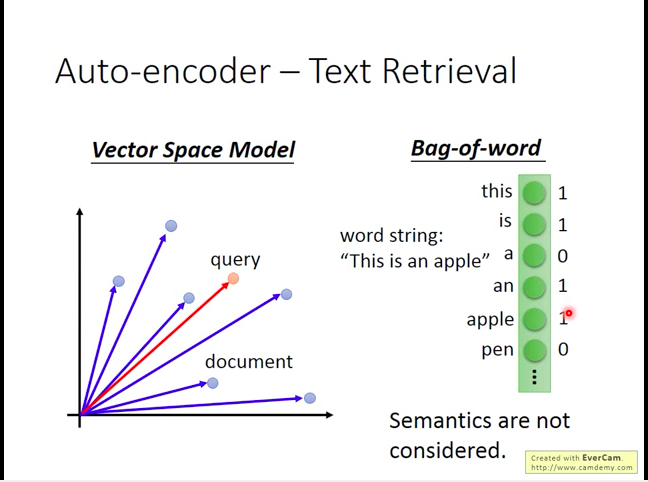

Vector Space Model and Bag-of-Words Model

The shortcoming of the Bag-of-Words model is that it ignores semantics: every word is an independent dimension, so related words are no closer to each other than unrelated ones.

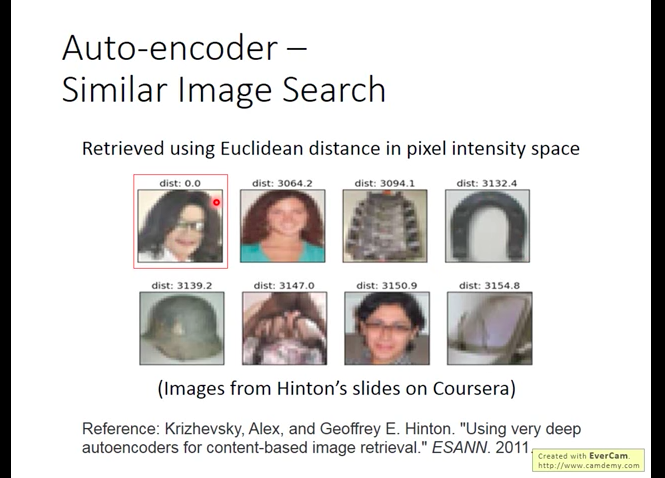

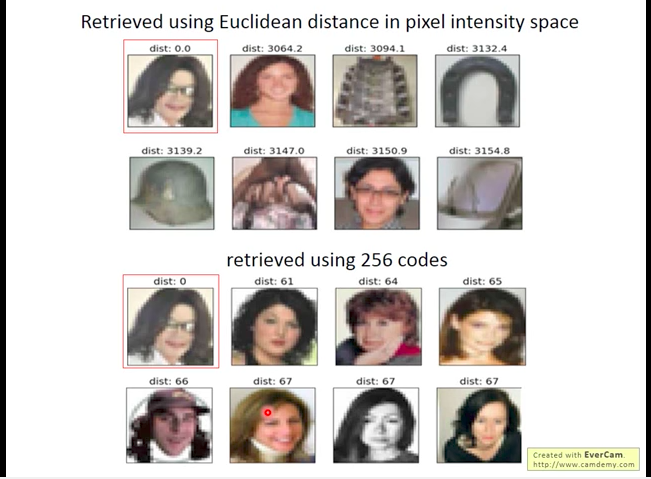
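The vector space model with bag-of-words vectors can be sketched as follows. It assumes a naive whitespace tokenizer and cosine similarity as the relevance score (both illustrative choices); `bag_of_words`, `cosine` and `retrieve` are hypothetical names. The last line shows the shortcoming described above: "apple" and "banana" share no words, so their similarity is 0 even though both are fruit.

```python
import math
from collections import Counter

def bag_of_words(text):
    """Lower-case, split on whitespace, count words (deliberately naive)."""
    return Counter(text.lower().split())

def cosine(a, b):
    """Cosine similarity between two word-count vectors."""
    dot = sum(a[w] * b[w] for w in a.keys() & b.keys())
    na = math.sqrt(sum(v * v for v in a.values()))
    nb = math.sqrt(sum(v * v for v in b.values()))
    return dot / (na * nb) if na and nb else 0.0

def retrieve(query, documents):
    """Rank documents by cosine similarity to the query (stable for ties)."""
    q = bag_of_words(query)
    scores = [(cosine(q, bag_of_words(doc)), i) for i, doc in enumerate(documents)]
    return sorted(scores, key=lambda s: s[0], reverse=True)

docs = ["I ate an apple", "I ate a banana", "the stock market fell"]
print(retrieve("apple", docs))                                # [(0.5, 0), (0.0, 1), (0.0, 2)]
print(cosine(bag_of_words("apple"), bag_of_words("banana")))  # 0.0
```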

Application: Similar Image Search

Retrieval based on pixel-wise similarity may give poor results (e.g. MJ turns out similar to a magnet…).

Instead, use a deep auto-encoder to encode each picture into a code first.

Comparing images by their codes instead of their pixels gives better results.
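The difference between pixel-wise and code-based search can be illustrated with a toy sketch. `toy_encoder` is a hypothetical stand-in for the encoder half of a trained deep auto-encoder (it only sums the pixels); a real encoder would be learned, but the retrieval step, nearest neighbour in code space, works the same way.

```python
import math

def euclidean(u, v):
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(u, v)))

def nearest(query, database, encode=lambda x: x):
    """Index of the database item closest to `query` after mapping both
    through `encode` (the identity means plain pixel-wise comparison)."""
    q = encode(query)
    return min(range(len(database)), key=lambda i: euclidean(q, encode(database[i])))

def toy_encoder(pixels):
    """Hypothetical 1-D 'code': total brightness of the image."""
    return [sum(pixels)]

query = [1, 0, 0, 0]      # one bright pixel on the left
database = [
    [0, 0, 0, 0],         # blank image
    [0, 1, 0, 0],         # the same pattern shifted by one pixel
]
print(nearest(query, database))               # 0: pixel space prefers the blank image
print(nearest(query, database, toy_encoder))  # 1: code space finds the shifted copy
```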

Pre-training DNN

Use auto-encoders to do pre-training.

First learn an auto-encoder (lower right) and apply L1 regularization so that it cannot simply ‘remember’ (copy) its input, which is a risk when the code is wider than the input; then learn another auto-encoder (middle right) on its codes, …; at last, the weights of the 500-10 layer are learned with backpropagation.

Pre-training used to be necessary for training a DNN, but with today's training techniques we can get good results without it. When we have a lot of unlabeled data, however, we can still use it for pre-training to make the final training better.
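Greedy layer-wise pre-training can be sketched like this. The sketch assumes linear auto-encoders trained with SGD (the lecture's networks use nonlinear layers) and an L1 penalty on the code, as suggested above for a code wider than its input. The layer sizes `[4, 6, 3]`, the penalty weight and all function names are hypothetical.

```python
import random

def encode(W, x):
    return [sum(w * xj for w, xj in zip(row, x)) for row in W]

def train_autoencoder(data, code_dim, lr=0.01, epochs=100, l1=0.0, seed=0):
    """Linear auto-encoder trained by SGD on 0.5*||V W x - x||^2 + l1 * sum|W x|.
    Only the encoder W is returned; the decoder V is thrown away afterwards."""
    rng = random.Random(seed)
    d = len(data[0])
    W = [[rng.uniform(-0.5, 0.5) for _ in range(d)] for _ in range(code_dim)]
    V = [[rng.uniform(-0.5, 0.5) for _ in range(code_dim)] for _ in range(d)]
    for _ in range(epochs):
        for x in data:
            c = encode(W, x)
            e = [sum(V[i][k] * c[k] for k in range(code_dim)) - x[i] for i in range(d)]
            # Gradient w.r.t. the code: V^T e plus the L1 sparsity term l1 * sign(c).
            g = [sum(V[i][k] * e[i] for i in range(d)) + l1 * ((c[k] > 0) - (c[k] < 0))
                 for k in range(code_dim)]
            for i in range(d):
                for k in range(code_dim):
                    V[i][k] -= lr * e[i] * c[k]
            for k in range(code_dim):
                for j in range(d):
                    W[k][j] -= lr * g[k] * x[j]
    return W

def greedy_pretrain(data, layer_sizes, **kwargs):
    """layer_sizes[0] is the input size; each later size is one auto-encoder's code."""
    encoders, current = [], data
    for code_dim in layer_sizes[1:]:
        W = train_autoencoder(current, code_dim, **kwargs)
        encoders.append(W)
        current = [encode(W, x) for x in current]   # codes feed the next auto-encoder
    return encoders, current

rng = random.Random(1)
data = [[rng.uniform(-1, 1) for _ in range(4)] for _ in range(30)]
encoders, codes = greedy_pretrain(data, [4, 6, 3], l1=0.01)
print([len(W) for W in encoders], len(codes[0]))   # [6, 3] 3
```

The returned encoder weights would initialize the DNN's hidden layers; the output layer (the 500-10 layer in the notes) would then be learned with backpropagation on labelled data, which is not shown here.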

De-noising auto-encoder (contractive auto-encoder)
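The usual idea behind a de-noising auto-encoder is to corrupt the input before encoding while still asking the decoder to reproduce the clean input. A minimal sketch of that loss, assuming masking noise (randomly zeroed entries) as the corruption and caller-supplied `encode`/`decode` functions; `corrupt` and `denoising_loss` are hypothetical names.

```python
import random

def corrupt(x, drop_rate, rng):
    """Masking noise: each entry is set to 0 with probability drop_rate."""
    return [0.0 if rng.random() < drop_rate else v for v in x]

def denoising_loss(x, encode, decode, drop_rate=0.3, rng=None):
    """Squared error between the reconstruction of the *noisy* input and the *clean* x."""
    if rng is None:
        rng = random.Random(0)
    x_noisy = corrupt(x, drop_rate, rng)
    y = decode(encode(x_noisy))
    return sum((yi - xi) ** 2 for yi, xi in zip(y, x))

def identity(v):
    """Placeholder encoder/decoder, only to exercise the loss."""
    return v

print(denoising_loss([1.0, 2.0], identity, identity, drop_rate=1.0))  # 5.0: everything masked
```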

Restricted Boltzmann Machine (RBM): different from a DNN, it just looks similar

Deep Belief Network (DBN): different from a DNN, it just looks similar

Auto-encoder for CNN

Unpooling
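A minimal unpooling sketch, assuming 2x2 max pooling with stride 2 and an input whose height and width are even: pooling remembers where each maximum came from, and unpooling writes the value back to that position and fills the rest with zeros. The function names are hypothetical. Some libraries use a simpler scheme that just repeats each value (Keras `UpSampling2D` does this).

```python
def max_pool_2x2(img):
    """2x2 max pooling (stride 2) that also records where each maximum came from."""
    pooled, switches = [], []
    for r in range(0, len(img), 2):
        prow, srow = [], []
        for c in range(0, len(img[0]), 2):
            window = [(img[r + dr][c + dc], (r + dr, c + dc))
                      for dr in (0, 1) for dc in (0, 1)]
            value, pos = max(window, key=lambda t: t[0])
            prow.append(value)
            srow.append(pos)
        pooled.append(prow)
        switches.append(srow)
    return pooled, switches

def unpool_2x2(pooled, switches, height, width):
    """Put each pooled value back at its recorded position; everything else is 0."""
    out = [[0] * width for _ in range(height)]
    for i, row in enumerate(pooled):
        for j, value in enumerate(row):
            r, c = switches[i][j]
            out[r][c] = value
    return out

img = [[1, 5, 2, 0],
       [3, 4, 8, 1],
       [0, 2, 6, 7],
       [9, 1, 3, 4]]
pooled, switches = max_pool_2x2(img)
print(pooled)                              # [[5, 8], [9, 7]]
print(unpool_2x2(pooled, switches, 4, 4))
# [[0, 5, 0, 0], [0, 0, 8, 0], [0, 0, 0, 7], [9, 0, 0, 0]]
```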

Deconvolution

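Deconvolution = Padding + Convolution: a deconvolution is equivalent to padding the input with zeros and then applying an ordinary convolution.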
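The equivalence can be checked on a small 1-D case. This sketch assumes stride 1 and no bias; `deconv1d` writes the transposed convolution as a scatter-add, and `pad_then_conv1d` pads `len(k) - 1` zeros on each side and then convolves. Both names are hypothetical. In this formulation the kernel must be reversed for the two to agree, because the "convolution" used in CNNs is really cross-correlation.

```python
def conv1d(x, k):
    """'Valid' CNN-style convolution (cross-correlation), stride 1, no padding."""
    n = len(x) - len(k) + 1
    return [sum(x[i + j] * k[j] for j in range(len(k))) for i in range(n)]

def deconv1d(x, k):
    """Transposed convolution, stride 1: each x[i] scatters x[i] * k[j] to output i + j."""
    out = [0] * (len(x) + len(k) - 1)
    for i, xi in enumerate(x):
        for j, kj in enumerate(k):
            out[i + j] += xi * kj
    return out

def pad_then_conv1d(x, k):
    """Zero-pad len(k) - 1 on both sides, then convolve with the reversed kernel."""
    p = [0] * (len(k) - 1)
    return conv1d(p + list(x) + p, list(reversed(k)))

x, k = [1, 2, 3], [1, 0, -1]
print(deconv1d(x, k))          # [1, 2, 2, -2, -3]
print(pad_then_conv1d(x, k))   # [1, 2, 2, -2, -3]
```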

Sequence-to-Sequence Auto-encoder

Some data, such as speech or articles, is not well represented as a single vector (an article's bag-of-words vector loses its semantic meaning); it is better to represent such data as a sequence.

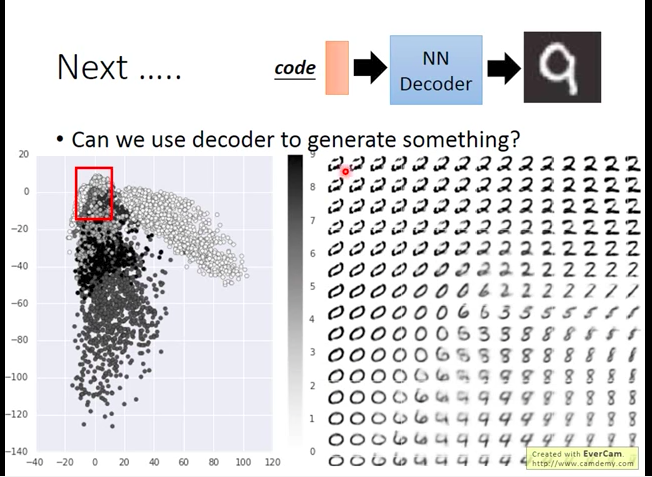

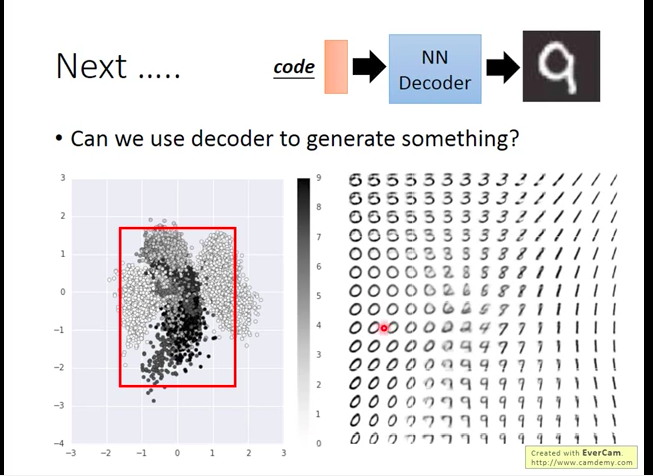
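A sequence-to-sequence auto-encoder can be sketched with two vanilla RNNs, under loud assumptions: the weights below are random and untrained, the cells are plain tanh RNNs rather than LSTMs, and every name is hypothetical. What the sketch shows is the shape of the computation: an input of any length is read into one fixed-length code, and the decoder unrolls from that code for as many steps as needed. Training (not shown) would minimize `reconstruction_loss`, e.g. with backpropagation through time.

```python
import math
import random

def make_rnn(input_dim, hidden_dim, rng):
    """Random weights for a vanilla RNN cell h_t = tanh(Wx x_t + Wh h_{t-1})."""
    Wx = [[rng.uniform(-0.5, 0.5) for _ in range(input_dim)] for _ in range(hidden_dim)]
    Wh = [[rng.uniform(-0.5, 0.5) for _ in range(hidden_dim)] for _ in range(hidden_dim)]
    return Wx, Wh

def rnn_step(Wx, Wh, x, h):
    return [math.tanh(sum(a * b for a, b in zip(Wx[i], x)) +
                      sum(a * b for a, b in zip(Wh[i], h)))
            for i in range(len(Wh))]

def encode_sequence(enc, seq):
    """Read the whole sequence; the final hidden state is the fixed-length code."""
    h = [0.0] * len(enc[1])
    for x in seq:
        h = rnn_step(*enc, x, h)
    return h

def decode_sequence(dec, Wout, code, length):
    """Start from the code and feed each output back in as the next input."""
    h, y, outputs = code, [0.0] * len(Wout), []
    for _ in range(length):
        h = rnn_step(*dec, y, h)
        y = [sum(a * b for a, b in zip(row, h)) for row in Wout]
        outputs.append(y)
    return outputs

def reconstruction_loss(seq, outputs):
    """Squared error between the input sequence and the decoder's outputs."""
    return sum((o - s) ** 2 for xs, ys in zip(seq, outputs) for s, o in zip(xs, ys))

rng = random.Random(0)
dim, hidden = 3, 4
enc, dec = make_rnn(dim, hidden, rng), make_rnn(dim, hidden, rng)
Wout = [[rng.uniform(-0.5, 0.5) for _ in range(hidden)] for _ in range(dim)]
short_seq = [[0.1, 0.2, 0.3]] * 2
long_seq = [[0.5, -0.1, 0.0]] * 7
code = encode_sequence(enc, long_seq)
print(len(encode_sequence(enc, short_seq)), len(code))       # 4 4
print(len(decode_sequence(dec, Wout, code, len(long_seq))))  # 7
```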

After imposing L2 regularization during training, we get the result below:
