CUDA SDK VolumeRender 分析

来源:互联网 发布:淘宝网上买东西 编辑:程序博客网 时间:2024/05/20 18:01

曾经遇到过cuda函数在跨编译单元调用的问题,这个例子用到了一个很巧妙的解决方法。

首先描述下这个问题,当多个cu或cpp文件互相包含的时候cu文件中的实现会被nvcc生成在多个编译单元中,从而出现重定义链接错误。具体描述见这里。

CUDA SDK中避免此问题的方法是,添加一个extern层接口,需要被外部使用的功能封装为extern接口,接口函数和实现函数放在一个cu中,调用端通过extern的声明来找到接口,这样避免了实现出现在多个编译单元内(只有函数实现所在的编译单元)。

有点乱?下面给出VolumeRender中的用法:

实现部分

1: // volumeRender_kernel.cu 2: __global__ void3: d_render(uint *d_output, uint imageW, uint imageH,

4: float density, float brightness,

5: float transferOffset, float transferScale)

6: { 7: // implemention8: }

全局接口和实现在同一文件

1: // volumeRender_kernel.cu2: extern "C"

3: void render_kernel(dim3 gridSize, dim3 blockSize, uint *d_output, uint imageW, uint imageH, 4: float density, float brightness, float transferOffset, float transferScale)

5: {6: d_render<<<gridSize, blockSize>>>( d_output, imageW, imageH, density,

7: brightness, transferOffset, transferScale);

8: }

调用端使用声明绕开包含

1: // volumeRender.cpp2: extern "C" void render_kernel(dim3 gridSize, dim3 blockSize, uint *d_output, uint imageW, uint imageH,

3: float density, float brightness, float transferOffset, float transferScale);

4:

本文主要分析VolumeRender中涉及到的一些图形算法:Ray casting、 直线平面求交。

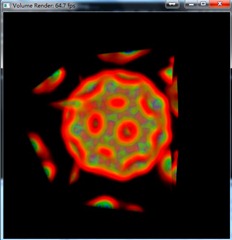

VolumeRender渲染效果

Volume Render通常用来绘制几何图形难以表现的流体、云、火焰、烟雾等效果,流行的volume render算法有:ray casting、texture-based volume rendering。SDK例子使用的是Ray casting算法。

Ray casting volume renderers shoot rays from the camera through each pixel in the output image which then intersect the cells in the volume and are combined via the transfer function to make a color for the pixel. The ray casting volume renderer is slower than the hardware accelerated methods but it produces superior images and shows all of the data instead of a resampled version.

Ray casting渲染volume rendering大体需要两步:

- 计算view ray 和 bounding volume的两个交点(far、 near)

- 沿着ray的方向在far和near间取点,用点坐标在3D texture中采样,并将一条ray的所有点的颜色累加起来,点与点之间的距离(step)可以是统一的或自适应的

在本SDK sample中使用了固定的step,求每个step颜色时先在3D texture中采样,再用得到的值在1D texture中采样得到颜色。

在VolumeRender中, d_render函数实现像素颜色的计算.

首先计算出视线参数:

1: // calculate eye ray in world space2: Ray eyeRay;

3: eyeRay.o = make_float3(mul(c_invViewMatrix, make_float4(0.0f, 0.0f, 0.0f, 1.0f)));

4: eyeRay.d = normalize(make_float3(u, v, -2.0f));

5: eyeRay.d = mul(c_invViewMatrix, eyeRay.d);

其中o为摄像机位置坐标, z为视线方向向量. c_invViewMatrix为视距阵的转置. (u v –2)是ray的方向, (0 0 2)是视平面, 摄像机在 (0 0 –4), uv是这样计算的:

1: // calculate pixel position in view space2: uint x = blockIdx.x*blockDim.x + threadIdx.x;

3: uint y = blockIdx.y*blockDim.y + threadIdx.y;

4: if ((x >= imageW) || (y >= imageH)) return;

5:

6: float u = (x / (float) imageW)*2.0f-1.0f;

7: float v = (y / (float) imageH)*2.0f-1.0f;

这里详细的分析可以看kheresy的分析, 写得十分清楚.

有了ray, 现在需要计算交点, 这在SDK中由intersectBox函数实现

1: // calculate interset with box parallel to coordinate axes2: __device__

3: int intersectBox(Ray r, float3 boxmin, float3 boxmax, float *tnear, float *tfar)

4: { 5: // compute intersection of ray with all six bbox planes 6: float3 invR = make_float3(1.0f) / r.d; 7: float3 tbot = invR * (boxmin - r.o); 8: float3 ttop = invR * (boxmax - r.o);9:

10: // re-order intersections to find smallest and largest on each axis 11: float3 tmin = fminf(ttop, tbot); 12: float3 tmax = fmaxf(ttop, tbot);13:

14: // find the largest tmin and the smallest tmax 15: float largest_tmin = fmaxf(fmaxf(tmin.x, tmin.y), fmaxf(tmin.x, tmin.z)); 16: float smallest_tmax = fminf(fminf(tmax.x, tmax.y), fminf(tmax.x, tmax.z));17:

18: *tnear = largest_tmin;

19: *tfar = smallest_tmax;

20:

21: return smallest_tmax > largest_tmin;22: }

由代码注释中可以看出, 这里先求ray和box所在六个面交点, 再按照这里的规则找到真正的交点. 计算直线与六个平面的交点只用了三行代码, 很漂亮. collision detection是很耗时的计算, 这里通过把volume box转换到(-1 –1 -1), (1 1 1), 以及平行坐标轴的技巧, 将复杂度降为O(1), 可以作为elegant code的典范了.

下面是计算每条ray的颜色的代码片段, 这里为了帮助大家理解对注释和代码稍做修改

1: // march along ray from front to back, accumulating color2: float4 sum = make_float4(0.0f); // final color

3: float t = tnear; // position between tnear and tfar

4: float3 pos = eyeRay.o + eyeRay.d*tnear; 5: float3 step = eyeRay.d*tstep;6:

7: // opacity color and transparent value for each pos in [-1, 1] cube8: for(int i=0; i<maxSteps; i++) {

9: // remap position to [0, 1] coordinates 10: float sample = tex3D(tex, pos.x*0.5f+0.5f, pos.y*0.5f+0.5f, pos.z*0.5f+0.5f);11:

12: // lookup the color and transparent from transferTex 13: float4 col = tex1D(transferTex, (sample-transferOffset)*transferScale);14: col.w *= density;

15:

16: // pre-multiply alpha17: col.x *= col.w;

18: col.y *= col.w;

19: col.z *= col.w;

20:

21: // front-to-back color blending 22: sum = sum + col*(1.0f - sum.w);

23:

24: // exit early if opaque 25: if (sum.w > opacityThreshold) 26: break;27:

28: t += tstep;

29: if (t > tfar) break;

30:

31: pos += step;

32: }

这段代码中的Ray位置的计算, 和颜色, 透明度累加的方式值得深入学习.

Ray casting做volume render的缺点是计算量大, 首先每条ray都需要做求交, 再对每个step进行采样和叠加. 计算量受画面解析度(Ray 数量)和场景复杂程度影响很大. 但是Ray和Ray之间没有数据交换, 因此容易并行. 这个例子就是利用这个特点, 为每个Ray分配一个线程(由CUDA管理虚拟的二维线程集合). 在512*512分辨率下达到了60+fps的效果.

以上就是volumeRender对颜色计算的分析. 欢迎大家一起研究学习.

参考

Volume Render 技术

本文主要分析CUDA SDK sample如何同OpenGL相结合.

在CUDA中调用OpenGL主要有以下几个要点:

- Interoperability with OpenGL requires that the CUDA device be specified by cudaGLSetGLDevice() before any other runtime calls.

- Register resource to CUDA before mapping. 一个资源只需注册一次

- After registering to CUDA, a resource should be mapped before accessing with CUDA function and unmapped after accessing it by calling cudaGraphicsMapResources() and cudaGraphicsUnmapResources().

- A mapped resource can be read from or written to by kernels using the device memory address returned by cudaGraphicsResourceGetMappedPointer()

for buffers and cudaGraphicsSubResourceGetMappedArray() for CUDA arrays. - DO NOT access a resource through OpenGL or Direct3D while it is mapped to CUDA, cause it will produce undefined results.

整体伪代码

1: set_OpenGL_device();

2: register_resources();

3:

4: while( is_running ) 5: {6: map_resource();

7: resource_pointer *pointer = get_mapped_pointetr();

8: process_using_cuda( pointer );

9: unmap_resource();

10: do_normal_rendering();

11: }

12: unregister_resources();

选择设备

1: // sets device as the current device for the calling host thread.2: extern __host__ cudaError_t CUDARTAPI cudaGLSetGLDevice(int device);

本例中被封装在chooseCudaDevice()函数中, 自动选择性能最佳的device.

资源创建和注册

这里使用的资源是Pixel Buffer Object : The buffer object storing pixel data is called Pixel Buffer Object (PBO). initPixelBuffer()函数负责创建并注册PBO.

1: // OpenGL pixel buffer object2: GLuint pbo = 0;

3:

4: // create pixel buffer object for display5: glGenBuffersARB(1, &pbo);

6: glBindBufferARB(GL_PIXEL_UNPACK_BUFFER_ARB, pbo);

7: glBufferDataARB(GL_PIXEL_UNPACK_BUFFER_ARB, width*height*sizeof(GLubyte)*4, 0, GL_STREAM_DRAW_ARB);8: glBindBufferARB(GL_PIXEL_UNPACK_BUFFER_ARB, 0);

9:

10: // register this buffer object with CUDA11: cutilSafeCall(cudaGraphicsGLRegisterBuffer(&cuda_pbo_resource, pbo, cudaGraphicsMapFlagsWriteDiscard));

使用资源

1: // CUDA Graphics Resource (to transfer PBO) 2: struct cudaGraphicsResource *cuda_pbo_resource; 3:

4: // render image using CUDA 5: void render() 6: { 7: // Copy inverse view matrix to const device memory8: copyInvViewMatrix(invViewMatrix, sizeof(float4)*3);

9:

10: // Map graphics resources for access by CUDA11: uint *d_output;

12: cutilSafeCall(cudaGraphicsMapResources(1, &cuda_pbo_resource, 0));

13:

14: // Get CUDA device pointer15: size_t num_bytes;

16: cutilSafeCall(cudaGraphicsResourceGetMappedPointer((void **)&d_output, &num_bytes, 17: cuda_pbo_resource));

18:

19: // clear image20: cutilSafeCall(cudaMemset(d_output, 0, width*height*4));

21:

22: // call CUDA kernel, writing results to PBO23: render_kernel(gridSize, blockSize, d_output, width, height, density, brightness, transferOffset, transferScale);

24:

25: cutilSafeCall(cudaGraphicsUnmapResources(1, &cuda_pbo_resource, 0));

26: }

这里的cutilSafeCall()是cuda util中的函数, 负责log错误. render_kernel()是前面的d_render()函数负责写入计算出的颜色到PBO, d_output是map得到的供CUDA存取的指向PBO内存的指针.

显示

1: // display results using OpenGL 2: void display() 3: { 4: // use OpenGL to build view matrix5: BuildViewMartix();

6:

7: // prepare pbo piexl8: render();

9:

10: // display results11: glClear(GL_COLOR_BUFFER_BIT);

12:

13: // draw image from PBO14: glPixelStorei(GL_UNPACK_ALIGNMENT, 1);

15:

16: // copy from pbo to texture17: glBindBufferARB(GL_PIXEL_UNPACK_BUFFER_ARB, pbo);

18: glBindTexture(GL_TEXTURE_2D, tex);

19: glTexSubImage2D(GL_TEXTURE_2D, 0, 0, 0, width, height, GL_RGBA, GL_UNSIGNED_BYTE, 0);

20: glBindBufferARB(GL_PIXEL_UNPACK_BUFFER_ARB, 0);

21:

22: // draw textured quad23: glEnable(GL_TEXTURE_2D);

24: glBegin(GL_QUADS);

25: glTexCoord2f(0, 0); glVertex2f(0, 0);

26: glTexCoord2f(1, 0); glVertex2f(1, 0);

27: glTexCoord2f(1, 1); glVertex2f(1, 1);

28: glTexCoord2f(0, 1); glVertex2f(0, 1);

29: glEnd();

30:

31: glDisable(GL_TEXTURE_2D);

32: glBindTexture(GL_TEXTURE_2D, 0);

33:

34: glutSwapBuffers();

35: glutReportErrors();

36:

37: cutilCheckError(cutStopTimer(timer));

38:

39: computeFPS();

40: }

其中render()就是上面写入PBO的函数, 这个display()函数是由glutDisplayFunc()注册的显示函数. 也就是渲染的全过程. 为了简化函数, 中间省略了一些统计和不核心的处理.

我们可以看到, 渲染的所有效果都是由CUDA通过volume render产生的, 最后OpenGL只是把结果作为一张图片贴在我们的视口上. 这里面有两个小细节glPixelStorei()函数修改数据对齐的单位, 详细介绍在这里. 第二是如何从PBO拷贝到纹理, Song Ho的OpenGL教程介绍的非常清楚, 我就不再赘述了.

看过以上几期的分析, 希望大家对Volume Render和CUDA能有一些新的理解, 欢迎大家与我讨论学习. 下一次想分析一下这个例子的一些细节技术.

参考:

http://www.cnblogs.com/hucn/category/309207.html

- CUDA SDK VolumeRender 分析

- CUDA SDK VolumeRender 分析

- CUDA SDK VolumeRender

- CUDA SDK VolumeRender:跨编译单元调用

- CUDA SDK示例程序概览

- caffe cuda 程序分析

- cuda之多线程分析

- cuda 性能分析paper

- 扩展CUDA SDK 2.3 の convolutionSeparable

- cuda sdk 2.3 convolution separable之存疑

- cuda sdk中各文件翻译及功能

- CUDA __global__ function 参数分析

- CUDA __global__ function 参数分析

- (CUDA 编程3).CUDA硬件实现分析(一)------安营扎寨

- (CUDA 编程4).CUDA硬件实现分析(二)------规行矩步

- CUDA总结:opencv cuda模块高斯滤波函数分析

- cuda编程1 cuda安装以及实例分析

- cuda的sdk sample中的一个低级错误

- SQL server经典电子书、工具和视频教程汇总

- OpenRisc-52-run openrisc&orpmon on ml501 board

- sqr

- 浅析杀毒软件开发原理(之查毒引擎)

- SQL Server2005 异常处理机制

- CUDA SDK VolumeRender 分析

- Hello World !

- UITableView划动删除的实现

- [leetCode]Search in Rotated Sorted Array

- 大话设计模式--抽象工厂模式 Abstract Factory -- C++实现实例

- php session 跨页面传值

- 设计模式中类的关系

- tcp http 杂知识

- acm每日一练之分数加减法