DeepLearning Study Notes (II): Vectorized, PCA and Whitening

Source: Internet  Category: software testing FAQ  Editor: 程序博客网  Time: 2024/05/17 02:48

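Continuing from last time: previously I went through sparse autoencoders. Following the lecture notes, the next topic is Vectorized, which I will translate as "vectorization" for now.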

Vectorized:

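In this section the instructor teaches programming technique. Put simply, vectorization means writing code that relies on numerical operations that have already been optimized. For matrix computations, avoid for loops as much as possible and use the existing matrix operators instead. I only skimmed this part, but some of the small tricks are quite useful.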
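To make the idea concrete, here is a minimal sketch in Python with NumPy. This is an assumption on my part: the post itself uses MATLAB. The sketch computes the covariance matrix that PCA needs in two ways. The first uses an explicit loop over samples. The second uses a single matrix product, the NumPy counterpart of `sigma = (1.0/m)*x*x'`. The function names `covariance_loop` and `covariance_vectorized` and the random test data are hypothetical.

```python
import numpy as np


def covariance_loop(x):
    """Covariance of zero-mean data x (shape n x m, one sample per column), one sample at a time."""
    n, m = x.shape
    sigma = np.zeros((n, n))
    for i in range(m):
        xi = x[:, i:i + 1]      # i-th sample as an (n, 1) column vector
        sigma += xi @ xi.T      # accumulate the outer product x(i) x(i)^T
    return sigma / m


def covariance_vectorized(x):
    """Same result as one matrix product, like sigma = (1.0/m)*x*x' in MATLAB."""
    m = x.shape[1]
    return (x @ x.T) / m


rng = np.random.default_rng(0)
x = rng.normal(size=(2, 45))           # hypothetical data: 2 features, 45 samples
x -= x.mean(axis=1, keepdims=True)     # make each feature (row) zero-mean
print(np.allclose(covariance_loop(x), covariance_vectorized(x)))  # True
```

Both functions return the same matrix. On large inputs, the vectorized version is typically much faster, because the work runs inside optimized linear-algebra routines instead of an interpreted loop.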

PCA:

I had already come across PCA before. Simply put, it consists of two steps:

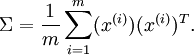

1.协方差矩阵 其中x(i)是输入样本(假设已经均值化)。

其中x(i)是输入样本(假设已经均值化)。

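2. Apply SVD to $\Sigma$ to obtain the matrix $U$. Each column of $U$ is a new direction vector for the samples (an eigenvector of $\Sigma$), and the leading columns are the principal directions. Projecting with $U^T$ then gives the PCA-rotated sample values $x_{\mathrm{rot}}$:

$$x_{\mathrm{rot}} = U^T x.$$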
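The two steps can be sketched in NumPy as follows. This is a minimal illustration under stated assumptions, not the author's code:

- Samples are stored one per column, as in the MATLAB exercise.
- The 2-D data are randomly generated.
- `pca_rotate` is a hypothetical helper name.

Because $\Sigma$ is symmetric positive semi-definite, its SVD coincides with its eigendecomposition. As a result, `s` holds the eigenvalues $\lambda_i$ in descending order.

```python
import numpy as np


def pca_rotate(x):
    """Return (u, s, x_rot) for zero-mean data x of shape (n, m), one sample per column."""
    m = x.shape[1]
    sigma = (x @ x.T) / m            # step 1: covariance matrix
    u, s, _ = np.linalg.svd(sigma)   # step 2: columns of u are principal directions, s holds eigenvalues (descending)
    x_rot = u.T @ x                  # coordinates of every sample in the new basis
    return u, s, x_rot


rng = np.random.default_rng(1)
mix = np.array([[2.0, 0.0], [1.5, 0.5]])   # hypothetical mixing matrix that makes the two features correlated
x = mix @ rng.normal(size=(2, 45))
x -= x.mean(axis=1, keepdims=True)

u, s, x_rot = pca_rotate(x)
cov_rot = (x_rot @ x_rot.T) / x.shape[1]
print(np.round(cov_rot, 6))   # diagonal equals s, off-diagonal entries are 0 up to rounding
```

The covariance of `x_rot` is diagonal, so the rotated features are uncorrelated. Since `u` is orthogonal, `u @ x_rot` recovers the original data.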

Whitening:

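We have already seen how to use PCA to reduce the dimensionality of data. Some algorithms also need a closely related preprocessing step called whitening (some literature calls it sphering). For example, suppose the training data are images. Adjacent pixels in an image are strongly correlated, so raw image data used as input are redundant. The goal of whitening is to reduce this redundancy. More formally, we want whitening to give the learning algorithm's input the following properties: (i) the features are less correlated with each other; (ii) all features have the same variance.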

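The data after PCA already satisfy the first condition (the features are uncorrelated), so only the second condition remains. The notes describe two whitening methods: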

1) PCA whitening:

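To give every input feature unit variance, we can simply scale each feature by $1/\sqrt{\lambda_i}$. Here $\lambda_i$ is the $i$-th eigenvalue of $\Sigma$, which is also the variance of $x_{\mathrm{rot},i}$:

$$x_{\mathrm{PCAwhite},i} = \frac{x_{\mathrm{rot},i}}{\sqrt{\lambda_i}}.$$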
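A minimal NumPy sketch of PCA whitening follows, still without the $\epsilon$ term. It assumes synthetic random 2-D data stored one sample per column. After row $i$ of $x_{\mathrm{rot}}$ is divided by $\sqrt{\lambda_i}$, the sample covariance should be the identity matrix.

```python
import numpy as np

rng = np.random.default_rng(2)
mix = np.array([[2.0, 0.0], [1.5, 0.5]])   # hypothetical mixing matrix -> correlated features
x = mix @ rng.normal(size=(2, 500))        # one sample per column
x -= x.mean(axis=1, keepdims=True)
m = x.shape[1]

sigma = (x @ x.T) / m
u, lam, _ = np.linalg.svd(sigma)           # lam[i] is lambda_i, the variance of row i of x_rot
x_rot = u.T @ x
x_pca_white = x_rot / np.sqrt(lam)[:, None]   # divide row i by sqrt(lambda_i) via broadcasting

cov_white = (x_pca_white @ x_pca_white.T) / m
print(np.round(cov_white, 6))              # identity matrix up to rounding
```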

2) ZCA whitening:

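There is more than one way to turn the covariance matrix of the data into the identity matrix. If $R$ is any orthogonal matrix ($R^TR = RR^T = I$), then $R\,x_{\mathrm{PCAwhite}}$ still has identity covariance, because its covariance is $R I R^T = I$. Choosing $R = U$ gives ZCA whitening:

$$x_{\mathrm{ZCAwhite}} = U\,x_{\mathrm{PCAwhite}}.$$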
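The following NumPy sketch checks both claims numerically on synthetic data. The rotation angle `theta` is an arbitrary illustrative choice. First, ZCA whitening with $R = U$ yields identity covariance. Second, so does another orthogonal $R$ (here a 2-D rotation) applied to the PCA-whitened data.

```python
import numpy as np

rng = np.random.default_rng(3)
mix = np.array([[2.0, 0.0], [1.5, 0.5]])   # hypothetical mixing matrix -> correlated features
x = mix @ rng.normal(size=(2, 500))        # one sample per column
x -= x.mean(axis=1, keepdims=True)
m = x.shape[1]

u, lam, _ = np.linalg.svd((x @ x.T) / m)
x_pca_white = (u.T @ x) / np.sqrt(lam)[:, None]   # PCA whitening (no epsilon here)

# ZCA whitening: choose R = U
x_zca_white = u @ x_pca_white
cov_zca = (x_zca_white @ x_zca_white.T) / m

# Any other orthogonal R also keeps identity covariance, e.g. a rotation by theta
theta = 0.7
r = np.array([[np.cos(theta), -np.sin(theta)],
              [np.sin(theta),  np.cos(theta)]])
x_r = r @ x_pca_white
cov_r = (x_r @ x_r.T) / m

print(np.round(cov_zca, 6))   # identity up to rounding
print(np.round(cov_r, 6))     # identity up to rounding
```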

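Note: some $\lambda_i$ can be very small (close to 0), so the division should be regularized by adding a small $\epsilon$ (typically $10^{-5}$):

$$x_{\mathrm{PCAwhite},i} = \frac{x_{\mathrm{rot},i}}{\sqrt{\lambda_i + \epsilon}}.$$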
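To see why $\epsilon$ matters, the sketch below uses hypothetical data in which the second feature is almost an exact copy of the first, which produces one eigenvalue that is nearly zero.

- Without $\epsilon$, the scale factor $1/\sqrt{\lambda_i}$ for that direction becomes enormous, and essentially pure noise would be stretched to unit variance.
- With $\epsilon = 10^{-5}$, the factor is capped at $1/\sqrt{\epsilon} \approx 316$.

```python
import numpy as np

rng = np.random.default_rng(4)
t = rng.normal(size=200)
# hypothetical, nearly redundant features: row 2 is row 1 plus tiny noise
x = np.vstack([t, t + 1e-6 * rng.normal(size=200)])
x -= x.mean(axis=1, keepdims=True)
m = x.shape[1]

u, lam, _ = np.linalg.svd((x @ x.T) / m)
print(lam)                                  # second eigenvalue is tiny, far below epsilon

scale_plain = 1.0 / np.sqrt(lam)            # unregularized: huge factor for the near-zero direction
epsilon = 1e-5
scale_reg = 1.0 / np.sqrt(lam + epsilon)    # regularized: at most 1/sqrt(epsilon), about 316

x_white_reg = (u.T @ x) * scale_reg[:, None]
var_reg = x_white_reg.var(axis=1)           # about 1 for the real direction, near 0 for the noise direction
print(var_reg)
```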

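The notes also make an interesting point about ZCA whitening: it turns out to be a rough model of how the biological eye (the retina) processes images. Neighboring parts of an image are highly correlated in brightness, so the eye perceives most nearby "pixels" as similar values. Transmitting every pixel separately to the brain (through the optic nerve) would be very wasteful. Instead, the retina performs a decorrelation operation similar to ZCA. This is carried out by the ON-type and OFF-type photoreceptor cells on the retina, which convert light signals into neural signals. The result is a less redundant representation of the input image, which is then transmitted to the brain.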

Implementation:

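Here I again followed tornadomeet's code: http://www.cnblogs.com/tornadomeet/archive/2013/03/21/2973631.html. The code is quite simple. Reading it taught me a fair amount about MATLAB, and from now on I will try to write the code myself first.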

```matlab
close all

%%================================================================
%% Step 0: Load data
%  We have provided the code to load data from pcaData.txt into x.
%  x is a 2 * 45 matrix, where the kth column x(:,k) corresponds to
%  the kth data point.
%  You do not need to change the code below.

x = load('pcaData.txt', '-ascii');
figure(1);
scatter(x(1, :), x(2, :));
title('Raw data');

%%================================================================
%% Step 1a: Implement PCA to obtain U
%  Implement PCA to obtain the rotation matrix U, which is the eigenbasis
%  of sigma.

% -------------------- YOUR CODE HERE --------------------
[n, m] = size(x);
% x = x - repmat(mean(x, 2), 1, m);  % preprocessing: zero mean (enable if the data are not already zero-mean)
sigma = (1.0 / m) * (x * x');        % covariance matrix
[u, s, v] = svd(sigma);              % columns of u: principal directions; diag(s): eigenvalues
% --------------------------------------------------------

hold on
plot([0 u(1,1)], [0 u(2,1)]);  % draw the first principal direction
plot([0 u(1,2)], [0 u(2,2)]);  % draw the second principal direction
scatter(x(1, :), x(2, :));
hold off

%%================================================================
%% Step 1b: Compute xRot, the projection on to the eigenbasis
%  Now, compute xRot by projecting the data on to the basis defined
%  by U. Visualize the points by performing a scatter plot.

% -------------------- YOUR CODE HERE --------------------
xRot = u' * x;
% --------------------------------------------------------

figure(2);
scatter(xRot(1, :), xRot(2, :));
title('xRot');

%%================================================================
%% Step 2: Reduce the number of dimensions from 2 to 1.
%  Compute xRot again (this time projecting to 1 dimension).
%  Then, compute xHat by projecting the xRot back onto the original axes
%  to see the effect of dimension reduction.

% -------------------- YOUR CODE HERE --------------------
k = 1; % Use k = 1 and project the data onto the first eigenbasis
xHat = u(:, 1:k) * (u(:, 1:k)' * x);  % for k = 1, same as u*([u(:,1),zeros(n,1)]'*x)
% --------------------------------------------------------

figure(3);
scatter(xHat(1, :), xHat(2, :));
title('xHat');

%%================================================================
%% Step 3: PCA Whitening
%  Compute xPCAWhite and plot the results.

epsilon = 1e-5;
% -------------------- YOUR CODE HERE --------------------
xPCAWhite = diag(1 ./ sqrt(diag(s) + epsilon)) * u' * x;
% --------------------------------------------------------

figure(4);
scatter(xPCAWhite(1, :), xPCAWhite(2, :));
title('xPCAWhite');

%%================================================================
%% Step 4: ZCA Whitening
%  Compute xZCAWhite and plot the results.

% -------------------- YOUR CODE HERE --------------------
xZCAWhite = u * diag(1 ./ sqrt(diag(s) + epsilon)) * u' * x;
% --------------------------------------------------------

figure(5);
scatter(xZCAWhite(1, :), xZCAWhite(2, :));
title('xZCAWhite');

%% Congratulations! When you have reached this point, you are done!
%  You can now move onto the next PCA exercise. :)
```
