11-Threads


Please indicate the source: http://blog.csdn.net/gaoxiangnumber1

Welcome to my github: https://github.com/gaoxiangnumber1

11.1 Introduction

- All threads within a single process have access to the same process components, such as file descriptors and memory.

11.2 Thread Concepts

- A typical UNIX process can be thought of as having a single thread of control: each process is doing only one thing at a time. With multiple threads of control, we can design our programs to do more than one thing at a time within a single process, with each thread handling a separate task. Benefits:

- To deal with asynchronous events, we can assign a separate thread to handle each event type. Each thread can then handle its event using a synchronous programming model.

- Threads automatically have access to the same memory address space and file descriptors. Processes have to use complex mechanisms provided by the operating system to share memory and file descriptors (Chapters 15 and 17).

- Problems can be partitioned so that overall program throughput can be improved.

A single-threaded process with multiple tasks to perform implicitly serializes those tasks, because there is only one thread of control. With multiple threads of control, the processing of independent tasks can be interleaved by assigning a separate thread per task. Two tasks can be interleaved only if they don't depend on the processing performed by each other.

- Interactive programs can improve response time by using multiple threads to separate the portions of the program that deal with user input and output from the other parts of the program.

- The benefits of a multithreaded programming model can be realized even if the program is running on a uniprocessor. A program can be simplified using threads regardless of the number of processors, because the number of processors doesn't affect the program structure. As long as your program has to block when serializing tasks, you can see improvements in response time and throughput when running on a uniprocessor, because some threads might be able to run while others are blocked.

- A thread consists of the information necessary to represent an execution context within a process. This includes a thread ID that identifies the thread within a process, a set of register values, a stack, a scheduling priority and policy, a signal mask, an errno variable (Section 1.7), and thread-specific data (Section 12.6).

Everything within a process is sharable among the threads in a process, including the text of the executable program, the program’s global and heap memory, the stacks, and the file descriptors.

11.3 Thread Identification

- Every thread has a thread ID that has significance only within the context of the process to which it belongs.

- A thread ID is represented by the pthread_t data type. Implementations are allowed to use a structure to represent the pthread_t data type, so portable programs can't treat thread IDs as integers.

```c
#include <pthread.h>

int pthread_equal(pthread_t tid1, pthread_t tid2);
/* Returns: nonzero if equal, 0 otherwise */
```

- pthread_equal is used to compare two thread IDs. Linux uses an unsigned long integer for the pthread_t data type.

```c
#include <pthread.h>

pthread_t pthread_self(void);
/* Returns: the thread ID of the calling thread */
```

- A thread obtains its own thread ID by calling pthread_self.

11.4 Thread Creation

- With pthreads, when a program runs, it starts out as a single process with a single thread of control.

```c
#include <pthread.h>

int pthread_create(pthread_t *restrict tidp,
                   const pthread_attr_t *restrict attr,
                   void *(*start_rtn)(void *),
                   void *restrict arg);
/* Returns: 0 if OK, error number on failure */
```

- The memory location pointed to by tidp is set to the thread ID of the newly created thread when pthread_create returns successfully.

- attr is used to customize various thread attributes (Section 12.3). For now, we set it to NULL to create a thread with the default attributes.

- The newly created thread starts running at the address of the start_rtn function. This function takes a single argument, arg, which is a typeless pointer. If you need to pass more than one argument to the start_rtn function, you need to store them in a structure and pass the address of the structure in arg.

- When a thread is created, there is no guarantee which will run first: the newly created thread or the calling thread. The newly created thread has access to the process address space and inherits the calling thread’s floating-point environment and signal mask; the set of pending signals for the thread is cleared.

- When they fail, the pthread functions return an error code instead of setting errno. The per-thread copy of errno is provided only for compatibility with existing functions that use it.

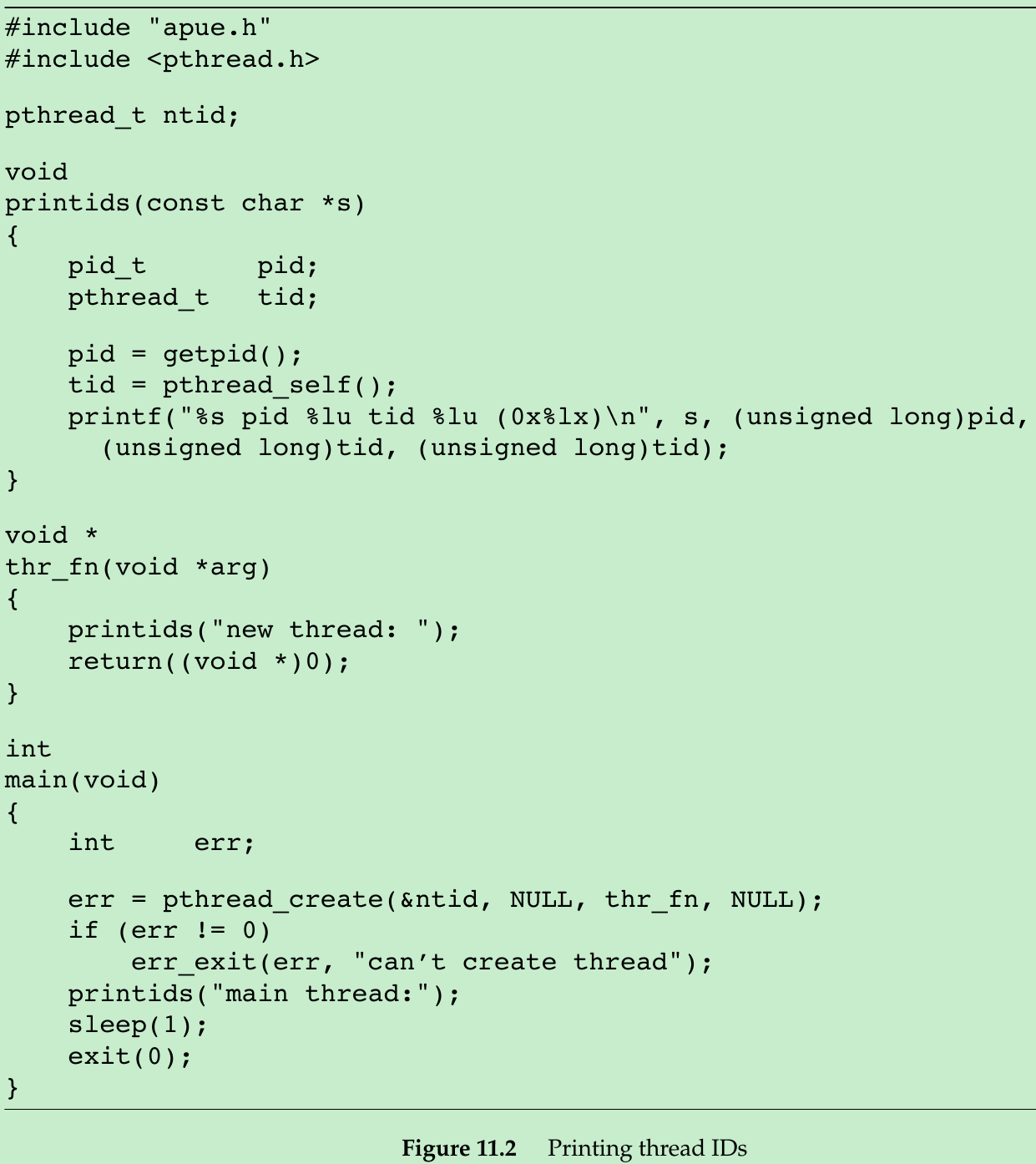

- The program in Figure 11.2 creates one thread and prints the process and thread IDs of the new thread and the initial thread.

```c
#include <stdio.h>
#include <stdlib.h>
#include <sys/types.h>
#include <unistd.h>
#include <pthread.h>

void Exit(const char *string)
{
    printf("%s\n", string);
    exit(1);
}

void printids(const char *s)
{
    pid_t pid = getpid();
    pthread_t tid = pthread_self();

    /* Casting pthread_t to unsigned long works on Linux but is not portable */
    printf("%s\tpid %lu\ttid %lu(0x%lx)\n", s, (unsigned long)pid,
           (unsigned long)tid, (unsigned long)tid);
}

void *thr_fn(void *arg)
{
    printids("new thread: ");
    return (void *)0;
}

int main()
{
    pthread_t ntid;

    if (pthread_create(&ntid, NULL, thr_fn, NULL) != 0) {
        Exit("Can't create thread");
    }
    printids("main thread: ");
    sleep(1);   /* give the new thread a chance to run before the process exits */
    exit(0);
}
```

- We need sleep in the main thread. If it doesn't sleep, the main thread might exit, thereby terminating the entire process before the new thread gets a chance to run. This behavior is dependent on the operating system's threads implementation and scheduling algorithms.

- The new thread obtains its thread ID by calling pthread_self instead of reading it out of shared memory or receiving it as an argument to its thread-start routine. The main thread stores this ID in ntid, but the new thread can’t safely use it. If the new thread runs before the main thread returns from calling pthread_create, then the new thread will see the uninitialized contents of ntid instead of the thread ID.

```
$ ./a.out
main thread: pid 11134 tid 139753771005760(0x7f1af5e07740)
new thread: pid 11134 tid 139753762731776(0x7f1af5623700)
```

11.5 Thread Termination

- If any thread within a process calls exit, _Exit, or _exit, then the entire process terminates. Similarly, when the default action of a signal is to terminate the process, a signal sent to a thread will terminate the entire process (Section 12.8).

- A single thread can exit in three ways, stopping its own flow of control without terminating the entire process:

- Return from the start routine. The return value is the thread’s exit code.

- Be canceled by another thread in the same process.

- Call pthread_exit.

```c
#include <pthread.h>

void pthread_exit(void *rval_ptr);
```

- rval_ptr is a typeless pointer. This pointer is available to other threads in the process by calling the pthread_join function.

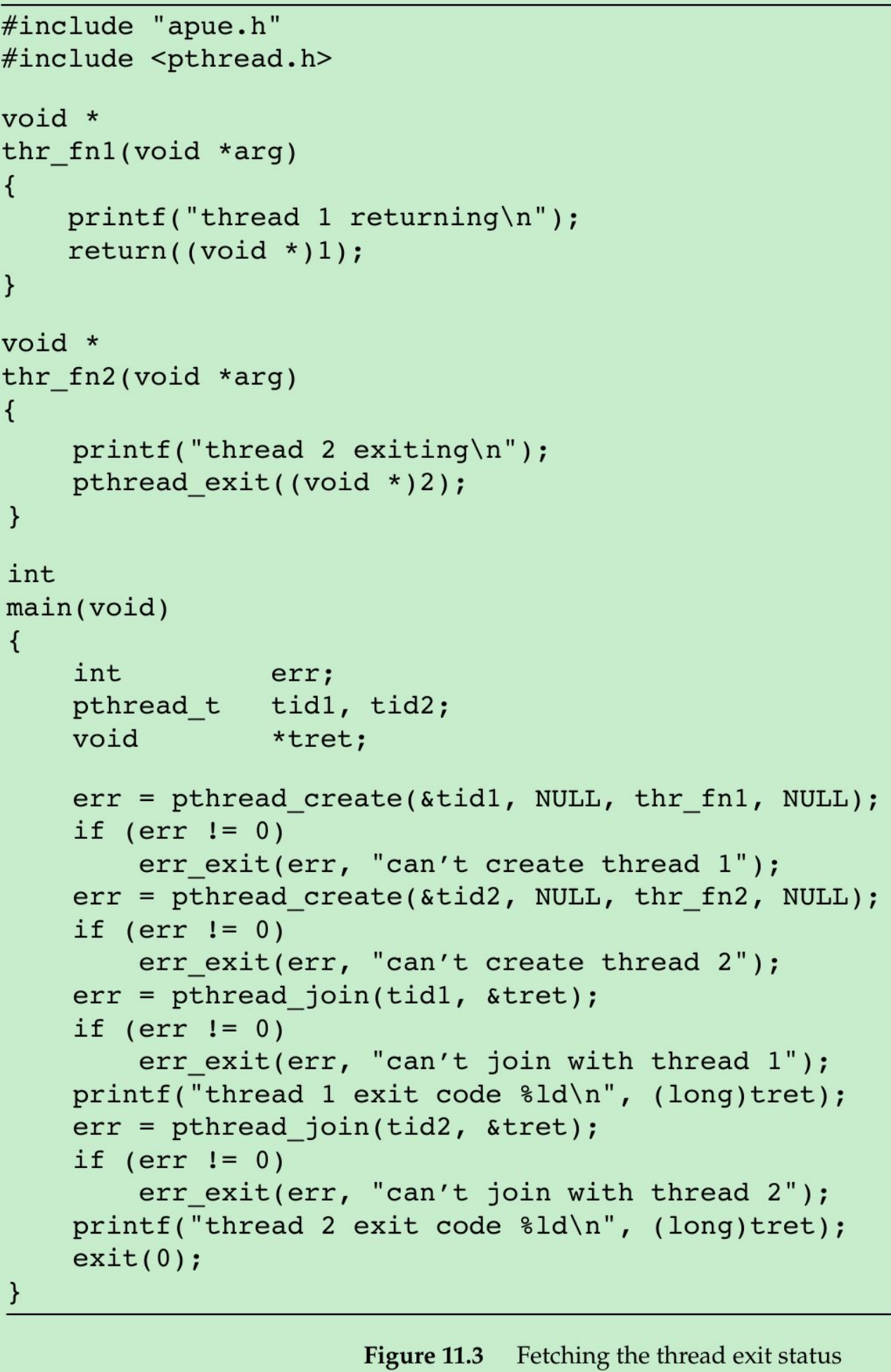

```c
#include <pthread.h>

int pthread_join(pthread_t thread, void **rval_ptr);
/* Returns: 0 if OK, error number on failure */
```

- The calling thread will block until the specified thread calls pthread_exit, returns from its start routine, or is canceled. If the thread returned from its start routine or called pthread_exit, the location pointed to by rval_ptr will contain the return code (or the value passed to pthread_exit). If the thread was canceled, the memory location specified by rval_ptr is set to PTHREAD_CANCELED.

- By calling pthread_join, we automatically place the thread with which we're joining in the detached state so that its resources can be recovered. If the thread was already in the detached state, pthread_join can fail, returning EINVAL.

- If we’re not interested in a thread’s return value, we can set rval_ptr to NULL. In this case, calling pthread_join allows us to wait for the specified thread, but does not retrieve the thread’s termination status.

```c
#include <stdio.h>
#include <stdlib.h>
#include <pthread.h>

void Exit(char *string)
{
    printf("%s\n", string);
    exit(1);
}

void *thr_fn1(void *arg)
{
    printf("Thread 1 return\n");
    return (void *)1;   /* exit code 1 */
}

void *thr_fn2(void *arg)
{
    printf("Thread 2 return\n");
    return (void *)2;   /* exit code 2 */
}

int main()
{
    pthread_t tid1, tid2;
    void *tret;

    if (pthread_create(&tid1, NULL, thr_fn1, NULL) != 0) {
        Exit("Can't create thread 1");
    }
    if (pthread_create(&tid2, NULL, thr_fn2, NULL) != 0) {
        Exit("Can't create thread 2");
    }
    if (pthread_join(tid1, &tret) != 0) {
        Exit("Can't join with thread 1");
    }
    printf("Thread 1 exit code = %ld\n", (long)tret);
    if (pthread_join(tid2, &tret) != 0) {
        Exit("Can't join with thread 2");
    }
    printf("Thread 2 exit code = %ld\n", (long)tret);
    exit(0);
}
```

```
$ ./a.out
Thread 1 return
Thread 2 return
Thread 1 exit code = 1
Thread 2 exit code = 2
```

- When a thread exits by calling pthread_exit or by returning from the start routine, the exit status can be obtained by another thread by calling pthread_join.

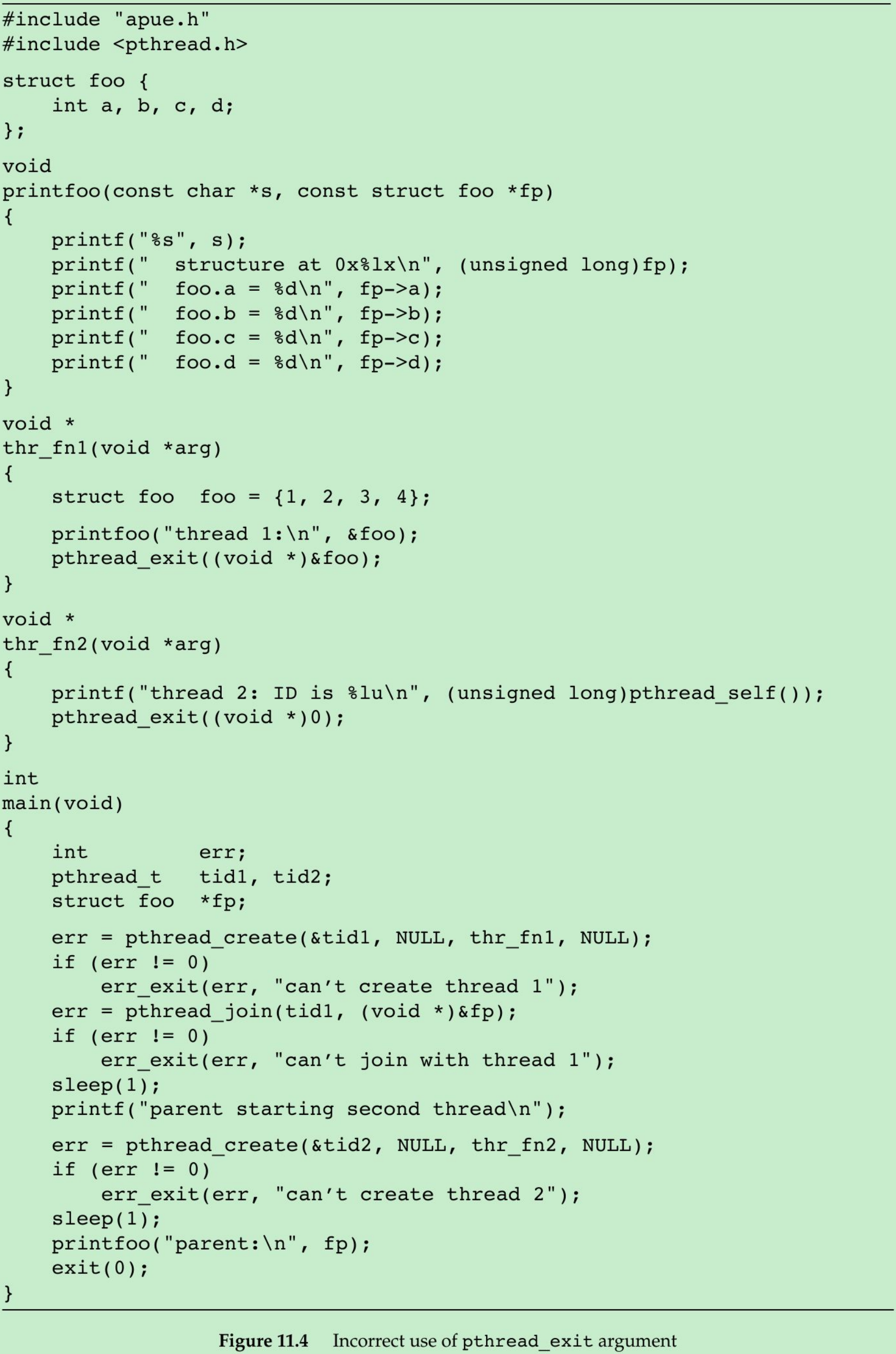

- The typeless pointer passed to pthread_create and pthread_exit can be used to pass the address of a structure containing more complex information. We must make sure that the memory used for the structure is still valid when the caller has completed. If the structure was allocated on the caller's stack, the memory contents might have changed by the time the structure is used. For example, if a thread allocates a structure on its stack and passes a pointer to this structure to pthread_exit, then the stack might be destroyed and its memory reused for something else by the time the caller of pthread_join tries to use it.

```c
#include <stdio.h>
#include <stdlib.h>
#include <unistd.h>
#include <pthread.h>

void Exit(char *string)
{
    printf("%s\n", string);
    exit(1);
}

struct foo {
    int a, b, c, d;
};

void printfoo(const char *s, const struct foo *fp)
{
    printf("%s", s);
    printf("\tstructure at 0x%lx\n", (unsigned long)fp);
    printf("\tfoo.a = %d\n", fp->a);
    printf("\tfoo.b = %d\n", fp->b);
    printf("\tfoo.c = %d\n", fp->c);
    printf("\tfoo.d = %d\n", fp->d);
}

void *thr_fn1(void *arg)
{
    struct foo foo = {1, 2, 3, 4};   /* automatic variable on thread 1's stack */

    printfoo("Thread 1:\n", &foo);
    pthread_exit((void *)&foo);      /* intentional bug: the stack dies with the thread */
}

void *thr_fn2(void *arg)
{
    printf("Thread 2: ID is %lu\n", (unsigned long)pthread_self());
    pthread_exit((void *)0);
}

int main()
{
    pthread_t tid1, tid2;
    struct foo *fp;

    if (pthread_create(&tid1, NULL, thr_fn1, NULL) != 0) {
        Exit("Can't create thread 1");
    }
    if (pthread_join(tid1, (void **)&fp) != 0) {
        Exit("Can't join with thread 1");
    }
    sleep(1);
    printf("Parent start second thread\n");
    if (pthread_create(&tid2, NULL, thr_fn2, NULL) != 0) {
        Exit("Can't create thread 2");
    }
    sleep(1);
    printfoo("Parent:\n", fp);   /* reads thread 1's dead stack */
    exit(0);
}
```

- The program in Figure 11.4 shows the problem with using an automatic variable (allocated on the stack) as the argument to pthread_exit. Run on Linux:

```
$ ./a.out
Thread 1:
    structure at 0x7f34b25aaf00
    foo.a = 1
    foo.b = 2
    foo.c = 3
    foo.d = 4
Parent start second thread
Thread 2: ID is 139864307316480
Parent:
    structure at 0x7f34b25aaf00
    foo.a = 1
    foo.b = 0
    foo.c = 1
    foo.d = 0
```

- The contents of the structure (allocated on the stack of thread tid1) have changed by the time the main thread can access the structure. The stack of the second thread (tid2) has overwritten the first thread's stack. To solve this problem, we can either use a global structure or allocate the structure using malloc.

```c
#include <pthread.h>

int pthread_cancel(pthread_t tid);
/* Returns: 0 if OK, error number on failure */
```

- One thread can call pthread_cancel to request that another thread in the same process be canceled. By default, pthread_cancel causes the thread specified by tid to behave as if it had called pthread_exit(PTHREAD_CANCELED). However, a thread can elect to ignore or otherwise control how it is canceled (Section 12.7). pthread_cancel doesn't wait for the thread to terminate; it merely makes the request.

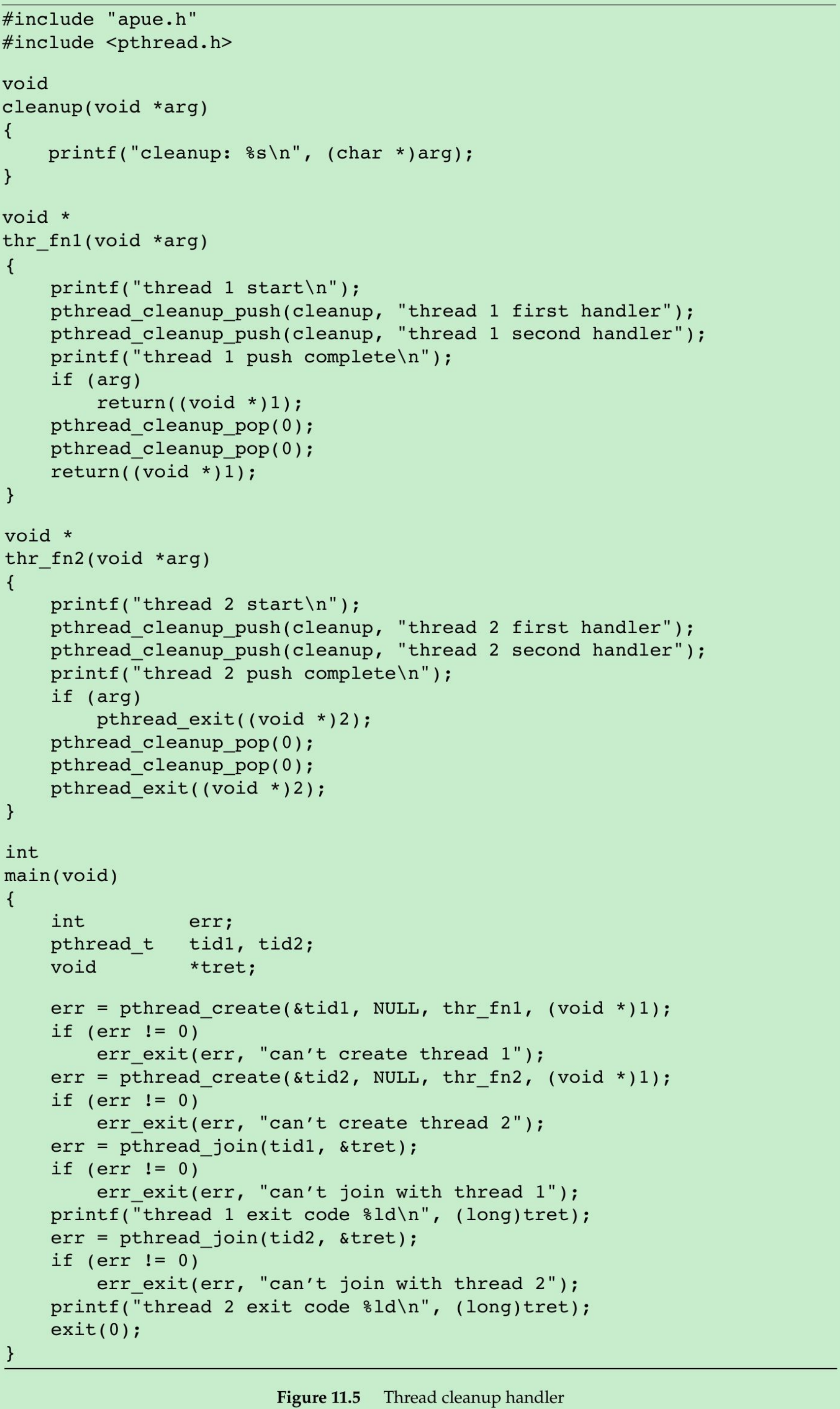

- A thread can arrange for thread cleanup handlers to be called when it exits. More than one cleanup handler can be established for a thread. The handlers are recorded in a stack and they are executed in the reverse order from that with which they were registered.

```c
#include <pthread.h>

void pthread_cleanup_push(void (*rtn)(void *), void *arg);
void pthread_cleanup_pop(int execute);
```

- pthread_cleanup_push schedules the cleanup function, rtn, to be called with the single argument, arg, when the thread performs one of the following actions:

- Call pthread_exit.

- Respond to a cancellation request.

- Call pthread_cleanup_pop with a nonzero execute argument.

- If the execute argument is set to zero, the cleanup function is not called. In either case, pthread_cleanup_pop removes the cleanup handler established by the last call to pthread_cleanup_push.

- Because these two functions can be implemented as macros, they must be used in matched pairs within the same scope in a thread. The macro definition of pthread_cleanup_push can include a '{' character, in which case the matching '}' character is in the pthread_cleanup_pop definition.

- Although we never intend to pass zero as an argument to the thread start-up routines, we still need to match calls to pthread_cleanup_pop with the calls to pthread_cleanup_push; otherwise, the program might not compile.

```
$ ./a.out
Thread 1 start
Thread 1 push complete
Thread 2 start
Thread 2 push complete
Thread 1 exit code 1
cleanup: Thread 2 second handler
cleanup: Thread 2 first handler
Thread 2 exit code 2
```

- Both threads start properly and exit, but only the second thread's cleanup handlers are called. If the thread terminates by returning from its start routine, its cleanup handlers are not called.

- In the Single UNIX Specification, returning while in between a matched pair of calls to pthread_cleanup_push and pthread_cleanup_pop results in undefined behavior. The only portable way to leave a thread in between these two calls is to call pthread_exit.

- By default, a thread’s termination status is retained until we call pthread_join for that thread. A thread’s underlying storage can be reclaimed immediately on termination if the thread has been detached. After a thread is detached, we can’t use pthread_join to wait for its termination status, because calling pthread_join for a detached thread results in undefined behavior. We can detach a thread by calling pthread_detach.

```c
#include <pthread.h>

int pthread_detach(pthread_t tid);
/* Returns: 0 if OK, error number on failure */
```

11.6 Thread Synchronization

- When one thread modifies a variable, other threads can see inconsistencies when reading the value of that variable. On processor architectures in which the modification takes more than one memory cycle, this can happen when the memory read is interleaved between the memory write cycles.

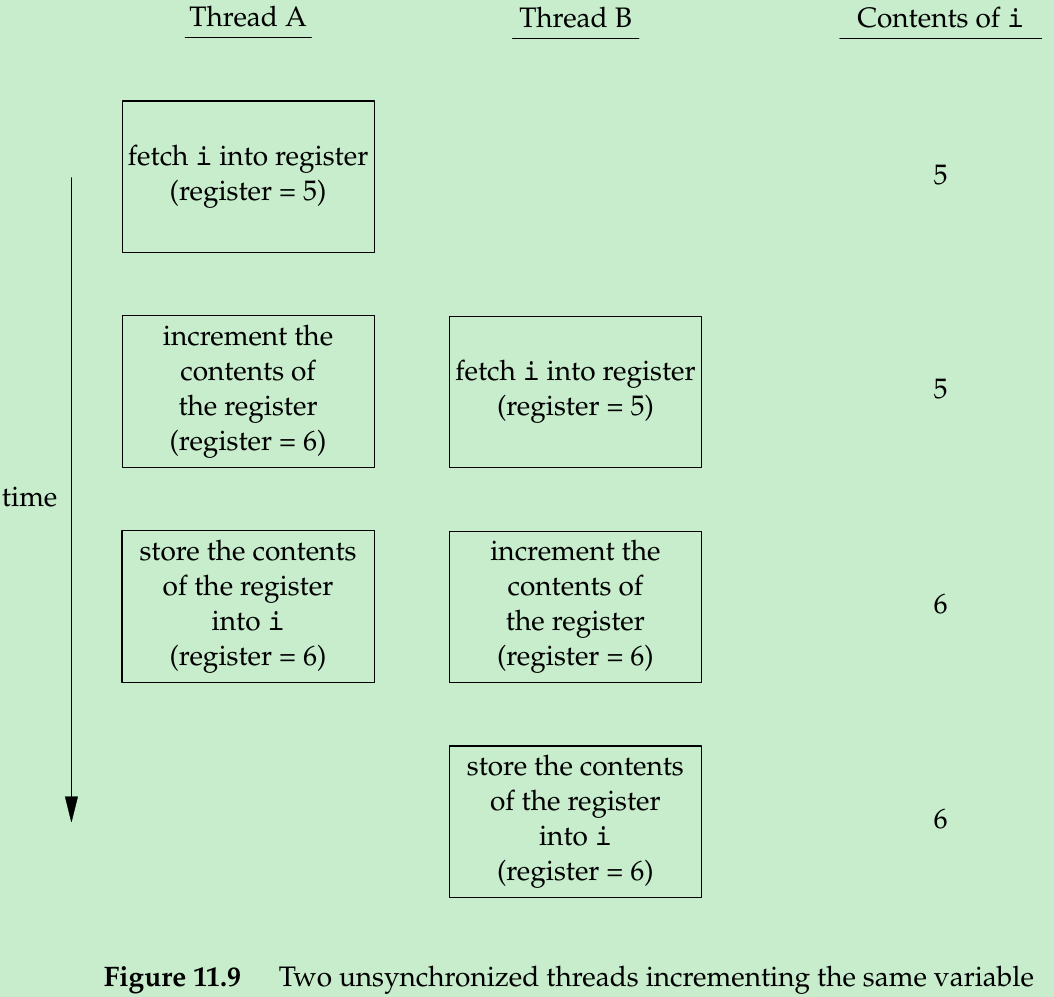

- Consider incrementing a variable (Figure 11.9). The increment operation is broken down into three steps:

- Read the memory location into a register.

- Increment the value in the register.

- Write the new value back to the memory location.

- If two threads try to increment the same variable at almost the same time without synchronizing with each other, the results can be inconsistent. You end up with a value that is either one or two greater than before, depending on the value observed when the second thread starts its operation.

- In modern computer systems, memory accesses take multiple bus cycles, and multiprocessors interleave bus cycles among multiple processors, so we aren’t guaranteed that our data is sequentially consistent.

11.6.1 Mutexes

- A mutex is a lock that we set (lock) before accessing a shared resource and release (unlock) when we're done. While it is set, any other thread that tries to set it will block until we release it. If more than one thread is blocked when we unlock the mutex, then all threads blocked on the lock will be made runnable, and the first one to run will be able to set the lock. The others will see that the mutex is still locked and go back to waiting for it to become available again. In this way, only one thread will proceed at a time.

- This mutual-exclusion mechanism works only if we design our threads to follow the same data-access rules. The operating system doesn’t serialize access to data for us.

- A mutex variable is represented by the pthread_mutex_t data type. Before we can use a mutex variable, we must initialize it by either setting it to the constant PTHREAD_MUTEX_INITIALIZER (for statically allocated mutexes only) or calling pthread_mutex_init. If we allocate the mutex dynamically (by calling malloc, for example), then we need to call pthread_mutex_destroy before freeing the memory.

```c
#include <pthread.h>

int pthread_mutex_init(pthread_mutex_t *restrict mutex,
                       const pthread_mutexattr_t *restrict attr);
int pthread_mutex_destroy(pthread_mutex_t *mutex);
/* Both return: 0 if OK, error number on failure */
```

- To initialize a mutex with the default attributes, we set attr to NULL.

- To lock a mutex, we call pthread_mutex_lock. If the mutex is already locked, the calling thread will block until the mutex is unlocked. To unlock a mutex, we call pthread_mutex_unlock.

```c
#include <pthread.h>

int pthread_mutex_lock(pthread_mutex_t *mutex);
int pthread_mutex_trylock(pthread_mutex_t *mutex);
int pthread_mutex_unlock(pthread_mutex_t *mutex);
/* All return: 0 if OK, error number on failure */
```

- If a thread can't afford to block, it can use pthread_mutex_trylock to lock the mutex conditionally. If the mutex is unlocked at the time pthread_mutex_trylock is called, then pthread_mutex_trylock will lock the mutex without blocking and return 0. Otherwise, pthread_mutex_trylock will fail, returning EBUSY without locking the mutex.

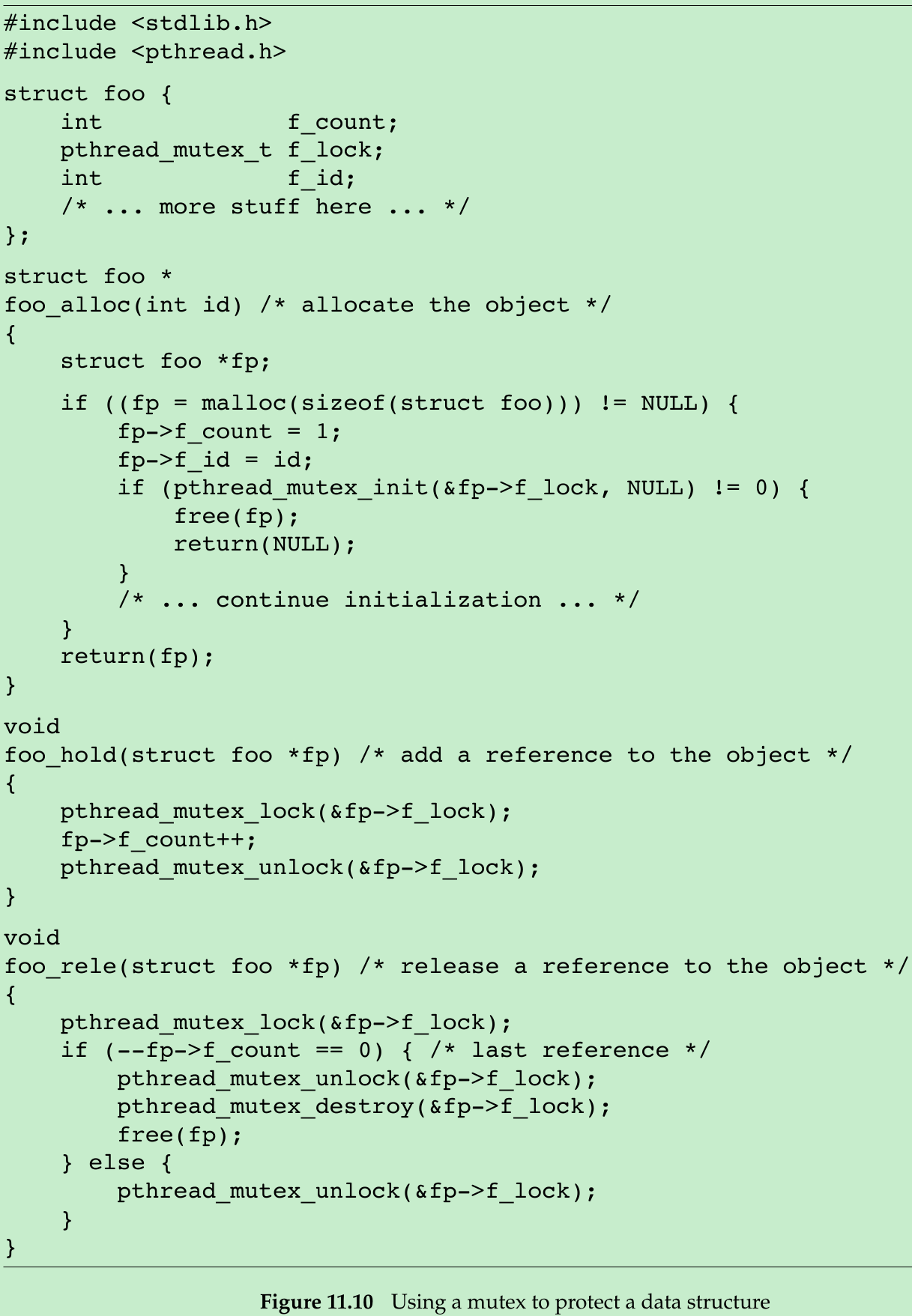

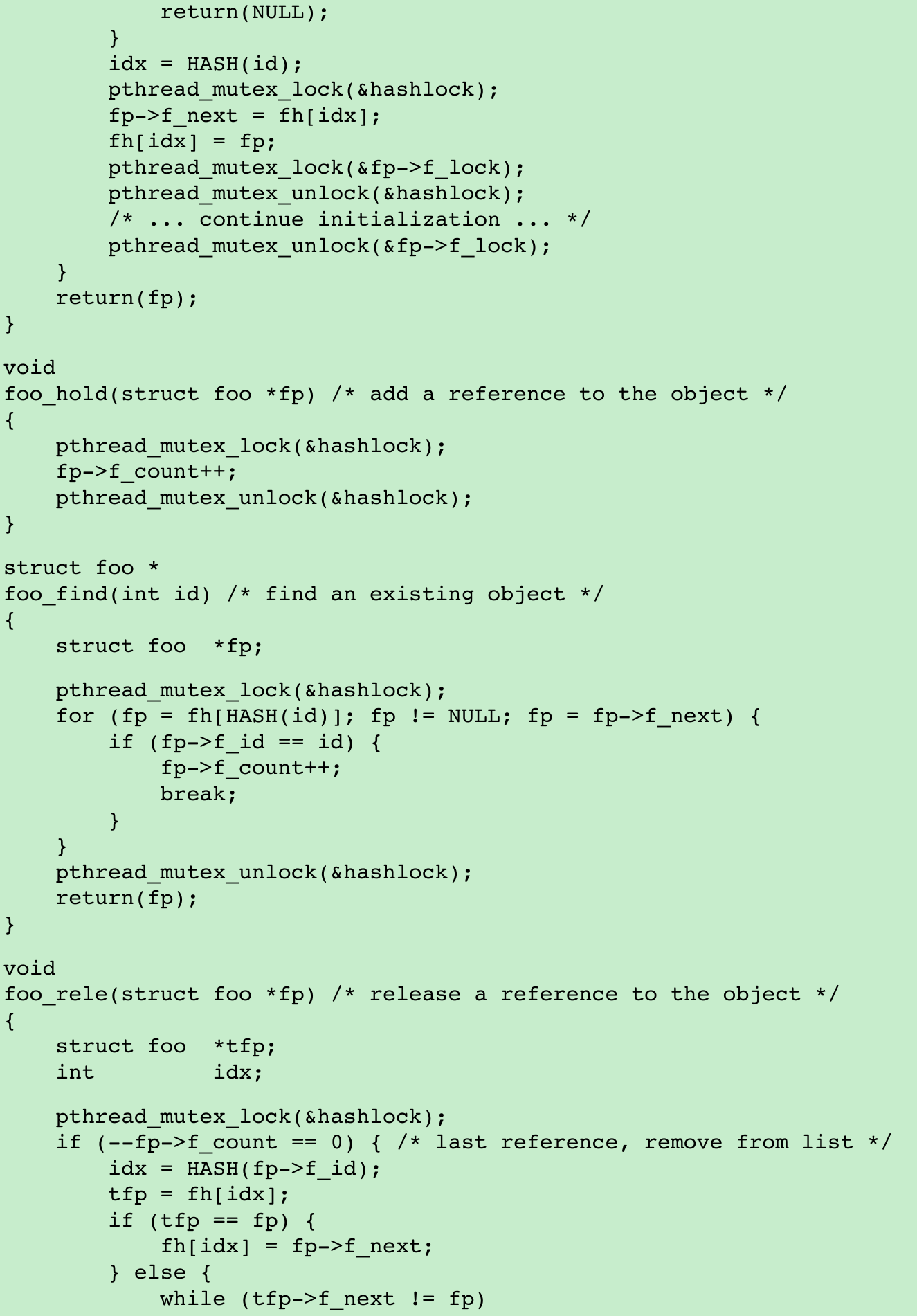

```c
#include <stdio.h>
#include <stdlib.h>
#include <pthread.h>

void Exit(char *string)
{
    printf("%s\n", string);
    exit(1);
}

struct foo {
    int f_count;             /* reference count, protected by f_lock */
    pthread_mutex_t f_lock;
    int f_id;
};

void printfoo(const char *s, const struct foo *fp)
{
    printf("%s", s);
    printf("\tstructure at 0x%lx\n", (unsigned long)fp);
    printf("\tfoo.f_count = %d\n", fp->f_count);
    printf("\tfoo.f_id = %d\n", fp->f_id);
}

struct foo *foo_alloc(int id)   /* allocate the object */
{
    struct foo *fp;

    if ((fp = malloc(sizeof(struct foo))) != NULL) {
        fp->f_count = 1;
        fp->f_id = id;
        if (pthread_mutex_init(&fp->f_lock, NULL) != 0) {
            free(fp);
            return NULL;
        }
    }
    return fp;
}

void foo_hold(struct foo *fp)   /* add a reference to the object */
{
    pthread_mutex_lock(&fp->f_lock);
    fp->f_count++;
    pthread_mutex_unlock(&fp->f_lock);
}

void foo_rele(struct foo *fp)   /* release a reference to the object */
{
    pthread_mutex_lock(&fp->f_lock);
    if (--fp->f_count == 0) {   /* last reference */
        pthread_mutex_unlock(&fp->f_lock);
        pthread_mutex_destroy(&fp->f_lock);
        free(fp);
    } else {
        pthread_mutex_unlock(&fp->f_lock);
    }
}

int main()
{
    struct foo *obj1 = foo_alloc(1);

    if (obj1 == NULL) {
        Exit("foo_alloc failed");
    }
    foo_hold(obj1);
    foo_hold(obj1);
    foo_hold(obj1);
    printfoo("obj1", obj1);
    exit(0);
}
```

- Figure 11.10 illustrates a mutex used to protect a data structure. When more than one thread needs to access a dynamically allocated object, we can embed a reference count in the object to ensure that we don't free its memory before all threads are done using it.

- No locking is necessary when we initialize the reference count to 1 in foo_alloc, because the allocating thread is the only reference to it so far. If we were to place the structure on a list at this point, it could be found by other threads, so we would need to lock it first.

- Before using the object, threads are expected to add a reference to it by calling foo_hold. When they are done, they must call foo_rele to release the reference. When the last reference is released, the object’s memory is freed.

- In this example, we ignore how threads find an object before calling foo_hold. Even though the reference count is zero, it would be a mistake for foo_rele to free the object's memory if another thread is blocked on the mutex in a call to foo_hold. We can avoid this problem by ensuring that the object can't be found before freeing its memory (see the next example).

11.6.2 Deadlock Avoidance

- Deadlocks can be avoided by controlling the order in which mutexes are locked. Sometimes, however, an application's architecture makes it difficult to apply a lock ordering. If enough locks and data structures are involved that the functions you have available can't be molded to fit a simple hierarchy, then you'll have to try some other approach.

- In this case, you might be able to release your locks and try again at a later time. You can use the pthread_mutex_trylock interface to avoid deadlocking in this case. If you are already holding locks and pthread_mutex_trylock is successful, then you can proceed. If it can’t acquire the lock, you can release the locks you already hold, clean up, and try again later.

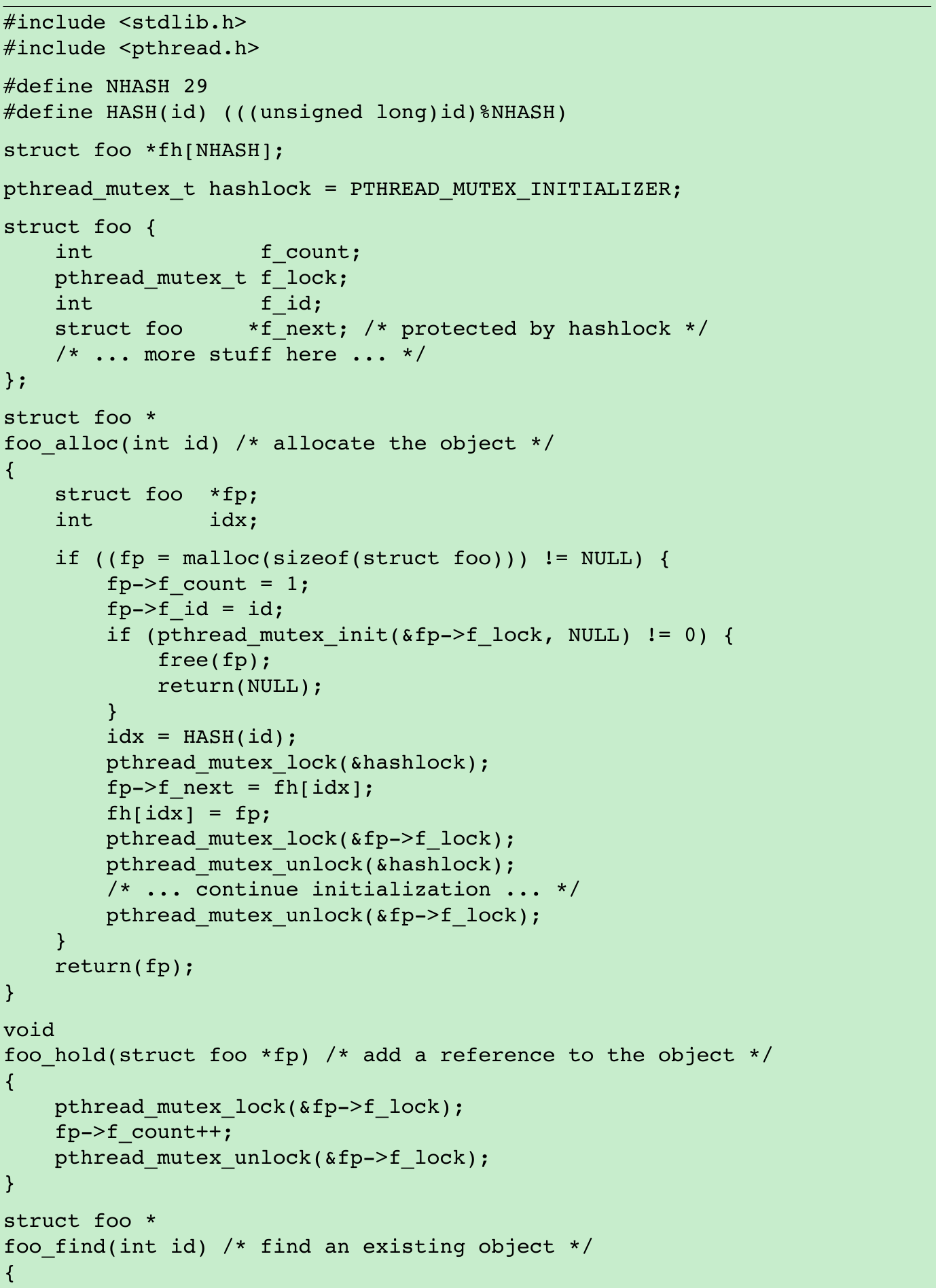

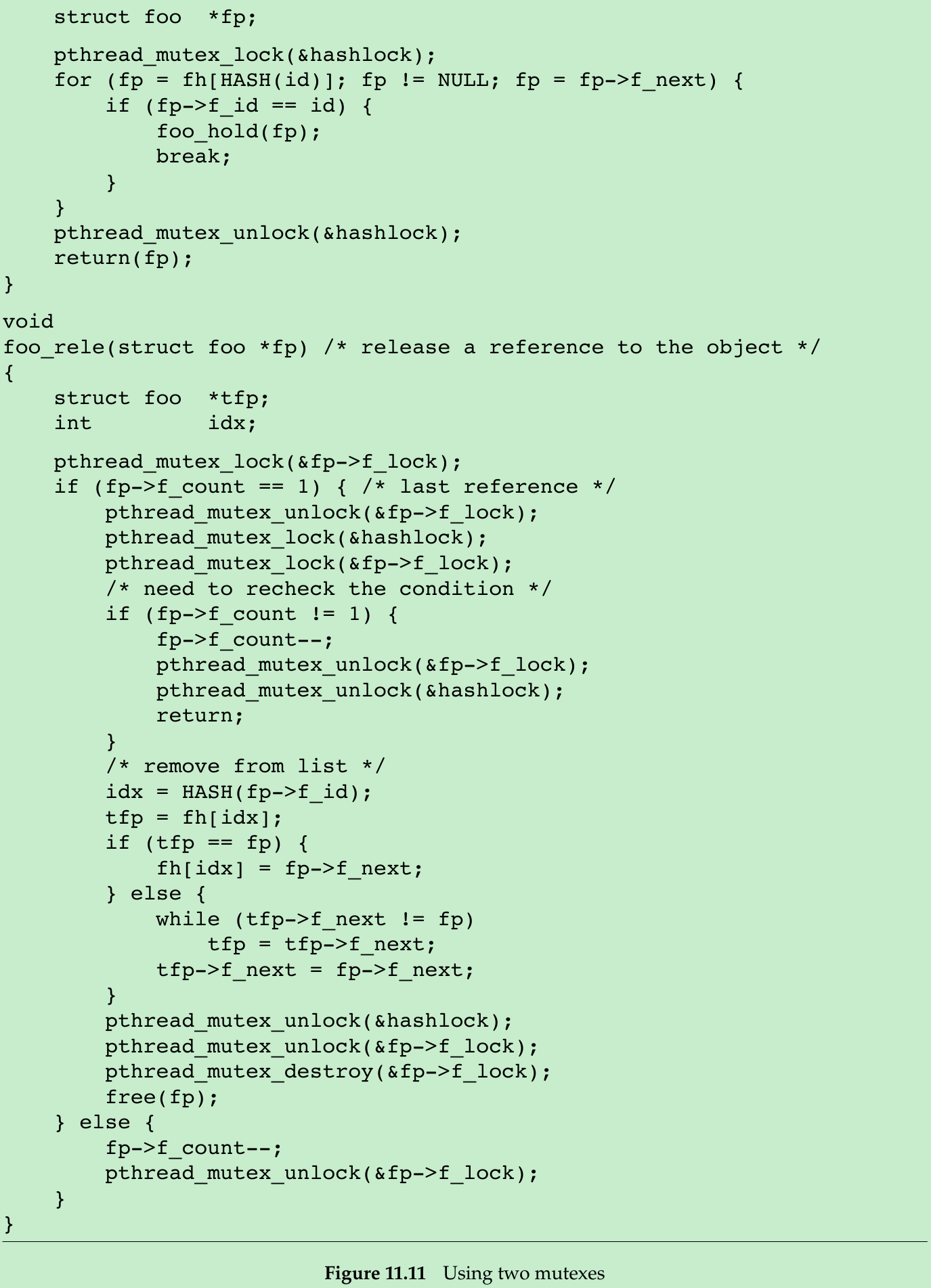

```c
#include <stdio.h>
#include <stdlib.h>
#include <pthread.h>

#define NHASH 10
#define HASH(id) (((unsigned long)(id)) % NHASH)

struct foo *fh[NHASH];
pthread_mutex_t hashlock = PTHREAD_MUTEX_INITIALIZER;

struct foo {
    int f_count;            /* protected by f_lock */
    pthread_mutex_t f_lock;
    int f_id;
    struct foo *f_next;     /* protected by hashlock */
};

void printfoo(const char *s, const struct foo *fp)
{
    printf("%s", s);
    printf("\tstructure at 0x%lx\n", (unsigned long)fp);
    printf("\tfoo.f_count = %d\n", fp->f_count);
    printf("\tfoo.f_id = %d\n", fp->f_id);
}

struct foo *foo_alloc(int id)   /* allocate the object */
{
    struct foo *fp;
    int idx;

    if ((fp = malloc(sizeof(struct foo))) != NULL) {
        fp->f_count = 1;
        fp->f_id = id;
        if (pthread_mutex_init(&fp->f_lock, NULL) != 0) {
            free(fp);
            return NULL;
        }
        idx = HASH(id);
        pthread_mutex_lock(&hashlock);
        fp->f_next = fh[idx];
        fh[idx] = fp;
        pthread_mutex_lock(&fp->f_lock);   /* block finders until initialized */
        pthread_mutex_unlock(&hashlock);
        pthread_mutex_unlock(&fp->f_lock);
    }
    return fp;
}

void foo_hold(struct foo *fp)   /* add a reference to the object */
{
    pthread_mutex_lock(&fp->f_lock);
    fp->f_count++;
    pthread_mutex_unlock(&fp->f_lock);
}

struct foo *foo_find(int id)    /* find an existing object */
{
    struct foo *fp;

    pthread_mutex_lock(&hashlock);   /* hashlock first, then f_lock */
    for (fp = fh[HASH(id)]; fp != NULL; fp = fp->f_next) {
        if (fp->f_id == id) {
            foo_hold(fp);
            break;
        }
    }
    pthread_mutex_unlock(&hashlock);
    return fp;
}

void foo_rele(struct foo *fp)   /* release a reference to the object */
{
    struct foo *tfp;
    int idx;

    pthread_mutex_lock(&fp->f_lock);
    if (fp->f_count == 1) {     /* last reference */
        pthread_mutex_unlock(&fp->f_lock);   /* honor the lock ordering */
        pthread_mutex_lock(&hashlock);
        pthread_mutex_lock(&fp->f_lock);
        if (fp->f_count != 1) {              /* recheck: someone took a reference */
            fp->f_count--;
            pthread_mutex_unlock(&fp->f_lock);
            pthread_mutex_unlock(&hashlock);
            return;
        }
        idx = HASH(fp->f_id);                /* remove from the hash list */
        tfp = fh[idx];
        if (tfp == fp) {
            fh[idx] = fp->f_next;
        } else {
            while (tfp->f_next != fp) {
                tfp = tfp->f_next;
            }
            tfp->f_next = fp->f_next;
        }
        pthread_mutex_unlock(&hashlock);
        pthread_mutex_unlock(&fp->f_lock);
        pthread_mutex_destroy(&fp->f_lock);
        free(fp);
    } else {
        fp->f_count--;
        pthread_mutex_unlock(&fp->f_lock);
    }
}

int main()
{
    struct foo *obj1 = foo_alloc(1);

    if (obj1 == NULL) {
        printf("foo_alloc failed\n");
        exit(1);
    }
    foo_hold(obj1);
    foo_hold(obj1);
    foo_hold(obj1);
    printfoo("obj1", obj1);
    exit(0);
}
```

- We avoid deadlocks by ensuring that when we need to acquire two mutexes at the same time, we always lock them in the same order. The hashlock mutex protects both the fh hash table and the f_next hash link field in the foo structure. The f_lock mutex in the foo structure protects access to the remainder of the foo structure's fields.

- foo_alloc locks the hash list lock, adds the new structure to a hash bucket, and before unlocking the hash list lock, locks the mutex in the new structure. Since the new structure is placed on a global list, other threads can find it, so we need to block them if they try to access the new structure, until we are done initializing it.

- foo_find locks the hash list lock and searches for the requested structure. If it is found, we increase the reference count and return a pointer to the structure. We honor the lock ordering by locking the hash list lock in foo_find before foo_hold locks the foo structure’s f_lock mutex.

- foo_rele: If this is the last reference, we need to unlock the structure mutex so that we can acquire the hash list lock, since we’ll need to remove the structure from the hash list. Then we reacquire the structure mutex. Because we could have blocked since the last time we held the structure mutex, we need to recheck the condition to see whether we still need to free the structure. If another thread found the structure and added a reference to it while we blocked to honor the lock ordering, we need to decrement the reference count, unlock everything, and return.

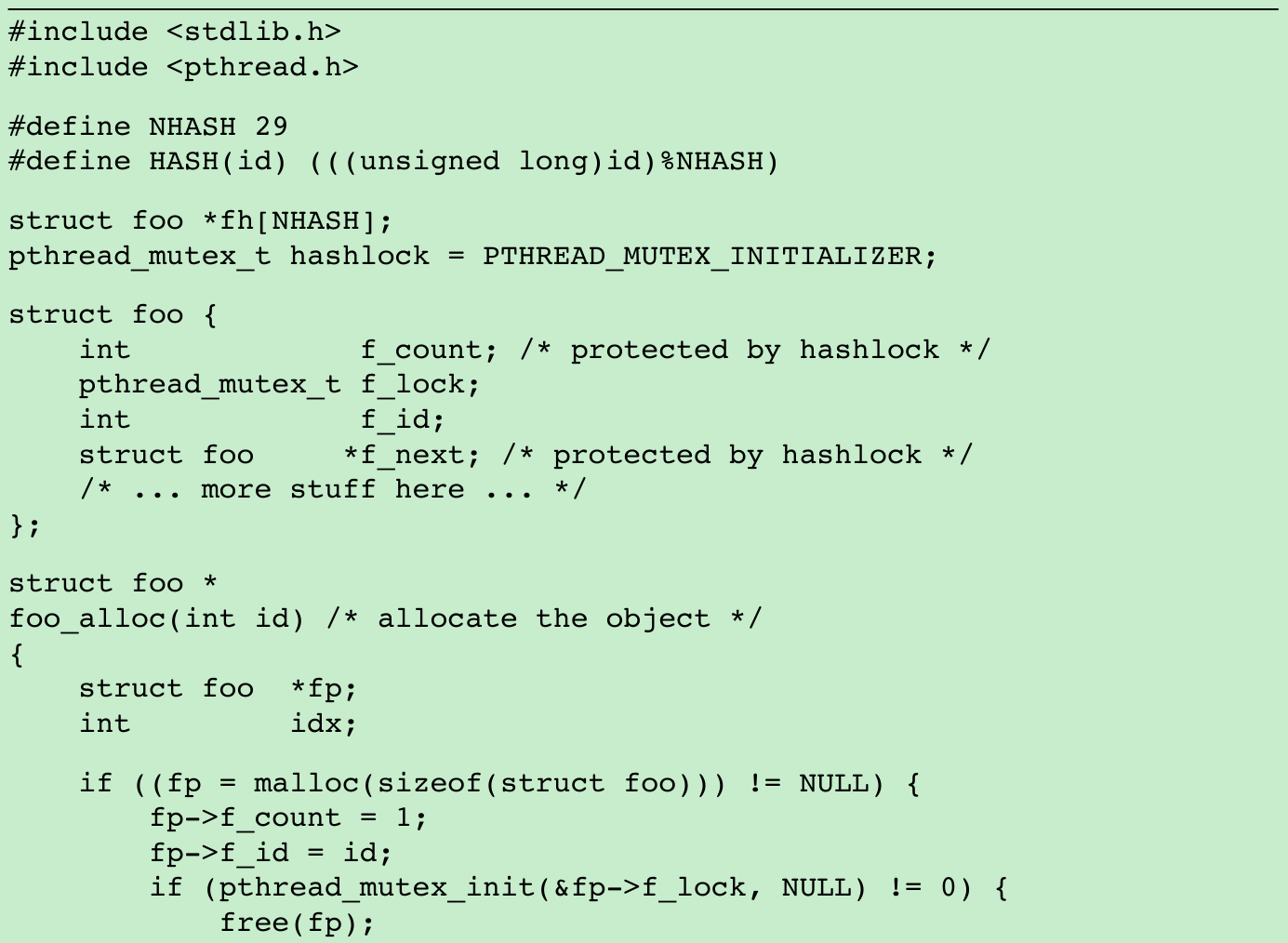

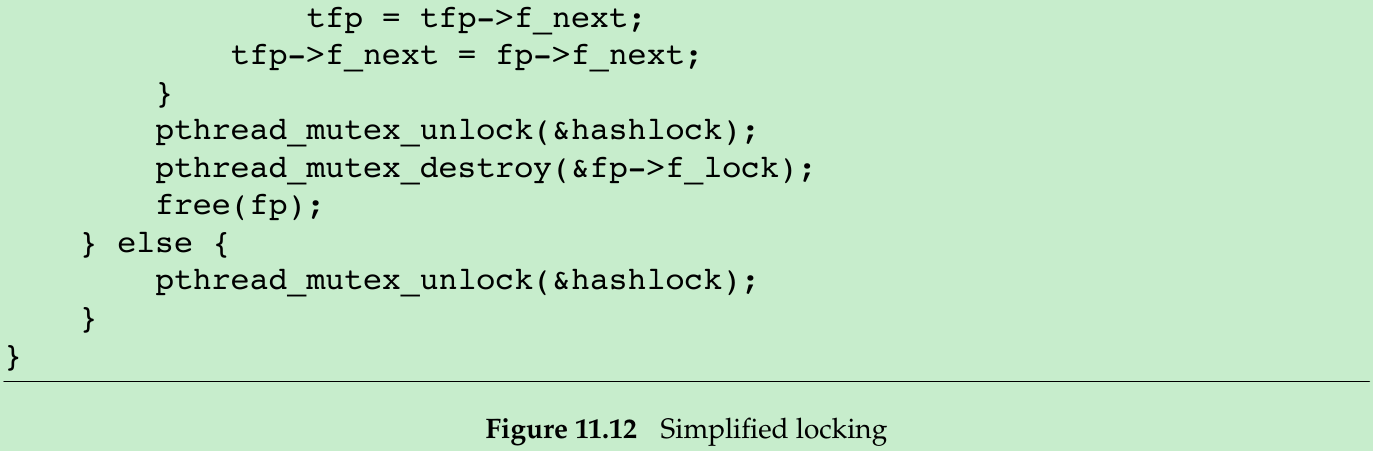

- We can simplify things by using the hash list lock to protect the structure reference count, too. The structure mutex can be used to protect everything else in the foo structure. Figure 11.12 reflects this change.

```c
#include <stdio.h>
#include <stdlib.h>
#include <pthread.h>

#define NHASH 10
#define HASH(id) (((unsigned long)(id)) % NHASH)

struct foo *fh[NHASH];
pthread_mutex_t hashlock = PTHREAD_MUTEX_INITIALIZER;

struct foo {
    int f_count;            /* protected by hashlock */
    pthread_mutex_t f_lock;
    int f_id;
    struct foo *f_next;     /* protected by hashlock */
};

void printfoo(const char *s, const struct foo *fp)
{
    printf("%s", s);
    printf("\tstructure at 0x%lx\n", (unsigned long)fp);
    printf("\tfoo.f_count = %d\n", fp->f_count);
    printf("\tfoo.f_id = %d\n", fp->f_id);
}

struct foo *foo_alloc(int id)   /* allocate the object */
{
    struct foo *fp;
    int idx;

    if ((fp = malloc(sizeof(struct foo))) != NULL) {
        fp->f_count = 1;
        fp->f_id = id;
        if (pthread_mutex_init(&fp->f_lock, NULL) != 0) {
            free(fp);
            return NULL;
        }
        idx = HASH(id);
        pthread_mutex_lock(&hashlock);
        fp->f_next = fh[idx];
        fh[idx] = fp;
        pthread_mutex_lock(&fp->f_lock);
        pthread_mutex_unlock(&hashlock);
        pthread_mutex_unlock(&fp->f_lock);
    }
    return fp;
}

void foo_hold(struct foo *fp)   /* add a reference to the object */
{
    pthread_mutex_lock(&hashlock);
    fp->f_count++;
    pthread_mutex_unlock(&hashlock);
}

struct foo *foo_find(int id)    /* find an existing object */
{
    struct foo *fp;

    pthread_mutex_lock(&hashlock);
    for (fp = fh[HASH(id)]; fp != NULL; fp = fp->f_next) {
        if (fp->f_id == id) {
            fp->f_count++;
            break;
        }
    }
    pthread_mutex_unlock(&hashlock);
    return fp;
}

void foo_rele(struct foo *fp)   /* release a reference to the object */
{
    struct foo *tfp;
    int idx;

    pthread_mutex_lock(&hashlock);
    if (--fp->f_count == 0) {   /* last reference: remove from the list */
        idx = HASH(fp->f_id);
        tfp = fh[idx];
        if (tfp == fp) {
            fh[idx] = fp->f_next;
        } else {
            while (tfp->f_next != fp) {
                tfp = tfp->f_next;
            }
            tfp->f_next = fp->f_next;
        }
        pthread_mutex_unlock(&hashlock);
        pthread_mutex_destroy(&fp->f_lock);
        free(fp);
    } else {
        pthread_mutex_unlock(&hashlock);
    }
}

int main()
{
    struct foo *obj1 = foo_alloc(1);

    if (obj1 == NULL) {
        printf("foo_alloc failed\n");
        exit(1);
    }
    foo_hold(obj1);
    foo_hold(obj1);
    foo_hold(obj1);
    printfoo("obj1", obj1);
    exit(0);
}
```

- The lock-ordering issues surrounding the hash list and the reference count go away when we use the same lock for both purposes.

- Multithreaded software design involves these types of trade-offs.

- If your locking granularity is too coarse, you end up with too many threads blocking behind the same locks, with little improvement possible from concurrency.

- If your locking granularity is too fine, then you suffer bad performance from excess locking overhead, and you end up with complex code.

You need to find the correct balance between code complexity and performance, while still satisfying your locking requirements.

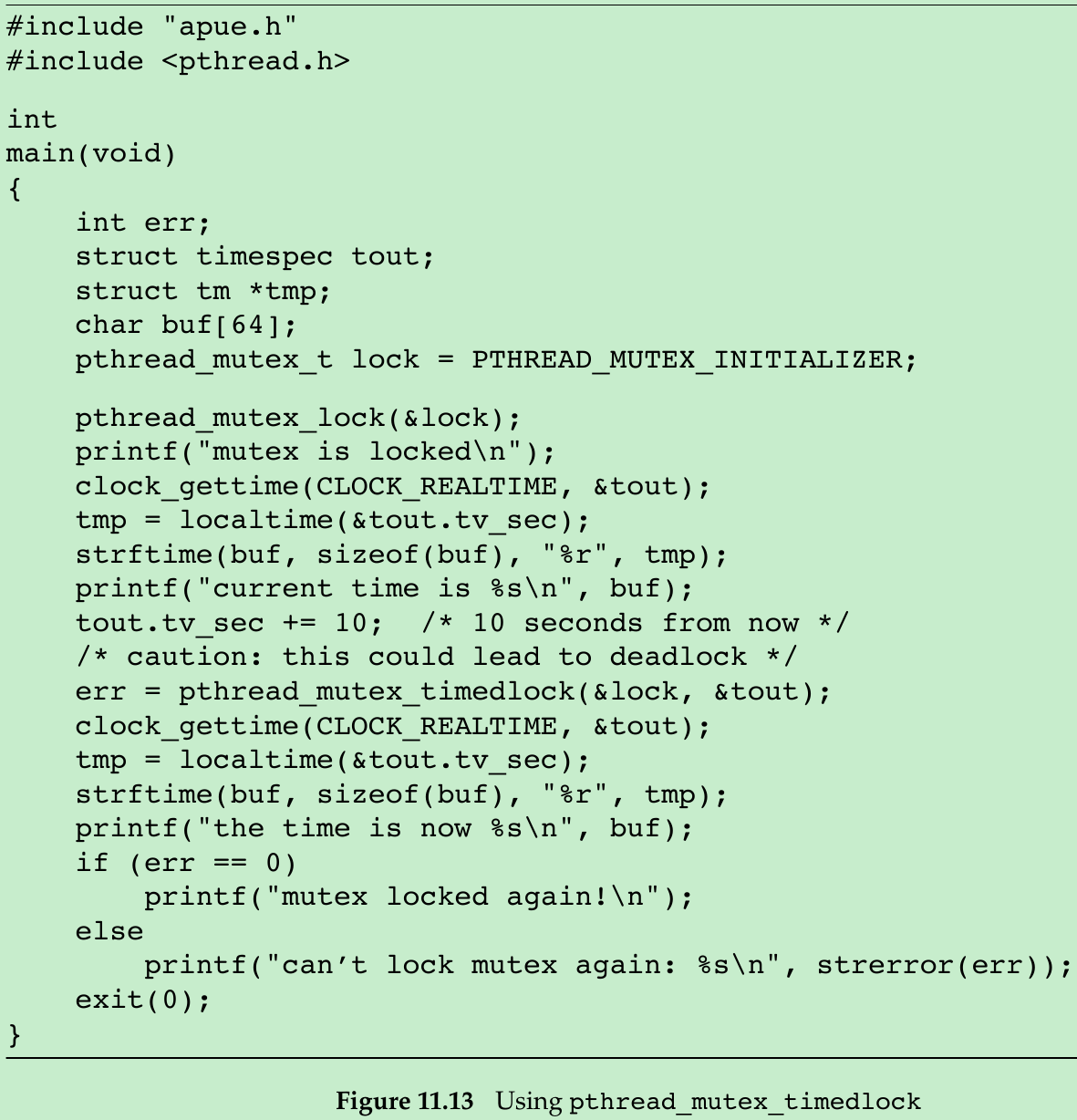

11.6.3 pthread_mutex_timedlock Function

```c
#include <pthread.h>
#include <time.h>

int pthread_mutex_timedlock(pthread_mutex_t *restrict mutex,
                            const struct timespec *restrict tsptr);
/* Returns: 0 if OK, error number on failure */
```

- This function allows us to bound the time that a thread blocks when a mutex it is trying to acquire is already locked. When the timeout value is reached, it returns the error code ETIMEDOUT without locking the mutex.

- The timeout specifies how long we are willing to wait in terms of absolute time: we specify that we are willing to block until time X instead of saying that we are willing to block for Y seconds. The timeout is represented by the timespec structure, which describes time in terms of seconds and nanoseconds.

```c
#define _GNU_SOURCE
#include <stdio.h>
#include <stdlib.h>
#include <pthread.h>
#include <time.h>
#include <string.h>

int main()
{
    int err;
    struct timespec tout;
    struct tm *tmp;
    char buf[64];
    pthread_mutex_t lock = PTHREAD_MUTEX_INITIALIZER;

    pthread_mutex_lock(&lock);
    printf("mutex is locked\n");
    clock_gettime(CLOCK_REALTIME, &tout);
    tmp = localtime(&tout.tv_sec);
    strftime(buf, sizeof(buf), "%r", tmp);
    printf("current time is %s\n", buf);
    tout.tv_sec += 10;   /* 10 seconds from now */
    /* caution: this could lead to deadlock */
    err = pthread_mutex_timedlock(&lock, &tout);
    clock_gettime(CLOCK_REALTIME, &tout);
    tmp = localtime(&tout.tv_sec);
    strftime(buf, sizeof(buf), "%r", tmp);
    printf("the time is now %s\n", buf);
    if (err == 0) {
        printf("mutex locked again!\n");
    } else {
        printf("can't lock mutex again: %s\n", strerror(err));
    }
    exit(0);
}
```

```
$ ./a.out
mutex is locked
current time is 09:06:54 AM
the time is now 09:07:04 AM
can't lock mutex again: Connection timed out
```

- The time blocked can vary for several reasons: the start time could have been in the middle of a second, the resolution of the system's clock might not be fine enough to support the resolution of our timeout, or scheduling delays could prolong the amount of time until the program continues execution.

11.6.4 Reader-Writer (R-W) Locks

- R-W locks are well suited for data structures that are read more often than they are modified. Three states are possible with a R-W lock: locked in read mode, locked in write mode, and unlocked. Only one thread at a time can hold a R-W lock in write mode, but multiple threads can hold a R-W lock in read mode at the same time.

- When a R-W lock is

- write locked: all threads attempting to lock it block until it is unlocked.

- read locked: all threads attempting to lock it in read mode are given access, but any threads attempting to lock it in write mode block until all the threads have released their read locks.

- R-W locks usually block additional readers if a lock is already held in read mode and a thread is blocked trying to acquire the lock in write mode. This prevents a constant stream of readers from starving waiting writers.

- R-W locks are also called shared-exclusive locks: read locked -> locked in shared mode; write locked -> locked in exclusive mode.

- R-W locks must be initialized before use and destroyed before freeing their underlying memory.

```c
#include <pthread.h>

int pthread_rwlock_init(pthread_rwlock_t *restrict rwlock,
                        const pthread_rwlockattr_t *restrict attr);
int pthread_rwlock_destroy(pthread_rwlock_t *rwlock);
/* Both return: 0 if OK, error number on failure */
```

- A R-W lock is initialized by calling pthread_rwlock_init. We can pass a null pointer for attr if we want the R-W lock to have the default attributes. The PTHREAD_RWLOCK_INITIALIZER constant can be used to initialize a statically allocated R-W lock when the default attributes are sufficient.

- Before freeing the memory backing a R-W lock, we need to call pthread_rwlock_destroy to clean it up. If pthread_rwlock_init allocated any resources for the R-W lock, pthread_rwlock_destroy frees those resources. If we free the memory backing a R-W lock without first calling pthread_rwlock_destroy, any resources assigned to the lock will be lost.

```c
#include <pthread.h>

int pthread_rwlock_rdlock(pthread_rwlock_t *rwlock);
int pthread_rwlock_wrlock(pthread_rwlock_t *rwlock);
int pthread_rwlock_unlock(pthread_rwlock_t *rwlock);
/* All return: 0 if OK, error number on failure */
```

- pthread_rwlock_rdlock: read-lock a R-W lock.

- pthread_rwlock_wrlock: write-lock a R-W lock.

- pthread_rwlock_unlock: unlock a R-W lock.

- Implementations might place a limit on the number of times a R-W lock can be locked in shared mode, so we need to check the return value of pthread_rwlock_rdlock.

```c
#include <pthread.h>

int pthread_rwlock_tryrdlock(pthread_rwlock_t *rwlock);
int pthread_rwlock_trywrlock(pthread_rwlock_t *rwlock);
/* Both return: 0 if OK, error number on failure */
```

- When the lock can be acquired, these functions return 0. Otherwise, they return the error EBUSY. These functions can be used to avoid deadlocks in situations where conforming to a lock hierarchy is difficult.

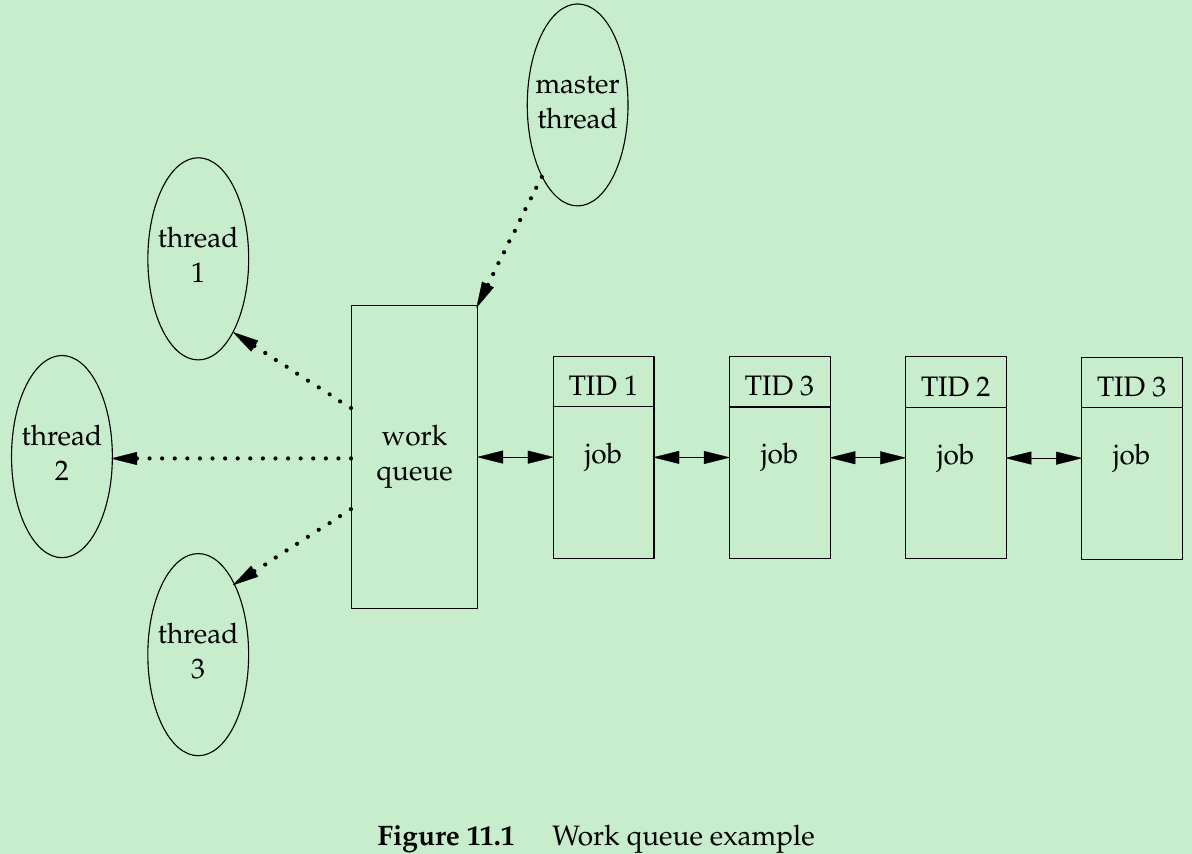

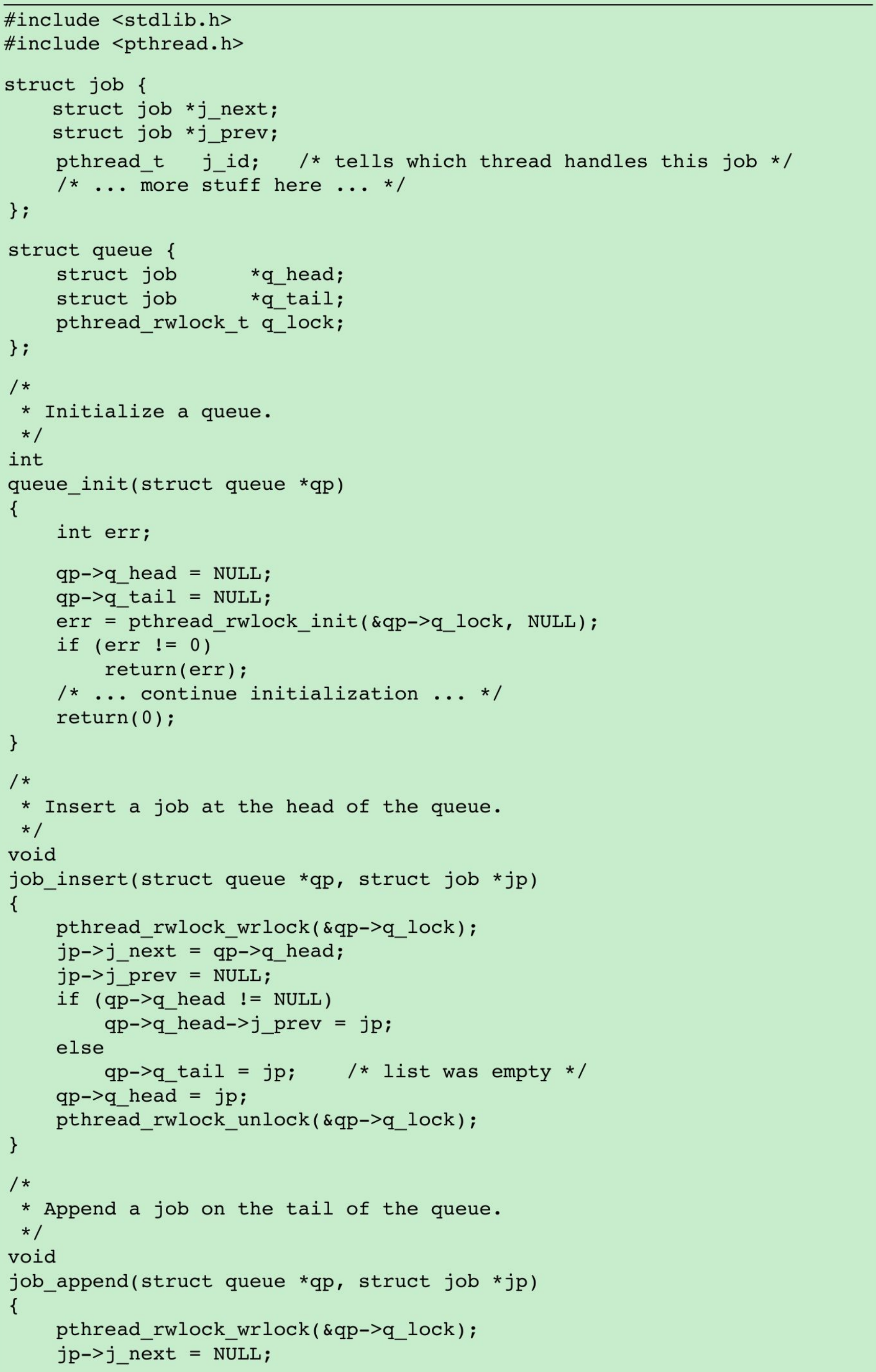

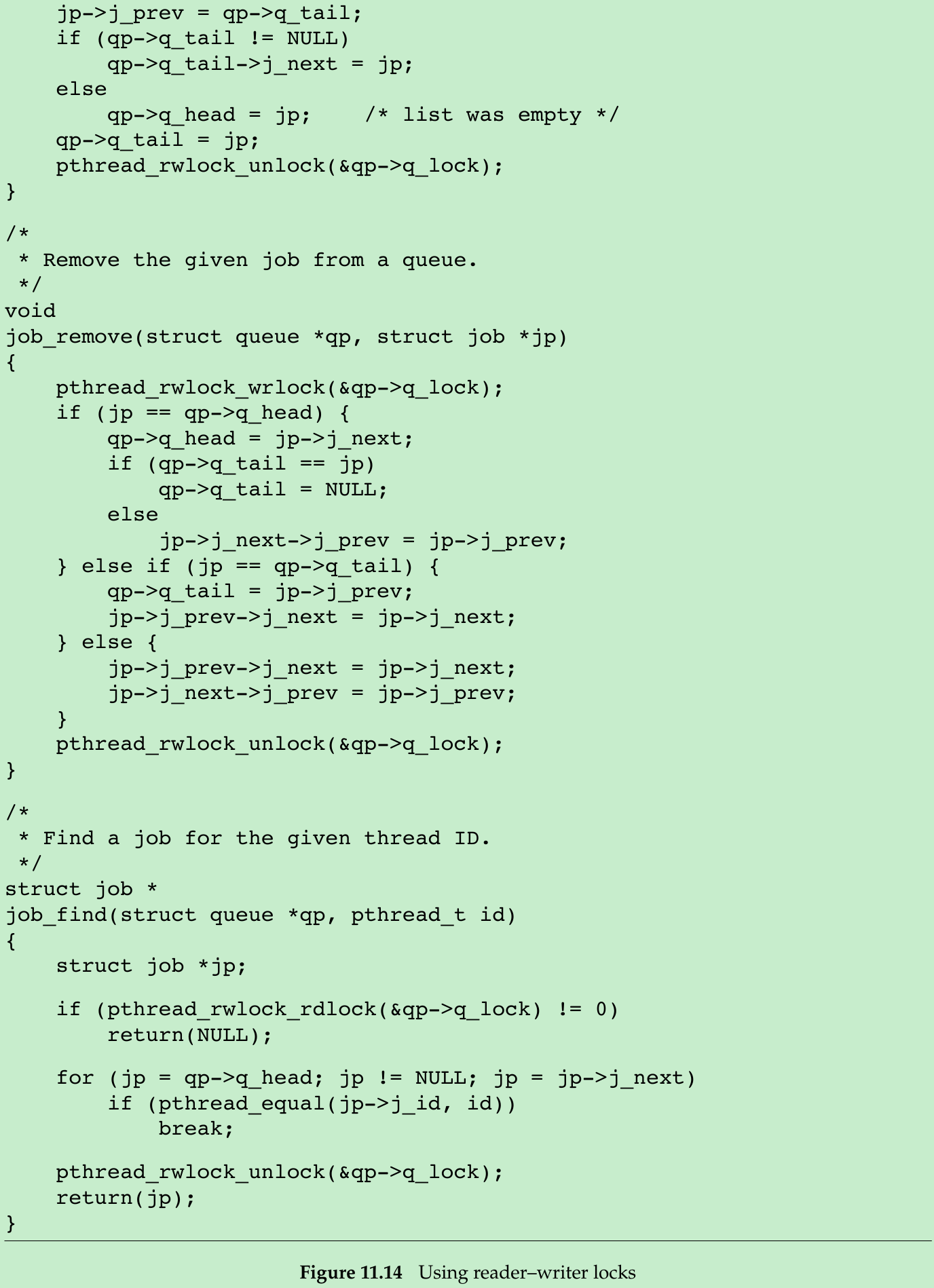

- Figure 11.14: A queue of job requests is protected by a single R-W lock. This example shows a possible implementation of Figure 11.1, whereby multiple worker threads obtain jobs assigned to them by a single master thread.

- We lock the queue’s R-W lock in write mode whenever we need to add a job to the queue or remove a job from the queue. Whenever we search the queue, we grab the lock in read mode, allowing all the worker threads to search the queue concurrently. Using a R-W lock will improve performance in this case only if threads search the queue much more frequently than they add or remove jobs.

- The worker threads take only those jobs that match their thread ID off the queue. Since the job structures are used only by one thread at a time, they don’t need any extra locking.
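Figure 11.14 appears only as images above, so here is a simplified sketch of the same idea rather than the figure itself: list updates take the R-W lock in write mode and searches take it in read mode. The structure layout follows the description (jobs tagged with the ID of the thread that should run them); the payload is omitted and only insertion at the head is shown, both simplifications.

```c
#define _POSIX_C_SOURCE 200809L
#include <pthread.h>
#include <stddef.h>

struct job {
    struct job *j_next;
    struct job *j_prev;
    pthread_t   j_id;        /* which worker thread handles this job */
    /* ... job payload would go here ... */
};

struct queue {
    struct job      *q_head;
    struct job      *q_tail;
    pthread_rwlock_t q_lock;
};

int queue_init(struct queue *qp)
{
    qp->q_head = qp->q_tail = NULL;
    return pthread_rwlock_init(&qp->q_lock, NULL);
}

/* Insert at the head: modifies the list, so write mode. */
void job_insert(struct queue *qp, struct job *jp)
{
    pthread_rwlock_wrlock(&qp->q_lock);
    jp->j_next = qp->q_head;
    jp->j_prev = NULL;
    if (qp->q_head != NULL)
        qp->q_head->j_prev = jp;
    else
        qp->q_tail = jp;              /* list was empty */
    qp->q_head = jp;
    pthread_rwlock_unlock(&qp->q_lock);
}

/* Unlink a job: modifies the list, so write mode. */
void job_remove(struct queue *qp, struct job *jp)
{
    pthread_rwlock_wrlock(&qp->q_lock);
    if (jp->j_prev != NULL)
        jp->j_prev->j_next = jp->j_next;
    else
        qp->q_head = jp->j_next;
    if (jp->j_next != NULL)
        jp->j_next->j_prev = jp->j_prev;
    else
        qp->q_tail = jp->j_prev;
    pthread_rwlock_unlock(&qp->q_lock);
}

/* Search only: read mode, so all workers can search concurrently. */
struct job *job_find(struct queue *qp, pthread_t id)
{
    struct job *jp;

    if (pthread_rwlock_rdlock(&qp->q_lock) != 0)
        return NULL;
    for (jp = qp->q_head; jp != NULL; jp = jp->j_next)
        if (pthread_equal(jp->j_id, id))
            break;
    pthread_rwlock_unlock(&qp->q_lock);
    return jp;
}
```

A worker would loop on `job_find(qp, pthread_self())` and then `job_remove` the job it found. Returning the job after unlocking is safe here only because, as noted above, each job is touched by a single thread.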

11.6.5 R-W Locking with Timeouts

```c
#include <pthread.h>
#include <time.h>

int pthread_rwlock_timedrdlock(pthread_rwlock_t *restrict rwlock,
                               const struct timespec *restrict tsptr);
int pthread_rwlock_timedwrlock(pthread_rwlock_t *restrict rwlock,
                               const struct timespec *restrict tsptr);

/* Both return: 0 if OK, error number on failure */
```

- tsptr points to a timespec structure specifying the time at which the thread should stop blocking. If they can’t acquire the lock before the timeout expires, these functions return the error ETIMEDOUT. The timeout specifies an absolute time, not a relative one.
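Because the timeout is absolute, a caller usually turns "N milliseconds from now" into a deadline first. The sketch below (helper names are hypothetical) builds that deadline from `clock_gettime(CLOCK_REALTIME, ...)`, the clock POSIX specifies for these R-W lock timeouts, and shows a reader timing out while another thread holds the write lock.

```c
#define _POSIX_C_SOURCE 200809L
#include <errno.h>
#include <pthread.h>
#include <time.h>

static pthread_rwlock_t tlock = PTHREAD_RWLOCK_INITIALIZER;

/* Convert "ms milliseconds from now" into an absolute CLOCK_REALTIME deadline. */
static void deadline_after_ms(struct timespec *ts, long ms)
{
    clock_gettime(CLOCK_REALTIME, ts);
    ts->tv_sec  += ms / 1000;
    ts->tv_nsec += (ms % 1000) * 1000000L;
    if (ts->tv_nsec >= 1000000000L) {      /* keep tv_nsec in [0, 1e9) */
        ts->tv_sec++;
        ts->tv_nsec -= 1000000000L;
    }
}

static void *timed_reader(void *arg)
{
    int *err = arg;
    struct timespec ts;

    deadline_after_ms(&ts, 100);
    *err = pthread_rwlock_timedrdlock(&tlock, &ts);
    if (*err == 0)
        pthread_rwlock_unlock(&tlock);
    return NULL;
}

/* Returns 1 if a reader gave up with ETIMEDOUT while we held the write lock. */
int reader_times_out_under_writer(void)
{
    pthread_t tid;
    int err = -1;

    pthread_rwlock_wrlock(&tlock);
    if (pthread_create(&tid, NULL, timed_reader, &err) == 0)
        pthread_join(tid, NULL);
    pthread_rwlock_unlock(&tlock);
    return err == ETIMEDOUT;
}
```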

11.6.6 Condition Variables

- Condition variables provide a place for threads to rendezvous. When used with mutexes, condition variables allow threads to wait in a race-free way for arbitrary conditions to occur.

- The condition itself is protected by a mutex. A thread must first lock the mutex to change the condition state. Other threads will not notice the change until they acquire the mutex, because the mutex must be locked to be able to evaluate the condition.

```c
#include <pthread.h>

int pthread_cond_init(pthread_cond_t *restrict cond,
                      const pthread_condattr_t *restrict attr);
int pthread_cond_destroy(pthread_cond_t *cond);

/* Both return: 0 if OK, error number on failure */
```

- Before a condition variable is used, it must first be initialized. A condition variable, represented by the pthread_cond_t data type, can be initialized in two ways.

- Assign constant PTHREAD_COND_INITIALIZER to a statically allocated condition variable.

- Use pthread_cond_init to initialize the dynamically allocated condition variable.

- pthread_cond_destroy deinitializes a condition variable before its underlying memory is freed.

- We create a condition variable with default attributes by setting attr to NULL.
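A sketch of the dynamic case, assuming a hypothetical `struct event` that bundles a flag with the mutex and condition variable protecting it. Both are initialized with default attributes after allocation and destroyed before `free`; a statically allocated condition variable would need only the initializer shown in the comment.

```c
#define _POSIX_C_SOURCE 200809L
#include <pthread.h>
#include <stdlib.h>

/* Static alternative, no init/destroy calls needed:
 *   static pthread_cond_t c = PTHREAD_COND_INITIALIZER;          */

/* Hypothetical heap-allocated object that owns a condition variable. */
struct event {
    pthread_mutex_t lock;    /* protects fired */
    pthread_cond_t  cond;    /* signaled when fired changes */
    int             fired;
};

struct event *event_alloc(void)
{
    struct event *ev = malloc(sizeof(*ev));

    if (ev == NULL)
        return NULL;
    ev->fired = 0;
    if (pthread_mutex_init(&ev->lock, NULL) != 0) {
        free(ev);
        return NULL;
    }
    if (pthread_cond_init(&ev->cond, NULL) != 0) {   /* NULL: default attributes */
        pthread_mutex_destroy(&ev->lock);
        free(ev);
        return NULL;
    }
    return ev;
}

void event_free(struct event *ev)
{
    pthread_cond_destroy(&ev->cond);    /* deinitialize before freeing memory */
    pthread_mutex_destroy(&ev->lock);
    free(ev);
}
```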

```c
#include <pthread.h>

int pthread_cond_wait(pthread_cond_t *restrict cond,
                      pthread_mutex_t *restrict mutex);
int pthread_cond_timedwait(pthread_cond_t *restrict cond,
                           pthread_mutex_t *restrict mutex,
                           const struct timespec *restrict tsptr);

/* Both return: 0 if OK, error number on failure */
```

- pthread_cond_wait waits for a condition to be true.

- The mutex passed to pthread_cond_wait protects the condition. The caller passes it locked to the function, which then atomically places the calling thread on the list of threads waiting for the condition and unlocks the mutex. This closes the window between the time that the condition is checked and the time that the thread goes to sleep waiting for the condition to change, so that the thread doesn’t miss a change in the condition. When pthread_cond_wait returns, the mutex is again locked.

- pthread_cond_timedwait works like pthread_cond_wait but adds a timeout. The timeout value, expressed as a timespec structure, specifies how long we are willing to wait, and it must be given as an absolute time rather than a relative time. If the timeout expires without the condition occurring, pthread_cond_timedwait will reacquire the mutex and return the error ETIMEDOUT.

- We can use the clock_gettime function (Section 6.10) to get the current time expressed as a timespec structure, but this function is not yet supported on all platforms. Alternatively, we can use the gettimeofday function to get the current time expressed as a timeval structure and translate it into a timespec structure. To obtain the absolute time for the timeout value, we can use the following function (assuming the maximum time blocked is expressed in minutes):

```c
#include <sys/time.h>
#include <stdlib.h>
#include <time.h>    /* struct timespec */

void maketimeout(struct timespec *tsp, long minutes)
{
    struct timeval now;

    /* get the current time */
    gettimeofday(&now, NULL);
    tsp->tv_sec = now.tv_sec;
    tsp->tv_nsec = now.tv_usec * 1000; /* usec to nsec */
    /* add the offset to get timeout value */
    tsp->tv_sec += minutes * 60;
}
```

- When a thread returns from a successful call to pthread_cond_wait or pthread_cond_timedwait, it needs to reevaluate the condition, since another thread might have run and already changed the condition.
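The points above combine into one idiom: lock the mutex, compute an absolute deadline once, then call `pthread_cond_timedwait` inside a loop that re-checks the condition after every wakeup. A sketch with a hypothetical `ready` flag, using `clock_gettime(CLOCK_REALTIME, ...)` (the default clock for condition variables) instead of `maketimeout`:

```c
#define _POSIX_C_SOURCE 200809L
#include <errno.h>
#include <pthread.h>
#include <time.h>

static pthread_mutex_t ready_lock = PTHREAD_MUTEX_INITIALIZER;
static pthread_cond_t  ready_cond = PTHREAD_COND_INITIALIZER;
static int ready;                            /* the condition, protected by ready_lock */

/* Wait up to secs seconds for ready != 0.
 * Returns 0 if the condition became true, otherwise the error (e.g. ETIMEDOUT). */
int wait_ready(long secs)
{
    struct timespec ts;
    int err = 0;

    clock_gettime(CLOCK_REALTIME, &ts);      /* absolute deadline, computed once */
    ts.tv_sec += secs;

    pthread_mutex_lock(&ready_lock);
    while (!ready && err == 0)               /* re-evaluate after every wakeup */
        err = pthread_cond_timedwait(&ready_cond, &ready_lock, &ts);
    if (ready)
        err = 0;                             /* condition won the race with the timeout */
    pthread_mutex_unlock(&ready_lock);
    return err;
}

void set_ready(void)
{
    pthread_mutex_lock(&ready_lock);
    ready = 1;
    pthread_mutex_unlock(&ready_lock);
    pthread_cond_signal(&ready_cond);
}
```

Computing the deadline once, outside the loop, means a spurious wakeup does not extend the total wait.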

```c
#include <pthread.h>

int pthread_cond_signal(pthread_cond_t *cond);
int pthread_cond_broadcast(pthread_cond_t *cond);

/* Both return: 0 if OK, error number on failure */
```

- There are two functions to notify threads that a condition has been satisfied.

pthread_cond_signal will wake up at least one thread waiting on a condition;

pthread_cond_broadcast will wake up all threads waiting on a condition.
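A sketch of when the difference matters, with hypothetical names: several threads wait for the same "gate open" condition, and one `pthread_cond_broadcast` releases all of them. The opener first waits (on a second condition variable) until every waiter has reached its wait loop, so the broadcast really is what wakes them; a single `pthread_cond_signal` would only be guaranteed to wake one.

```c
#define _POSIX_C_SOURCE 200809L
#include <pthread.h>

#define NWAITERS 4

static pthread_mutex_t gate_lock  = PTHREAD_MUTEX_INITIALIZER;
static pthread_cond_t  gate_cond  = PTHREAD_COND_INITIALIZER; /* "gate is open" */
static pthread_cond_t  count_cond = PTHREAD_COND_INITIALIZER; /* "one more waiter" */
static int gate_open;   /* condition the waiters wait for */
static int waiting;     /* threads that have reached the wait loop */
static int passed;      /* threads that got through the gate */

static void *waiter(void *arg)
{
    (void)arg;
    pthread_mutex_lock(&gate_lock);
    waiting++;
    pthread_cond_signal(&count_cond);        /* tell the opener we arrived */
    while (!gate_open)
        pthread_cond_wait(&gate_cond, &gate_lock);
    passed++;
    pthread_mutex_unlock(&gate_lock);
    return NULL;
}

/* Start the waiters, wait until all are blocked, then open the gate
 * with a single broadcast. Returns how many threads passed. */
int open_gate_for_all(void)
{
    pthread_t tids[NWAITERS];
    int i, created;

    gate_open = waiting = passed = 0;        /* no other threads exist yet */
    for (created = 0; created < NWAITERS; created++)
        if (pthread_create(&tids[created], NULL, waiter, NULL) != 0)
            break;

    pthread_mutex_lock(&gate_lock);
    while (waiting < created)
        pthread_cond_wait(&count_cond, &gate_lock);
    gate_open = 1;
    pthread_mutex_unlock(&gate_lock);
    pthread_cond_broadcast(&gate_cond);      /* wakes every waiter */

    for (i = 0; i < created; i++)
        pthread_join(tids[i], NULL);
    return passed;
}
```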

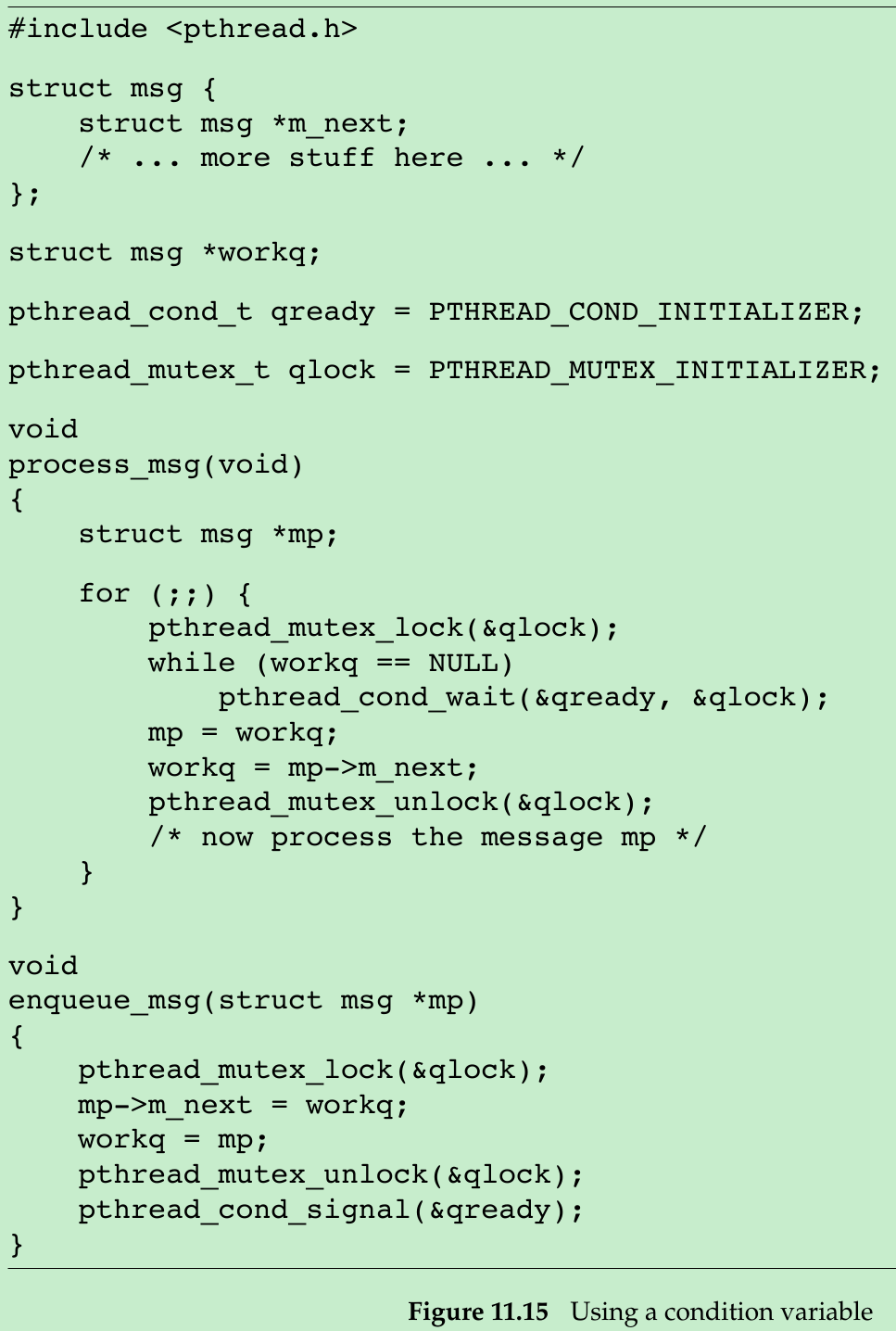

- Figure 11.15 shows an example of how to use a condition variable and a mutex together to synchronize threads.

- The condition is the state of the work queue. We protect the condition with a mutex and evaluate the condition in a while loop. When we put a message on the work queue, we need to hold the mutex, but we don’t need to hold the mutex when we signal the waiting threads. As long as it is okay for a thread to pull the message off the queue before we call pthread_cond_signal, we can do this after releasing the mutex. Since we check the condition in a while loop, this doesn’t present a problem: a thread will wake up, find that the queue is still empty, and go back to waiting again. If the code couldn’t tolerate this race, we would need to hold the mutex when we signal the threads.
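Figure 11.15 is only an image here, so the following is a runnable sketch of the pattern just described, not a copy of the figure. It assumes a hypothetical `m_value` payload, and its `dequeue_msg` returns one message instead of looping forever, so a short demo can check the result. The enqueue side holds the mutex only while linking the message and signals after unlocking, which is safe because the consumer re-checks the queue in a while loop.

```c
#define _POSIX_C_SOURCE 200809L
#include <pthread.h>
#include <stddef.h>

struct msg {
    struct msg *m_next;
    int         m_value;                   /* hypothetical payload */
};

static struct msg     *workq;              /* condition: queue is non-empty */
static pthread_cond_t  qready = PTHREAD_COND_INITIALIZER;
static pthread_mutex_t qlock  = PTHREAD_MUTEX_INITIALIZER;

/* Block until a message is available, then take it off the queue. */
struct msg *dequeue_msg(void)
{
    struct msg *mp;

    pthread_mutex_lock(&qlock);
    while (workq == NULL)                  /* while, not if: re-check after waking */
        pthread_cond_wait(&qready, &qlock);
    mp = workq;
    workq = mp->m_next;
    pthread_mutex_unlock(&qlock);
    return mp;
}

void enqueue_msg(struct msg *mp)
{
    pthread_mutex_lock(&qlock);
    mp->m_next = workq;                    /* push at head (LIFO; order doesn't matter here) */
    workq = mp;
    pthread_mutex_unlock(&qlock);
    pthread_cond_signal(&qready);          /* signal after releasing the mutex */
}

/* Consumer: takes *(int *)arg messages and stores their sum back into arg. */
static void *consumer(void *arg)
{
    int *io = arg, n = *io, sum = 0;

    while (n-- > 0)
        sum += dequeue_msg()->m_value;
    *io = sum;
    return NULL;
}

int run_queue_demo(void)
{
    struct msg msgs[3] = { {NULL, 1}, {NULL, 2}, {NULL, 3} };
    pthread_t tid;
    int io = 3, i;

    if (pthread_create(&tid, NULL, consumer, &io) != 0)
        return -1;
    for (i = 0; i < 3; i++)
        enqueue_msg(&msgs[i]);
    pthread_join(tid, NULL);
    return io;                             /* sum of all payloads */
}
```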

11.6.7 Spin Locks

- A spin lock is like a mutex except that instead of blocking a process by sleeping, the process is blocked by busy-waiting (spinning) until the lock can be acquired. A spin lock could be used in situations where locks are held for short periods of time and threads don’t want to incur the cost of being descheduled.

- Spin locks are often used as low-level primitives to implement other types of locks. They can lead to wasting CPU resources: while a thread is spinning and waiting for a lock to become available, the CPU can’t do anything else. This is why spin locks should be held only for short periods of time.

- Spin locks are useful when used in a nonpreemptive kernel: besides providing a mutual exclusion mechanism, they block interrupts so an interrupt handler can’t deadlock the system by trying to acquire a spin lock that is already locked(think of interrupts as another type of preemption). In these types of kernels, interrupt handlers can’t sleep, so the only synchronization primitives they can use are spin locks.

- At user level, spin locks are not as useful unless you are running in a real-time scheduling class that doesn’t allow preemption. User-level threads running in a time-sharing scheduling class can be descheduled when their time quantum expires or when a thread with a higher scheduling priority becomes runnable. In these cases, if a thread is holding a spin lock, it will be put to sleep and other threads blocked on the lock will continue spinning longer than intended.

- Many mutex implementations are so efficient that the performance of applications using mutex locks is equivalent to their performance if they had used spin locks. Some mutex implementations will spin for a limited amount of time trying to acquire the mutex, and only sleep when the spin count threshold is reached. These factors, combined with advances in modern processors that allow them to switch contexts quickly, make spin locks useful only in limited circumstances.

```c
#include <pthread.h>

int pthread_spin_init(pthread_spinlock_t *lock, int pshared);
int pthread_spin_destroy(pthread_spinlock_t *lock);

/* Both return: 0 if OK, error number on failure */
```

- Only one attribute is specified for spin locks, and it matters only if the platform supports the Thread Process-Shared Synchronization option (Figure 2.5).

- pshared represents the process-shared attribute, which indicates how the spin lock will be acquired.

- pshared = PTHREAD_PROCESS_SHARED: the spin lock can be acquired by threads that have access to the lock’s underlying memory, even if those threads are from different processes.

- pshared = PTHREAD_PROCESS_PRIVATE: the spin lock can be accessed only from threads within the process that initialized it.

```c
#include <pthread.h>

int pthread_spin_lock(pthread_spinlock_t *lock);
int pthread_spin_trylock(pthread_spinlock_t *lock);
int pthread_spin_unlock(pthread_spinlock_t *lock);

/* All return: 0 if OK, error number on failure */
```

- pthread_spin_lock: spins until the lock is acquired.

pthread_spin_trylock: returns the EBUSY error if the lock can’t be acquired immediately. It doesn’t spin.

pthread_spin_unlock: unlocks a spin lock.

- If a spin lock is currently unlocked, then pthread_spin_lock can lock it without spinning. If the thread already has it locked, the results are undefined: pthread_spin_lock could fail with the EDEADLK error (or some other error), or the call could spin indefinitely. The behavior depends on the implementation. If we try to unlock a spin lock that is not locked, the results are also undefined.

- If either pthread_spin_lock or pthread_spin_trylock returns 0, then the spin lock is locked. We should not call any functions that might sleep while holding the spin lock. If we do, then we’ll waste CPU resources by extending the time other threads will spin if they try to acquire it.
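A minimal sketch of the intended use: a process-private spin lock guarding a critical section that is only a single increment, with nothing inside that could sleep. The counter, thread count and iteration count are hypothetical; on a heavily oversubscribed machine a mutex could well perform just as well, as noted above.

```c
#define _POSIX_C_SOURCE 200809L
#include <pthread.h>

#define NTHREADS 4
#define NITERS   100000

static pthread_spinlock_t spin;
static long counter;

static void *bump(void *arg)
{
    (void)arg;
    for (int i = 0; i < NITERS; i++) {
        pthread_spin_lock(&spin);      /* busy-waits while another thread holds it */
        counter++;                     /* tiny critical section, nothing that sleeps */
        pthread_spin_unlock(&spin);
    }
    return NULL;
}

/* Returns the final counter value, or -1 on setup failure. */
long spin_counter_demo(void)
{
    pthread_t tids[NTHREADS];
    int i, created;

    if (pthread_spin_init(&spin, PTHREAD_PROCESS_PRIVATE) != 0)
        return -1;
    counter = 0;
    for (created = 0; created < NTHREADS; created++)
        if (pthread_create(&tids[created], NULL, bump, NULL) != 0)
            break;
    for (i = 0; i < created; i++)
        pthread_join(tids[i], NULL);
    pthread_spin_destroy(&spin);
    return created == NTHREADS ? counter : -1;
}
```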

11.6.8 Barriers

- Barriers are a synchronization mechanism that can be used to coordinate multiple threads working in parallel. A barrier allows each thread to wait until all cooperating threads have reached the same point, and then continue executing from there.

- Barrier objects allow an arbitrary number of threads to wait until all of the threads have completed processing, but the threads don’t have to exit. They can continue working after all threads have reached the barrier.

```c
#include <pthread.h>

int pthread_barrier_init(pthread_barrier_t *restrict barrier,
                         const pthread_barrierattr_t *restrict attr,
                         unsigned int count);
int pthread_barrier_destroy(pthread_barrier_t *barrier);

/* Both return: 0 if OK, error number on failure */
```

- When we initialize a barrier, we use the count argument to specify the number of threads that must reach the barrier before all of the threads will be allowed to continue.

- We set attr to NULL to initialize a barrier with the default attributes.

- If pthread_barrier_init allocated any resources for the barrier, the resources will be freed when we deinitialize the barrier by calling pthread_barrier_destroy.

```c
#include <pthread.h>

int pthread_barrier_wait(pthread_barrier_t *barrier);

/* Returns: 0 or PTHREAD_BARRIER_SERIAL_THREAD if OK, error number on failure */
```

- We use pthread_barrier_wait to indicate that a thread is done with its work and is ready to wait for all the other threads to catch up. The thread calling pthread_barrier_wait is put to sleep if the barrier count is not yet satisfied. If the thread is the last one to call pthread_barrier_wait, thereby satisfying the barrier count, all of the threads are awakened.

- To one arbitrary thread, it will appear as if pthread_barrier_wait returned a value of PTHREAD_BARRIER_SERIAL_THREAD. The remaining threads see a return value of 0. This allows one thread to continue as the master to act on the results of the work done by all of the other threads.

- Once the barrier count is reached and the threads are unblocked, the barrier can be used again. The barrier count can’t be changed unless we call the pthread_barrier_destroy function followed by the pthread_barrier_init function with a different count.
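A sketch of the return-value and reuse rules, with hypothetical names: four threads meet at the same barrier twice. In each round exactly one arbitrary thread sees PTHREAD_BARRIER_SERIAL_THREAD and the rest see 0, and the barrier needs no re-initialization between rounds because its count is unchanged. (Barriers are part of POSIX but are not provided on every platform.)

```c
#define _POSIX_C_SOURCE 200809L
#include <pthread.h>

#define NTHR   4   /* threads that must reach the barrier */
#define ROUNDS 2   /* the same barrier is reused for each round */

static pthread_barrier_t bar;
static pthread_mutex_t   cnt_lock = PTHREAD_MUTEX_INITIALIZER;
static int serial_count;   /* returns of PTHREAD_BARRIER_SERIAL_THREAD */
static int zero_count;     /* returns of 0 */

static void *worker(void *arg)
{
    (void)arg;
    for (int r = 0; r < ROUNDS; r++) {
        /* ... this thread's share of round r would go here ... */
        int rc = pthread_barrier_wait(&bar);
        pthread_mutex_lock(&cnt_lock);
        if (rc == PTHREAD_BARRIER_SERIAL_THREAD)
            serial_count++;        /* one arbitrary thread per round */
        else if (rc == 0)
            zero_count++;
        pthread_mutex_unlock(&cnt_lock);
    }
    return NULL;
}

/* Returns how many times PTHREAD_BARRIER_SERIAL_THREAD was seen, or -1. */
int barrier_rounds(void)
{
    pthread_t tids[NTHR];
    int i;

    serial_count = zero_count = 0;
    if (pthread_barrier_init(&bar, NULL, NTHR) != 0)
        return -1;
    for (i = 0; i < NTHR; i++)
        if (pthread_create(&tids[i], NULL, worker, NULL) != 0)
            return -1;   /* sketch only: already-started threads stay blocked */
    for (i = 0; i < NTHR; i++)
        pthread_join(tids[i], NULL);
    pthread_barrier_destroy(&bar);
    return serial_count;
}
```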

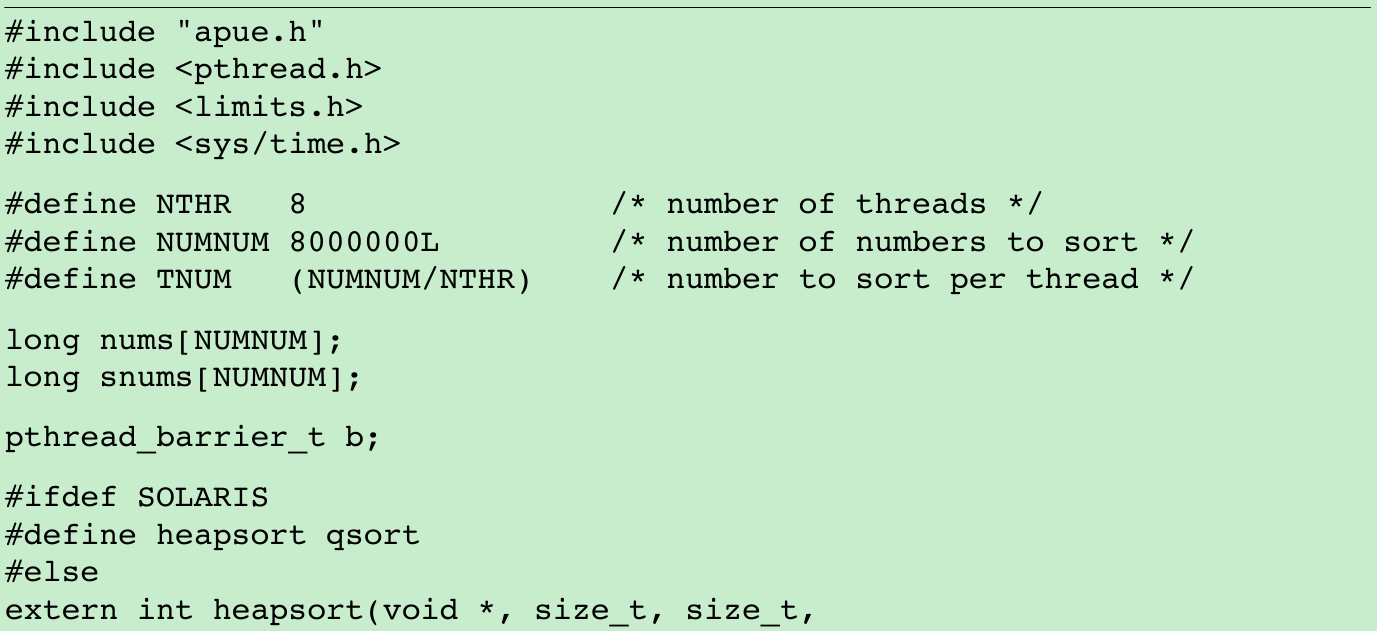

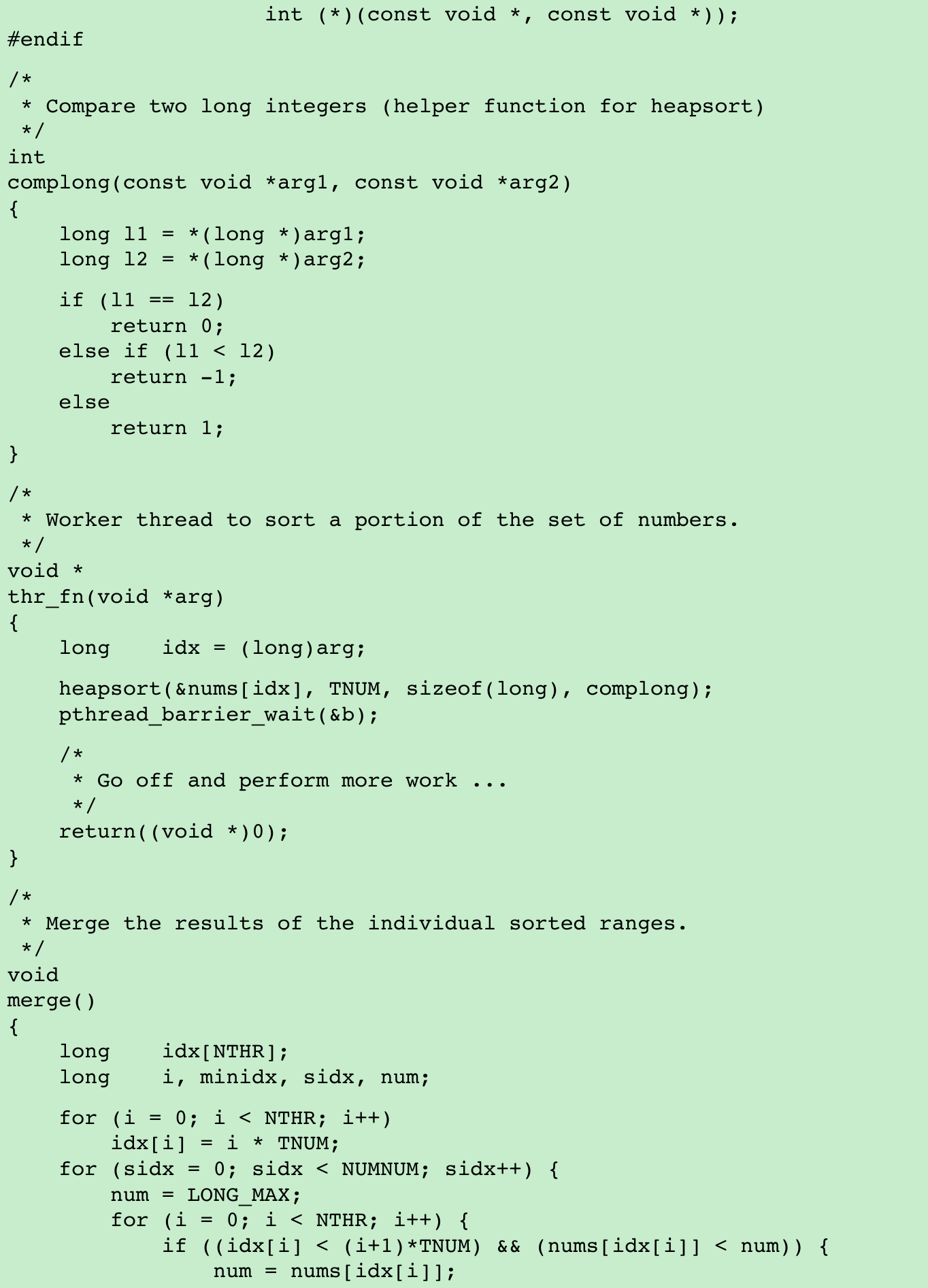

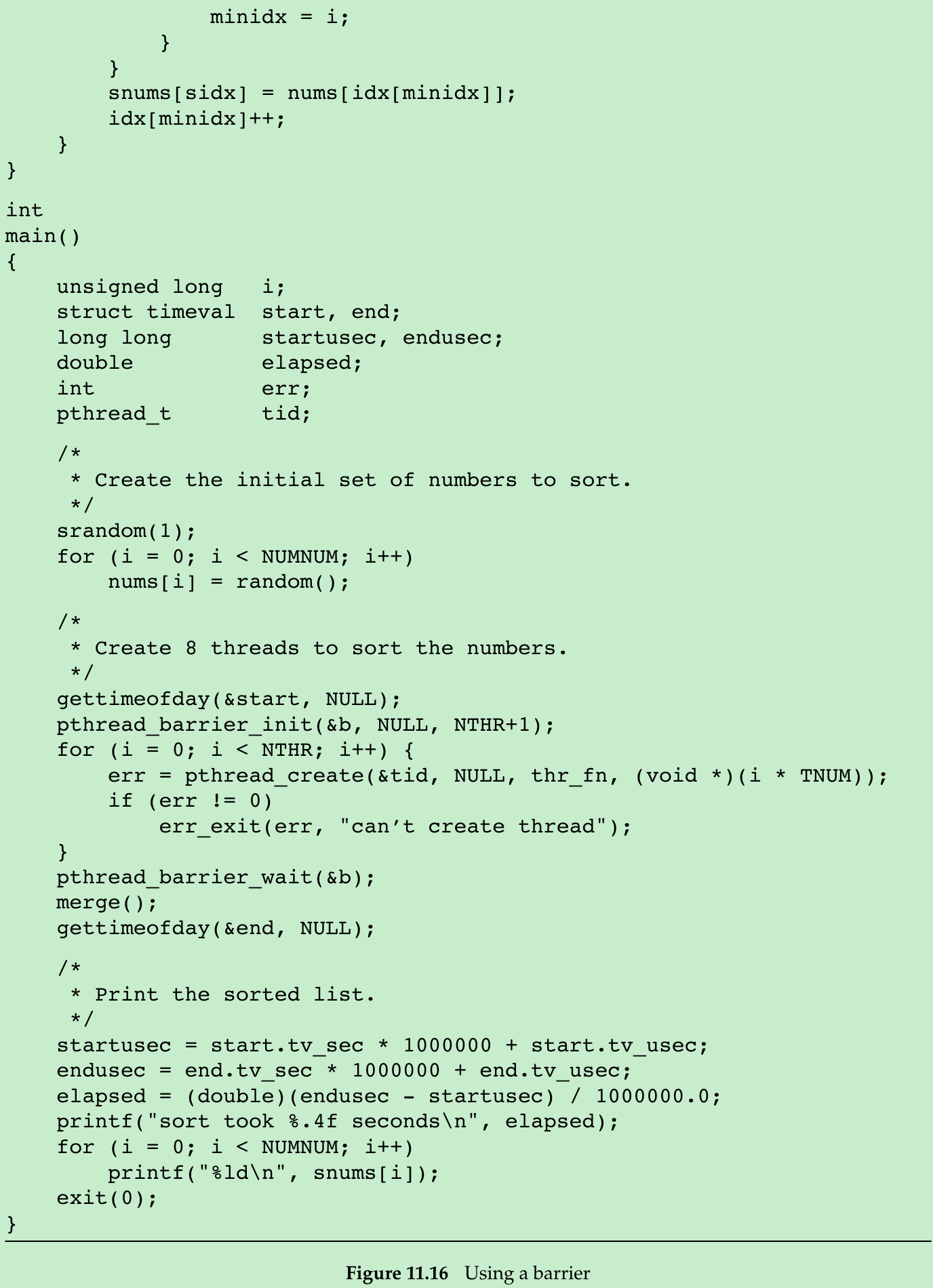

- Figure 11.16 shows the use of a barrier in a situation where the threads perform only one task.

- We use eight threads to divide the job of sorting 8 million numbers. Each thread sorts 1 million numbers using the heap-sort algorithm. Then the main thread calls a function to merge the results.

- We don’t need to use the PTHREAD_BARRIER_SERIAL_THREAD return value from pthread_barrier_wait to decide which thread merges the results, because we use the main thread for this task. That is why we specify the barrier count as one more than the number of worker threads; the main thread counts as one waiter.
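Figure 11.16 is only available as images here, so this is a scaled-down sketch of the same structure, not the figure's code. It makes several assumptions: 4 workers and 4000 numbers instead of 8 and 8 million, `qsort` in place of heap sort (the BSD `heapsort` function is not available everywhere), and the workers are joined at the end. As in the text, the barrier count is NTHR + 1 so the calling thread waits with the workers and then does the merge itself.

```c
#define _POSIX_C_SOURCE 200809L
#include <pthread.h>
#include <stdlib.h>

#define NTHR   4                  /* worker threads (the text uses 8) */
#define NUMNUM 4000L              /* numbers to sort (the text uses 8 million) */
#define TNUM   (NUMNUM / NTHR)    /* numbers per worker */

static long nums[NUMNUM];         /* input, sorted slice by slice */
static long snums[NUMNUM];        /* merged output */
static pthread_barrier_t sort_barrier;

static int complong(const void *a, const void *b)
{
    long l1 = *(const long *)a, l2 = *(const long *)b;
    return (l1 > l2) - (l1 < l2);
}

/* Each worker sorts its own slice, then waits at the barrier. */
static void *thr_fn(void *arg)
{
    qsort(arg, TNUM, sizeof(long), complong);
    pthread_barrier_wait(&sort_barrier);
    return NULL;
}

/* Merge the NTHR sorted slices of nums into snums. */
static void merge(void)
{
    long idx[NTHR];               /* next unread position in each slice */
    long i, minidx, sidx;

    for (i = 0; i < NTHR; i++)
        idx[i] = i * TNUM;
    for (sidx = 0; sidx < NUMNUM; sidx++) {
        minidx = -1;
        for (i = 0; i < NTHR; i++)
            if (idx[i] < (i + 1) * TNUM &&
                (minidx < 0 || nums[idx[i]] < nums[idx[minidx]]))
                minidx = i;
        snums[sidx] = nums[idx[minidx]++];
    }
}

/* Fill nums with pseudo-random values, sort in parallel, merge. 0 on success. */
int parallel_sort(unsigned seed)
{
    pthread_t tids[NTHR];
    long i;

    srand(seed);
    for (i = 0; i < NUMNUM; i++)
        nums[i] = rand();
    /* NTHR workers + the calling thread, which performs the merge */
    if (pthread_barrier_init(&sort_barrier, NULL, NTHR + 1) != 0)
        return -1;
    for (i = 0; i < NTHR; i++)
        if (pthread_create(&tids[i], NULL, thr_fn, &nums[i * TNUM]) != 0)
            return -1;            /* sketch only: started workers stay blocked */
    pthread_barrier_wait(&sort_barrier);   /* returns once every slice is sorted */
    merge();
    for (i = 0; i < NTHR; i++)
        pthread_join(tids[i], NULL);
    pthread_barrier_destroy(&sort_barrier);
    return 0;
}
```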

11.7 Summary

Exercises(Redo)
