4-Connection Management


Please indicate the source: http://blog.csdn.net/gaoxiangnumber1

Welcome to my github: https://github.com/gaoxiangnumber1

4.1 TCP Connections

- A client application can open a TCP/IP connection to a server application. Once the connection is established, messages exchanged between the client’s and server’s computers will never be lost, damaged, or received out of order.

- Though messages won’t be lost or corrupted, communication between client and server can be severed if a computer or network breaks. In this case, the client and server are notified of the communication breakdown.

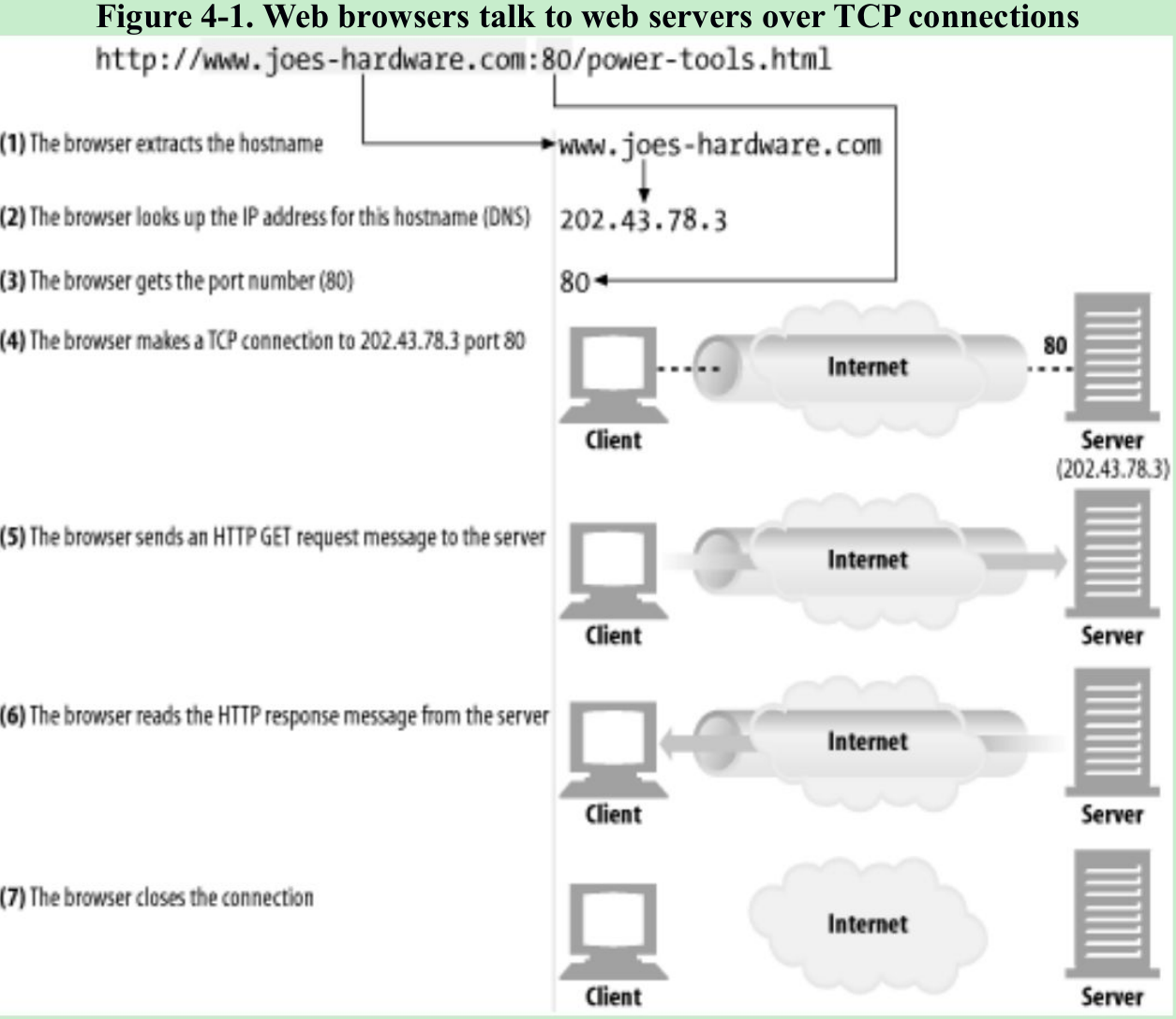

- Given the URL “http://www.joes-hardware.com:80/power-tools.html”, your browser performs the steps shown in Figure 4-1.

In Steps 1-3, the IP address and port number of the server are pulled from the URL. A TCP connection is made to the web server in Step 4 and a request message is sent across the connection in Step 5. The response is read in Step 6, and the connection is closed in Step 7.
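Steps 1-3 can be sketched with Python's standard library. This is a minimal illustration, not how any particular browser does it; the helper name `host_and_port` and the `DEFAULT_PORTS` table are assumptions made for this example. Resolving the hostname to an IP address (a DNS lookup) is left out.

```python
from urllib.parse import urlsplit

# Assumed defaults for this sketch: the well-known ports for each scheme.
DEFAULT_PORTS = {"http": 80, "https": 443}


def host_and_port(url):
    """Pull the server hostname and port number out of an HTTP(S) URL."""
    parts = urlsplit(url)
    # Fall back to the scheme's default port when the URL does not name one.
    port = parts.port if parts.port is not None else DEFAULT_PORTS[parts.scheme]
    return parts.hostname, port


print(host_and_port("http://www.joes-hardware.com:80/power-tools.html"))
```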

4.1.1 TCP Reliable Data Pipes

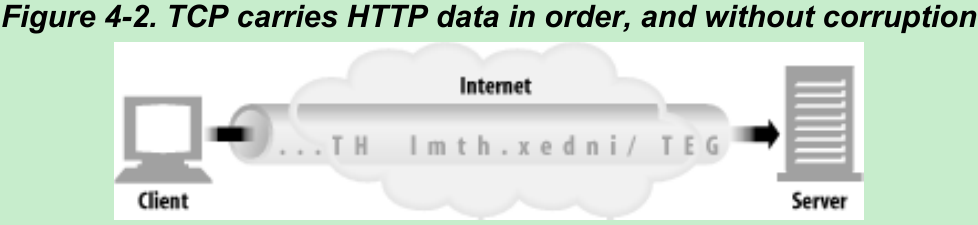

- TCP gives HTTP a reliable bit pipe. Bytes stuffed in one side of a TCP connection come out the other side correctly, and in the right order(Figure 4-2).

4.1.2 TCP Streams Are Segmented and Shipped by IP Packets

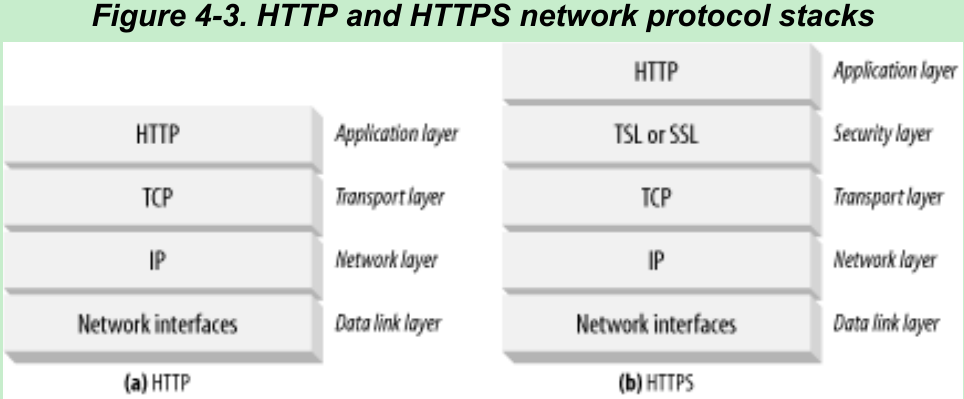

- HTTP is layered over TCP, which sends its data in IP datagrams. A secure variant, HTTPS, inserts a cryptographic encryption layer (TLS or SSL) between HTTP and TCP (Figure 4-3b).

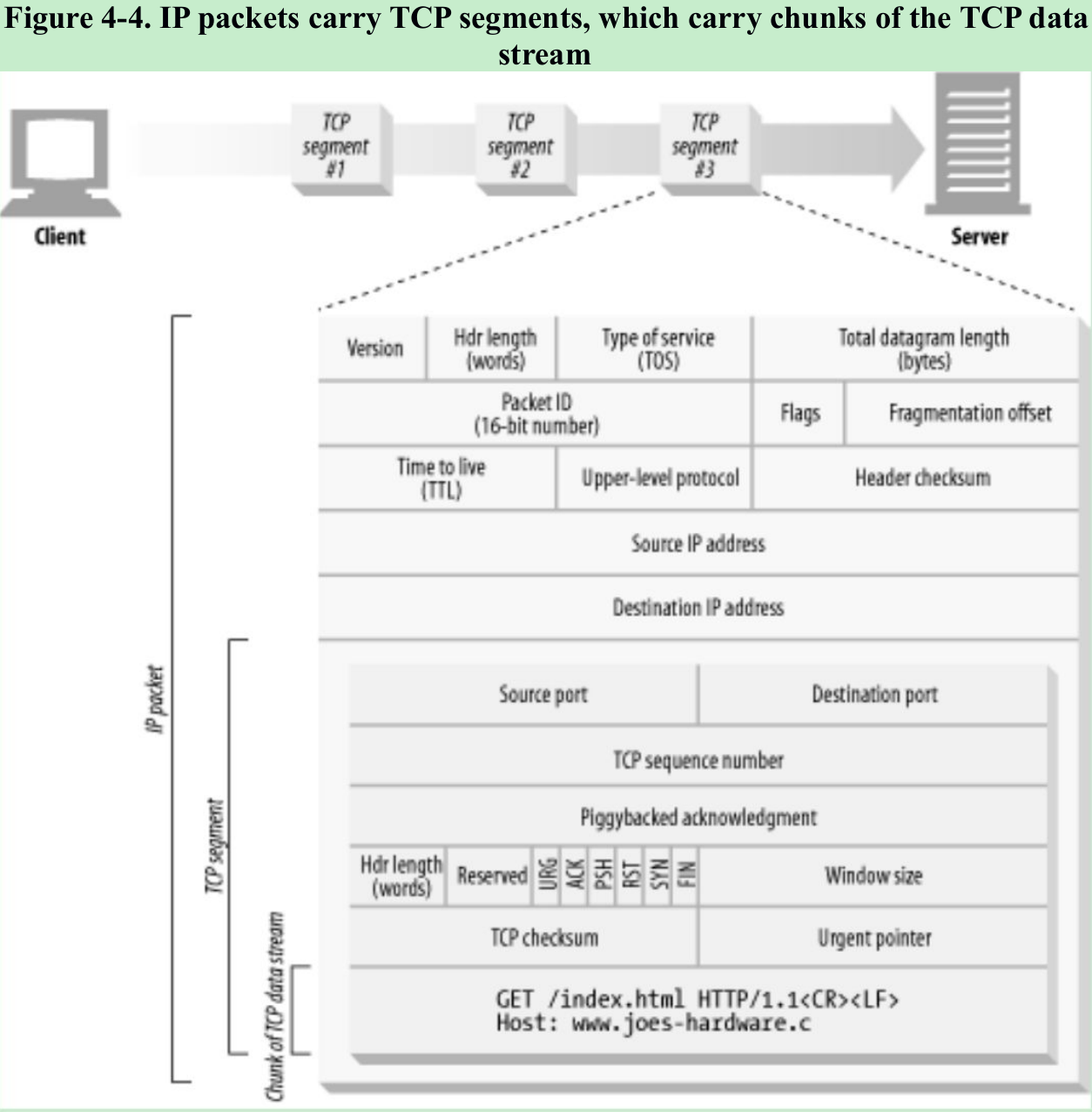

- When HTTP wants to transmit a message, it streams the contents of the message data through an open TCP connection. TCP takes the stream of data, chops up the data stream into segments, and transports the segments across the Internet inside IP packets(Figure 4-4).

- Each TCP segment is carried by an IP packet from one IP address to another IP address. Each of these IP packets contains:

- An IP packet header(usually 20 bytes)

- A TCP segment header(usually 20 bytes)

- A chunk of TCP data(0 or more bytes)
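To get a feel for what these header sizes mean, the sketch below computes the share of each packet that is actual TCP data. It assumes the usual 20-byte headers listed above with no IP or TCP options; the function name is made up for this example.

```python
IP_HEADER_BYTES = 20   # usual size, no IP options
TCP_HEADER_BYTES = 20  # usual size, no TCP options


def payload_fraction(data_bytes):
    """Fraction of an IP packet's bytes that are TCP data rather than headers."""
    total = IP_HEADER_BYTES + TCP_HEADER_BYTES + data_bytes
    return data_bytes / total


for size in (1, 100, 1460):
    print(size, round(payload_fraction(size), 3))
```

A packet carrying a single byte of data is almost entirely header, which is the inefficiency that Nagle's algorithm (section 4.2.6) tries to avoid.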

4.1.3 Keeping TCP Connections Straight

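- A TCP connection is distinguished by four values: source IP address, source port, destination IP address, and destination port. Two connections may share some of these values, but no two connections can share all four.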

4.1.4 Programming with TCP Sockets

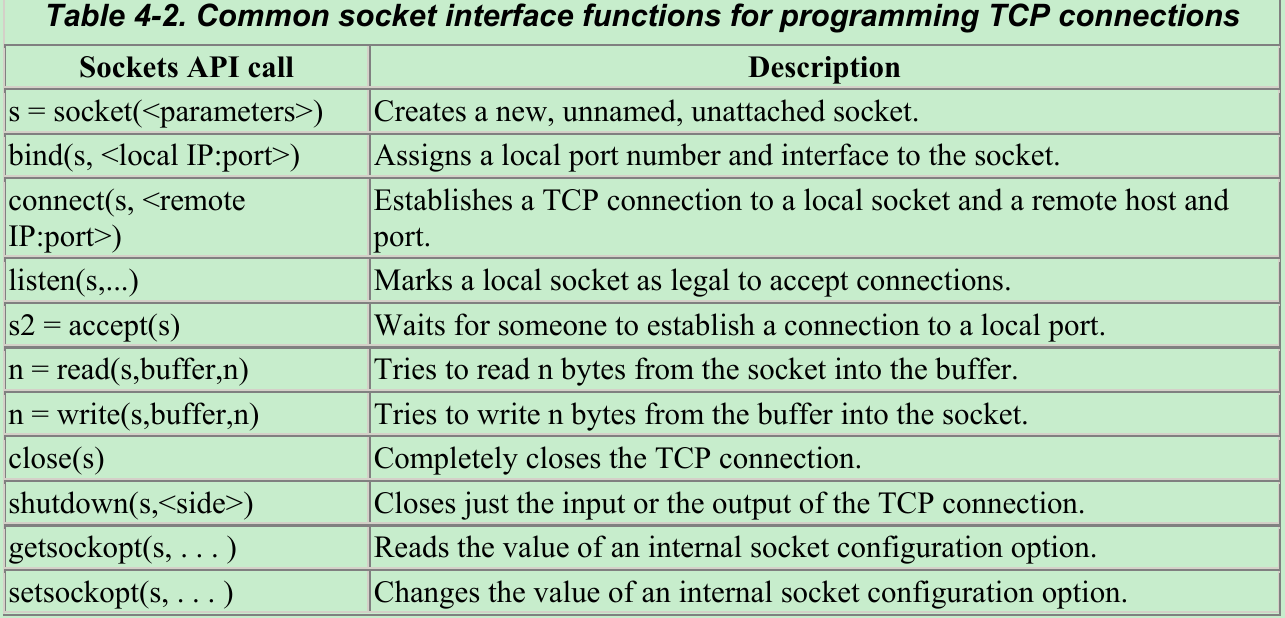

- Table 4-2 shows some interfaces provided by the sockets API. This sockets API hides the details of TCP and IP from the HTTP programmer.

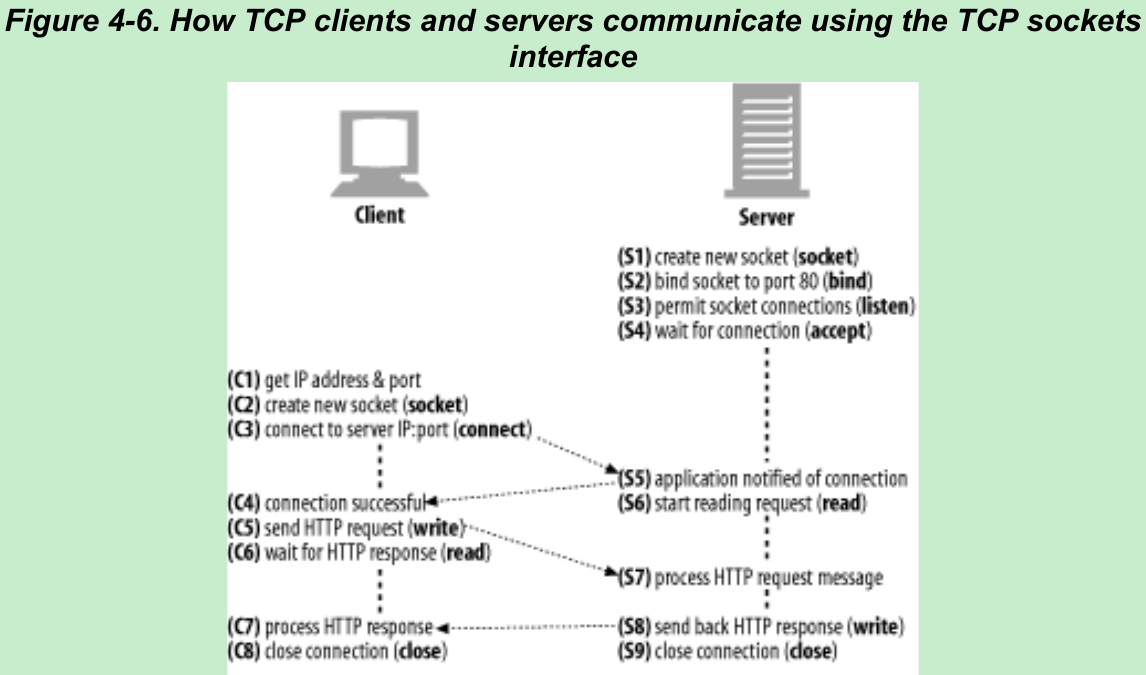

- The pseudocode in Figure 4-6 sketches how we use the sockets API to highlight the steps the client and server could perform to implement Fig.4-1.
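The same client and server steps can be written as a runnable loopback sketch in Python, whose `socket` module wraps the sockets API. This is a simplified stand-in for the pseudocode, not a real web server: the names `serve_once`, `fetch`, and `demo`, the fixed "hello" body, and the use of HTTP/1.0 are all assumptions made for this example.

```python
import socket
import threading


def serve_once(listener):
    """Server side: accept one connection, read a request, write a response, close."""
    conn, _addr = listener.accept()            # wait for a client to connect
    try:
        request = b""
        while b"\r\n\r\n" not in request:      # read until the blank line ending the headers
            chunk = conn.recv(4096)
            if not chunk:
                break
            request += chunk
        body = b"hello"
        head = "HTTP/1.0 200 OK\r\nContent-Length: {}\r\n\r\n".format(len(body))
        conn.sendall(head.encode("ascii") + body)   # write the response
    finally:
        conn.close()                           # close the connection


def fetch(host, port, path):
    """Client side: connect, send a GET request, read until the server closes."""
    sock = socket.socket(socket.AF_INET, socket.SOCK_STREAM)   # create a socket
    sock.settimeout(5)
    try:
        sock.connect((host, port))                             # connect to the server
        request = "GET {} HTTP/1.0\r\nHost: {}\r\n\r\n".format(path, host)
        sock.sendall(request.encode("ascii"))                  # write the request
        response = b""
        while True:                                            # read the response
            chunk = sock.recv(4096)
            if not chunk:                                      # end of stream
                break
            response += chunk
        return response
    finally:
        sock.close()                                           # close the connection


def demo():
    """Run the server in a thread on a loopback port and fetch one page from it."""
    listener = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    listener.bind(("127.0.0.1", 0))            # port 0: let the OS pick a free port
    listener.listen(1)
    port = listener.getsockname()[1]
    server = threading.Thread(target=serve_once, args=(listener,), daemon=True)
    server.start()
    try:
        return fetch("127.0.0.1", port, "/power-tools.html")
    finally:
        server.join(timeout=5)
        listener.close()
```

Calling `demo()` returns the raw response bytes, status line and headers included, because nothing here parses HTTP.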

4.2 TCP Performance Considerations

4.2.1 HTTP Transaction Delays

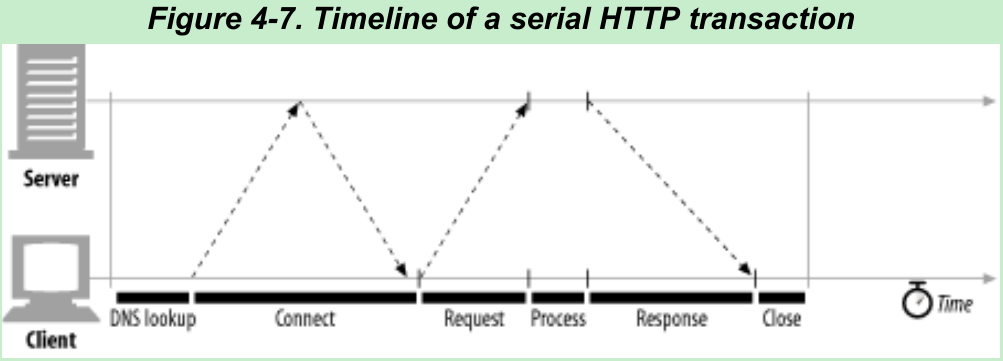

- Figure 4-7. The transaction processing time can be small compared to the time required to set up TCP connections and transfer the request and response messages. Unless the client or server is overloaded or executing complex dynamic resources, most HTTP delays are caused by TCP network delays.

- Possible causes of delay in an HTTP transaction:

- A client first needs to determine the IP address and port number of the web server from the URI. If the hostname in the URI was not recently visited, it may take tens of seconds to convert the hostname from a URI into an IP address using the DNS resolution infrastructure.

Most HTTP clients keep a DNS cache of IP addresses for recently accessed sites. When the IP address is cached, the lookup is instantaneous. Because most web browsing is to a small number of popular sites, hostnames usually are resolved very quickly.

- Next, the client sends a TCP connection request to the server and waits for the server to send back a connection acceptance reply. Connection setup delay occurs for every new TCP connection. This usually takes at most a second or two, but it can add up quickly when hundreds of HTTP transactions are made.

- Once the connection is established, the client sends the HTTP request over the newly established TCP pipe. The web server reads the request message from the TCP connection as the data arrives and processes the request. It takes time for the request message to travel over the Internet and get processed by the server.

- The web server then writes back the HTTP response, which also takes time.


- The magnitude of these TCP network delays depends on hardware speed, the load of the network and server, the size of the request and response messages, and the distance between client and server.

4.2.2 Performance Focus Areas

4.2.3 TCP Connection Handshake Delays

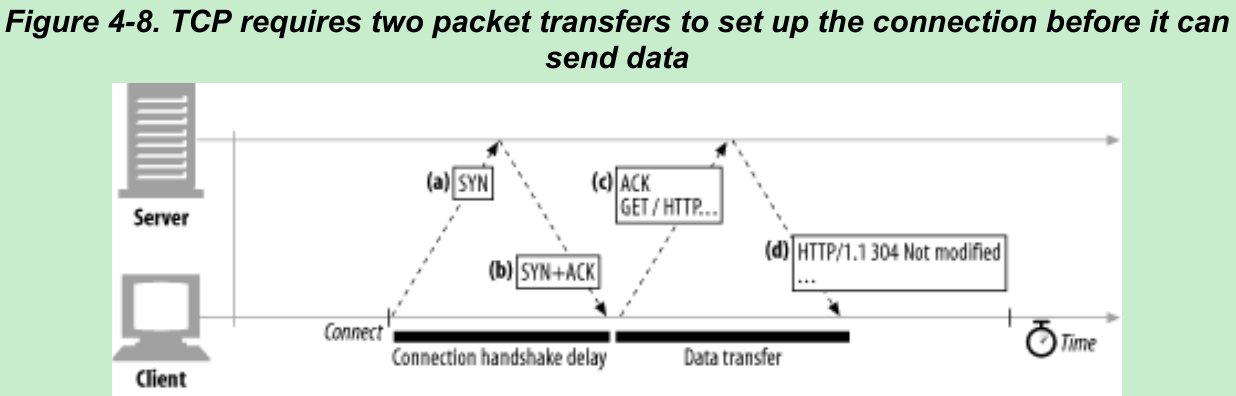

- When you set up a new TCP connection, before you send any data, the TCP software exchanges a series of IP packets to negotiate the terms of the connection(Figure 4-8). These exchanges can significantly degrade HTTP performance if the connections are used for small data transfers.

- Steps in the TCP connection handshake:

- To request a new TCP connection, the client sends a small TCP packet(usually 40-60 bytes) that has a “SYN” flag set to the server. Figure 4-8a.

- If the server accepts the connection, it computes some connection parameters and sends a TCP packet back to the client, with both “SYN” and “ACK” flags set, indicating that the connection request is accepted(Figure 4-8b).

- Finally, the client sends an acknowledgment back to the server, letting it know that the connection was established successfully (Figure 4-8c). TCP lets the client send data in this acknowledgment packet.

- The SYN/SYN+ACK handshake(Figure 4-8a and b) creates a delay when HTTP transactions do not exchange much data, as is commonly the case. The TCP connect ACK packet(Figure 4-8c) often is large enough to carry the entire HTTP request message, and many HTTP server response messages fit into a single IP packet.(IP packets are usually a few hundred bytes for Internet traffic and around 1500 bytes for local traffic.)

- The result is that small HTTP transactions may spend 50% or more of their time doing TCP setup.
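A rough model shows where the 50% figure comes from. It assumes the request rides in the client's ACK packet and the response fits in one packet, so the handshake costs one round trip and the request/response exchange costs one more. Transmission time and every other delay are ignored, and the function and parameter names are invented for this sketch.

```python
def setup_fraction(rtt_ms, server_time_ms=0.0):
    """Share of a small transaction's time spent on the SYN/SYN+ACK handshake."""
    handshake = rtt_ms                    # SYN out, SYN+ACK back: one round trip
    exchange = rtt_ms + server_time_ms    # request out (in the ACK), response back
    return handshake / (handshake + exchange)


print(setup_fraction(100))                       # negligible server time
print(setup_fraction(100, server_time_ms=50))    # some server processing
```

With negligible server processing time the handshake accounts for half of the total, whatever the round-trip time is.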

4.2.4 Delayed Acknowledgments

- Each TCP segment gets a sequence number and a data-integrity checksum. The receiver of each segment returns acknowledgment packets back to the sender when segments have been received intact. If a sender does not receive an acknowledgment within a specified window of time, the sender resends the data.

- Because acknowledgments are small, TCP allows them to piggyback on outgoing data packets heading in the same direction(delayed acknowledgment algorithm). Delayed acknowledgments hold outgoing acknowledgments in a buffer for a certain time, looking for an outgoing data packet on which to piggyback. If no outgoing data packet arrives in that time, the acknowledgment is sent in its own packet.

- But the request-reply behavior of HTTP reduces the chances that piggybacking can occur. There aren’t many packets heading in the reverse direction when you want them. So the delayed acknowledgment algorithms introduce significant delays.

4.2.5 TCP Slow Start

- The performance of TCP data transfer also depends on the age of the TCP connection. TCP connections tune themselves over time, initially limiting the maximum speed of the connection and increasing the speed as data is transmitted successfully. This tuning is called TCP slow start, and it is used to prevent sudden overloading and congestion of the Internet.

- Each time a packet is received successfully, the sender gets permission to send two more packets. If an HTTP transaction has a large amount of data to send, it cannot send all the packets at once. It must send one packet and wait for an acknowledgment; then it can send two packets, each of which must be acknowledged, which allows four packets, etc. This is called “opening the congestion window”.

- Because of this congestion-control feature, new connections are slower than tuned connections that have already exchanged a modest amount of data. Because tuned connections are faster, HTTP lets you reuse existing connections.
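The doubling described above can be turned into a small calculation of how many round trips a new connection needs to deliver a given number of packets. This is an idealized model (no packet loss, a window that starts at one packet and doubles every round trip); real TCP stacks differ in the details, and the function name is an assumption of this sketch.

```python
def round_trips_to_send(total_packets, initial_window=1):
    """Round trips needed to send total_packets when the window doubles each trip."""
    window = initial_window
    sent = 0
    trips = 0
    while sent < total_packets:
        sent += window      # send a full window, then wait for the acknowledgments
        window *= 2         # each acknowledged packet permits two more
        trips += 1
    return trips


for n in (1, 3, 7, 8, 100):
    print(n, round_trips_to_send(n))
```

On a connection that is already tuned, the same data could go out in fewer round trips, which is why reusing connections pays off.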

4.2.6 Nagle’s Algorithm and TCP_NODELAY

- TCP has a data stream interface that permits applications to stream data of any size to the TCP stack even a single byte at a time. Because each TCP segment carries at least 40 bytes of flags and headers, network performance can be degraded if TCP sends large numbers of packets containing small amounts of data.

- Nagle’s algorithm attempts to bundle up a large amount of TCP data before sending a packet, aiding network efficiency. The algorithm is described in RFC 896.

- Nagle’s algorithm discourages the sending of segments that are not full-size(a maximum-size packet is around 1500 bytes on a LAN, or a few hundred bytes across the Internet). It lets you send a non-full-size packet only if all other packets have been acknowledged. If other packets are still in flight, the partial data is buffered. This buffered data is sent only when pending packets are acknowledged or when the buffer has accumulated enough data to send a full packet.

- Nagle’s algorithm causes HTTP several performance problems.

- Small HTTP messages may not fill a packet, so they may be delayed waiting for additional data that will never arrive.

- Nagle’s algorithm interacts poorly with delayed acknowledgments. It will hold up the sending of data until an acknowledgment arrives, but the acknowledgment itself will be delayed 100-200 milliseconds by the delayed acknowledgment algorithm.

- These problems can become worse when using pipelined connections, because clients may have several messages to send to the same server and do not want delays.

- HTTP applications can disable Nagle’s algorithm to improve performance by setting the TCP_NODELAY parameter on their stacks. If you do this, you must ensure that you write large chunks of data to TCP so you don’t create a flurry of small packets.
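In Python, the option is set per socket with `setsockopt`. The sketch below is one plausible way to do it; the helper names are made up for this example. `send_message` builds the whole message first and hands it to TCP in one call, so that disabling Nagle's algorithm does not produce a flurry of small packets.

```python
import socket


def make_nodelay_socket():
    """Create a TCP socket with Nagle's algorithm disabled."""
    sock = socket.socket(socket.AF_INET, socket.SOCK_STREAM)
    sock.setsockopt(socket.IPPROTO_TCP, socket.TCP_NODELAY, 1)
    return sock


def send_message(sock, header_bytes, body_bytes):
    """Write headers and body as one large chunk instead of many small writes."""
    sock.sendall(header_bytes + body_bytes)
```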

4.2.7 TIME_WAIT Accumulation and Port Exhaustion

- TIME_WAIT port exhaustion is a performance problem that affects performance benchmarking but is uncommon in real deployments.

- When a TCP endpoint closes a TCP connection, it maintains in memory a control block recording the IP addresses and port numbers of the recently closed connection. This information is maintained for around twice the estimated maximum segment lifetime(2MSL), to make sure a new TCP connection with the same addresses and port numbers is not created during this time.

- This prevents any duplicate packets from the previous connection from being injected into a new connection that has the same addresses and port numbers. In practice, this algorithm prevents two connections with the same IP addresses and port numbers from being created, closed, and recreated within 2MSL.

- The 2MSL connection close delay normally is not a problem, but in benchmarking situations, it can be. It’s common that only one or a few test load-generation computers are connecting to a system under benchmark test, which limits the number of client IP addresses that connect to the server. The server typically is listening on HTTP’s default TCP port, 80. These circumstances limit the available combinations of connection values, at a time when port numbers are blocked from reuse by TIME_WAIT.

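- With one client and one web server, three of the four values that make up a TCP connection (client IP address, server IP address, and server port 80) are fixed. Only the client’s source port is free to change, so every new connection needs a new source port, and a port held in TIME_WAIT cannot be reused until 2MSL has passed.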
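When only the client's source port can vary between connections, the sustainable connection rate is bounded by the number of usable source ports divided by the time each one stays blocked. The numbers below (60,000 ports, 120 seconds for 2MSL) are illustrative assumptions, not measurements; actual values depend on the operating system and its configuration.

```python
def max_connection_rate(available_source_ports, time_wait_seconds):
    """Upper bound on new connections per second between one client and one server port."""
    return available_source_ports / time_wait_seconds


# Assumed values for illustration only.
print(max_connection_rate(60000, 120))
```

A benchmark that pushes past this rate from a single client machine may start failing for reasons unrelated to the server under test.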

4.3 HTTP Connection Handling

4.3.1 The Oft-Misunderstood Connection Header

- HTTP allows a chain of HTTP intermediaries between the client and the origin server (proxies, caches, etc.). HTTP messages are forwarded hop by hop from the client, through intermediary devices, to the origin server(or the reverse).

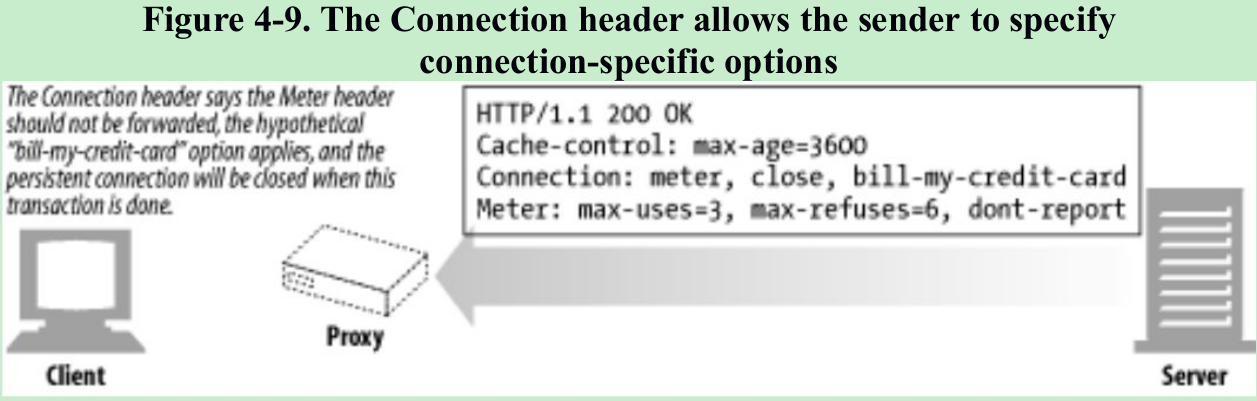

- In some cases, two adjacent HTTP applications may want to apply a set of options to their shared connection. The HTTP Connection header field has a comma-separated list of connection tokens that specify options for the connection that aren’t propagated to other connections. E.g., a connection that must be closed after sending the next message can be indicated by Connection: close.

- The Connection header can carry three types of tokens:

- HTTP header field names, listing headers relevant for this connection.

- Arbitrary token values, describing nonstandard options for this connection.

- The value close, indicating the persistent connection will be closed when done.

- If a connection token contains the name of an HTTP header field, that header field contains connection-specific information and must not be forwarded. Any header fields listed in the Connection header must be deleted before the message is forwarded.

- Placing a hop-by-hop header name in a Connection header is known as “protecting the header”, because the Connection header protects against accidental forwarding of the local header. Figure 4-9.

- When an HTTP application receives a message with a Connection header, the receiver parses and applies all options requested by the sender. It then deletes the Connection header and all headers listed in the Connection header before forwarding the message to the next hop. In addition, there are a few hop-by-hop headers that might not be listed as values of a Connection header, but must not be proxied. These include Proxy-Authenticate, Proxy-Connection, Transfer-Encoding, and Upgrade. For more about the Connection header, see Appendix C.
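The forwarding rule can be sketched as a small filter. This is an illustration only: headers are modeled as a list of (name, value) pairs, the function name `strip_hop_by_hop` is an assumption, and the `Meter` header and its value in the usage lines are hypothetical examples.

```python
# Hop-by-hop headers that must not be proxied even when Connection does not list them.
ALWAYS_HOP_BY_HOP = {"proxy-authenticate", "proxy-connection", "transfer-encoding", "upgrade"}


def strip_hop_by_hop(headers):
    """Return the headers a proxy may forward, given a list of (name, value) pairs."""
    listed = set()
    for name, value in headers:
        if name.lower() == "connection":
            for token in value.split(","):
                token = token.strip().lower()
                if token:
                    listed.add(token)
    # Drop the Connection header itself, everything it lists, and the fixed set.
    drop = listed | ALWAYS_HOP_BY_HOP | {"connection"}
    return [(name, value) for name, value in headers if name.lower() not in drop]


incoming = [
    ("Host", "www.joes-hardware.com"),
    ("Connection", "meter, close"),
    ("Meter", "max-uses=3"),          # hypothetical header protected by Connection
    ("Accept", "*/*"),
]
print(strip_hop_by_hop(incoming))
```

Header names are compared case-insensitively, since HTTP field names are not case sensitive.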

4.3.2 Serial Transaction Delays

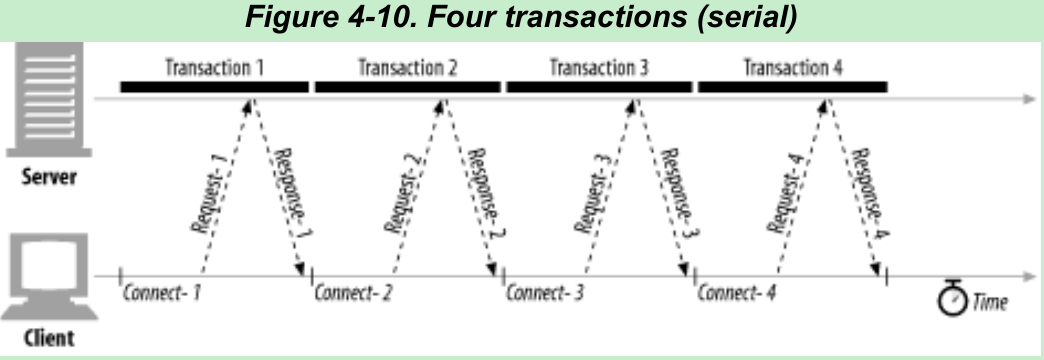

- TCP performance delays can add up if the connections are managed naively. Suppose you have a web page with three embedded images: the browser needs to issue four HTTP transactions to display this page (one for the top-level HTML and three for the embedded images). If each transaction requires a new connection, the connection and slow-start delays can add up (Figure 4-10).

- In addition to the real delay imposed by serial loading, there is also a perception of slowness when a single image is loading and nothing is happening on the rest of the page. Users prefer multiple images to load at the same time.

- Several techniques are available to improve HTTP connection performance:

- Parallel connections: Concurrent HTTP requests across multiple TCP connections

- Persistent connections: Reusing TCP connections to eliminate connect/close delays

- Pipelined connections: Concurrent HTTP requests across a shared TCP connection

- Multiplexed connections: Interleaving chunks of requests and responses.

4.4 Parallel Connections

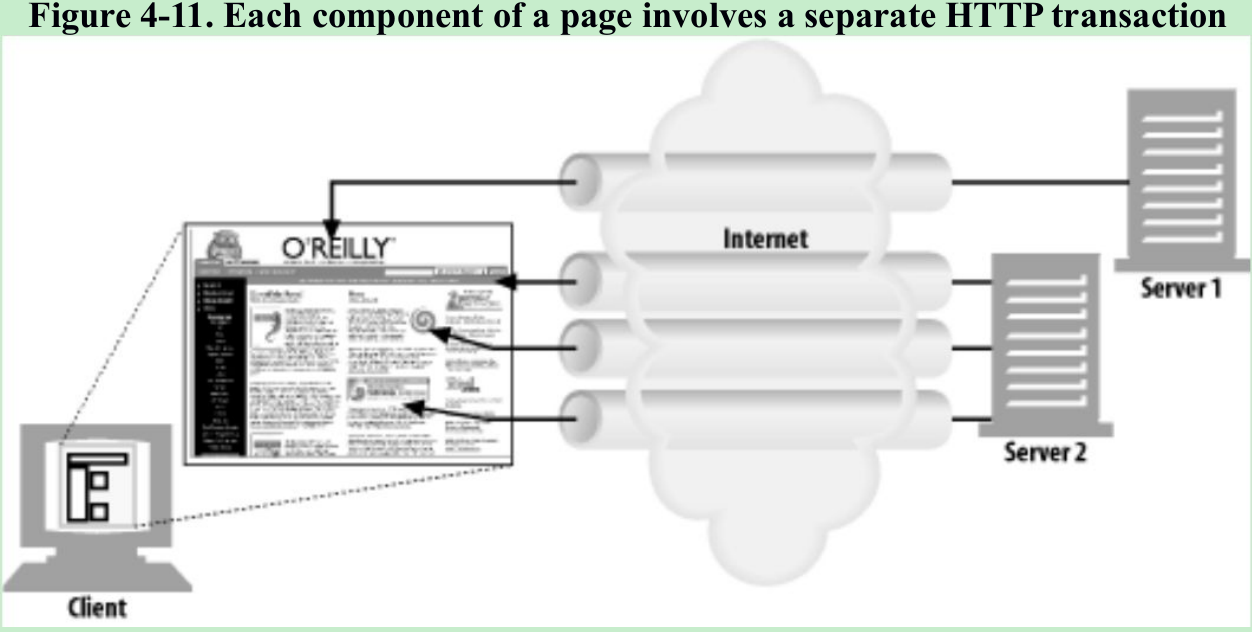

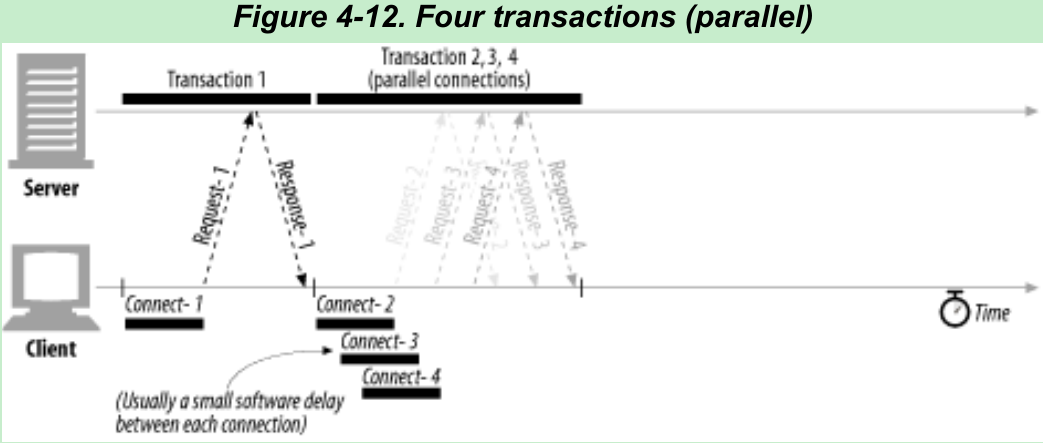

- Figure 4-11. HTTP allows clients to open multiple connections and perform multiple HTTP transactions in parallel. In this example, four embedded images are loaded in parallel, with each transaction getting its own TCP connection.

4.4.1 Parallel Connections May Make Pages Load Faster

- Composite pages consisting of embedded objects may load faster if they take advantage of the dead time and bandwidth limits of a single connection.

- The delays can be overlapped.

- If a single connection does not saturate the client’s Internet bandwidth, the unused bandwidth can be allocated to loading additional objects.

- Figure 4-12: Because the images are loaded in parallel, the connection delays are overlapped. There will be a small delay between each connection request due to software overheads, but the connection requests and transfer times are mostly overlapped.

4.4.2 Parallel Connections Are Not Always Faster

- Parallel connections are not always faster:

- When the client’s network bandwidth is scarce: a single HTTP transaction to a fast server could consume all of the available modem bandwidth. If multiple objects are loaded in parallel, each object will compete for limited bandwidth, so each object will load slower, yielding little or no performance advantage.

- A large number of open connections can consume a lot of memory and cause performance problems of their own. In practice, browsers use parallel connections but limit the total number of parallel connections to a small number (often four). Servers are free to close excessive connections from a particular client.

4.4.3 Parallel Connections May “Feel” Faster

- Even if parallel connections don’t actually speed up the page transfer, they often make users feel that the page loads faster, because they can see progress being made as multiple component objects appear onscreen in parallel.

4.5 Persistent Connections

- Site Locality: An application that initiates an HTTP request to a server likely will make more requests to that server in the near future.

- HTTP/1.1 allows HTTP devices to keep TCP connections open after transactions complete and to reuse the preexisting connections for future HTTP requests. TCP connections that are kept open after transactions complete are called persistent connections. Non-persistent connections are closed after each transaction. Persistent connections stay open across transactions, until either the client or the server decides to close them.

- By reusing an idle, persistent connection that is already open to the target server, you can avoid the slow connection setup and the slow-start congestion adaptation phase.

4.5.1 Persistent Versus Parallel Connections

- Parallel connections can speed up the transfer of composite pages. But they have disadvantages:

- Each transaction opens/closes a new connection, costing time and bandwidth.

- Each new connection has reduced performance because of TCP slow start.

- There is a limit on the number of open parallel connections.

- Persistent connections offer some advantages over parallel connections: they reduce the delay and overhead of connection establishment, keep the connections in a tuned state, and reduce the potential number of open connections.

- But persistent connections need to be managed with care, or you may end up accumulating a large number of idle connections, consuming local resources and resources on remote clients and servers.

- Persistent connections can be most effective when used in conjunction with parallel connections. Many web applications open a small number of parallel connections, each persistent. There are two types of persistent connections: the HTTP/1.0+ “keep-alive” connections and the HTTP/1.1 “persistent” connections.

4.5.8 HTTP/1.1 Persistent Connections

- HTTP/1.1 persistent connections are active by default. HTTP/1.1 assumes all connections are persistent unless otherwise indicated. HTTP/1.1 applications have to explicitly add a Connection: close header to a message to indicate that a connection should close after the transaction is complete.

- An HTTP/1.1 client assumes an HTTP/1.1 connection will remain open after a response, unless the response contains a Connection: close header. But clients and servers still can close idle connections at any time. Not sending Connection: close does not mean that the server promises to keep the connection open forever.
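The default can be written down as a small decision function. This is a sketch under simplifying assumptions: headers arrive as a dictionary, the function name is invented here, and HTTP/1.0 is treated as persistent only when it carries the Keep-Alive token used by the older keep-alive scheme. A result of True means only that the message does not ask for a close; either side may still close the connection later.

```python
def expects_persistent(http_version, headers):
    """Whether a message signals that the connection should stay open after it."""
    normalized = {name.lower(): value for name, value in headers.items()}
    tokens = {t.strip().lower() for t in normalized.get("connection", "").split(",")}
    if "close" in tokens:
        return False                   # an explicit close always wins
    if http_version == "HTTP/1.1":
        return True                    # persistent by default
    return "keep-alive" in tokens      # HTTP/1.0 must opt in


print(expects_persistent("HTTP/1.1", {}))
print(expects_persistent("HTTP/1.1", {"Connection": "close"}))
print(expects_persistent("HTTP/1.0", {}))
```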

4.5.9 Persistent Connection Restrictions and Rules

- After sending a Connection: close request header, the client can’t send more requests on that connection.

- If a client does not want to send another request on the connection, it should send a Connection: close request header in the final request.

- The connection can be kept persistent only if all messages on the connection have a correct, self-defined message length, i.e., the entity bodies must have correct Content-Lengths or be encoded with the chunked-transfer encoding.

- HTTP/1.1 proxies must manage persistent connections separately with clients and servers: each persistent connection applies to a single transport hop.

- HTTP/1.1 proxy servers should not establish persistent connections with an HTTP/1.0 client(because of the problems of older proxies forwarding Connection headers) unless they know the capabilities of the client. This is difficult in practice, and many vendors bend this rule.

- Regardless of the values of Connection headers, HTTP/1.1 devices may close the connection at any time, though servers should try not to close in the middle of transmitting a message and should always respond to at least one request before closing.

- HTTP/1.1 applications must be able to recover from asynchronous closes. Clients must be prepared to retry requests if the connection closes before they receive the entire response, unless the request could have side effects that accumulate if it is repeated.

- A single user client should maintain at most two persistent connections to any server or proxy, to prevent the server from being overloaded.

Because proxies may need more connections to a server to support concurrent users, a proxy should maintain at most 2N connections to any server or parent proxy, if there are N users trying to access the servers.

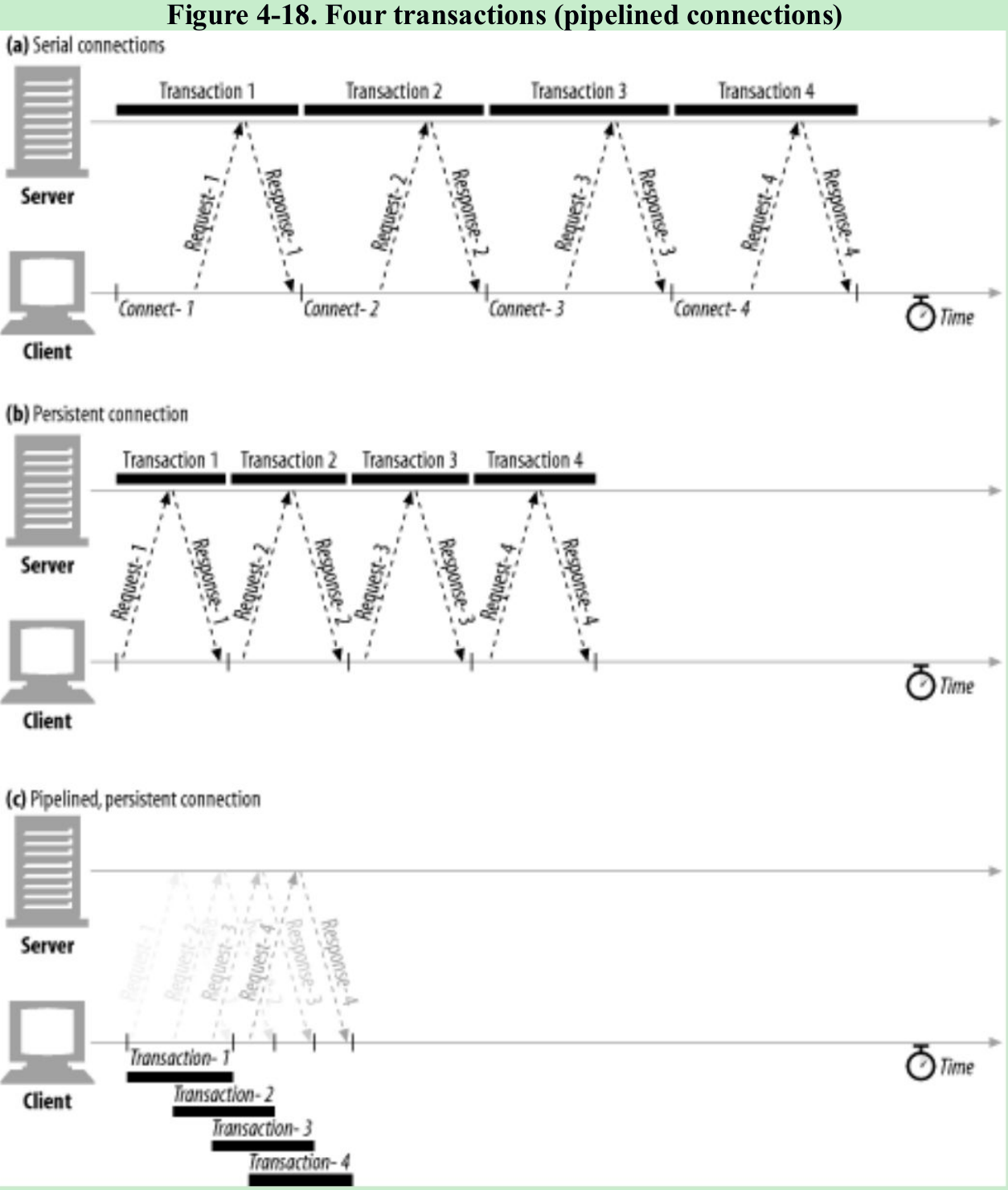

4.6 Pipelined Connections

- HTTP/1.1 permits request pipelining over persistent connections. Multiple requests can be queued before the responses arrive. While the first request is streaming across the network to a server, the second and third requests can get underway. This can improve performance in high-latency network conditions by reducing network round trips. Figure 4-18.

Restrictions for Pipelining

- HTTP clients should not pipeline until they are sure the connection is persistent.

- HTTP responses must be returned in the same order as the requests. HTTP messages are not tagged with sequence numbers, so there is no way to match responses with requests if the responses are received out of order.

- HTTP clients must be prepared for the connection to close at any time and be prepared to redo any pipelined requests that did not finish.

- HTTP clients should not pipeline requests that have side effects(such as POST). In general, pipelining prevents clients from knowing which of a series of pipelined requests were executed by the server. Because non-idempotent requests such as POST cannot safely be retried, you run the risk of some methods never being executed in error conditions.

4.7 The Mysteries of Connection Close

4.7.1 “At Will” Disconnection

- Any HTTP client, server, or proxy can close a TCP transport connection at any time. The connections normally are closed at the end of a message, but during error conditions, the connection may be closed in the middle of a header line or in other strange places.

- HTTP applications are free to close persistent connections after any period of time. E.g., after a persistent connection has been idle for a while, a server may decide to shut it down. But the server can never know for sure that the client wasn’t about to send data at the same time that the idle connection was being shut down by the server. If this happens, the client sees a connection error in the middle of writing its request message.

4.7.2 Content-Length and Truncation

- Each HTTP response should have an accurate Content-Length header to describe the size of the response body. When a client or proxy receives an HTTP response terminating in connection close, and the actual transferred entity length doesn’t match the Content-Length(or there is no Content-Length), the receiver should question the correctness of the length.

- If the receiver is a caching proxy, the receiver should not cache the response to minimize future compounding of a potential error. The proxy should forward the questionable message intact, without attempting to “correct” the Content-Length, to maintain semantic transparency.
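A receiver's check might look like the sketch below, which applies to a response that ended because the connection closed. The function names are assumptions made for this example; `content_length` is the raw header value, or None when the header is absent.

```python
def length_is_questionable(content_length, bytes_received):
    """True when the declared length is missing, malformed, or does not match the body."""
    if content_length is None:
        return True                      # nothing to verify the body against
    try:
        declared = int(content_length)
    except ValueError:
        return True                      # malformed header value
    return declared != bytes_received


def proxy_may_cache(content_length, bytes_received):
    """A caching proxy should not store a response whose length is in doubt."""
    return not length_is_questionable(content_length, bytes_received)


print(length_is_questionable("1024", 1024))
print(length_is_questionable("1024", 700))
print(proxy_may_cache(None, 700))
```

The sketch only decides whether to trust or cache the response. It does not rewrite the Content-Length, in keeping with the rule about forwarding the message intact.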

4.7.3 Connection Close Tolerance, Retries, and Idempotency

- Connections can close at any time, even in non-error conditions. HTTP applications have to handle unexpected closes. If a transport connection closes while the client is performing a transaction, the client should reopen the connection and retry one time, unless the transaction has side effects.

- The situation is worse for pipelined connections. The client can enqueue a large number of requests, but the origin server can close the connection, leaving numerous requests unprocessed and in need of rescheduling.

- Side effects are important. When a connection closes after some request data was sent but before the response is returned, the client cannot be sure how much of the transaction was invoked by the server. Some transactions(GET a static HTML page) can be repeated again and again without changing anything; other transactions(POST an order to an online book store) shouldn’t be repeated, or you may risk multiple orders.

- A transaction is idempotent if it yields the same result regardless of whether it is executed once or many times. Implementors can assume the GET, HEAD, PUT, DELETE, TRACE, and OPTIONS methods share this property. Clients shouldn’t pipeline nonidempotent requests(such as POST). Otherwise, a premature termination of the transport connection could lead to indeterminate results. If you want to send a nonidempotent request, you should wait for the response status for the previous request.

- Nonidempotent methods or sequences must not be retried automatically. E.g., most browsers will offer a dialog box when reloading a cached POST response, asking if you want to post the transaction again.
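The retry rule can be expressed as a small check. This is a minimal sketch: the set of methods is the one listed above, while the function name and the `retries_so_far` and `max_retries` parameters are assumptions for this example, with the default of one retry following the "retry one time" guidance.

```python
IDEMPOTENT_METHODS = {"GET", "HEAD", "PUT", "DELETE", "TRACE", "OPTIONS"}


def may_retry_automatically(method, retries_so_far, max_retries=1):
    """Whether a client may resend a request after an unexpected connection close."""
    if method.upper() not in IDEMPOTENT_METHODS:
        return False                     # e.g. POST: ask the user instead
    return retries_so_far < max_retries


print(may_retry_automatically("GET", 0))
print(may_retry_automatically("GET", 1))
print(may_retry_automatically("POST", 0))
```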

4.7.4 Graceful Connection Close

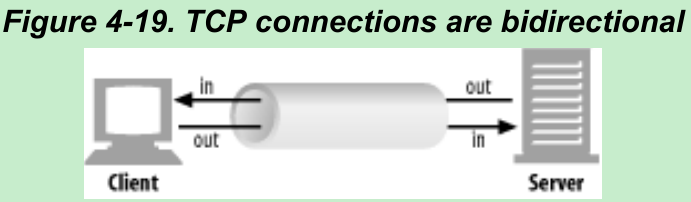

- TCP connections are bidirectional, as shown in Figure 4-19. Each side of a TCP connection has an input queue and an output queue, for data being read or written.

4.7.4.1 Full and half closes

- An application can close either or both of the TCP input and output channels.

- A close() call closes both the input and output channels of a TCP connection. This is called a “full close” and is depicted in Figure 4-20a.

- A shutdown() call closes either the input or output channel individually. This is called a “half close” and is depicted in Figure 4-20b.

4.7.4.2 TCP close and reset errors

- In general, closing the output channel of your connection is always safe. The peer on the other side of the connection will be notified that you closed the connection by getting an end-of-stream notification once all the data has been read from its buffer.

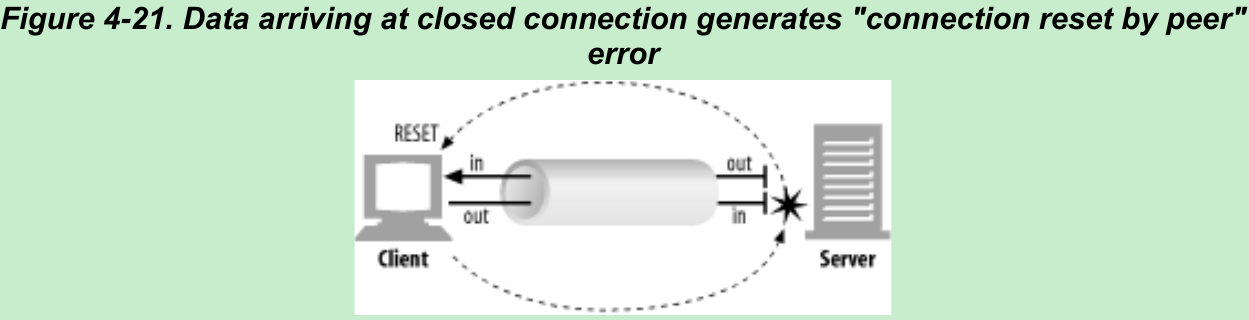

- Closing the input channel of your connection is riskier, unless you know the other side doesn’t plan to send any more data. If the other side sends data to your closed input channel, the operating system will issue a TCP “connection reset by peer” message back to the other side’s machine, as shown in Figure 4-21. Most operating systems treat this as an error and erase any buffered data the other side has not read yet. This is very bad for pipelined connections.

- Say you have sent 10 pipelined requests on a persistent connection, and the responses already have arrived and are sitting in your operating system’s buffer(but the application hasn’t read them yet). Now say you send request #11, but the server decides you’ve used this connection long enough, and closes it. Your request #11 will arrive at a closed connection and will reflect a reset back to you. This reset will erase your input buffers.

- When you finally get to reading data, you will get a connection reset by peer error, and the buffered, unread response data will be lost, even though much of it successfully arrived at your machine.

4.7.4.3 Graceful close

- The HTTP specification indicates that when clients or servers want to close a connection unexpectedly, they should “issue a graceful close on the transport connection”, but it doesn’t describe how to do that.

- In general, applications implementing graceful closes will first close their output channels and then wait for the peer on the other side of the connection to close its output channels. When both sides are done telling each other they won’t be sending any more data, the connection can be closed fully, with no risk of reset.

- But there is no guarantee that the peer implements or checks for half closes. So, applications wanting to close gracefully should half close their output channels and periodically check the status of their input channels(looking for data or for the end of the stream). If the input channel isn’t closed by the peer within some timeout period, the application may force connection close to save resources.
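One way to implement this pattern with Python sockets is sketched below. It is an illustration under stated assumptions, not something the HTTP specification prescribes: the function name and the default timeout are made up, and the timeout applies to each read rather than to the whole wait.

```python
import socket


def graceful_close(sock, timeout=2.0):
    """Half close the output channel, drain input until the peer closes, then full close.

    Returns whatever data the peer sent before closing its own output channel.
    """
    drained = b""
    try:
        sock.shutdown(socket.SHUT_WR)     # half close: we will send nothing more
        sock.settimeout(timeout)          # do not wait forever for the peer
        while True:
            chunk = sock.recv(4096)
            if not chunk:                 # end of stream: the peer closed its output
                break
            drained += chunk
    except OSError:
        pass                              # timeout or reset: stop waiting, force the close
    finally:
        sock.close()                      # full close
    return drained
```

Because the input channel is drained before the full close, data the peer had already sent is read instead of being discarded by a reset.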

4.8 For More Information
