Stanford UFLDL Tutorial Exercise: Sparse Coding


Exercise:Sparse Coding

Contents

- 1 Sparse Coding

- 1.1 Dependencies

- 1.2 Step 0: Initialization

- 1.3 Step 1: Sample patches

- 1.4 Step 2: Implement and check sparse coding cost functions

- 1.5 Step 3: Iterative optimization

Sparse Coding

In this exercise, you will implement sparse coding and topographic sparse coding on black-and-white natural images.

In the file sparse_coding_exercise.zip we have provided some starter code. You should write your code at the places indicated "YOUR CODE HERE" in the files.

For this exercise, you will need to modify sparseCodingWeightCost.m, sparseCodingFeatureCost.m and sparseCodingExercise.m.

Dependencies

You will need:

- computeNumericalGradient.m from Exercise:Sparse Autoencoder

- display_network.m from Exercise:Sparse Autoencoder

If you have not completed the exercise listed above, we strongly suggest you complete it first.

Step 0: Initialization

In this step, we initialize some parameters used for the exercise.

Step 1: Sample patches

In this step, we sample some patches from the IMAGES.mat dataset comprising 10 black-and-white pre-whitened natural images.
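The sampling step can be pictured with a small sketch. The exercise's starter code is MATLAB and already does this for you; the Python below is only an illustration, and the function name `sample_patches`, its parameters, and the toy images are all hypothetical stand-ins rather than anything from the starter code or from IMAGES.mat.

```python
import random

def sample_patches(images, patch_dim, num_patches, seed=0):
    """Draw square patches at random positions from a list of 2-D images.

    images: list of images, each a list of rows (lists of floats).
    Returns a list of flattened (row-major) patches of length patch_dim ** 2.
    """
    rng = random.Random(seed)
    patches = []
    for _ in range(num_patches):
        image = rng.choice(images)
        rows, cols = len(image), len(image[0])
        # Pick the top-left corner so the patch stays inside the image.
        top = rng.randint(0, rows - patch_dim)
        left = rng.randint(0, cols - patch_dim)
        patch = [image[r][c]
                 for r in range(top, top + patch_dim)
                 for c in range(left, left + patch_dim)]
        patches.append(patch)
    return patches

# Toy stand-in for the image set: two 6x6 "images" with made-up values.
toy_images = [[[float(100 * k + 10 * r + c) for c in range(6)]
               for r in range(6)]
              for k in range(2)]
patches = sample_patches(toy_images, patch_dim=3, num_patches=5)
```

Each patch is flattened into a single vector, which is the form in which patches are typically stacked into a data matrix before optimization. MATLAB flattens column by column rather than row by row, so the element order in the real exercise will differ from this sketch.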

Step 2: Implement and check sparse coding cost functions

In this step, you should implement the two sparse coding cost functions:

- sparseCodingWeightCost in sparseCodingWeightCost.m, which is used for optimizing the weight cost given the features

- sparseCodingFeatureCost in sparseCodingFeatureCost.m, which is used for optimizing the feature cost given the weights

Each of these functions should compute the appropriate cost and gradient. You may wish to implement the non-topographic version of sparseCodingFeatureCost first, ignoring the grouping matrix and assuming that none of the features are grouped. You can then extend this to the topographic version later. Alternatively, you may implement the topographic version directly - using the non-topographic version will then involve setting the grouping matrix to the identity matrix.
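To see why an identity grouping matrix recovers the non-topographic case, here is a minimal Python sketch of a grouped, smoothed sparsity penalty for a single feature vector. It assumes the penalty sums, over groups, the square root of the squared member features plus epsilon; the exact form and the layout of the grouping matrix in the starter code may differ, and the names `sparsity_penalty`, `sparsity_penalty_grad`, `V`, and the toy values are hypothetical.

```python
import math

def sparsity_penalty(s, V, epsilon):
    """Smoothed group-sparsity penalty for one feature vector s.

    V[g][j] is 1 if feature j belongs to group g, else 0 (assumed layout).
    Each group contributes sqrt(sum of squared member features + epsilon).
    """
    total = 0.0
    for group in V:
        group_energy = sum(v * sj * sj for v, sj in zip(group, s))
        total += math.sqrt(group_energy + epsilon)
    return total

def sparsity_penalty_grad(s, V, epsilon):
    """Gradient of sparsity_penalty with respect to each entry of s."""
    norms = [math.sqrt(sum(v * sj * sj for v, sj in zip(group, s)) + epsilon)
             for group in V]
    return [s[j] * sum(group[j] / norm for group, norm in zip(V, norms))
            for j in range(len(s))]

s = [0.5, -1.0, 0.0, 2.0]
identity = [[1.0 if i == j else 0.0 for j in range(4)] for i in range(4)]
overlapping = [[1, 1, 0, 0], [0, 1, 1, 0], [0, 0, 1, 1], [1, 0, 0, 1]]

# With the identity grouping, every feature is its own group.
ungrouped = sparsity_penalty(s, identity, 1e-2)
elementwise = sum(math.sqrt(sj * sj + 1e-2) for sj in s)

# With overlapping groups, neighbouring features share a penalty term.
grouped = sparsity_penalty(s, overlapping, 1e-2)
```

Here `ungrouped` and `elementwise` agree, which is the sense in which the non-topographic cost is a special case of the topographic one.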

Once you have implemented these functions, you should check the gradients numerically.
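As a reminder of what a numerical gradient check does, the following Python sketch compares a central-difference estimate with a known analytic gradient. It is not the computeNumericalGradient.m file from the earlier exercise; the function names, the step size `h`, and the toy cost are illustrative assumptions.

```python
def numerical_gradient(f, theta, h=1e-4):
    """Central-difference estimate of the gradient of f at theta (list of floats)."""
    grad = []
    for i in range(len(theta)):
        plus = list(theta)
        minus = list(theta)
        plus[i] += h
        minus[i] -= h
        grad.append((f(plus) - f(minus)) / (2.0 * h))
    return grad

def relative_difference(a, b):
    """Norm of (a - b) divided by norm of (a + b); small values mean agreement."""
    num = sum((x - y) ** 2 for x, y in zip(a, b)) ** 0.5
    den = sum((x + y) ** 2 for x, y in zip(a, b)) ** 0.5
    return num / den

# Toy cost with a known gradient: J(theta) = sum(theta_i^2), dJ/dtheta_i = 2 * theta_i.
def toy_cost(theta):
    return sum(t * t for t in theta)

theta = [1.0, -2.0, 0.5]
analytic = [2.0 * t for t in theta]
numeric = numerical_gradient(toy_cost, theta)
diff = relative_difference(numeric, analytic)
```

In the exercise, the analytic gradient would come from your cost function and `diff` should be very small if the implementation is correct.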

Implementation tip - gradient checking the feature cost. One particular point to note is that when checking the gradient for the feature cost, epsilon should be set to a larger value, for instance 1e-2 (as has been done for you in the checking code provided), to ensure that checking the gradient numerically makes sense. This is necessary because as epsilon becomes smaller, the function sqrt(x + epsilon) becomes "sharper" and more "pointed", making the numerical gradient computed near 0 less and less accurate. To see this, consider what would happen if the numerical gradient was computed by using a point with x less than 0 and a point with x greater than 0 - the computed numerical slope would be wildly inaccurate.
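The effect is easy to reproduce for a single feature value. The sketch below assumes the smoothed penalty for one feature s has the form sqrt(s^2 + epsilon), and uses a hypothetical finite-difference step of 1e-4; it evaluates the check at a point so close to 0 that the two sample points straddle it.

```python
import math

def smoothed_abs(s, epsilon):
    """Smooth approximation to |s| (assumed form of the per-feature penalty)."""
    return math.sqrt(s * s + epsilon)

def smoothed_abs_grad(s, epsilon):
    """Analytic derivative of smoothed_abs with respect to s."""
    return s / math.sqrt(s * s + epsilon)

def gradient_check_error(s, epsilon, h=1e-4):
    """Absolute gap between the central-difference and analytic derivatives."""
    numeric = (smoothed_abs(s + h, epsilon) - smoothed_abs(s - h, epsilon)) / (2.0 * h)
    return abs(numeric - smoothed_abs_grad(s, epsilon))

s0 = 5e-5  # chosen so that s0 - h < 0 < s0 + h
error_tiny_epsilon = gradient_check_error(s0, epsilon=1e-12)
error_large_epsilon = gradient_check_error(s0, epsilon=1e-2)
print(error_tiny_epsilon, error_large_epsilon)
```

With the tiny epsilon the function is nearly a "V" at 0, so the finite-difference slope across the kink disagrees badly with the analytic derivative; with epsilon = 1e-2 the function is smooth at that scale and the two agree closely.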

Step 3: Iterative optimization

In this step, you will iteratively optimize for the weights and features to learn a basis for the data, as described in the section on sparse coding. Mini-batching and initialization of the features s have already been done for you. However, you still need to fill in the analytic solution to the optimization problem with respect to the weight matrix, given the feature matrix.
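For orientation, here is how such an analytic solution can be derived under one assumed form of the objective. The assumption is that, with the features $s$ fixed, the weight cost is $\lVert As - x\rVert_F^2 + \gamma\lVert A\rVert_F^2$, where $x$ holds the patches as columns; the starter code may scale the terms differently, so treat this as a sketch and match it against your own cost function.

```latex
\begin{aligned}
J(A) &= \lVert A s - x \rVert_F^2 + \gamma \lVert A \rVert_F^2 \\
\nabla_A J(A) &= 2 (A s - x) s^{\top} + 2 \gamma A = 0 \\
A \left( s s^{\top} + \gamma I \right) &= x s^{\top} \\
A &= x s^{\top} \left( s s^{\top} + \gamma I \right)^{-1}
\end{aligned}
```

If the reconstruction term in your cost is averaged over the $m$ patches in the batch, the same steps give $A = x s^{\top} \left( s s^{\top} + m \gamma I \right)^{-1}$ instead.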

Once that is done, you should check that your solution is correct using the given checking code, which checks that the gradient at the point determined by your analytic solution is close to 0. Once your solution has been verified, comment out the checking code, and run the iterative optimization code. 200 iterations should take less than 45 minutes to run, and by 100 iterations you should be able to see bases that look like edges, similar to those you learned in the sparse autoencoder exercise.
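The idea behind that check can be sketched on a toy problem. The Python below assumes the weight cost is ||A s - x||^2 + gamma ||A||^2 (unaveraged), for which setting the gradient to zero gives A = x s^T (s s^T + gamma I)^-1; the provided checking code and your own cost may be scaled differently. All names and data values here are made up, and the 2x2 inverse limits the sketch to two features.

```python
def matmul(A, B):
    """Product of two matrices stored as lists of rows."""
    return [[sum(a * b for a, b in zip(row, col)) for col in zip(*B)] for row in A]

def transpose(A):
    return [list(col) for col in zip(*A)]

def inverse_2x2(M):
    (a, b), (c, d) = M
    det = a * d - b * c
    return [[d / det, -b / det], [-c / det, a / det]]

def solve_weights(x, s, gamma):
    """A = x s^T (s s^T + gamma I)^-1 for a two-feature toy problem."""
    sst = matmul(s, transpose(s))
    for i in range(2):
        sst[i][i] += gamma
    return matmul(matmul(x, transpose(s)), inverse_2x2(sst))

def weight_gradient(A, x, s, gamma):
    """Gradient of ||A s - x||^2 + gamma ||A||^2 with respect to A."""
    residual = [[p - q for p, q in zip(r1, r2)]
                for r1, r2 in zip(matmul(A, s), x)]
    data_term = matmul(residual, transpose(s))
    return [[2.0 * d + 2.0 * gamma * a for d, a in zip(drow, arow)]
            for drow, arow in zip(data_term, A)]

# Made-up toy data: 3-pixel patches, 2 features, 4 patches (one per column).
x = [[1.0, 0.5, -0.2, 0.3],
     [0.0, 1.5, 0.7, -1.0],
     [2.0, -0.5, 0.1, 0.4]]
s = [[0.9, 0.1, -0.3, 0.0],
     [0.2, 1.1, 0.5, -0.8]]
gamma = 1e-2

A = solve_weights(x, s, gamma)
grad = weight_gradient(A, x, s, gamma)
max_abs_grad = max(abs(g) for row in grad for g in row)
```

If the closed form matches the cost, `max_abs_grad` is zero up to floating-point rounding. A noticeably larger value usually means the solution and the cost disagree on a scaling factor.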

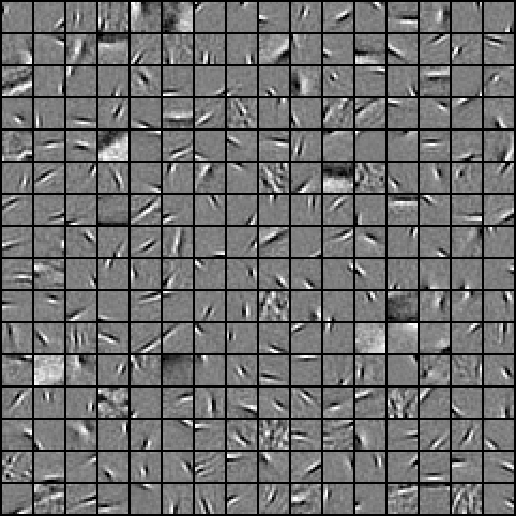

For the non-topographic case, these features will not be "ordered", and will look something like the following:

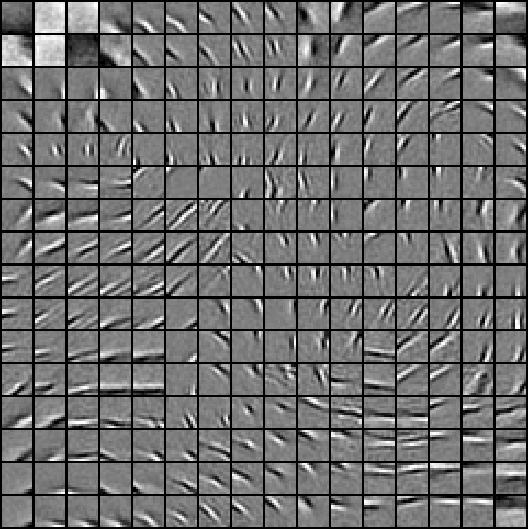

For the topographic case, the features will be "ordered topographically", and will look something like the following:

from: http://ufldl.stanford.edu/wiki/index.php/Exercise:Sparse_Coding
