Stanford UFLDL Tutorial Exercise: Independent Component Analysis

Source: Internet · Editor: 程序博客网 · Time: 2024/06/16 03:21

Exercise:Independent Component Analysis

Contents

- 1 Independent Component Analysis

- 1.1 Dependencies

- 1.2 Step 0: Initialization

- 1.3 Step 1: Sample patches

- 1.4 Step 2: ZCA whiten patches

- 1.5 Step 3: Implement and check ICA cost functions

- 1.5.1 Step 4: Optimization

- 1.6 Appendix

- 1.6.1 Backtracking line search

Independent Component Analysis

In this exercise, you will implement Independent Component Analysis on color images from the STL-10 dataset.

In the file independent_component_analysis_exercise.zip we have provided some starter code. You should write your code at the places indicated "YOUR CODE HERE" in the files.

For this exercise, you will need to modify OrthonormalICACost.m and ICAExercise.m.

Dependencies

You will need:

- computeNumericalGradient.m from Exercise:Sparse Autoencoder

- displayColorNetwork.m from Exercise:Learning color features with Sparse Autoencoders

The following additional file is also required for this exercise:

- Sampled 8x8 patches from the STL-10 dataset (stl10_patches_100k.zip)

If you have not completed the exercises listed above, we strongly suggest you complete them first.

Step 0: Initialization

In this step, we initialize some parameters used for the exercise.

Step 1: Sample patches

In this step, we load and use a portion of the 8x8 patches from the STL-10 dataset (which you first saw in the exercise on linear decoders).

Step 2: ZCA whiten patches

In this step, we ZCA whiten the patches as required by orthonormal ICA.
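As a rough illustration of the whitening step, here is a minimal NumPy sketch (the exercise itself uses MATLAB). The function name `zca_whiten`, the one-example-per-column layout, and the `epsilon` default of 0 are assumptions made for this example and are not taken from the starter code.

```python
import numpy as np

def zca_whiten(patches, epsilon=0.0):
    """ZCA-whiten data stored one example per column.

    patches: array of shape (n_features, n_examples).
    epsilon: value added to the eigenvalues before inversion
             (hypothetical default; 0 means no regularization).
    Returns the whitened data, the whitening matrix, and the mean patch.
    """
    # Subtract the mean patch so every feature has zero mean.
    mean = patches.mean(axis=1, keepdims=True)
    centered = patches - mean
    # Covariance of the centered data.
    sigma = centered @ centered.T / centered.shape[1]
    # sigma is symmetric, so eigh returns real eigenvalues and orthonormal eigenvectors.
    eigvals, eigvecs = np.linalg.eigh(sigma)
    # Rotate, rescale each direction to unit variance, rotate back.
    zca_matrix = eigvecs @ np.diag(1.0 / np.sqrt(eigvals + epsilon)) @ eigvecs.T
    return zca_matrix @ centered, zca_matrix, mean

# Synthetic correlated data standing in for image patches.
rng = np.random.default_rng(0)
mixing = rng.normal(size=(6, 6))
data = mixing @ rng.normal(size=(6, 5000))
white, zca_matrix, mean = zca_whiten(data)
cov = white @ white.T / white.shape[1]
```

With `epsilon` set to 0 and a full-rank covariance, the covariance of the whitened data should be the identity matrix up to rounding error.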

Step 3: Implement and check ICA cost functions

In this step, you should implement the ICA cost function: orthonormalICACost in orthonormalICACost.m, which computes the cost and gradient for the orthonormal ICA objective. Note that the orthonormality constraint is not enforced in the cost function. It will be enforced by a projection in the gradient descent step, which you will have to complete in step 4.
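The page does not spell out the objective, so the following NumPy sketch assumes a commonly used form: a smoothed L1 penalty on the features, sqrt((Wx)^2 + epsilon), averaged over the examples. The function name `orthonormal_ica_cost`, the averaging, and the `epsilon` default are assumptions for illustration; the starter code may scale or define the cost differently.

```python
import numpy as np

def orthonormal_ica_cost(weights, patches, epsilon=1e-6):
    """Smoothed L1 cost of the features W x and its gradient with respect to W.

    weights: array of shape (n_features, n_inputs).
    patches: whitened data of shape (n_inputs, n_examples).
    epsilon: smoothing constant (hypothetical default) that keeps the
             penalty differentiable at zero.
    """
    n_examples = patches.shape[1]
    projected = weights @ patches                  # features, one column per example
    smoothed = np.sqrt(projected ** 2 + epsilon)   # smooth stand-in for |W x|
    cost = smoothed.sum() / n_examples
    # d/dW of sum(sqrt((W x)^2 + eps)) is ((W x) / sqrt((W x)^2 + eps)) x^T.
    grad = (projected / smoothed) @ patches.T / n_examples
    return cost, grad

# Small random problem, only to exercise the function.
rng = np.random.default_rng(0)
weights = rng.normal(size=(3, 5))
patches = rng.normal(size=(5, 20))
cost, grad = orthonormal_ica_cost(weights, patches)
```

As in the text, nothing in this function enforces orthonormality; that is left to the projection in the descent step.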

When you have implemented the cost function, you should check the gradients numerically.
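A numerical check of this kind can be sketched with central differences. The NumPy function below is a hypothetical stand-in for computeNumericalGradient.m, not a copy of it, and the test function h(x) = x1^2 + 3*x1*x2 is chosen only because its gradient is easy to write down by hand.

```python
import numpy as np

def compute_numerical_gradient(cost_fn, theta, step=1e-4):
    """Approximate the gradient of cost_fn at theta with central differences."""
    theta = np.asarray(theta, dtype=float)
    num_grad = np.zeros_like(theta)
    perturb = np.zeros_like(theta)
    for i in range(theta.size):
        # Perturb one coordinate at a time in both directions.
        perturb.flat[i] = step
        num_grad.flat[i] = (cost_fn(theta + perturb) - cost_fn(theta - perturb)) / (2 * step)
        perturb.flat[i] = 0.0
    return num_grad

def simple_cost(x):
    # h(x) = x1^2 + 3 * x1 * x2
    return x[0] ** 2 + 3 * x[0] * x[1]

def simple_grad(x):
    # Analytic gradient of h: [2 * x1 + 3 * x2, 3 * x1]
    return np.array([2 * x[0] + 3 * x[1], 3 * x[0]])

point = np.array([4.0, 10.0])
numeric = compute_numerical_gradient(simple_cost, point)
analytic = simple_grad(point)
# Relative difference; it should be very small when the analytic gradient is right.
diff = np.linalg.norm(numeric - analytic) / np.linalg.norm(numeric + analytic)
```

To check an ICA cost function the same way, pass a function of the flattened weight matrix that returns only the cost, and compare the result against the flattened analytic gradient.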

Hint - if you are having difficulties deriving the gradients, you may wish to consult the page on deriving gradients using the backpropagation idea.

Step 4: Optimization

In step 4, you will optimize for the orthonormal ICA objective using gradient descent with backtracking line search. The code for the line search has already been provided for you; for more details, you may wish to consult the appendix of this exercise. The orthonormality constraint should be enforced with a projection, which you should fill in.
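The page leaves the projection for you to fill in. One common choice, assumed here and not confirmed by the starter code, is the symmetric orthonormalization W ← (W Wᵀ)^(-1/2) W. The NumPy sketch below uses the hypothetical name `project_orthonormal` and assumes W has full row rank (no more features than input dimensions).

```python
import numpy as np

def project_orthonormal(weights):
    """Return (W W^T)^(-1/2) W, a matrix whose rows are orthonormal.

    Assumes weights has full row rank, so W W^T is positive definite.
    """
    gram = weights @ weights.T
    # gram is symmetric positive definite, so its inverse square root
    # can be built from the eigendecomposition.
    eigvals, eigvecs = np.linalg.eigh(gram)
    inv_sqrt = eigvecs @ np.diag(1.0 / np.sqrt(eigvals)) @ eigvecs.T
    return inv_sqrt @ weights

rng = np.random.default_rng(0)
weights = rng.normal(size=(4, 6))
projected = project_orthonormal(weights)
# After projection, W W^T should be the identity up to rounding error.
gram_after = projected @ projected.T
```

In a descent loop, this projection would be applied after each gradient step so that every iterate satisfies the constraint.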

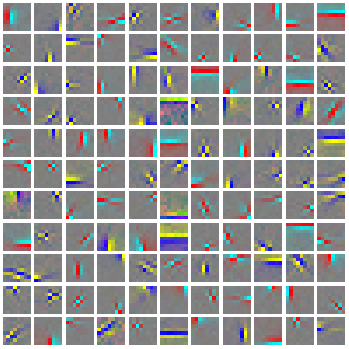

Once you have filled in the code for the projection, check that it is correct by using the verification code provided. Once you have verified that your projection is correct, comment out the verification code and run the optimization. 1000 iterations of gradient descent should take less than 15 minutes, and produce a basis which looks like the following:

It is comparatively difficult to optimize for the objective while enforcing the orthonormality constraint using gradient descent, and convergence can be slow. Hence, in situations where an orthonormal basis is not required, other faster methods of learning bases (such as sparse coding) may be preferable.

Appendix

Backtracking line search

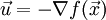

The backtracking line search used in the exercise is based on the one in Convex Optimization by Boyd and Vandenberghe. In the backtracking line search, given a descent direction $\vec{u}$ (in this exercise we use $\vec{u} = -\nabla f(\vec{x})$), we want to find a good step size $t$ that gives us a steep descent. The general idea is to use a linear approximation (the first-order Taylor approximation) to the function $f$ at the current point $\vec{x}$, and to search for a step size $t$ such that we can decrease the function's value by more than $\alpha$ times the decrease predicted by the linear approximation ($\alpha \in (0, 0.5)$), that is, $f(\vec{x} + t\vec{u}) \le f(\vec{x}) + \alpha t \nabla f(\vec{x})^T \vec{u}$. For more details, you may wish to consult the book.

However, it is not necessary to use the backtracking line search here. Gradient descent with a small step size, or backtracking to a step size so that the objective decreases is sufficient for this exercise.
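The search described in this appendix can be sketched in NumPy as follows. This is an illustration under stated assumptions, not the code shipped with the exercise: the function name, the shrink factor `beta`, the starting step `t`, and the iteration cap `max_shrinks` are all hypothetical choices.

```python
import numpy as np

def backtracking_line_search(cost_fn, x, grad, direction,
                             alpha=0.3, beta=0.5, t=1.0, max_shrinks=50):
    """Shrink the step size t until the sufficient-decrease condition holds.

    Accepts t once f(x + t*u) <= f(x) + alpha * t * grad^T u, i.e. once the
    actual decrease is at least alpha times the decrease predicted by the
    linear approximation. alpha, beta, t and max_shrinks are illustrative
    defaults.
    """
    cost = cost_fn(x)
    # Directional derivative; negative when direction is a descent direction.
    slope = float(np.sum(grad * direction))
    for _ in range(max_shrinks):
        if cost_fn(x + t * direction) <= cost + alpha * t * slope:
            break
        t *= beta
    return t

def quadratic_cost(x):
    # f(x) = 0.5 * (x1^2 + 10 * x2^2), a poorly scaled bowl
    return 0.5 * (x[0] ** 2 + 10.0 * x[1] ** 2)

def quadratic_grad(x):
    return np.array([x[0], 10.0 * x[1]])

x = np.array([1.0, 1.0])
grad = quadratic_grad(x)
direction = -grad  # steepest descent direction
t = backtracking_line_search(quadratic_cost, x, grad, direction)
new_x = x + t * direction
```

For orthonormal ICA, the candidate point would also be projected back onto the constraint set before its cost is evaluated, which this unconstrained sketch leaves out.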

from: http://ufldl.stanford.edu/wiki/index.php/Exercise:Independent_Component_Analysis
